Hello all!

I’m encountering some issues with file transfer speed.

Setup:

Xeon E-2244G

128GB RAM

M1015 HBA

8x Seagate Exos X16 (16TB CMR drives) (RAID-Z2: 5 data + 2 parity, 1 hot spare)

Intel X520 10GbE network

TrueNAS SCALE Dragonfish 24.04.2

Desktop:

Ryzen 9 5950X

64GB RAM

Intel X520

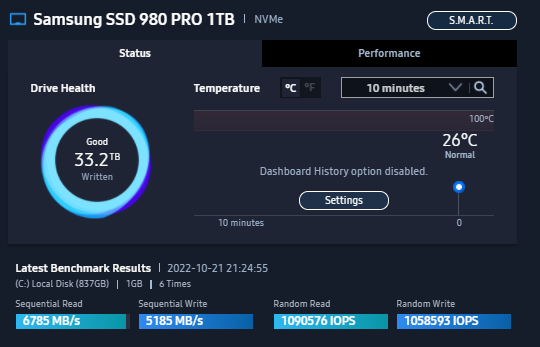

Samsung 980 Pro NVMe (PCIe 4.0)

Windows 11 24H2

Problem?

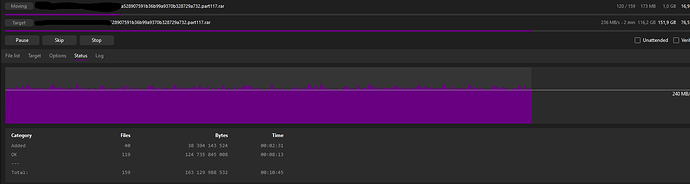

Transferring 150GB at 250-300MB/s is not what it used to be…

Reads and writes from that SMB share are capped at about 300MB/s (which is suspiciously close to SATA II speeds).
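For scale, here is the rough arithmetic behind "not what it used to be". This is a sketch assuming decimal units (1 MB = 10^6 bytes, 1 GB = 10^9 bytes) and ignoring Ethernet, TCP and SMB overhead, so the practical ceiling on a 10GbE link sits somewhat below the 1250 MB/s it computes.

```python
# Rough link-utilization arithmetic for the observed SMB speeds.
# Assumes decimal units and ignores protocol overhead (Ethernet/TCP/SMB),
# so the real-world ceiling is a bit lower than the nominal line rate.

LINK_GBIT_PER_S = 10  # 10GbE nominal line rate


def mb_per_s_to_gbit_per_s(mb_per_s: float) -> float:
    """Convert megabytes per second to gigabits per second (decimal units)."""
    return mb_per_s * 8 / 1000


def transfer_seconds(size_gb: float, mb_per_s: float) -> float:
    """Time needed to move size_gb gigabytes at a constant mb_per_s."""
    return size_gb * 1000 / mb_per_s


line_rate_mb_per_s = LINK_GBIT_PER_S * 1000 / 8  # 1250 MB/s theoretical

for observed in (250, 300):
    gbit = mb_per_s_to_gbit_per_s(observed)
    share = observed / line_rate_mb_per_s
    print(f"{observed} MB/s = {gbit:.1f} Gbit/s = {share:.0%} of 10GbE")

print(f"150 GB at 300 MB/s: {transfer_seconds(150, 300) / 60:.1f} min")
print(f"150 GB at 1250 MB/s: {transfer_seconds(150, line_rate_mb_per_s) / 60:.1f} min")
```

Running it shows that 250-300 MB/s is only about 20-24% of the nominal line rate, and that the 150GB copy takes roughly 8.3 minutes instead of about 2.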

When did this happen?

I really don’t know, but I presume it was when I upgraded from Bluefin. I would have noticed if it had been going on any longer.

Local tests on my desktop confirm that the NVMe drive is performing well:
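A local sequential test of this kind can be approximated in a few lines. The sketch below uses a hypothetical helper (`sequential_throughput` is not from any existing tool): it writes random data (which also sidesteps ZFS compression if pointed at a pool), calls `fsync` so the write timing includes reaching the device, then reads the file back. The read is likely served at least partly from the OS page cache (or the ZFS ARC on a 128GB server), so treat the read figure as optimistic.

```python
import os
import time


def sequential_throughput(path: str, size_mb: int = 1024, block_kb: int = 1024) -> tuple[float, float]:
    """Write then read a file sequentially; return (write, read) speed in MiB/s.

    Hypothetical helper for illustration. Random data avoids inflated numbers
    from filesystem compression; fsync makes the write timing include the flush
    to disk. The read may come from cache, so it is an upper bound.
    """
    block = os.urandom(block_kb * 1024)
    blocks = size_mb * 1024 // block_kb

    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(blocks):
            f.write(block)
        f.flush()
        os.fsync(f.fileno())  # wait until the data has actually reached the device
    write_s = time.perf_counter() - start

    start = time.perf_counter()
    with open(path, "rb") as f:
        while f.read(block_kb * 1024):
            pass
    read_s = time.perf_counter() - start

    total_mib = blocks * block_kb / 1024
    return total_mib / write_s, total_mib / read_s
```

Usage would look like `sequential_throughput("D:/bench.bin", size_mb=8192)`, where the path is a placeholder on the drive under test; delete the file afterwards. A file larger than the machine's RAM gives a more honest read number.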

Each HDD reads and writes at somewhere between 140MB/s and 250MB/s, so across the pool I should be able to saturate the 10GbE link.
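The reasoning behind "should saturate" can be made explicit. This is a rough sketch assuming that large sequential transfers scale with the number of data disks in a single RAID-Z2 vdev, and assuming the layout above means 7 disks in the vdev (5 data + 2 parity) with the hot spare idle. Recordsize, fragmentation and CPU all pull real numbers below this ceiling.

```python
def raidz_streaming_estimate(width: int, parity: int, disk_mb_s: float) -> float:
    """Rough upper bound on large sequential throughput of one RAID-Z vdev.

    Assumes streaming I/O scales with the number of data disks (width - parity).
    Treat the result as a ceiling, not a prediction.
    """
    if not 0 < parity < width:
        raise ValueError("parity must be between 1 and width - 1")
    return (width - parity) * disk_mb_s


TEN_GBE_MB_S = 10_000 / 8  # 1250 MB/s nominal, before protocol overhead

# Assumed layout: 7-wide RAID-Z2 (5 data + 2 parity); the hot spare adds no bandwidth.
for per_disk in (140, 250):
    est = raidz_streaming_estimate(width=7, parity=2, disk_mb_s=per_disk)
    print(f"{per_disk} MB/s per disk -> ~{est:.0f} MB/s pool ({est / TEN_GBE_MB_S:.0%} of 10GbE)")
```

Even the pessimistic per-disk figure puts the pool at roughly 700 MB/s, more than twice the ~300MB/s cap being observed over SMB.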

When I configured the server back in 2022, I got the SMB share running and saturating the 10GbE connection both ways (running TrueNAS Core v?).

I came across this thread, which describes exactly what I’m experiencing. However, changing the setting mentioned there to something else doesn’t fix the problem or change the transfer speeds at all.

Or perhaps I’m not doing it correctly:

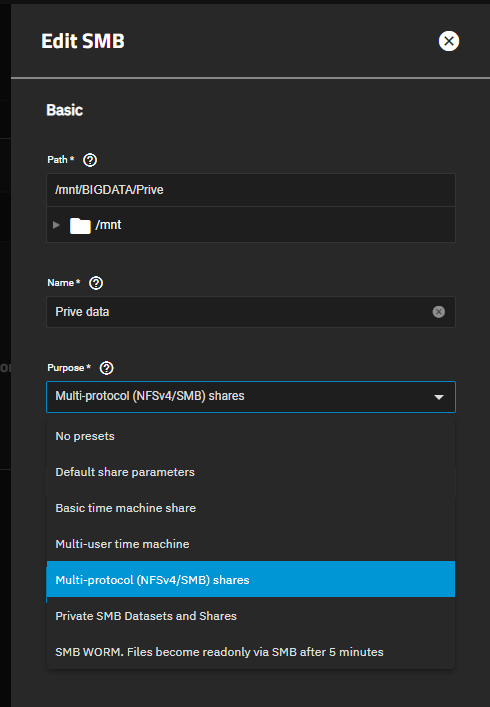

Here I tried ‘no presets’ and ‘Multi-Protocol Share’; it was originally set to ‘private SMB Datasets and Share’.

Same speeds with all of them.

Further investigating and tinkering didn’t help either (I deactivated SMART testing and backups, checked for actively connected clients, restarted the server, restarted the SMB service, and checked various things on my desktop).

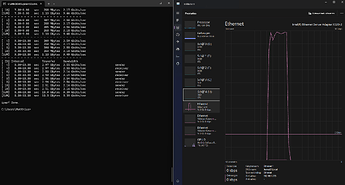

I did check the connection speed between the desktop and the server:

Same results in the reverse direction.

So the network link itself looks perfect.
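As a tool-independent cross-check, a single-stream TCP throughput test can be sketched in Python. This is a hypothetical minimal version: `receive` runs on one machine and `send` on the other. A single Python stream may fall short of 10 Gbit/s on its own because of interpreter overhead, so a low result here is less conclusive than a low result from a dedicated tool such as iperf3.

```python
import socket
import time

CHUNK = 1 << 20  # 1 MiB per send/recv call


def receive(listener: socket.socket) -> float:
    """Accept one sender, read until it closes the connection, return MB/s received."""
    conn, _ = listener.accept()
    total = 0
    with conn:
        start = time.perf_counter()
        while data := conn.recv(CHUNK):
            total += len(data)
        elapsed = time.perf_counter() - start
    return total / elapsed / 1e6


def send(host: str, port: int, seconds: float = 10.0) -> None:
    """Stream zero-filled buffers to host:port for roughly `seconds`, then close."""
    payload = bytes(CHUNK)
    with socket.create_connection((host, port)) as sock:
        deadline = time.perf_counter() + seconds
        while time.perf_counter() < deadline:
            sock.sendall(payload)
```

For example, run `print(receive(socket.create_server(("", 5001))))` on the server and then `send("192.168.1.10", 5001)` on the desktop; the port and address are placeholders. Swap the roles to test the other direction.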

Does TrueNAS SCALE have issues with the SMB protocol? I see this problem pop up many times on the forum.

Thank you all!