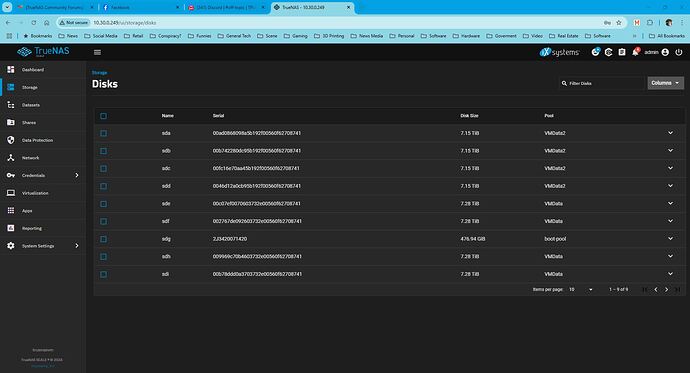

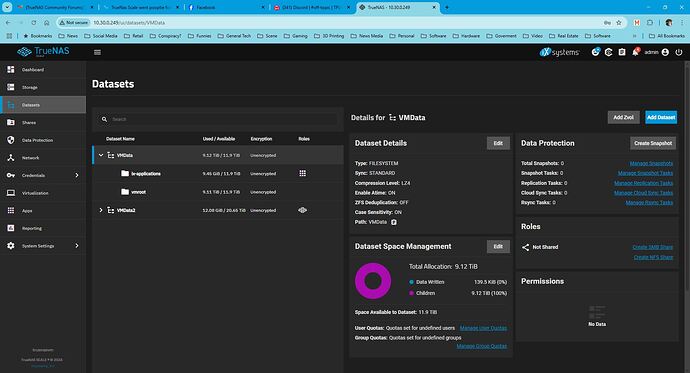

Woke up to this nightmare: this morning I found that TrueNAS SCALE had exported all of my data on its own.

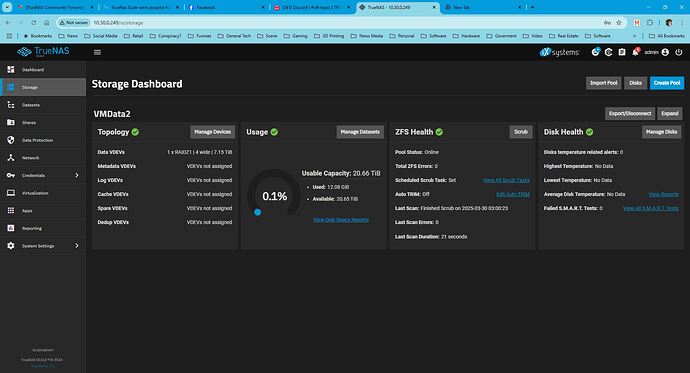

I have now reinstalled TrueNAS SCALE and attempted an import, but didn't get very far.

I wonder if anyone can help before I go jump off a bridge.

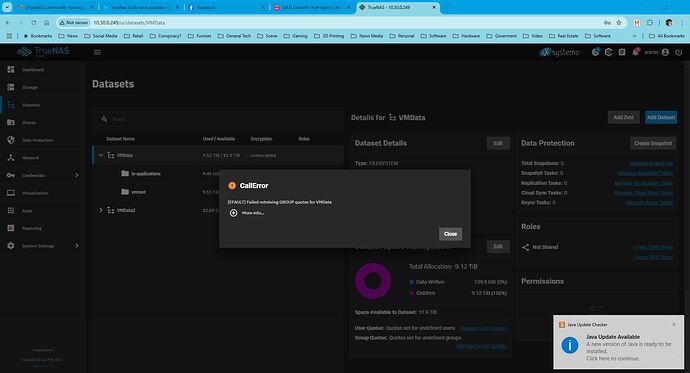

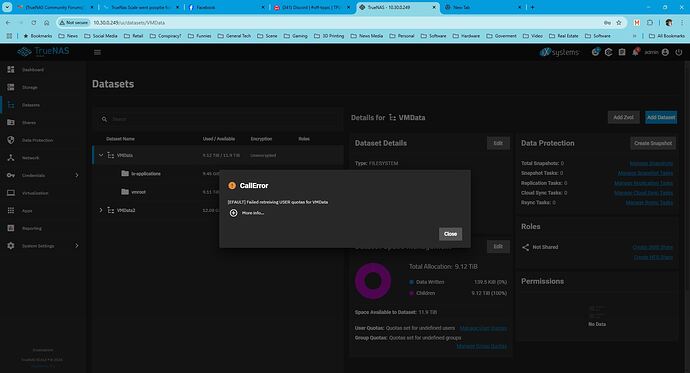

Error below:

    concurrent.futures.process.RemoteTraceback:
    """
    Traceback (most recent call last):
      File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs/pool_actions.py", line 231, in import_pool
        zfs.import_pool(found, pool_name, properties, missing_log=missing_log, any_host=any_host)
      File "libzfs.pyx", line 1374, in libzfs.ZFS.import_pool
      File "libzfs.pyx", line 1402, in libzfs.ZFS.__import_pool
    libzfs.ZFSException: cannot import 'VMData' as 'VMData': I/O error

    During handling of the above exception, another exception occurred:

    Traceback (most recent call last):
      File "/usr/lib/python3.11/concurrent/futures/process.py", line 261, in _process_worker
        r = call_item.fn(*call_item.args, **call_item.kwargs)
            ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 112, in main_worker
        res = MIDDLEWARE._run(*call_args)
              ^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 46, in _run
        return self._call(name, serviceobj, methodobj, args, job=job)
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 34, in _call
        with Client(f'ws+unix://{MIDDLEWARE_RUN_DIR}/middlewared-internal.sock', py_exceptions=True) as c:
      File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 40, in _call
        return methodobj(*params)
               ^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 183, in nf
        return func(*args, **kwargs)
               ^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs/pool_actions.py", line 211, in import_pool
        with libzfs.ZFS() as zfs:
      File "libzfs.pyx", line 534, in libzfs.ZFS.__exit__
      File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs/pool_actions.py", line 235, in import_pool
        raise CallError(f'Failed to import {pool_name!r} pool: {e}', e.code)
    middlewared.service_exception.CallError: [EZFS_IO] Failed to import 'VMData' pool: cannot import 'VMData' as 'VMData': I/O error
    """

    The above exception was the direct cause of the following exception:

    Traceback (most recent call last):
      File "/usr/lib/python3/dist-packages/middlewared/job.py", line 509, in run
        await self.future
      File "/usr/lib/python3/dist-packages/middlewared/job.py", line 554, in _run_body
        rv = await self.method(*args)
             ^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 179, in nf
        return await func(*args, **kwargs)
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 49, in nf
        res = await f(*args, **kwargs)
              ^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/plugins/pool/import_pool.py", line 114, in import_pool
        await self.middleware.call('zfs.pool.import_pool', guid, opts, any_host, use_cachefile, new_name)
      File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1629, in call
        return await self._call(
               ^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1468, in _call
        return await self._call_worker(name, *prepared_call.args)
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1474, in _call_worker
        return await self.run_in_proc(main_worker, name, args, job)
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1380, in run_in_proc
        return await self.run_in_executor(self.__procpool, method, *args, **kwargs)
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1364, in run_in_executor
        return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
    middlewared.service_exception.CallError: [EZFS_IO] Failed to import 'VMData' pool: cannot import 'VMData' as 'VMData': I/O error