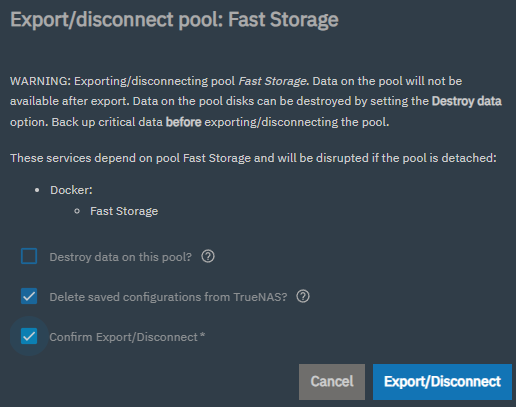

When I try to export the pool via the GUI, I get this traceback:

concurrent.futures.process._RemoteTraceback:

"""

Traceback (most recent call last):

File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/dataset_actions.py", line 70, in umount

with libzfs.ZFS() as zfs:

File "libzfs.pyx", line 534, in libzfs.ZFS.__exit__

File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/dataset_actions.py", line 72, in umount

dataset.umount(force=options['force'])

File "libzfs.pyx", line 4321, in libzfs.ZFSDataset.umount

libzfs.ZFSException: cannot unmount '/mnt/.ix-apps/app_mounts/jellyfin/transcodes-ix-applications-backup-system-update--2024-11-07_14:38:57-clone': no such pool or dataset

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/lib/python3.11/concurrent/futures/process.py", line 261, in _process_worker

r = call_item.fn(*call_item.args, **call_item.kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 116, in main_worker

res = MIDDLEWARE._run(*call_args)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 47, in _run

return self._call(name, serviceobj, methodobj, args, job=job)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 41, in _call

return methodobj(*params)

^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 178, in nf

return func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/dataset_actions.py", line 75, in umount

handle_ds_not_found(e.code, name)

File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/dataset_actions.py", line 11, in handle_ds_not_found

raise CallError(f'Dataset {ds_name!r} not found', errno.ENOENT)

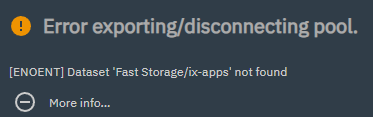

middlewared.service_exception.CallError: [ENOENT] Dataset 'Fast Storage/ix-apps' not found

"""

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/usr/lib/python3/dist-packages/middlewared/job.py", line 515, in run

await self.future

File "/usr/lib/python3/dist-packages/middlewared/job.py", line 560, in __run_body

rv = await self.method(*args)

^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 174, in nf

return await func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 48, in nf

res = await f(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/plugins/pool_/export.py", line 111, in export

await delegate.delete(attachments)

File "/usr/lib/python3/dist-packages/middlewared/plugins/docker/attachments.py", line 31, in delete

await (await self.middleware.call('docker.update', {'pool': None})).wait(raise_error=True)

File "/usr/lib/python3/dist-packages/middlewared/job.py", line 463, in wait

raise self.exc_info[1]

File "/usr/lib/python3/dist-packages/middlewared/job.py", line 515, in run

await self.future

File "/usr/lib/python3/dist-packages/middlewared/job.py", line 560, in __run_body

rv = await self.method(*args)

^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/api/base/decorator.py", line 88, in wrapped

result = await func(*args)

^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/plugins/docker/update.py", line 101, in do_update

catalog_sync_job = await self.middleware.call('docker.fs_manage.umount')

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 977, in call

return await self._call(

^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 692, in _call

return await methodobj(*prepared_call.args)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/plugins/docker/fs_manage.py", line 33, in umount

return await self.common_func(False)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/plugins/docker/fs_manage.py", line 20, in common_func

await self.middleware.call('zfs.dataset.umount', docker_ds, {'force': True})

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 977, in call

return await self._call(

^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 700, in _call

return await self._call_worker(name, *prepared_call.args)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 706, in _call_worker

return await self.run_in_proc(main_worker, name, args, job)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 612, in run_in_proc

return await self.run_in_executor(self.__procpool, method, *args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 596, in run_in_executor

return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

middlewared.service_exception.CallError: [ENOENT] Dataset 'Fast Storage/ix-apps' not found

With

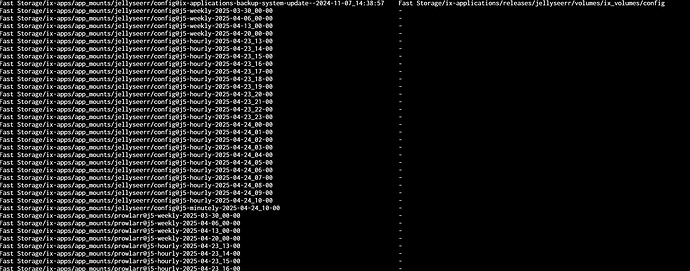

zfs list -t snapshot -o name,clones -r 'Fast Storage'

I get

admin@Johny5[~]$ sudo umount 'Fast Storage'/ix-apps

umount: /mnt/.ix-apps: target is busy.

admin@Johny5[~]$ sudo zfs destroy -R 'Fast Storage'/ix-apps/app_mounts/jellyfin/transcodes@ix-applications-backup-system-update--2024-11-07_14:38:57

cannot unmount '/mnt/.ix-apps/app_mounts/jellyfin/transcodes-ix-applications-backup-system-update--2024-11-07_14:38:57-clone': no such pool or dataset

cannot destroy snapshot Fast Storage/ix-apps/app_mounts/jellyfin/transcodes@ix-applications-backup-system-update--2024-11-07_14:38:57: snapshot is cloned
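The "snapshot is cloned" error means the snapshot still has a dependent clone, which is what the name,clones listing above is meant to reveal. A minimal sketch of filtering that listing in Python follows. It assumes the command is run with the extra `-H` flag (scripted mode: no header, tab-separated columns) and that snapshots without clones show `-` in the clones column. The function name `snapshots_with_clones` and the sample input are illustrative, not output captured from this system.

```python
def snapshots_with_clones(listing: str) -> dict[str, list[str]]:
    """Map each snapshot to its clones, skipping snapshots that have none."""
    result: dict[str, list[str]] = {}
    for line in listing.splitlines():
        if not line.strip():
            continue
        # With -H the columns are tab-separated, so the space in
        # 'Fast Storage' does not split the snapshot name.
        name, _, clones = line.partition("\t")
        clones = clones.strip()
        if clones and clones != "-":
            result[name] = clones.split(",")
    return result


# Illustrative input only, built from names that appear in this post.
sample = (
    "Fast Storage/ix-apps/app_mounts/jellyfin/transcodes"
    "@ix-applications-backup-system-update--2024-11-07_14:38:57"
    "\t"
    "Fast Storage/ix-apps/app_mounts/jellyfin/transcodes"
    "-ix-applications-backup-system-update--2024-11-07_14:38:57-clone"
    "\n"
    "Fast Storage/example@hypothetical-snapshot\t-\n"
)

for snap, clones in snapshots_with_clones(sample).items():
    print(snap, "->", clones)
```

To use it on real data, the text passed in would be the stdout of `zfs list -H -t snapshot -o name,clones -r 'Fast Storage'`, for example captured with `subprocess.run(..., capture_output=True, text=True)`.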

Opening /proc/mounts with sudo nano /proc/mounts shows this entry:

Fast\040Storage/ix-apps/app_mounts/jellyfin/transcodes-ix-applications-backup-system-update--2024-11-07_14:38:57-clone /mnt/.ix-apps/app_mounts/jellyfin/transcodes-ix-applications-backup-system-update--2024-11-07_14:38:57-clone zfs rw,noatime,xattr,posixacl,casesensitive 0 0
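In that entry the pool name appears as `Fast\040Storage` because /proc/mounts writes spaces as the octal escape `\040`. A rough sketch of decoding those escapes and listing the ZFS mounts below a given dataset is shown here. The helper names `unescape` and `zfs_mounts` are made up for illustration, and the sample line is the entry quoted above.

```python
import re

# /proc/mounts escapes space, tab, newline and backslash as \040, \011, \012, \134.
_OCTAL = re.compile(r"\\([0-7]{3})")


def unescape(field: str) -> str:
    """Turn octal escapes such as \\040 back into the characters they stand for."""
    return _OCTAL.sub(lambda m: chr(int(m.group(1), 8)), field)


def zfs_mounts(mounts_text: str, prefix: str) -> list[tuple[str, str]]:
    """Return (dataset, mountpoint) pairs for ZFS mounts at or below prefix."""
    found = []
    for line in mounts_text.splitlines():
        parts = line.split()
        # Fields: source, mountpoint, filesystem type, options, dump, pass.
        if len(parts) < 3 or parts[2] != "zfs":
            continue
        source, target = unescape(parts[0]), unescape(parts[1])
        if source == prefix or source.startswith(prefix + "/"):
            found.append((source, target))
    return found


# The entry from this post, as a raw string so the backslash is kept.
sample = (
    r"Fast\040Storage/ix-apps/app_mounts/jellyfin/transcodes-ix-applications-backup-system-update--2024-11-07_14:38:57-clone "
    r"/mnt/.ix-apps/app_mounts/jellyfin/transcodes-ix-applications-backup-system-update--2024-11-07_14:38:57-clone "
    r"zfs rw,noatime,xattr,posixacl,casesensitive 0 0"
)

for dataset, mountpoint in zfs_mounts(sample, "Fast Storage/ix-apps"):
    print(dataset, "mounted at", mountpoint)
```

On a live system the text would come from reading /proc/mounts directly, which does not need sudo or an editor.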