The issue is with server cases, not with server boards or CPUs in themselves. But if noise and cooling are a concern, I’d go for Xeon D-1500 or Atom C3000.

And the noise floor is defined by spinning drives. 16 drives are not going to be silent…

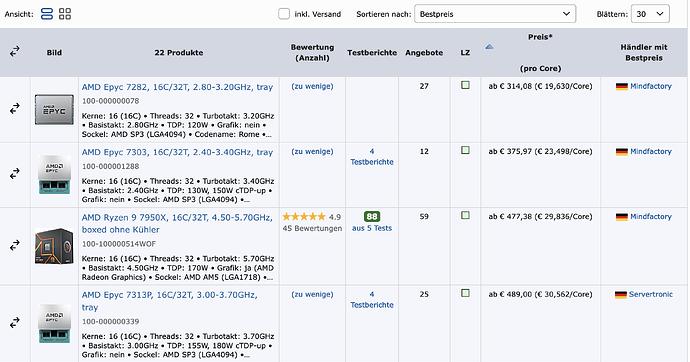

More, a lot more, PCIe lanes. And cheap DDR4 RDIMM. Idle power, however, is going to be much higher on the EPYC.

To do WHAT exactly? A NAS, in itself, is mostly idle; only the monthly scrub will bring it close to full CPU use. So these 16 cores have been picked, hopefully, for some reason other than running ZFS… Then, by design, an EPYC can run 24/7 at full speed on all cores within its declared thermal envelope. Try that on a consumer Intel CPU for a laugh!

That is the point I cannot clarify without going through the full manual, and maybe not even then. There may be other candidates as well, but that’s a lot to research.

I don’t see your use case and the design goal.

Why precisely 16 cores and 192 GB RAM? (That should not be for ZFS alone.)

Why a 4× NVMe pool next to the big and bulky HDD storage?

And why is there no mention of a NIC, ever? What’s the point of any flash storage to serve files at 1 Gb/s (or 2.5)?
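To put rough numbers on that last question, here is a quick back-of-the-envelope sketch. The NIC figures are raw line rates with no protocol overhead, and the 3000 MB/s for a single NVMe drive is my own ballpark assumption, not a figure for any drive in this build.

```python
# Raw line rate of a network link, ignoring protocol overhead.
def nic_mb_per_s(gbit_per_s):
    return gbit_per_s * 1000 / 8  # 1 Gb/s = 1000 Mb/s; 8 bits per byte

# ASSUMPTION: ballpark sequential read of one NVMe drive, in MB/s.
ASSUMED_NVME_MB_PER_S = 3000

for speed in (1, 2.5, 10):
    link = nic_mb_per_s(speed)
    share = link / ASSUMED_NVME_MB_PER_S
    print(f"{speed} Gb/s link: {link:.1f} MB/s, "
          f"about {share:.0%} of one assumed NVMe drive")
```

Whatever the exact drive figure, a 1 or 2.5 Gb/s link carries only a small fraction of what even one NVMe drive can deliver, let alone a pool of four.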

Two lanes limit the total throughput to and from all drives, which is an obvious bottleneck with 16 drives.
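A rough sketch of the arithmetic, under assumptions of mine: PCIe 3.0 (which is what these HBAs are), roughly 985 MB/s usable per lane, and about 200 MB/s sequential per HDD. None of these are measured on the actual hardware.

```python
# ASSUMPTION: approximate usable bandwidth per PCIe lane, in MB/s.
PCIE_MB_PER_S_PER_LANE = {2: 500, 3: 985, 4: 1969}

# ASSUMPTION: sequential throughput of one modern HDD, in MB/s.
ASSUMED_HDD_MB_PER_S = 200

def link_mb_per_s(gen, lanes):
    """Total usable bandwidth of a PCIe link."""
    return PCIE_MB_PER_S_PER_LANE[gen] * lanes

def per_drive_share(gen, lanes, drives):
    """Bandwidth left for each drive when all drives stream at once."""
    return link_mb_per_s(gen, lanes) / drives

for lanes in (2, 8):
    share = per_drive_share(3, lanes, 16)
    verdict = "bottleneck" if share < ASSUMED_HDD_MB_PER_S else "ok"
    print(f"PCIe 3.0 x{lanes}: {link_mb_per_s(3, lanes)} MB/s total, "
          f"{share:.0f} MB/s per drive ({verdict})")
```

With all 16 drives busy (a scrub or resilver, for instance), x2 leaves each drive well under what it can stream on its own, while x8 has headroom to spare.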

More critically, it also limits the power that is available to the HBA. A 9300-16i has an extra power connector because it could draw more than what the PCIe slot can deliver. A 9305-16i can feed fully off a PCIe x8 slot, but probably not on x2.

Otherwise get any board which can bifurcate the “GPU lanes” x8/x8 to two slots and throw in an adapter with a PCIe switch for the M.2.

Generally, I’m looking for balance in the allocation of resources. Balance which I do not see, for instance, in the pairing of a cheap consumer motherboard and a large amount of expensive DDR5 RAM (it’s still two times more expensive per GB than DDR4).
