Hey guys!

I could really use a bit of expert input on a pressing issue…

System:

- TrueNAS SCALE VM under Proxmox, latest Electric Eel release

- TrueNAS has its own 6x SATA controller via passthrough

- 3x 6 TB HDDs are sitting in a SATA backplane, which connects to the SATA controller

- All of the HDDs are the exact same model, size and age

- 2x HDDs in ZFS mirror config

- 1x HDD configured as hot spare

After one of my active HDDs started to fail, I looked up the official docs on how to replace a failing HDD [I can't include links according to the forum, but it is the first Google result].

I followed the “replacing with a hot spare” steps:

…

- take HDD offline (pool shows as degraded)

- remove HDD physically and woosh…

… the pool stopped showing up as degraded, and instead of swapping in the hot spare (I ran a SMART check on that one beforehand) it now simply stated “online”.

Without any further input from my side, the remaining drive now shows up as a stripe instead of a mirror. That also means I can't just remove the spare from the pool (it was still connected as a spare up to this point), go to [STORAGE] → [Add to pool] → [Data] and rebuild the mirrored config by adding the spare there, because my remaining drive shows up as “stripe” in that menu and the only option available is to add another “stripe” to it.

Guys I am a noob and even though I have backups I am scared now that this is really bad and I have no idea how to best get my remaining 2 drives back into a mirrored config, without having to recreate the whole pool, my datasets, shares and permissions.

I really hope someone tells me that there is a super easy fix and I worried for nothing!

I really appreciate your help!

I guess if something like this can happen when strictly following the official docs, and there is not even a solution other than setting up a new pool, copying all files and recreating all settings, then TrueNAS is still not mature enough for everyday use…

Did you “offline” the bad drive or “detach” it?

Choosing “Detach” will indeed convert it from a two-way mirror to a single-drive stripe.

That menu is meant to add an entirely new vdev to the pool itself.

You’re rather meant to go to the vdevs (not sure where it is in SCALE, but in Core it’s under the Pool → Status page), and replace the drive there. To upgrade from a stripe to a mirror, you would “attach” to the mirror vdev. (The GUI refers to it as “Extend” for some silly reason…)
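For anyone who thinks in shell commands rather than GUI menus, here is a minimal sketch of that distinction. It only builds the argument lists for `zpool attach` and `zpool add` and never runs them. The pool name `tank` and the disk names `sda` and `sdb` are hypothetical placeholders, and on TrueNAS the GUI is normally the right place to make the actual change.

```python
def attach_cmd(pool: str, existing_disk: str, new_disk: str) -> list[str]:
    """Mirror new_disk onto the vdev that already contains existing_disk.

    This is what turns a single-drive stripe into a two-way mirror.
    """
    return ["zpool", "attach", pool, existing_disk, new_disk]


def add_vdev_cmd(pool: str, new_disk: str) -> list[str]:
    """Add new_disk as a separate top-level vdev (a second stripe member).

    This is the "Add to pool" style operation and does NOT create a mirror.
    """
    return ["zpool", "add", pool, new_disk]


# Hypothetical names, printed for comparison only; nothing is executed.
print(" ".join(attach_cmd("tank", "sda", "sdb")))
print(" ".join(add_vdev_cmd("tank", "sdb")))
```

The first command gives redundancy back; the second would leave the pool as two independent stripes with no redundancy at all.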

I made sure to follow the docs step by step:

Detaching a Failed Disk

After taking the failed disk offline and physically removing it from the system, go to the Storage Dashboard and click Manage Devices on the Topology widget for the degraded pool to open the Devices screen for that pool. Click [icon] next to the VDEV to expand it, then look for the disk with the REMOVED status.

I clicked on “Offline” which made the pool show up as “degraded” then removed it from the backplane. When I went back to the screen it was showing up as “Online” instead of degraded.

If that’s really what happened, then it sounds like a bug.

See edit below.

I’ve gone through drive replacements for mirror vdevs in TrueNAS Core, which were straight to the point and worked as expected.

- Offline failing drive (“Offline”)

- Shut down server

- Physically remove failing drive

- Physically plug in replacement drive

- Power on server

- Replace with new drive (“Replace”)

Shutting down is optional if you have hot-plug. (I like to shut down anyway.)
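Roughly, those steps correspond to the ZFS commands below. This is only a sketch that prints the sequence rather than executing it. The names `tank`, `sdb` and `sdd` are made up, and I am assuming the GUI's “Offline” and “Replace” buttons map onto `zpool offline` and `zpool replace` in the obvious way.

```python
def replacement_plan(pool: str, failing: str, replacement: str) -> list[list[str]]:
    """Return the command sequence for an offline-then-replace disk swap."""
    return [
        # Take the failing disk out of service; the mirror becomes DEGRADED.
        ["zpool", "offline", pool, failing],
        # (Physically swap the drives between these two steps.)
        # Resilver onto the new disk in place of the old one.
        ["zpool", "replace", pool, failing, replacement],
        # Watch the resilver progress and confirm the mirror is healthy again.
        ["zpool", "status", "-v", pool],
    ]


# Hypothetical pool and disk names; the plan is printed, not run.
for step in replacement_plan("tank", "sdb", "sdd"):
    print(" ".join(step))
```

Note there is no `zpool detach` anywhere in that sequence, which is the step that would collapse the mirror into a single-drive stripe.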

EDIT: “Online” as a single-drive stripe, or “Online” with a hot spare currently being triggered for the mirror vdev?

Here is what I advised on Reddit (having dealt with this entitled user’s whinging complaint about my first comment there):

Firstly, please provide a link to where you got the instructions for “replacing with a hot spare”, because you claim on Reddit that this was an “official document” but I couldn’t find any iX documentation that matches this description or the description of your original problem.[1]

The good news is that you should be able to put this right without having to rebuild your pool and recreate all the datasets and permissions etc. because vdevs in a pool consisting only of single disks and mirrors can be removed and mirrors can be added. So we might be able to help you put things right either through the UI or by using shell commands.

So let’s start by getting detailed information about your configuration (i.e. full hardware details inc. controller and disk models) and about how you have configured your Proxmox controller and disk passthrough/blacklisting, and the current pool status i.e. the output of zpool status -v.
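To show what I would be looking for in that output, here is a small sketch that reads the config section of `zpool status -v` and reports whether each top-level data vdev is a mirror or a lone disk. The sample text, the pool name `tank` and the disk names are invented for illustration, and the parser assumes the usual two-space indentation per level, so treat it as a reading aid rather than a robust tool.

```python
# Invented sample resembling the situation described: one lone disk plus a spare.
SAMPLE = """\
  pool: tank
 state: ONLINE
config:

\tNAME        STATE     READ WRITE CKSUM
\ttank        ONLINE       0     0     0
\t  sda       ONLINE       0     0     0
\tspares
\t  sdc       AVAIL

errors: No known data errors
"""


def data_vdevs(status_text: str, pool: str) -> list[str]:
    """Return the names of the top-level data vdevs listed under `pool`."""
    vdevs: list[str] = []
    in_pool = False
    pool_indent = 0
    for raw in status_text.splitlines():
        line = raw.expandtabs(8)
        stripped = line.strip()
        if not stripped:
            if in_pool:
                break  # a blank line ends the config table
            continue
        indent = len(line) - len(line.lstrip())
        name = stripped.split()[0]
        if not in_pool:
            if name == pool:  # the pool's own row starts the tree
                in_pool, pool_indent = True, indent
            continue
        if indent <= pool_indent:
            break  # reached "spares", "logs", etc.
        if indent == pool_indent + 2:
            vdevs.append(name)  # direct child of the pool
    return vdevs


def describe(vdev: str) -> str:
    """Classify a top-level vdev by its name as shown in zpool status."""
    if vdev.startswith("mirror-"):
        return "mirror"
    if vdev.startswith("raidz"):
        return "raidz"
    return "single disk (stripe)"


for v in data_vdevs(SAMPLE, "tank"):
    print(v, "->", describe(v))
```

A healthy two-way mirror would instead show a `mirror-0` row directly under the pool with both disks indented beneath it.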

P.S. I will go further and state that it was perhaps somewhat foolhardy for a self-proclaimed “noob” to create a complex environment of TrueNAS running under Proxmox, where misconfiguration of Proxmox is a common cause of data loss, when they don’t have sufficient technical knowledge to know how to configure Proxmox correctly or to sysadmin their system.

[1] With the hint in a later post I was able to search and find the correct page: Replacing Disks | TrueNAS Documentation Hub

“Online” as a single stripe with the hot spare still there as hot spare

I only removed the hot spare some time later, when it became clear that the process according to the docs won't work. I then removed it in the hope that I could simply add it to the pool and then resilver.

Sorry dude I really have no idea what your problem is with me or why you consider asking for help entitled but I still appreciate your willingness to help.

It sounds like you clicked “Detach” instead of “Offline”. Unless there’s a new bug in SCALE. I can only speak for Core.

You can still convert the single-drive stripe into a two-way mirror.

Just choose “Extend” (a poorly named menu item) for the single-drive vdev, and choose the good drive.

This will trigger a resilver, and now you’ll once again have a two-way mirror vdev.

EDIT: If your pool is only comprised of a single vdev (in this case a mirror), you might as well configure it as a three-way mirror, instead of a hot spare. There are no other vdevs in the pool to really make use of the flexibility of a hot spare.

With a three-way mirror, you have the same redundancy as RAIDZ2, but without harming performance or adding complexity with future management.

If you change your mind later, you can always detach the third drive from the mirror and delegate it once again as a hot spare.
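As a rough sketch of what switching back and forth looks like at the ZFS level, the snippet below builds (but does not run) the command pairs for each direction. The names `tank`, `sda` and `sdc` are hypothetical, and in practice you would do this through the GUI.

```python
def spare_to_mirror_member(pool: str, mirror_disk: str, spare: str) -> list[list[str]]:
    """Promote a hot spare to a full member of the mirror containing mirror_disk."""
    return [
        ["zpool", "remove", pool, spare],               # release the disk from spare duty
        ["zpool", "attach", pool, mirror_disk, spare],  # resilver it in as a mirror member
    ]


def mirror_member_to_spare(pool: str, member: str) -> list[list[str]]:
    """Demote one mirror member back to a hot spare."""
    return [
        ["zpool", "detach", pool, member],        # mirror drops by one disk
        ["zpool", "add", pool, "spare", member],  # re-register the disk as a spare
    ]


# Hypothetical names; commands are printed for illustration only.
for step in spare_to_mirror_member("tank", "sda", "sdc"):
    print(" ".join(step))
for step in mirror_member_to_spare("tank", "sdc"):
    print(" ".join(step))
```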

Thank you very much, it is resilvering right now, I really appreciate the help!

My reason for having it as a hot spare is so I can leave the drive sitting there with power management set to spin down. This way it uses hardly any power, sees no wear, and can still swap in if it is needed (if it works as intended).

Fair enough.

I use three-way mirrors myself. (Sue me, all you RAIDZ’ers!)

I might write up a new post why three-way mirrors shouldn’t be dismissed, and can be very handy for home users.

As for the original issue, it’s either a true “bug” in SCALE, in which selecting “Offline” triggered the wrong action, or you really did select “Detach” by mistake.

Do you work for iX or are you just a sad mega fanboy?

According to your (kinda flawed) logic:

- Nobody is supposed to install any piece of software before studying its complete knowledgebase

- You want everyone who wants to setup a TrueNAS install to ask the forums first

- Following official docs is “blindly trying stuff” so people are supposed to ask the forums even though there are docs for that specific case

- wut?

- I provided every detail that was asked from me

Please start questioning your life choices if you feel personally slighted every time someone faces an issue with the company/product/concept you selected as the center of your personality.

The first thing you did when I asked for help was to look for ways to discredit what I said which is not a normal response to my OP if you think about it.

Anyway I am grateful for people like @winnielinnie who took time to try to help me and actually provided the solution.

I am happy right now because everything is back up and working, and I am not interested in arguing about this anymore, so I'll leave it at that:

I know enough to set up and manage servers and networks, write some code and I know how to follow docs. I followed the docs and that left me in the situation I mentioned in my OP. There was no further “playing around” that made things worse like you mentioned. I provided all the info you asked until the point someone else chimed in and provided a solution.

Your reaction is completely out of proportion and it really seems like you are emotionally invested in all of this far more than is healthy and it might make sense to rethink your priorities.

I work for iX and I’d like to jump in here.

If our official documentation or webUI is unclear, ambiguous, or makes it easy to make the wrong step in doing a safe and effective disk replacement (provided the underlying pool is capable of it, of course) then I consider that as me needing to hold the L and update whatever needs the extra polish.

@freaked1234 I appreciate the feedback about the process. I’ll run through the steps again and see if there’s something specific we can narrow down on.

For those of you who offered helpful advice and created a welcoming environment to help out a concerned new user, thank you.

I appreciate you chiming in here!

I am aware that certain people just don't want to admit that they made a mistake and damaged something in the process, but I am quite sure I did exactly what the docs said.

If I can help recreate the issue I will gladly do so, as long as there is no risk to my data.

No need to put your data at risk - let us do that with throwaway data and throwaway disks.

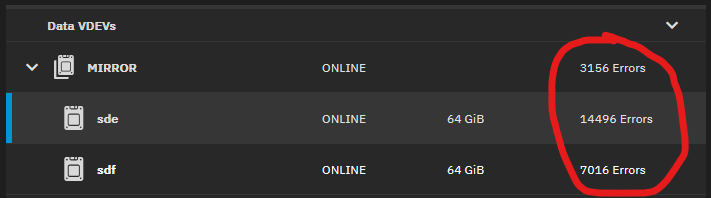

Because I’m sure not going to put data I care about on these

Hah, yea that makes sense.

I had 3 identical drives in that pool, 2 mirrored and the third as hot spare. I also don't really get why the spare wasn't used while the pool was still showing up as “degraded”, before I pulled the failing drive out of its drive bay.

To clarify:

Under “Device Management” where I set the failing drive to “offline” all of the drives were listed, the 2 mirrored ones as well as the hot spare.

I expected the hot spare to “do something” when the pool showed “degraded” but nothing happened.

Bit of a random one, but you’re not using zpool checkpoints, are you? I discovered recently that they don’t play nice with hot spares.