Hi,

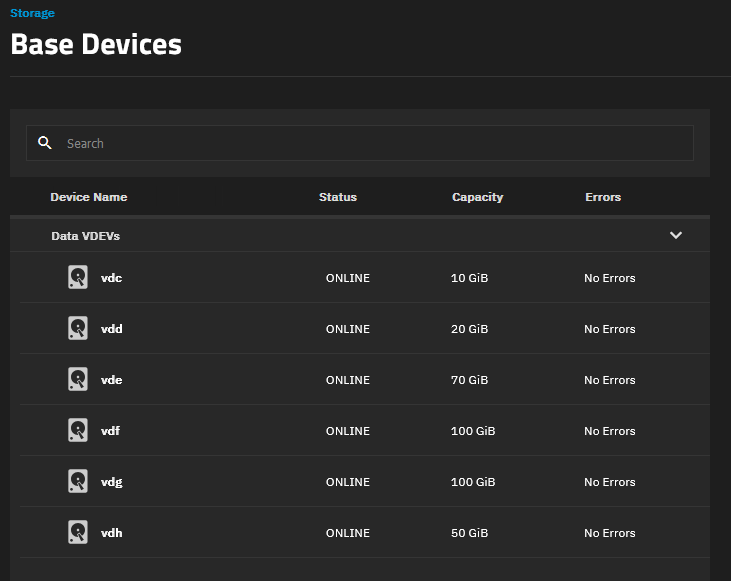

I’m using TrueNAS SCALE Dragonfish-24.04.2 in a VM, and I created a data pool named “base” with 350GB of raw disk (6 vdevs, each backed by several already-protected disks, combined in a stripe to reach that amount).

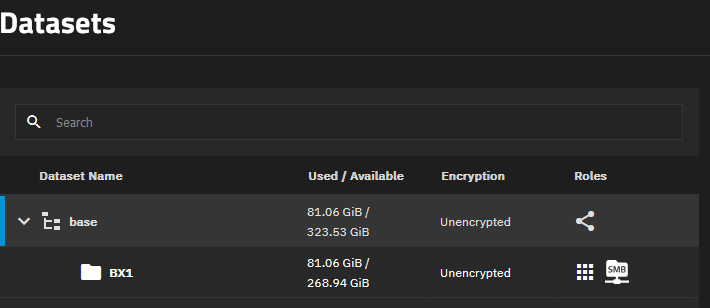

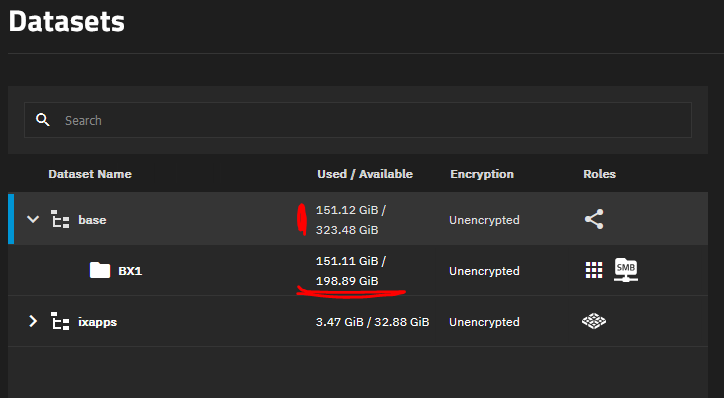

Under this dataset (DS) I created a second DS (a child) called base/BX1.

I wrote some files to the BX1 DS, 81GB in total. The available space decreases correctly in this DS but, as you can see in the picture, it doesn’t decrease on the main DS, base.

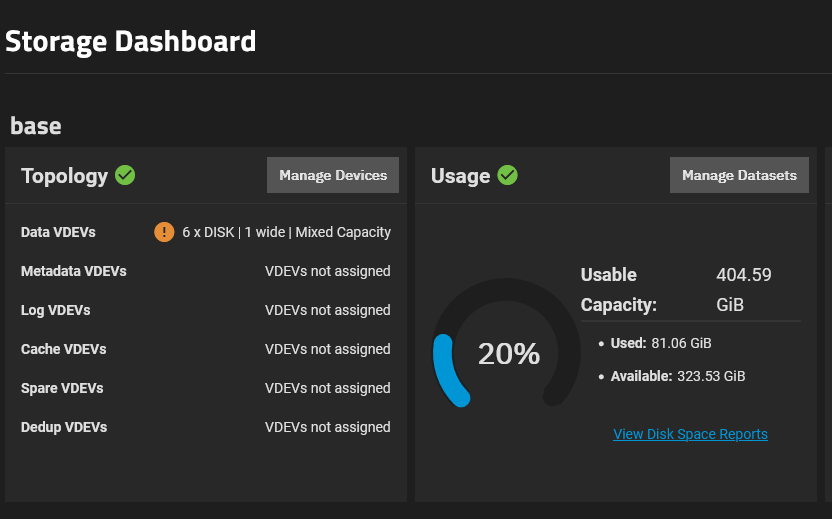

Another point is that in the storage view we have more “usable capacity” than the raw device (404.59GB vs 350GB of total raw disks):

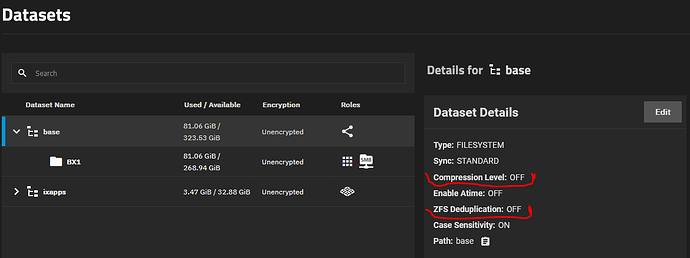

Note that we don’t have dedup or compression active. All data is written as-is and occupies the corresponding space in the filesystem.

The behaviour is the same through the API: if I check the available space in the main DS, I get the full amount of space even though part of it has been used by a child.

Be aware that if I keep writing files to the child DS, the “usable capacity” keeps growing instead of decreasing. It seems to be calculated as “used + available”, but “available” doesn’t account for the space occupied by the children.

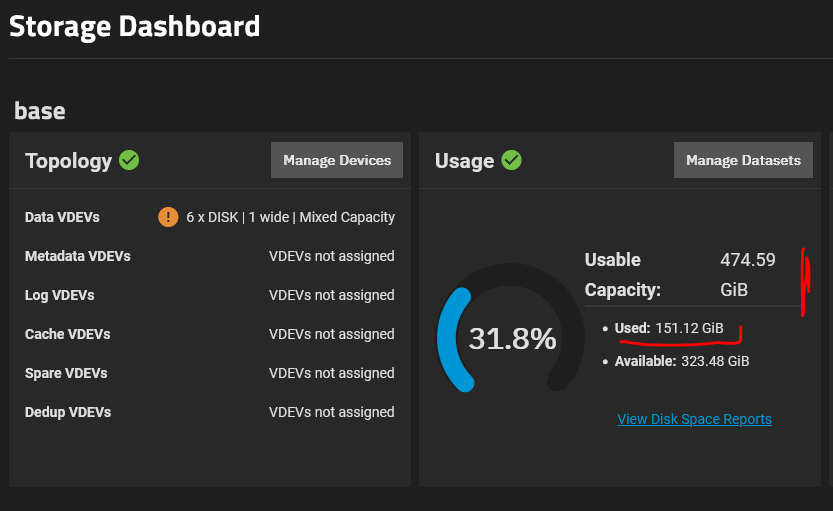

If I write another 70GB of files, I get this situation:

As you can see, used capacity grows by another 70GB, but “available” still remains at 323.48GB!!!

“Usable capacity” is 474.59GB !!!
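To make the arithmetic explicit, here is a small sketch that checks my guess that “usable capacity” is simply “used + available”. It only uses the figures quoted in this post; the “used” values are derived by subtraction, not read from the UI, and the function name is just something I made up for illustration.

```python
# Figures quoted in this post, in GB.
AVAILABLE = 323.48            # "available" on the main DS, unchanged after both writes
USABLE_AFTER_FIRST = 404.59   # "usable capacity" after the first ~81GB
USABLE_AFTER_SECOND = 474.59  # "usable capacity" after another 70GB


def implied_used(usable, available):
    """Used space implied by the assumption usable = used + available."""
    return round(usable - available, 2)


used_first = implied_used(USABLE_AFTER_FIRST, AVAILABLE)
used_second = implied_used(USABLE_AFTER_SECOND, AVAILABLE)
growth = round(used_second - used_first, 2)

print(f"implied used after first write:  {used_first} GB")
print(f"implied used after second write: {used_second} GB")
print(f"growth in usable capacity:       {growth} GB")
```

Under that assumption the implied used space is about 81GB and then about 151GB, and the 70GB growth in “usable capacity” matches exactly the extra data I wrote, which is what I would expect if “available” never moves.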

This is the best storage I know. The more you use it, the more “usable capacity” you have.

In the child DS you can see that the available space is reduced, but not in the main DS:

The problem is that this way it seems I can write much more data than I actually can.

The main DS reports 323.48GB of available space, but that’s not true!!!

All these numbers are also reflected in the API, so any check on available space gives a wrong result, because the reported available space itself is wrong. (Available space in a zpool should be the same for every DS on it, or lower where I set a quota on one of them.)
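One way to cross-check the UI/API numbers against ZFS itself would be something like the sketch below, run from the TrueNAS shell. It assumes `zfs list` is reachable from Python there; `-H` drops the header and separates columns with tabs, and `-p` prints exact byte counts. The helper names are my own, and the “every DS should report the same available space as the pool root” rule is only my expectation as stated above, not something I have confirmed.

```python
import subprocess


def read_zfs_list(pool):
    """Run `zfs list` for the pool and all its children (tab-separated, exact bytes)."""
    result = subprocess.run(
        ["zfs", "list", "-Hp", "-r", "-o", "name,used,avail", pool],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def parse_zfs_list(text):
    """Turn `name<TAB>used<TAB>avail` lines into {name: (used, avail)} in bytes."""
    datasets = {}
    for line in text.splitlines():
        if not line.strip():
            continue
        name, used, avail = line.split("\t")
        datasets[name] = (int(used), int(avail))
    return datasets


def avail_mismatches(datasets, pool):
    """Return {name: avail} for every DS whose avail differs from the pool root's."""
    root_avail = datasets[pool][1]
    return {
        name: avail
        for name, (_, avail) in datasets.items()
        if name != pool and avail != root_avail
    }
```

Calling `avail_mismatches(parse_zfs_list(read_zfs_list("base")), "base")` should then list every DS (BX1 in my case) whose available space differs from that of the main DS. A DS with a quota would legitimately show up here too, so the result still needs to be read with that in mind.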

Has anyone else noticed this behaviour?

How can I solve it?

Regards,

Rosario