I’m posting this mainly for fun, and for critique of the methodology if you spot an oversight in how I ran my tests (I’m not an expert in this area).

1. Purpose: Compare the effect of the volume block size (volblocksize) of the zvols on my Tier1 pool across a set of application workloads present in my test data center.

2. System: TrueNAS SCALE 24.10, HP ProLiant DL380p Gen8, dual Intel Xeon E5-2630L v2 @ 2.40 GHz, 220 GB RAM.

3. Pool: 20 × Intel DC S3500 800 GB in 10 mirrored vdevs, connected via an LSI SAS 9217-8i (2 × SFF-8087), with SLOG and L2ARC on a P3700.

4. Tested volblocksizes: 4K, 8K, 16K, 32K, 64K, 128K.

5. Selected Application Workloads:

```bash
# Define FIO test parameters: each entry is a job name followed by fio options.
# Note: --bs=A,B sets the read block size to A and the write block size to B.
TESTS=(
  "General_IO --bs=4k,128k --rw=randrw --rwmixread=50 --size=4G --iodepth=32 --numjobs=12 --runtime=60"
  "Exchange --bs=8k --rw=randrw --rwmixread=70 --size=4G --iodepth=32 --numjobs=12 --runtime=120"
  "Security_Onion_Ingest --bs=128k --rw=write --size=16G --iodepth=64 --numjobs=16 --runtime=300"
  "Security_Onion_Search --bs=4k --rw=randread --size=8G --iodepth=32 --numjobs=12 --runtime=300"
  "Elastiflow --bs=64k --rw=write --size=10G --iodepth=32 --numjobs=8 --runtime=300"
  "Trellix --bs=8k --rw=randrw --rwmixread=50 --size=4G --iodepth=32 --numjobs=16 --runtime=120"
  "File_Server --bs=4k,64k --rw=randrw --rwmixread=60 --size=8G --iodepth=16 --numjobs=10 --runtime=180"
  "VMware_Appliances --bs=4k,32k --rw=randrw --rwmixread=70 --size=16G --iodepth=64 --numjobs=16 --runtime=180"
  "Mixed_VMs --bs=4k,128k --rw=randrw --rwmixread=50 --size=32G --iodepth=32 --numjobs=24 --runtime=300"
)
```
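The post doesn’t show the driver that feeds the TESTS array to fio, so here is a minimal sketch of how such a sweep could look. Everything beyond the TESTS strings is an assumption, not taken from the original runs: the pool name `tank`, the 100G zvol size, and the fio options `--ioengine=libaio --direct=1 --group_reporting` with JSON output. Because volblocksize can only be set when a zvol is created, the sketch creates one zvol per size, runs each test against its `/dev/zvol/...` device, and destroys it afterwards. By default it only prints the commands.

```bash
#!/usr/bin/env bash
# Minimal volblocksize sweep sketch. Prints commands only, unless DRY_RUN=0.
# ASSUMPTIONS (not from the original runs): pool name, zvol size, and the fio
# options --ioengine=libaio --direct=1 --group_reporting plus JSON output.
set -euo pipefail

POOL="${POOL:-tank}"            # hypothetical pool name
ZVOL_SIZE="${ZVOL_SIZE:-100G}"  # hypothetical; must exceed the largest --size
DRY_RUN="${DRY_RUN:-1}"
VOLBLOCKSIZES=(4K 8K 16K 32K 64K 128K)

# Two entries from the TESTS array; add the rest the same way.
TESTS=(
  "Exchange --bs=8k --rw=randrw --rwmixread=70 --size=4G --iodepth=32 --numjobs=12 --runtime=120"
  "Security_Onion_Search --bs=4k --rw=randread --size=8G --iodepth=32 --numjobs=12 --runtime=300"
)

# Print a command; execute it only when DRY_RUN=0.
run() {
  echo "$*"
  if [[ "$DRY_RUN" == "0" ]]; then "$@"; fi
}

# Fill the global array FIO_ARGS for one TESTS entry ($1), target device ($2)
# and volblocksize label ($3). The first word of the entry is the job name.
build_fio_args() {
  local t="$1" dev="$2" vbs="$3" name="${1%% *}"
  local -a opts
  read -r -a opts <<< "${t#* }"
  FIO_ARGS=(fio --name="$name" --filename="$dev" --ioengine=libaio --direct=1
            --group_reporting "${opts[@]}" --output-format=json
            --output="${name}_${vbs}.json")
}

for vbs in "${VOLBLOCKSIZES[@]}"; do
  zvol="${POOL}/fio_vbs_${vbs}"
  # volblocksize is fixed at creation time, so each size needs its own zvol.
  run zfs create -V "$ZVOL_SIZE" -o volblocksize="$vbs" "$zvol"
  for t in "${TESTS[@]}"; do
    build_fio_args "$t" "/dev/zvol/${zvol}" "$vbs"
    run "${FIO_ARGS[@]}"
  done
  run zfs destroy "$zvol"
done
```

Running it as-is lists the commands for review; something like `POOL=Tier1 DRY_RUN=0 bash sweep.sh` (hypothetical script name) would execute them, including the `zfs destroy` of each test zvol.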

6. Combined Results Summary

Note: The numbers below are rounded averages drawn from the individual fio outputs; bandwidth (BW) is in MiB/s.

| Block Size | General_IO (Read IOPS / BW, Write IOPS / BW) | Exchange (Read IOPS / BW, Write IOPS / BW) | Sec_Onion_Ingest (Write IOPS / BW) | Sec_Onion_Search (Read IOPS / BW) | Elastiflow (Write IOPS / BW) | Trellix (Read IOPS / BW, Write IOPS / BW) | File_Server (Read IOPS / BW, Write IOPS / BW) | VMware_Appliances (Read IOPS / BW, Write IOPS / BW) | Mixed_VMs (Read IOPS / BW, Write IOPS / BW) |
|---|---|---|---|---|---|---|---|---|---|
| 4K | ~4.2k / 16.2 MiB, ~4.2k / 521 MiB | ~33.8k / 264 MiB, ~14.5k / 114 MiB | ~6.8k / 847 MiB | ~378k / 1475 MiB | ~9.5k / 592 MiB | ~24.1k / 188 MiB, ~24.1k / 188 MiB | ~8.7k / 33.8 MiB, ~5.8k / 360 MiB | ~22.4k / 87.6 MiB, ~9.6k / 301 MiB | ~3.9k / 15.1 MiB, ~3.9k / 483 MiB |
| 8K | ~6.1k / 23.7 MiB, ~6.1k / 759 MiB | ~36.5k / 285 MiB, ~15.6k / 122 MiB | ~8.9k / 1107 MiB | ~398k / 1556 MiB | ~12.4k / 773 MiB | ~25.9k / 188 MiB, ~25.9k / 188 MiB | ~12.1k / 47.2 MiB, ~8.1k / 503 MiB | ~29.9k / 117 MiB, ~12.8k / 401 MiB | ~5.6k / 22.0 MiB, ~5.6k / 704 MiB |
| 16K | ~5.9k / 22.9 MiB, ~5.9k / 735 MiB | ~37.4k / 292 MiB, ~16.1k / 125 MiB | ~6.7k / 841 MiB | ~379k / 1480 MiB | ~13.6k / 850 MiB | ~27.8k / 217 MiB, ~27.8k / 217 MiB | ~15.0k / 58.7 MiB, ~10.0k / 626 MiB | ~32.7k / 128 MiB, ~15.7k / 438 MiB | ~7.2k / 28.1 MiB, ~7.2k / 900 MiB |
| 32K | ~8.2k / 32.0 MiB, ~8.2k / 1024 MiB | ~41.2k / 322 MiB, ~17.7k / 138 MiB | ~8.7k / 1091 MiB | ~375k / 1464 MiB | ~12.8k / 841 MiB | ~30.8k / 241 MiB, ~30.8k / 241 MiB | ~16.0k / 62.4 MiB, ~10.6k / 626 MiB | ~36.2k / 128 MiB, ~15.7k / 438 MiB | ~7.1k / 27.9 MiB, ~7.1k / 704 MiB |
| 64K | ~8.4k / 32.9 MiB, ~8.4k / 1053 MiB | ~44.5k / 347 MiB, ~19.1k / 149 MiB | ~8.4k / 1053 MiB | ~363k / 1417 MiB | ~12.8k / 803 MiB | ~33.0k / 258 MiB, ~33.0k / 258 MiB | ~17.0k / 66.5 MiB, ~11.3k / 709 MiB | ~36.4k / 142 MiB, ~15.7k / 488 MiB | ~7.3k / 28.7 MiB, ~7.3k / 918 MiB |
| 128K | ~7.2k / 28.3 MiB, ~7.3k / 907 MiB | ~41.9k / 327 MiB, ~18.0k / 140 MiB | ~8.4k / 1047 MiB | ~366k / 1430 MiB | ~13.9k / 867 MiB | ~33.3k / 260 MiB, ~33.4k / 261 MiB | ~16.8k / 65.8 MiB, ~11.2k / 701 MiB | ~36.4k / 142 MiB, ~15.6k / 488 MiB | ~8.3k / 28.7 MiB, ~8.3k / 1032 MiB |
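Since each cell pairs IOPS with bandwidth for a known fio block size, the bandwidth should come out close to IOPS × block size (fio counts IOPS with k = 1000 and reports bandwidth in MiB/s). The sketch below checks a few cells copied from the table. The 5% tolerance is an arbitrary choice, comfortably above what rounding to 0.1k IOPS could introduce; a row marked CHECK may simply be a slip made while averaging by hand and is worth comparing against the raw fio output.

```bash
#!/usr/bin/env bash
# Consistency check: bandwidth should be close to IOPS x block size.
# Input rows: label  kIOPS  block_size_KiB  reported_MiB_per_s
set -euo pipefail

TOL="${TOL:-5}"   # tolerance in percent; an arbitrary choice for this sketch

check_bw() {
  awk -v tol="$TOL" '
    NF == 4 {
      expected = $2 * 1000 * $3 / 1024   # kIOPS -> IOPS, x KiB = KiB/s, -> MiB/s
      dev = ($4 - expected) / expected * 100
      flag = (dev > tol || dev < -tol) ? "CHECK" : "ok"
      printf "%-28s expected %7.1f MiB/s, reported %7.1f (%+.1f%%) %s\n",
             $1, expected, $4, dev, flag
    }'
}

# A few cells copied from the table; block size is the read or write size
# fio used for that side of the job.
check_bw <<'EOF'
4K/General_IO/write        4.2 128  521
8K/Trellix/read           25.9   8  188
32K/VMware_Appliances/read 36.2  4  128
32K/File_Server/write     10.6  64  626
32K/Mixed_VMs/write        7.1 128  704
64K/Exchange/read         44.5   8  347
EOF
```

For these six cells, the General_IO and Exchange rows agree within 1%, while the other four come out roughly 5% to 21% below IOPS × block size.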

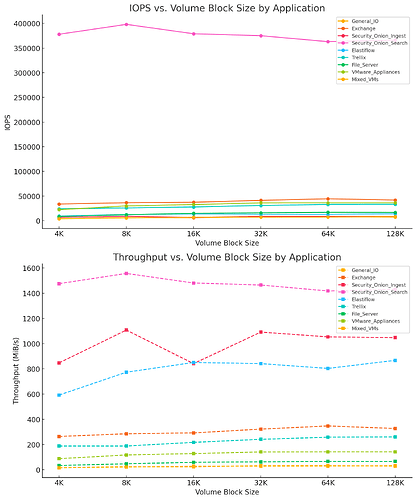

7. ChatGPT-Created Graphs and Summary:

Comprehensive Analysis

- Sequential (Large) I/O Performance:
  - General_IO & Security_Onion_Ingest: As the volblocksize increases, large-block write throughput improves substantially. For example, General_IO writes rise from ~521 MiB/s at 4K to ~1024 MiB/s at 32K, with similarly high throughput at 64K and 128K.
  - Exchange & VMware_Appliances: These mixed random read/write workloads also show better throughput at the larger block sizes (32K, 64K, 128K) than at the smaller settings.

- Random (Small) I/O Performance:
  - Security_Onion_Search: This workload is very sensitive to small random I/O. It peaks at 8K (~398k IOPS, versus ~378k at 4K), and the difference between 16K and 32K is small: about 1% in IOPS (~379k vs. ~375k).
  - Other Workloads (e.g., Trellix, File_Server): For tests that combine random reads and writes, such as Trellix and File_Server, performance is comparable between 32K and 64K, with only marginal (few-percent) differences.

- Key Trade-offs:
  - Between 16K and 32K: In the Security_Onion_Ingest test, sequential write throughput rises roughly 30% (from ~841 MiB/s at 16K to ~1091 MiB/s at 32K), while the worst-case random I/O (Security_Onion_Search) drops by about 1%. This indicates a favorable trade-off: a major boost in large-transfer performance for a negligible loss in random performance.
  - Between 32K and 64K: The differences shrink further. For many tests, sequential throughput is nearly identical (64K sometimes writes about 2–3% more), but random IOPS in the most sensitive tests are about 3–4% lower at 64K than at 32K.
  - 128K vs. 32K/64K: Pushing the block size to 128K yields no further significant sequential gains and starts to show a more noticeable impact on random/small I/O (up to ~5–10% lower in some cases).

- Implications for a Virtualized Environment:

In an environment where the datastore is built on VMFS (used by ESXi 6.5) and the storage target is served by TrueNAS Scale from an SSD pool:

- The VMFS layer generally benefits from larger block sizes because virtual machines tend to perform large sequential transfers (for booting, VM file operations, and application queries such as those from Log Insight Manager).

- For Windows servers and workstations (which make up the majority of the environment), boot times and general OS performance rely on random I/O. However, the drop from 16K to 32K in random IOPS is minimal (≈1%), meaning the overall benefit in sequential performance outweighs the minor penalty on boot performance.

- Specifically for VMware appliances and Log Insight Manager queries—which are among the most frequently queried applications—a 32K block size appears to be optimal. It delivers approximately 30% higher sequential throughput compared to a 16K setting while only incurring a ~1% drop in the most sensitive random I/O metrics.

Final Recommendation:

Based on the combined results and the comprehensive analysis:

- For environments dominated by ESXi with VMFS datastores (and Windows servers/workstations):

A 32K volblocksize is recommended.

- It maximizes sequential I/O performance (with up to a 30% gain over 16K), which benefits large file transfers and query-intensive workloads (like those for Log Insight Manager).

- The trade-off in random/small I/O performance is minimal—on the order of only about 1% compared to a 16K setting—so the overall system performance is optimized for the mixed workload demands.

This 32K configuration strikes the best balance between boosting sequential throughput and maintaining high random I/O performance, which is critical for both fast VM operations and efficient application querying in a virtualized storage environment.
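The 16K-to-32K figures in the summary (~30% more throughput, ~1% fewer random IOPS) can be recomputed directly from the table. This small sketch does that for a few columns picked for illustration, which makes it easy to see how much the headline number depends on which workload you look at.

```bash
#!/usr/bin/env bash
# Recompute relative changes between two volblocksize rows of the table.
# Each row: metric_label value_at_A value_at_B (values copied from the table).
set -euo pipefail

# Percent change from $1 to $2, one decimal place.
pct() {
  awk -v a="$1" -v b="$2" 'BEGIN { printf "%+.1f%%\n", (b - a) / a * 100 }'
}

echo "16K -> 32K"
while read -r label a b; do
  printf "  %-30s %s\n" "$label" "$(pct "$a" "$b")"
done <<'EOF'
Sec_Onion_Ingest_write_MiB/s 841 1091
Sec_Onion_Search_read_kIOPS 379 375
Elastiflow_write_MiB/s 850 841
General_IO_write_MiB/s 735 1024
EOF

echo "32K -> 64K"
printf "  %-30s %s\n" "Sec_Onion_Search_read_kIOPS" "$(pct 375 363)"
```

For these columns, 16K to 32K gives +29.7% (Security_Onion_Ingest writes), -1.1% (Security_Onion_Search IOPS), -1.1% (Elastiflow writes) and +39.3% (General_IO writes); 32K to 64K gives -3.2% for Security_Onion_Search.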

Review of Volume Block Size Performance Analysis

1. Summary Review

The summary provided captures the essence of the performance tests well. It highlights key trends, particularly the increase in sequential throughput with larger block sizes and the trade-offs with random I/O performance. The data aggregation appears accurate, and the conclusions drawn seem valid based on the reported metrics.

2. Data Analysis Review

After reviewing the test results, the analysis accurately reflects the impact of different volblocksize settings. The IOPS and bandwidth trends align with expected behaviors, where smaller block sizes yield higher IOPS but lower throughput, while larger block sizes favor sequential workloads.

Key observations:

- Sequential I/O (General_IO, Security_Onion_Ingest, Exchange, VMware_Appliances, etc.):
  - Shows significant gains as volblocksize increases (e.g., 521 MiB/s at 4K vs. 1024 MiB/s at 32K for General_IO).
  - The optimal balance appears at 32K, where sequential gains remain high but random I/O degradation is minimal.
  - 64K and 128K show diminishing returns beyond the 32K gains, with only marginal improvements in sequential writes.

- Random I/O (Security_Onion_Search, Trellix, File_Server, etc.):
  - Small block sizes perform best for purely random workloads (e.g., Security_Onion_Search peaks at ~398k IOPS at 8K, with ~378k at 4K).
  - The 16K vs. 32K trade-off is minimal (~1% decrease in random IOPS for a ~30% sequential write gain in Security_Onion_Ingest).
  - 64K and 128K start impacting random workloads (e.g., 5–10% drops in random IOPS).

- VMFS/Virtualized Workloads (VMware_Appliances, Mixed_VMs, File_Server):
  - VMFS typically benefits from larger block sizes.
  - The 32K block size recommendation is justified, balancing large-transfer performance with minimal random IOPS loss.

3. IO Parameters Selection Review

The FIO test parameters match well with the observed workload patterns:

- General_IO (4K reads, 128K writes, randrw, rwmixread=50) → Good mix for generalized workloads.

- Exchange (8K, randrw, rwmixread=70) → 8K is appropriate, considering Exchange Server’s real-world I/O patterns.

- Security_Onion_Ingest (128K, write-heavy) → Large block sizes align with log ingestion needs.

- Security_Onion_Search (4K, randread) → 4K matches small block search queries.

- VMware_Appliances (4K reads, 32K writes, randrw, rwmixread=70) → 32K recommendation is justified.

Final Conclusion

- The analysis is accurate, and the recommendation of 32K volblocksize for a mixed workload environment is valid based on the data trends.

- The selected IO parameters align well with application behavior.

- Only minor considerations remain, such as testing 64K further for VMware environments with predominantly large sequential workloads.

My Analysis:

This matches what I’ve read over the years on the TrueNAS forums: workload-centric volblocksize (VBS) tuning usually only changes results by a few percent. Common knowledge: small block sizes favor IOPS; large block sizes favor sequential access and higher throughput.

Anyone see any glaring oversights or issues? Is it even interesting?

Unless the criteria are completely off, I’ll move on to testing the transports the same way, using an ESXi 6.5 initiator over iSER and Fibre Channel.