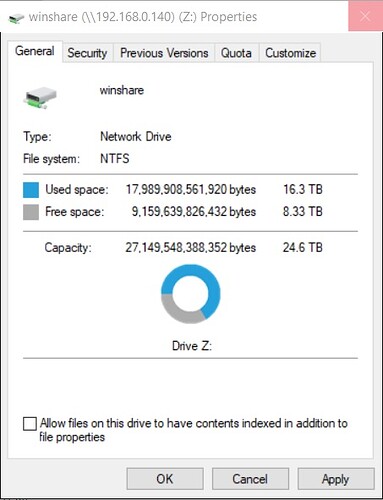

So I set up a pool of 4 vdevs, each a 3-drive RAIDZ1. The drives are all 2 TB. My understanding is that in a 3-drive RAIDZ1 vdev, one drive's worth of space goes to parity. Doing the math, I should have 16 TB available. Yet I seem to have 24 TB available, showing not only in the GUI but also on a mapped Windows drive. What am I not understanding here? This week I loaded the NAS with over 22 TB, and other than the 80 and 90 percent warnings, nothing alarmed or failed. Shouldn't I be at 16 TB?

ZFS just used magic to grant you an extra 8 TiB for free! You should be thankful. How dare you call into question its validity and accuracy!

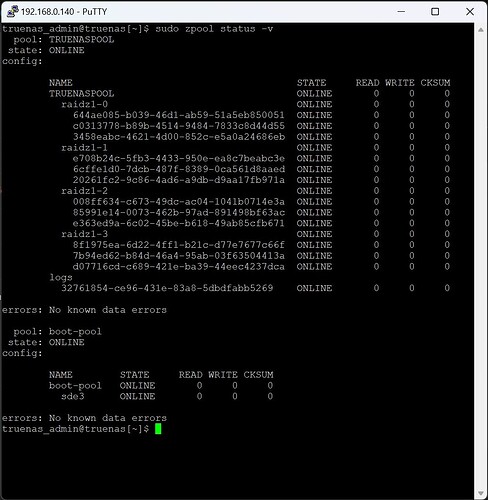

Output of `zpool status`, please.

LZ4 is getting better these days, it seems.

No, it’s the new ultra-compressed ZZ99000 algorithm. Of course, it takes a day per file to compress it.

Skinnier bits.

(Actually, the bits become fatter after expansion, so fewer regular-sized bits are needed for the same file.)

I recognize a fellow alumnus of the Academy of ZFS Mathology.

Plot twist: The OP never used RAIDZ expansion. This was their first-time creation and setup of a pool with four vdevs.

I see everyone is having a little fun. But to be totally honest, I have been working with TrueNAS for 1.5 weeks, so it's fair to say I possess very little knowledge, except for the fact that I should not be getting 24+ TB out of this pool. When everyone is done having fun with the magic bits, I would appreciate it if someone could explain what's going on. Sorry for not having a sense of humor tonight; it just wasn't a great day, and I'm only trying to learn something. Yes, I have been using the search function here and elsewhere.

In TrueNAS, a pool’s usable space depends on the configuration of the vdevs and RAID level. For your configuration with:

- 4 vdevs

- Each vdev containing 3 x 2TB hard drives

- Using RAID-Z1 (which offers single-drive failure protection)

Here’s how you calculate the usable space:

Step-by-step calculation:

- RAID-Z1 capacity: In RAID-Z1, one drive’s worth of space is used for parity in each vdev. So, in a vdev with 3 drives, 2 drives contribute usable space while 1 drive is used for parity.

- Each vdev’s usable capacity: $2\ \text{drives} \times 2\ \text{TB} = 4\ \text{TB}$.

- Total usable space: Since you have 4 vdevs, the total usable space is:

- $4\ \text{vdevs} \times 4\ \text{TB per vdev} = 16\ \text{TB usable space}$.
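For anyone who wants to redo this arithmetic for other layouts, here is a minimal Python sketch. It assumes identical drives and identical vdev widths, and like the estimate here it ignores metadata, slop space, and allocation padding. The function names are made up for illustration. It prints both decimal TB (what drive labels use) and binary TiB (the 1024-based units the ZFS command-line tools print), since mixing the two is an easy source of confusion.

```python
# Minimal sketch: capacity of a pool striped across identical RAIDZ vdevs.
# Assumes every vdev has the same width and drive size; ignores ZFS metadata,
# slop space, and allocation padding (i.e. a raw estimate only).

TB = 10**12   # decimal terabyte, as used on drive labels
TiB = 2**40   # binary tebibyte, as printed by the ZFS CLI ("T")

def usable_bytes(vdevs: int, drives_per_vdev: int, drive_bytes: int, parity: int) -> int:
    """Data capacity after setting aside `parity` drives' worth of space per vdev."""
    if drives_per_vdev <= parity:
        raise ValueError("each vdev needs more drives than parity devices")
    return vdevs * (drives_per_vdev - parity) * drive_bytes

def raw_bytes(vdevs: int, drives_per_vdev: int, drive_bytes: int) -> int:
    """Total capacity of all member drives, parity included."""
    return vdevs * drives_per_vdev * drive_bytes

if __name__ == "__main__":
    usable = usable_bytes(vdevs=4, drives_per_vdev=3, drive_bytes=2 * TB, parity=1)
    raw = raw_bytes(vdevs=4, drives_per_vdev=3, drive_bytes=2 * TB)
    print(f"usable: {usable / TB:.1f} TB = {usable / TiB:.2f} TiB")
    print(f"raw:    {raw / TB:.1f} TB = {raw / TiB:.2f} TiB")
```

With the OP's layout (4 vdevs × 3 × 2 TB, RAIDZ1), this prints 16.0 TB = 14.55 TiB usable and 24.0 TB = 21.83 TiB raw.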

Important Considerations:

- This is a raw estimate. Actual usable space will be slightly lower due to ZFS overhead (metadata, checksums, etc.), but this gives a good approximation.

- Parity provides protection against a single drive failure per vdev.

So, the estimated usable space is approximately 16 TB.

We’re still trying to figure it out ourselves. Believe me. You’re not alone.

The humor in this illustrates why supposed “technical” explanations (or “excuses”) are not helpful to end-users.

The “ZFS magic math” is a meme in and of itself. It’s frustrating and confusing to the user, and I share your frustration and confusion. It’s not acceptable for NAS users.

See this thread, which already has 75 replies. It goes to show you how nonsensical this is. End-users are left to scratch their head and wonder “how much space do I really have?”

For the record: I side with the users, not the developers, when it comes to these issues. Even if something “works”, it has to make sense to the person actually using it. ZFS “capacity” is still not intuitive when it comes to RAIDZ and RAIDZ expansion. (Case in point: your opening post.)

Can you actually fit 24 TiB worth of data in your pool of four vdevs, which should yield 16 TiB? Who knows! It certainly acts like you can… but you know it’s lying, since the math doesn’t add up.

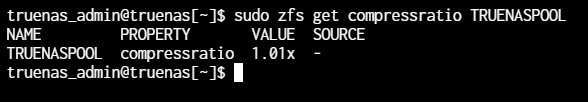

So, is it the compression ratio this time?

Unfortunately, the compression ratio is no longer visible in the UI (another regression relative to CORE).

So the best thing is to check it manually.

Try running `zfs get compressratio YOUR_POOL_NAME`.
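If you'd rather script the check than eyeball it, a small Python sketch like this wraps the same `zfs get` command. It assumes `zfs` is on the PATH and that the value comes back in the usual `1.00x` form; `get_compressratio` and `parse_compressratio` are hypothetical helper names, not part of any TrueNAS API.

```python
# Hypothetical helpers: read a pool's compressratio via the zfs CLI.
# Assumes `zfs` is on PATH and the value is printed like "1.00x".
import subprocess

def parse_compressratio(value: str) -> float:
    """Turn a compressratio string such as '2.00x' (or '2.00') into a float."""
    value = value.strip()
    if value.endswith("x"):
        value = value[:-1]
    return float(value)

def get_compressratio(pool: str) -> float:
    """Run `zfs get` for one pool and return its compression ratio."""
    # -H: scripted mode (no header, tab-separated); -o value: only the value column
    out = subprocess.run(
        ["zfs", "get", "-H", "-o", "value", "compressratio", pool],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_compressratio(out)
```

For example, `get_compressratio("TRUENASPOOL")` would return `1.0` for a pool whose data is not compressing at all.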

A 2x compression ratio is pretty good.

If it is the compression ratio, capacity equals “free space + bytes stored” heh.

And the bytes stored can be compressed… so using less space… ergo more free space…
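To make that reasoning concrete, here is a toy Python model. It assumes, purely for illustration, that “capacity” is shown as free physical space plus logical (uncompressed) bytes stored; whether the TrueNAS UI actually computes it that way is an assumption, and the figures are invented rather than taken from this pool.

```python
# Toy model: if "capacity" were shown as free physical space plus the
# logical (uncompressed) bytes stored, compression would inflate it.
# All figures are made-up illustrations, not measurements from this pool.

def apparent_capacity(physical_capacity: float, logical_stored: float, ratio: float) -> float:
    """Free space plus logical bytes stored, for a given compression ratio."""
    physical_used = logical_stored / ratio       # space the data really occupies
    free = physical_capacity - physical_used     # remaining physical space
    if free < 0:
        raise ValueError("data does not fit even after compression")
    return free + logical_stored

if __name__ == "__main__":
    for r in (1.0, 1.5, 2.0):
        print(f"ratio {r}: apparent capacity {apparent_capacity(16.0, 10.0, r):.2f}")
```

With 16 units of physical space and 10 units of data, the figure stays at 16 at a 1.0x ratio but reads 21 at 2.0x: the same data makes the pool look bigger.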

Output of `lsblk`, please?

Here is my compression test. I ran this same server with the same disks on FreeNAS for several years, and I’m pretty sure I only got 14–16 TB of disk space. I’m going to reinstall TrueNAS. This is, after all, a lab system, so I had always anticipated multiple installs. I’m pretty surprised that TrueNAS, being a mature system, would have this level of mystery around such a basic function. I’ll report back on my reinstall(s). Thanks.

It’s more likely the fault of ZFS.

The following commands will help shed some light:

`zfs --version`

`zpool list TRUENASPOOL`

`zfs list -o space TRUENASPOOL`
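The `zpool list` and `zfs list` numbers can also be pulled side by side for comparison. This Python sketch assumes the standard `-H` (no header, tab-separated) and `-p` (exact byte values) flags; the helper names are hypothetical. As I understand it, `zpool list` SIZE for RAIDZ vdevs counts raw space including parity, while `zfs list` USED + AVAIL is what the datasets see, so the two are not expected to match.

```python
# Sketch: collect what zpool and zfs each report for one pool, in exact bytes.
# Assumes the zpool/zfs CLIs are on PATH. For RAIDZ, zpool's SIZE is raw
# space (parity included); zfs USED + AVAIL is space as datasets see it.
import subprocess

def parse_row(output: str) -> list[int]:
    """Parse the first tab-separated line of -Hp output into integers."""
    first_line = output.strip().splitlines()[0]
    return [int(field) for field in first_line.split("\t")]

def run_scripted(cmd: list[str]) -> list[int]:
    """Run a -Hp (scripted, exact-bytes) command and return its row as ints."""
    out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    return parse_row(out)

def report(pool: str) -> dict[str, int]:
    size, allocated, free = run_scripted(
        ["zpool", "list", "-Hp", "-o", "size,allocated,free", pool])
    used, available = run_scripted(
        ["zfs", "list", "-Hp", "-o", "used,available", pool])
    return {
        "zpool_size": size, "zpool_allocated": allocated, "zpool_free": free,
        "zfs_used": used, "zfs_available": available,
        "zfs_total": used + available,
    }
```

Calling `report("TRUENASPOOL")` on the NAS would give both views in one dictionary, which makes it easier to tell a raw-versus-usable mismatch from something stranger.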

Let’s keep this in one topic:

Reopened, as this seems to be a “related but different” issue.

There was no expansion here, yet the size ZFS reports is wildly off the mark.

Is it the “128k recordsize” assumption, or another thing entirely?
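For context on the 128k question: as I understand it, ZFS converts raw RAIDZ space into reported space using the allocation cost of a 128 KiB block, and smaller blocks can cost proportionally more because of parity and padding. The Python sketch models per-block allocation on my reading of OpenZFS's `vdev_raidz_asize()`; it assumes `ashift=12` (4 KiB sectors) and is an illustration, not the actual kernel code.

```python
# Sketch of RAIDZ allocation size for one block, modeled on (my reading of)
# OpenZFS's vdev_raidz_asize(). Assumes ashift=12 (4 KiB sectors) by default.

def raidz_asize(psize: int, width: int, nparity: int, ashift: int = 12) -> int:
    """Bytes allocated on a RAIDZ vdev for a block of `psize` bytes."""
    sector = 1 << ashift
    data = (psize + sector - 1) // sector                  # data sectors, rounded up
    per_row = width - nparity                              # data sectors per stripe row
    parity = nparity * ((data + per_row - 1) // per_row)   # parity for each row
    total = data + parity
    total = -(-total // (nparity + 1)) * (nparity + 1)     # pad to multiple of nparity+1
    return total * sector

def efficiency(psize: int, width: int, nparity: int, ashift: int = 12) -> float:
    """Fraction of the allocated space that holds data."""
    return psize / raidz_asize(psize, width, nparity, ashift)

if __name__ == "__main__":
    # 3-wide RAIDZ1, as in the OP's vdevs
    for kib in (4, 8, 16, 128):
        print(f"{kib:>4} KiB block: {efficiency(kib * 1024, width=3, nparity=1):.3f}")
```

For a 3-wide RAIDZ1, a 128 KiB block comes out at 0.667 (two-thirds, the same ratio as 16 TB out of 24 TB raw), while 4 KiB and 8 KiB blocks come out at 0.500.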

So, getting back on topic…

The issue appears NOT to be caused by a fabulous compression ratio.