You can check if there’s block-cloning happening.

Although, that should only affect the zfs command and “dataset” readouts. The pool itself (seen with zpool) should remain at a steady 16 TiB total capacity. (Unless there’s more ZFS magic going on.)

Previously, your zpool command was reporting “raw” capacity at ~24 TiB (rounding up to make this easier), rather than “usable” capacity at ~16 TiB (also rounding).[1]

This might even be a difference between OpenZFS 2.2 and 2.3, in which the earlier version accurately reports the pool’s total (and usable) capacity.[2]

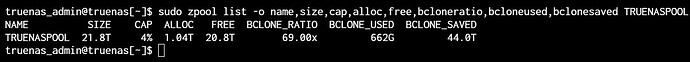

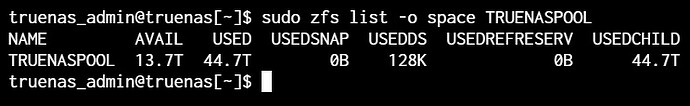

This will be interesting to see:

```
zpool list -o name,size,cap,alloc,free,bcloneratio,bcloneused,bclonesaved TRUENASPOOL

zfs list -o space TRUENASPOOL
```

TL;DR

The zpool output for “capacity” should remain at a stable amount. This is your total usable capacity. If zfs (or the GUI), or even user tools, report a larger-than-expected size, it is because they are unaware of the deduplication, snapshots, and block-cloning that happen outside of their purview. This is especially true when such space savings happen across datasets.

If the pool’s total capacity is changing, based on how much data is being written, then that’s just silly ZFS math.

Think about it like this: If you have a storage bucket of 1 TiB with only 5% free space remaining, and every time you make a copy of a 128-GiB file it uses “block-cloning” to consume zero extra space, you would expect that the pool’s total capacity remains at 1 TiB with 5% free space remaining.

With each subsequent “zero-consumption” copy, it wouldn’t make sense for the storage bucket’s total capacity (or even free space) to keep changing. Sure, the filesystems (“datasets”) might report larger sizes, as will the filesize properties of each “copied” file. But in reality, zero extra usable capacity has been used up. Your storage bucket is, and always was, 1 TiB of total usable capacity for all intents and purposes, whether dedup, block-cloning, or snapshots are involved.
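To put rough numbers on the bucket analogy, here is a minimal Python sketch. It is only a toy model, not how ZFS actually does its accounting: the `ToyPool` class, its field names, and the `simulate_copies` helper are all made up for illustration. It also assumes a block-cloned copy costs exactly zero space, ignoring any metadata overhead.

```python
from dataclasses import dataclass


@dataclass
class ToyPool:
    """Hypothetical toy model of a pool; NOT how ZFS accounts for space internally."""
    size_gib: int          # total usable capacity; fixed for the life of the pool
    alloc_gib: int = 0     # physical space actually consumed
    logical_gib: int = 0   # sum of file sizes as datasets and files would report them

    @property
    def free_gib(self) -> int:
        return self.size_gib - self.alloc_gib

    def write(self, gib: int) -> None:
        """Ordinary write: consumes physical space and grows the logical size."""
        if gib > self.free_gib:
            raise ValueError("not enough free space")
        self.alloc_gib += gib
        self.logical_gib += gib

    def clone(self, gib: int) -> None:
        """Block-cloned copy: logical size grows, physical allocation does not.

        Simplification (assumption): metadata overhead is ignored.
        """
        self.logical_gib += gib


def simulate_copies(copies: int) -> ToyPool:
    """Fill a 1 TiB bucket to roughly 95%, then clone a 128-GiB file `copies` times."""
    pool = ToyPool(size_gib=1024)   # the 1 TiB storage bucket
    pool.write(972)                 # existing data, including the 128-GiB file
    for _ in range(copies):
        pool.clone(128)             # each "zero-consumption" copy
    return pool


pool = simulate_copies(10)
print(f"size={pool.size_gib} GiB  alloc={pool.alloc_gib} GiB  free={pool.free_gib} GiB")
print(f"logical (what datasets/files report)={pool.logical_gib} GiB")
```

After ten copies, the size, allocated, and free figures are the same as before the first copy. Only the logical total grows, and that logical total is the number the datasets and file sizes end up showing.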

[1] By all means, you should expect the pool to offer 16 TiB total usable capacity, regardless of any space-saving techniques. If a dataset reports it is consuming 1,000 TiB (because you went crazy with block-cloning!), the pool’s total capacity should still remain at 16 TiB. This is because the true consumption is much, much lower than 1,000 TiB, even if that is what the dataset and files are reporting.

[2] We still don’t know, since we missed our shot at a more “controlled” test. Too many variables and usages are changing between each test.