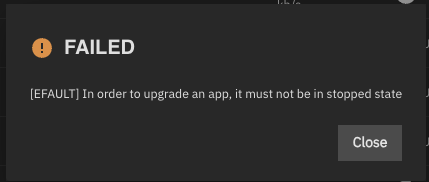

I don’t understand why an app must be running in order to be updated. This is particularly curious because, for the update, it’s stopped, then updated, and then redeployed. Why can’t the stop and redeploy steps be skipped and an app at rest be updated?

Probably because you don’t really update them; they get “replaced” by the newer version.

Well, that explains why they need to be shut down before updating/replacement, but it doesn’t explain why they need to be running first. If an app is stopped, it can’t be updated/replaced, it must be started first. But then the first thing the update process does is to stop it again. So why not let users update stopped apps?

I don’t really know myself, and my googling did not turn up any meaningful answers, but my guess is that Docker can only check whether an update is available if the container is running.

Well, at least the TrueNAS UI shows that there’s an update for the app, but for it to be updated, it needs to be started first. That’s what I find odd. If updates were only visible for running apps, then I’d get it.

Welp, now I’m confused, but I’m glad I don’t have to fight with the TrueNAS UI, because I simply don’t use it…

Simple, just an IX decision to save repository bandwidth.

(If it is not running, no need to update it).

No, docker doesn’t work that way. Apps in Dockge and Portainer don’t have this problem, it’s just something peculiar to the awful way that iX implemented these apps. I recommend not using them. Just install Dockge and install all your apps within that using docker-compose scripts, so you don’t have all the problems inherent with these poorly-managed community apps.

You don’t have to tell me not to use them. The only 2 apps I use from the apps catalogue are webnut and scrutiny; the rest of my 30 Docker apps run inside an LXC managed by Portainer. They’re set to auto-update via Watchtower, but even Watchtower doesn’t monitor stopped apps by default; you have to enable that via an environment variable.
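For anyone wondering which variable that is: as far as I know it is `WATCHTOWER_INCLUDE_STOPPED`. Here is a rough Python sketch that only assembles the `docker run` command line for it. The container name `watchtower` and the helper name `watchtower_command` are made up for illustration, and nothing is executed.

```python
def watchtower_command(include_stopped: bool = True) -> list[str]:
    """Build (but do not run) a `docker run` command line for Watchtower."""
    cmd = [
        "docker", "run", "-d",
        "--name", "watchtower",  # illustrative container name
        # Watchtower talks to the Docker daemon through its socket.
        "-v", "/var/run/docker.sock:/var/run/docker.sock",
    ]
    if include_stopped:
        # Without this, Watchtower only looks at running containers.
        cmd += ["-e", "WATCHTOWER_INCLUDE_STOPPED=true"]
    cmd.append("containrrr/watchtower")
    return cmd


if __name__ == "__main__":
    print(" ".join(watchtower_command()))
```

The same variable can go into the `environment:` section of a compose file or a Portainer stack instead.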

I’d like to know this answer as well. I have to start a stopped app just to update it and then I stop it again. Some apps I do not run all the time, especially if I only need them once every few weeks for a few hours.

But we may never know “Why?” this decision was made.

Also, most of my updates are to the container, not the actual application, and maybe it is for security updates, I don’t know. With that said, I only update mine as I see fit. If it is working, don’t break it.

My memory from the last time I asked a dev about this is that it has to do with a concern about data integrity. As far as I understand it, their concern is that it’s less disruptive to stop and restart a running app (and then let it stay running after) than to allow docker to start a stopped app as part of the upgrade process and then immediately stop it again. The idea being that starting and then quickly stopping the app would increase the risk that data might be in an unsafe state as part of a startup process, like if the app was already touching data but hadn’t had time to attach signal listeners yet.

Is that what a dev said?

You don’t need to start a container to update its image.

Does not need to be stopped either: You simply download the new container image and use it when the container starts next time (which implies stopping the container before starting it again).
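A minimal sketch of those two steps, assuming a compose-managed app. The image name `example/app:latest` is a placeholder and the helper names are mine. Note that an already-created container keeps pointing at the old image until it is recreated, which is why the second step is `docker compose up -d` rather than a plain `docker start`.

```python
import subprocess


def _run(cmd: list[str], dry_run: bool) -> list[str]:
    """Print the command and execute it only when dry_run is False."""
    print("+", " ".join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd


def stage_new_image(image: str, dry_run: bool = True) -> list[str]:
    """Download the newer image; no container is started or stopped."""
    return _run(["docker", "pull", image], dry_run)


def recreate_on_next_start(dry_run: bool = True) -> list[str]:
    """Later, when the user wants the app: recreate it from the new image."""
    return _run(["docker", "compose", "up", "-d"], dry_run)


if __name__ == "__main__":
    stage_new_image("example/app:latest")  # placeholder image name
    recreate_on_next_start()
```

With `dry_run=True` (the default here) the commands are only printed, so this is safe to try on a machine without Docker.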

In other words, iX could download updated images for apps not running, but…

Now imagine hundreds, thousands of TN installations with 20 Docker apps running and 50 apps not running. Half were for testing. Half are app clones, backups and other stuff you are not using or plan to turn on.

Why would you want to waste bandwidth pulling the latest images for all those images of not running apps?

So GitHub and the Docker repositories start to impose stricter download quotas??
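To put a rough number on that example (20 running, 50 stopped, one pull per app per update cycle, which is my simplifying assumption):

```python
def stopped_share(running: int, stopped: int) -> float:
    """Fraction of image pulls that would go to apps that are not running."""
    return stopped / (running + stopped)


if __name__ == "__main__":
    share = stopped_share(running=20, stopped=50)
    print(f"{share:.0%} of pulls would be for stopped apps")
```

That works out to about 71% of the pulls on such a system going to apps nobody is running.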

If I may say, a stopped app should remain stopped; what happens at startup normally depends on the app itself, AFAIK.

But at the same time, I can understand that “stopped” can mean a lot of things, not only “the user has stopped the app”… maybe requiring the running state is a compromise to be sure that the process will end gracefully? And those points of inconsistency are mostly related to the “internal app” versioning, or am I wrong?

Just asking to understand something that has always looked very unusual to me, too.

So this sounds like you’re thinking of a different upgrade process for stopped apps vs. running apps. The way the current upgrade script works it always runs the equivalent of docker compose up after pulling the updated image and then performs health checks.

You’re asking to include separate logic so that if an app is stopped it still pulls the updated images and stages them but does not start the app until the next time it’s done manually. It seems like something like that could be included as an optional service configuration. A Feature Request for that option might be a good idea to gauge interest.
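To make the difference concrete, here is a small sketch of the decision logic. This is not the actual TrueNAS upgrade script; the `App` class, the `stage_if_stopped` option, and the step descriptions are all hypothetical names I made up to illustrate the idea.

```python
from dataclasses import dataclass


@dataclass
class App:
    name: str
    running: bool


def upgrade_steps(app: App, stage_if_stopped: bool = False) -> list[str]:
    """Return the ordered steps a hypothetical upgrade routine would take."""
    if not app.running and not stage_if_stopped:
        # Behaviour described in this thread: stopped apps are refused.
        raise RuntimeError(f"{app.name} must be running to be upgraded")

    steps = ["pull updated images"]
    if app.running:
        # Current flow: always bring the app up and verify it.
        steps += ["docker compose up", "run health checks"]
    else:
        # Proposed flow: leave the app stopped and remember the pending upgrade.
        steps.append("mark upgrade as staged (not started, not health-checked)")
    return steps


if __name__ == "__main__":
    print(upgrade_steps(App("scrutiny", running=True)))
    print(upgrade_steps(App("webnut", running=False), stage_if_stopped=True))
```

The point of the sketch is that the staged branch skips the health checks, so any failure only surfaces at the next manual start.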

The one concern that pops out to me is that there is the potential for a staged upgrade to contain some kind of conflict that will ultimately fail, but the user won’t find that failure until the next time they manually start the app. So the UI might also need to warn when selecting the Stage upgrades option and have some way of signifying when a container has been updated but not started yet. I’m not familiar with how/if other Docker management UIs handle that.
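One way a UI could detect the “updated but not started yet” state, sketched in Python: compare the image ID the existing container was created from with the image ID the tag currently points to locally. I’m not claiming any existing UI does it this way, and the function names are mine; the two `docker inspect` calls are only wrapped here, with the comparison kept separate so it can be tried without Docker.

```python
import subprocess


def _inspect(args: list[str]) -> str:
    """Run a docker inspect command and return its trimmed output."""
    result = subprocess.run(args, check=True, capture_output=True, text=True)
    return result.stdout.strip()


def container_image_id(container: str) -> str:
    """Image ID the existing container was created from."""
    return _inspect(["docker", "inspect", "--format", "{{.Image}}", container])


def local_tag_image_id(image: str) -> str:
    """Image ID the tag currently resolves to in the local image store."""
    return _inspect(["docker", "image", "inspect", "--format", "{{.Id}}", image])


def is_staged(created_from: str, latest_local: str) -> bool:
    """True when a newer image was pulled but the container still uses the old one."""
    return created_from != latest_local
```

A UI could show a “pending upgrade” badge whenever `is_staged(container_image_id(name), local_tag_image_id(image))` is true.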