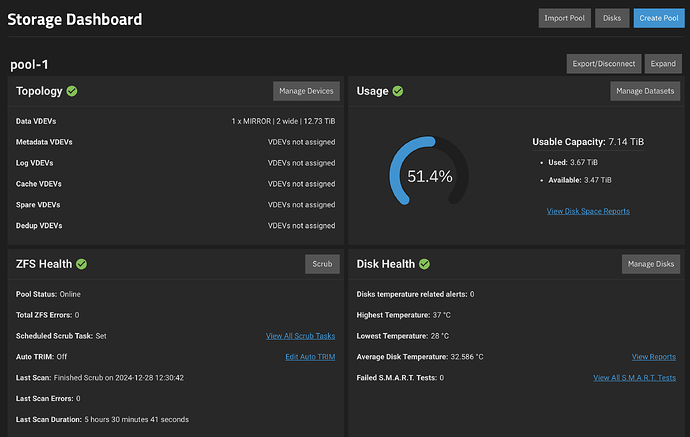

I have a TrueNAS Mini X+ running TrueNAS-SCALE-24.04.2.3. The NAS contains two 14TB (12.73TiB) WD Red Pro drives set up as a 2-way mirror. Per the screenshot, TrueNAS recognizes the pool as a 2-way mirror of 12.73TiB drives but reports only 7.14TiB as “usable capacity”, which is about 56% of the capacity I expected. Can anyone explain where the remaining space went? Below is the output of the zfs list command, which shows the same figures (pool-1: 3.67T used plus 3.47T available).

| NAME | USED | AVAIL | REFER | MOUNTPOINT |
| --- | --- | --- | --- | --- |
| boot-pool | 10.5G | 199G | 24K | none |
| boot-pool/ROOT | 10.4G | 199G | 24K | none |
| boot-pool/ROOT/13.0-U5.3 | 188K | 199G | 1.29G | / |
| boot-pool/ROOT/13.0-U6.1 | 3.88G | 199G | 1.29G | / |
| boot-pool/ROOT/23.10.1 | 2.15G | 199G | 2.15G | legacy |
| boot-pool/ROOT/23.10.2 | 2.16G | 199G | 2.16G | legacy |
| boot-pool/ROOT/24.04.2.3 | 2.20G | 199G | 164M | legacy |
| boot-pool/ROOT/24.04.2.3/audit | 613K | 199G | 613K | /audit |
| boot-pool/ROOT/24.04.2.3/conf | 42.5K | 199G | 42.5K | /conf |
| boot-pool/ROOT/24.04.2.3/data | 7.39M | 199G | 7.39M | /data |
| boot-pool/ROOT/24.04.2.3/etc | 3.46M | 199G | 3.02M | /etc |
| boot-pool/ROOT/24.04.2.3/home | 27K | 199G | 27K | /home |
| boot-pool/ROOT/24.04.2.3/mnt | 24K | 199G | 24K | /mnt |
| boot-pool/ROOT/24.04.2.3/opt | 72.0M | 199G | 72.0M | /opt |
| boot-pool/ROOT/24.04.2.3/root | 42K | 199G | 42K | /root |
| boot-pool/ROOT/24.04.2.3/usr | 1.89G | 199G | 1.89G | /usr |
| boot-pool/ROOT/24.04.2.3/var | 76.1M | 199G | 19.4M | /var |
| boot-pool/ROOT/24.04.2.3/var/ca-certificates | 24K | 199G | 24K | /var/local/ca-certificates |
| boot-pool/ROOT/24.04.2.3/var/log | 56.2M | 199G | 56.2M | /var/log |
| boot-pool/ROOT/Initial-Install | 1K | 199G | 1.29G | legacy |
| boot-pool/ROOT/default | 202K | 199G | 1.29G | legacy |
| boot-pool/grub | 1.91M | 199G | 1.91M | legacy |
| pool-1 | 3.67T | 3.47T | 112K | /mnt/pool-1 |
| pool-1/.system | 1.52G | 3.47T | 1.23G | legacy |
| pool-1/.system/configs-2c41d412711f430381c6bee374b3106b | 28.6M | 3.47T | 28.6M | legacy |
| pool-1/.system/cores | 96K | 1024M | 96K | legacy |
| pool-1/.system/ctdb_shared_vol | 96K | 3.47T | 96K | legacy |
| pool-1/.system/glusterd | 104K | 3.47T | 104K | legacy |
| pool-1/.system/netdata-2c41d412711f430381c6bee374b3106b | 261M | 3.47T | 261M | legacy |
| pool-1/.system/rrd-2c41d412711f430381c6bee374b3106b | 7.61M | 3.47T | 7.61M | legacy |
| pool-1/.system/samba4 | 1.28M | 3.47T | 580K | legacy |
| pool-1/.system/services | 96K | 3.47T | 96K | legacy |
| pool-1/.system/webui | 96K | 3.47T | 96K | legacy |
| pool-1/dataset-1 | 754G | 3.47T | 754G | /mnt/pool-1/dataset-1 |
| pool-1/dataset-2 | 1.25G | 3.47T | 1.25G | /mnt/pool-1/dataset-2 |
| pool-1/dataset-3 | 1.88T | 3.47T | 1.88T | /mnt/pool-1/dataset-3 |
| pool-1/dataset-4 | 592G | 3.47T | 592G | /mnt/pool-1/dataset-4 |
| pool-1/dataset-5 | 485G | 215G | 485G | /mnt/pool-1/dataset-5 |
| pool-1/dataset-6 | 27.4M | 3.47T | 27.4M | /mnt/pool-1/dataset-6 |
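
To double-check the figures quoted above, here is a minimal Python sketch of the arithmetic. It assumes the drive's "14TB" is decimal (10^12 bytes per TB) and that zfs list reports binary units (T = TiB, G = GiB, M = MiB). It uses the rounded values from the output, so the results are approximate, and the variable names are only illustrative.

```python
TB = 10**12   # decimal terabyte, as printed on the drive label (assumption)
TIB = 2**40   # binary tebibyte, assumed to be what zfs list calls "T"

# Size of one 14TB drive expressed in TiB; a 2-way mirror exposes one drive's worth.
drive_tib = 14 * TB / TIB

# pool-1 row from zfs list: USED + AVAIL is what the pool presents as total space.
used_tib, avail_tib = 3.67, 3.47
usable_tib = used_tib + avail_tib

fraction = usable_tib / drive_tib      # share of the drive that shows up as usable
missing_tib = drive_tib - usable_tib   # the space I cannot account for

# Cross-check: USED of the direct children of pool-1, converted to GiB.
children_gib = {
    "pool-1/.system": 1.52,
    "pool-1/dataset-1": 754,
    "pool-1/dataset-2": 1.25,
    "pool-1/dataset-3": 1.88 * 1024,
    "pool-1/dataset-4": 592,
    "pool-1/dataset-5": 485,
    "pool-1/dataset-6": 27.4 / 1024,
}
children_tib = sum(children_gib.values()) / 1024

print(f"one drive:       {drive_tib:.2f} TiB")
print(f"USED + AVAIL:    {usable_tib:.2f} TiB ({fraction:.0%} of one drive)")
print(f"unaccounted for: {missing_tib:.2f} TiB")
print(f"sum of children: {children_tib:.2f} TiB")
```

So the child datasets add up to the 3.67T that pool-1 reports as used, and the gap of roughly 5.59TiB is not attributed to any dataset in this listing.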