Hello, I have been running TrueNAS on an old gaming machine.

I found an X10SDV-6C-TLN4F for $150 on Craigslist, and the guy let me test it for about 20 minutes. It all checked out…

First, I ordered 4x 32GB modules (likely overkill, but whatever) of the recommended RAM from the Supermicro website. They are rated for 2933 MT/s, but my BIOS shows them as 2133 MT/s. I have fiddled with the settings to the point where I had to reset the CMOS… Does anyone have any info about that?

Next, I have a CoolJag BufB on the way… it's the same heatsink, except full copper. Between cleaning, applying PTM7958 (not 7950), and the new full-copper heatsink, I hope to get better temps… I also got an ultra-low-noise fan for it: a SilverStone FTF5010, rated under 20 dBA.

The chassis is a 2U PlinkUSA IPC-2026-BK that I got open-box for a good deal on eBay. I thought it was super cool at first, but I increasingly don't like it… maybe if I find a way to mount 3x 3.5" HDDs with anti-vibration or noise dampening, I'll be happier. The chassis came with 4x 80mm fans that were not so quiet, so I replaced them with 4x Noctua NF-A8 ULN. These actually move ever so slightly more air (20.8 CFM), but instead of over 35 dB they are under 15. It's also the fan I installed in the Evercool mount. Noise is an issue because of my living situation; otherwise, I wouldn't care about noise in a closet. So don't hate on me for the choice, but let me know if I need extra cooling anywhere. Thank you!
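For anyone weighing the same fan swap, here is a rough sketch of how four fans' rated noise adds up. It assumes the fans behave as independent (incoherent) sources of equal level and uses the rough per-fan figures above (35 dB stock, 15 dB ULN). It ignores distance, the chassis, and the closet, so treat the results as ballpark numbers only.

```python
import math


def combine_db(levels):
    """Sum incoherent noise sources on an energy basis:
    L_total = 10 * log10(sum(10 ** (L / 10)))."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels))


# Stock fans were *over* 35 dB each, so this total is a lower bound.
stock = combine_db([35.0] * 4)
# ULN fans are *under* 15 dB each, so this total is an upper bound.
uln = combine_db([15.0] * 4)

print(f"4 stock fans: ~{stock:.1f} dB(A)")
print(f"4 ULN fans:   ~{uln:.1f} dB(A)")
```

Four equal sources add about 10·log10(4) ≈ 6 dB over a single fan. The swap should therefore still be a drop of roughly 20 dB overall, assuming the rated figures hold up in the chassis.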

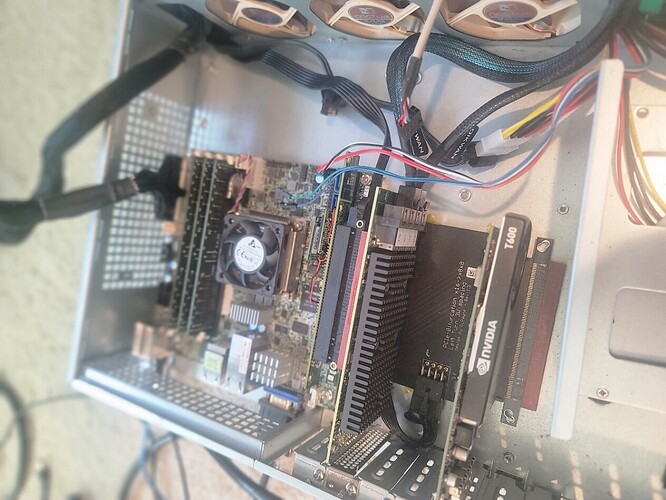

I just wanted to share my current state of affairs, because I think it's kind of cool: I'm using a double-reverse PCIe extension riser and an x16 to x8x8 adapter so I can run 2 PCIe cards.

Easily enough, I set the BIOS to x8x8 and it works without a hitch. Next, I have an M.2 to PCIe 3.0 x4 adapter/riser/extension, whatever you call it… and I plan to use a 280GB 905P in it.
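To put rough numbers on the lane split, here is a sketch of the theoretical one-direction bandwidth each link gets. It assumes PCIe 3.0 at 8 GT/s per lane, for both the x16 slot and the M.2 link, and counts only 128b/130b encoding overhead. Real-world throughput will be somewhat lower because of packet overhead. The link labels are just this build's layout.

```python
# Theoretical one-direction PCIe 3.0 bandwidth per link.
# Assumption: 8 GT/s per lane with 128b/130b encoding; packet/protocol
# overhead is ignored, so real throughput will be lower.
GT_PER_S = 8e9          # PCIe 3.0 signalling rate per lane (transfers/s)
ENCODING = 128 / 130    # 128b/130b line-encoding efficiency


def pcie3_gbytes_per_s(lanes: int) -> float:
    """Approximate usable GB/s (10^9 bytes/s) for a PCIe 3.0 link of `lanes` lanes."""
    bits_per_s = GT_PER_S * ENCODING * lanes
    return bits_per_s / 8 / 1e9


links = {
    "x16 slot, unsplit": 16,
    "each card after x8x8 bifurcation": 8,
    "M.2 -> PCIe x4 adapter (905P)": 4,
}

for name, lanes in links.items():
    print(f"{name}: ~{pcie3_gbytes_per_s(lanes):.2f} GB/s")
```

Splitting x16 into x8x8 halves each card's theoretical ceiling, to just under 7.9 GB/s per direction.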

I am still working out what to do about the drive mounts. The stock mounting is a single 3x 3.5" stack below the motherboard (visible in the photo), where the drives basically touch each other. Then there's the space between my PSU and the 2x 5.25" bay, which is what I got the Evercool adapter for. I figure I'll put 3x HDDs in front of the Evercool fan and the other 3x HDDs in front of the fan south of the motherboard. Then I can put 6x SATA SSDs between the PSU and the Evercool adapter.

I was going to grab 6x 1TB Samsung 870 Evo drives on eBay, listed as new for like 70 dollars… from China… Is this legit?

I want SATA SSDs with DRAM cache… there doesn't seem to be a big selection… and I've read enough about errors with the MX500 that I would rather avoid it…

Oh, and regarding the 9400-16i… I just happened to have it. So yeah, no hate please, thanks. If anything, I'll add a U.2 or NVMe drive between my PCIe cards instead, so there.

Comments, suggestions, feedback, etc. welcome…