This is a community resource that is neither officially endorsed nor supported by iXsystems/TrueNAS. It is a personal passion project, and all opinions are my own.

There have been a bunch of failures recently that indicate missing or corrupt ZFS labels. I started answering questions about what they are in-line, but realized that a separate post dedicated to ZFS labels would probably be a good reference.

I am going to use the word "disk" to refer to any zpool component, even though a component might be a disk, a partition, a virtual disk, or really any block device.

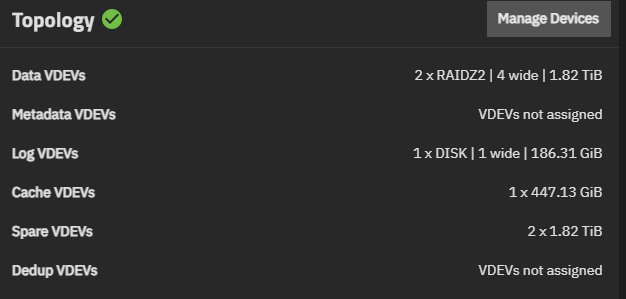

To examine your ZFS labels you first need to identify the disks used by the zpool. In the TrueNAS user interface, click the Manage Devices button on the Topology brick for the zpool you are looking at. If you have not been able to import the zpool, then you will have to determine the disk device names by looking in /dev for disk devices (sda, sdb, etc.) and their partitions (sda1, sda2, etc.). TrueNAS (as of 25.04) uses partition 1 for non-OS disks, so you will be looking for /dev/sda1, /dev/sdb1, etc.
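If the zpool will not import and you have to hunt through /dev by hand, a small filter can narrow the list. This is only a sketch: the function name is mine, and it assumes sdX-style device names with data on partition 1, as described above. NVMe-style names are not handled.

```python
import re

# Assumption: SCSI/SATA-style names (sda, sdb, ... sdaa) with ZFS data on
# partition 1, as described above for TrueNAS 25.04.
PARTITION_ONE = re.compile(r"^sd[a-z]+1$")


def candidate_data_partitions(dev_names):
    """Return /dev paths for names that look like partition 1 of an sdX disk."""
    matches = [name for name in dev_names if PARTITION_ONE.match(name)]
    # Shorter names sort first so sdb1 comes before sdaa1.
    ordered = sorted(matches, key=lambda name: (len(name), name))
    return ["/dev/" + name for name in ordered]
```

On a live system you could feed it the directory listing, for example candidate_data_partitions(os.listdir("/dev")) after importing os. The result is only a list of candidates; it says nothing about whether a partition actually holds ZFS labels.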

To get to a command line, go to System → Shell in the TrueNAS user interface. To examine the ZFS labels on a disk (partition), use the command sudo /sbin/zdb -l /dev/sda1, where sda1 is the disk you are looking at.

NOTE:

/sbin/zdb is an overall ZFS debugger. You can do severe damage to a zpool through incorrect usage. Do not just start trying random /sbin/zdb commands and options! /sbin/zdb locks the zpool it is working on, even if just reading data, to ensure consistency. If you are reading lots of data you may block access to the zpool for long enough that I/O starts to time out. A /sbin/zdb -l should return fast enough to not worry about it (too much), but pulling other data will take longer, potentially much longer.
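If you want to collect the label output from several disks, the same command can be wrapped in a script. The sketch below only ever builds the -l form shown above. The helper names and the 30 second timeout are my own choices, not anything TrueNAS provides.

```python
import subprocess

ZDB = "/sbin/zdb"  # same path as in the command above


def label_command(device):
    """Build the label dump command for one device, and nothing else."""
    if not device.startswith("/dev/"):
        raise ValueError("expected a /dev/... path, got %r" % (device,))
    return ["sudo", ZDB, "-l", device]


def read_labels(device, timeout_s=30):
    """Run zdb -l and return its text output.

    The timeout is an arbitrary guard so a stuck command does not hang
    the script. The exit status is deliberately not checked, because the
    output is still worth reading when labels are missing.
    """
    result = subprocess.run(
        label_command(device),
        capture_output=True,
        text=True,
        timeout=timeout_s,
    )
    return result.stdout
```

Calling read_labels("/dev/sda1") should give you the same text you would see in the Shell, ready to save to a file or paste into a forum post.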

Here are sample labels from a zpool with two 4-disk RAIDz2 vdevs plus SLOG, L2ARC, and hot spares. Together they illustrate the majority of what you will see in a ZFS label (see also the screenshot of the Topology brick from the TrueNAS Dashboard).

The first RAIDz2 vdev

admin@xxx[~]$ sudo /sbin/zdb -l /dev/sdad1

------------------------------------

LABEL 0

------------------------------------

version: 5000 <-- base ZFS pool version (5000 means the pool uses feature flags)

name: 'VMs'

state: 0

txg: 21894 <-- most recent committed Transaction Group (TXG)

pool_guid: 8306720220591971624

errata: 0

hostid: 1046909542

hostname: 'xxx'

top_guid: 8182871320855948967

guid: 12752186334501239742

hole_array[0]: 2

vdev_children: 4

vdev_tree: <-- since ZFS stripes across all top level vdevs, there is no indication of the vdev type in the global section

type: 'raidz' <-- when we enter the vdev we see the vdev type

id: 0 <-- and its position relative to the other top level vdevs

guid: 8182871320855948967

nparity: 2 <-- this identifies this as a 2 parity RAIDz

metaslab_array: 155

metaslab_shift: 34

ashift: 12

asize: 7992986566656

is_log: 0

create_txg: 4 <-- the TXG that committed the creation of this vdev

children[0]: <-- first component of this vdev

type: 'disk'

id: 0

guid: 12752186334501239742

path: '/dev/disk/by-partuuid/a07f7413-3bab-4341-bd46-90b4be6ec160'

whole_disk: 0 <-- indicates that this is not the entire disk, but in this case a partition

create_txg: 4

children[1]:

type: 'disk'

id: 1

guid: 12263111496817576784

path: '/dev/disk/by-partuuid/968f6293-43e5-41e5-a667-037f3a913cd1'

whole_disk: 0

create_txg: 4

children[2]:

type: 'disk'

id: 2

guid: 7688501057607157299

path: '/dev/disk/by-partuuid/a1c2f1d9-c9db-4f14-a522-9a67ea89431b'

whole_disk: 0

create_txg: 4

children[3]:

type: 'disk'

id: 3

guid: 6828939413129632038

path: '/dev/disk/by-partuuid/893bb901-f633-4199-977a-cd30f72d27e7'

whole_disk: 0

create_txg: 4

features_for_read: <-- feature flags that affect read operations

com.delphix:hole_birth

com.delphix:embedded_data

com.klarasystems:vdev_zaps_v2

labels = 0 1 2 3 <-- at which positions did I find this label

admin@xxx[~]$
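When comparing labels from many disks it can help to pull the key: value lines out of the text. Here is a minimal sketch of that; the function names are mine. It keeps a list of pairs rather than a dictionary, because keys such as guid and type repeat at different levels of the vdev tree. It also drops the <-- annotations I added in this post.

```python
def parse_label_fields(text):
    """Collect 'key: value' lines from zdb -l output as (key, value) pairs."""
    fields = []
    for raw in text.splitlines():
        # Drop the explanatory '<--' notes used in this post, if present.
        line = raw.split("<--", 1)[0].strip()
        # Feature names like com.delphix:hole_birth have no space after the
        # colon, so they are skipped along with separators and prompts.
        if ": " not in line and not line.endswith(":"):
            continue
        key, _, value = line.partition(":")
        fields.append((key.strip(), value.strip().strip("'")))
    return fields


def first_value(fields, key):
    """Return the first value recorded for key, or None if it never appears."""
    for name, value in fields:
        if name == key:
            return value
    return None
```

Because the top level fields come first in the output, first_value(fields, "guid") returns the guid of the disk itself rather than the guid of the vdev or one of its siblings.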

The second RAIDz2 vdev

Much the same as the first, differences indicated below.

admin@xxxx[~]$ sudo /sbin/zdb -l /dev/sdb1

------------------------------------

LABEL 0

------------------------------------

version: 5000

name: 'VMs'

state: 0

txg: 21894

pool_guid: 8306720220591971624

errata: 0

hostid: 1046909542

hostname: 'xxx'

top_guid: 6761566294553582256

guid: 9294818102476701863

hole_array[0]: 2

vdev_children: 4

vdev_tree:

type: 'raidz' <-- you can see how the type of each vdev can be different

id: 1 <-- this vdev is in the second position (0 is first position)

guid: 6761566294553582256

nparity: 2

metaslab_array: 145

metaslab_shift: 34

ashift: 12

asize: 7992986566656

is_log: 0

create_txg: 4

children[0]:

type: 'disk'

id: 0

guid: 15631385997839139037

path: '/dev/disk/by-partuuid/ef214a32-6ae4-492e-b0e2-e27c3d52129b'

whole_disk: 0

create_txg: 4

children[1]:

type: 'disk'

id: 1

guid: 500392086168322762

path: '/dev/disk/by-partuuid/05398324-c01e-4a7b-8db0-54f9df580e97'

whole_disk: 0

create_txg: 4

children[2]:

type: 'disk'

id: 2

guid: 9294818102476701863

path: '/dev/disk/by-partuuid/fd1c11d4-8c5f-4051-ae95-240a9506a898'

whole_disk: 0

create_txg: 4

children[3]:

type: 'disk'

id: 3

guid: 10873913273110761404

path: '/dev/disk/by-partuuid/4ac7491b-550d-47d7-89d3-a65e0b06d0cb'

whole_disk: 0

create_txg: 4

features_for_read:

com.delphix:hole_birth

com.delphix:embedded_data

com.klarasystems:vdev_zaps_v2

labels = 0 1 2 3

admin@xxx[~]$
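A few of the numeric fields in the vdev section are easier to read once decoded. ashift and metaslab_shift are base 2 exponents, and asize is a size in bytes. The sketch below just does that arithmetic; the function name and the dictionary keys are mine.

```python
def describe_vdev_sizes(ashift, metaslab_shift, asize):
    """Turn the shift values and asize from a label into readable numbers."""
    return {
        # ashift is log2 of the sector size ZFS uses on this vdev.
        "sector_bytes": 1 << ashift,
        # metaslab_shift is log2 of the size of one metaslab.
        "metaslab_gib": (1 << metaslab_shift) / 2**30,
        # How many whole metaslabs of that size fit into asize.
        "whole_metaslabs": asize >> metaslab_shift,
        "asize_tib": round(asize / 2**40, 2),
    }
```

For the RAIDz2 vdevs above (ashift 12, metaslab_shift 34, asize 7992986566656) this works out to 4096 byte sectors, 16 GiB metaslabs, and room for 465 whole metaslabs.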

The L2ARC (read cache) vdev

admin@xxx[~]$ sudo /sbin/zdb -l /dev/sdl1

------------------------------------

LABEL 0

------------------------------------

version: 5000

state: 4 <-- I assume this identifies this device as an L2ARC

guid: 2228300738575578825

ashift: 12

path: '/dev/disk/by-partuuid/31dfc96d-1d6f-41d2-96b7-e3f20f0a8c38'

labels = 0 1 2 3

------------------------------------

L2ARC device header

------------------------------------

magic: 6504978260106102853

version: 1

pool_guid: 8306720220591971624

flags: 1

start_lbps[0]: 0

start_lbps[1]: 0

log_blk_ent: 1022

start: 4198400

end: 477955096576

evict: 4198400

lb_asize_refcount: 0

lb_count_refcount: 0

trim_action_time: 1748551131

trim_state: 2

------------------------------------

L2ARC device log blocks

------------------------------------

No log blocks to read

admin@xxx[~]$

The SLOG (write accelerator) vdev

admin@xxx[~]$ sudo /sbin/zdb -l /dev/sdu1

------------------------------------

LABEL 0

------------------------------------

version: 5000

name: 'VMs'

state: 0

txg: 21895

pool_guid: 8306720220591971624

errata: 0

hostid: 1046909542

hostname: 'xxx'

top_guid: 15363572077989720144

guid: 15363572077989720144

is_log: 1 <-- identifies this as a SLOG device

hole_array[0]: 2

vdev_children: 4

vdev_tree: <-- since you can have a mirrored SLOG the vdev type needs to be identified

type: 'disk'

id: 3

guid: 15363572077989720144

path: '/dev/disk/by-partuuid/1e016cb4-515d-4d46-83fc-4cdf42f893e6'

whole_disk: 0

metaslab_array: 1162

metaslab_shift: 30

ashift: 12

asize: 198044024832

is_log: 1

create_txg: 21893

features_for_read:

com.delphix:hole_birth

com.delphix:embedded_data

com.klarasystems:vdev_zaps_v2

labels = 0 1 2 3

admin@xxx[~]$

The hot spare vdevs

admin@xxx[~]$ sudo /sbin/zdb -l /dev/sdd1

------------------------------------

LABEL 0

------------------------------------

version: 5000

state: 3 <-- I assume this identifies this as a hot spare

guid: 13909808474772480724

path: '/dev/disk/by-partuuid/188d232b-93aa-4a07-9986-dcc4d5bc0a97'

labels = 0 1 2 3

admin@xxx[~]$ sudo /sbin/zdb -l /dev/sde1

------------------------------------

LABEL 0

------------------------------------

version: 5000

state: 3

guid: 4206175843085097119

path: '/dev/disk/by-partuuid/a6e88d24-f4d0-4561-99ba-63e9e971ee1e'

labels = 0 1 2 3

admin@xxx[~]$
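The state values 3 and 4 seen above line up with the pool state enumeration in the OpenZFS headers (pool_state_t in sys/fs/zfs.h), which supports the guesses in my annotations. The table below is written from my reading of that header, so treat it as an assumption and check it against the OpenZFS version you are running.

```python
# Assumption: values follow pool_state_t in OpenZFS sys/fs/zfs.h.
POOL_STATES = {
    0: "ACTIVE",
    1: "EXPORTED",
    2: "DESTROYED",
    3: "SPARE",
    4: "L2CACHE",
    5: "UNINITIALIZED",
    6: "UNAVAIL",
    7: "POTENTIALLY_ACTIVE",
}


def describe_state(value):
    """Map the numeric 'state' field of a label to a readable name."""
    number = int(value)
    return POOL_STATES.get(number, "UNKNOWN(%d)" % number)
```

So the data and SLOG disks above report an active pool (state 0), the hot spares report 3, and the L2ARC disk reports 4.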

ZFS labels are written to the start and end of each disk used by ZFS. There are 4 copies for redundancy, 2 at the start (labels 0 and 1) and 2 at the end (labels 2 and 3). Each label has enough information to assemble this disk into the vdev it is a member of, including identifying where it fits in the vdev as well as all the other disks in the vdev. Each label knows nothing about other vdevs in the zpool, although there is positional information, so some information about other vdevs may be inferred.
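To make the start and end placement concrete, here is a sketch of where the four copies land. It assumes each label is 256 KiB and that ZFS rounds the device size down to a multiple of the label size before placing the last two, which is my understanding of the OpenZFS on-disk format. The function name is mine.

```python
LABEL_SIZE = 256 * 1024  # assumption: 256 KiB per label


def label_offsets(device_bytes):
    """Return the byte offset of each of the four labels on a device."""
    # Assumption: the usable size is rounded down to a label-size multiple.
    usable = device_bytes - (device_bytes % LABEL_SIZE)
    if usable < 4 * LABEL_SIZE:
        raise ValueError("device too small to hold four labels")
    return {
        0: 0,
        1: LABEL_SIZE,
        2: usable - 2 * LABEL_SIZE,
        3: usable - LABEL_SIZE,
    }
```

This is why something that overwrites only the first part of a partition tends to take out labels 0 and 1 while leaving 2 and 3 readable, and the reverse for damage at the end.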

The zpool and all its disks are identified by unique identifiers, so that duplicate names do not confuse ZFS and so that ZFS can still figure out which disk is which when the OS device names change. If you want to import a zpool and there are duplicate zpool names, then you import by unique identifier. If a zpool of the same name is already imported, you also assign the incoming zpool a new name.
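As an illustration of importing by identifier, the zpool import command accepts the pool's numeric identifier in place of its name, optionally followed by a new name. The sketch below only builds that command line so you can read it before deciding anything; it does not run it. The function name and the new pool name in the example are made up.

```python
def import_by_guid_command(pool_guid, new_name=None):
    """Build (but do not run) a zpool import command keyed on the pool GUID."""
    command = ["zpool", "import", str(pool_guid)]
    if new_name:
        # An optional second argument renames the pool as it is imported.
        command.append(new_name)
    return command


# pool_guid taken from the sample labels above; 'VMs-recovered' is a
# hypothetical new name.
print(" ".join(import_by_guid_command(8306720220591971624, "VMs-recovered")))
```

If the zpool is damaged, ask for help before running any import command.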

When Things go Wrong

There are a variety of things that can go wrong with a zpool. If you cannot import a zpool, then looking at all of the ZFS labels can provide a clue as to what is wrong and possibly a path to recovery. While I ask questions here, I am not providing solutions, as every case is different and doing the wrong thing can lead to data loss! Gather the information the questions below ask for and then ask for help on the TrueNAS Forums.

- Do you have all 4 ZFS labels?
  - If not, then which are missing?
    - 0 and 1 from the start of the disk?
      - What might have overwritten the beginning of the disk (partition)?
    - 2 and 3 from the end of the disk?
      - What might have overwritten the end of the disk (partition)?
- Are any of them flagged as corrupt?
  - Are they all corrupt or only some of them?
- Do the txg values match on all labels?
  - If not, then a disk with a lower txg was not updated when later txgs were committed. This can give you a timeline of when each disk became unreachable.
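Two of the questions above can be answered mechanically from the zdb -l text: which label positions were found, and which disks lag behind on txg. The sketch below does only that, and the function names are mine. It reads the labels = line that zdb prints. It diagnoses nothing by itself, and a difference of one txg is not necessarily a problem: in the healthy sample above the SLOG label shows 21895 while the RAIDz labels show 21894.

```python
EXPECTED_LABELS = {0, 1, 2, 3}


def labels_found(zdb_text):
    """Collect the label positions listed on 'labels = ...' lines."""
    found = set()
    for raw in zdb_text.splitlines():
        line = raw.split("<--", 1)[0].strip()  # drop any annotation
        if line.startswith("labels ="):
            found.update(int(tok) for tok in line.split("=", 1)[1].split())
    return found


def missing_labels(zdb_text):
    """Return the sorted label positions that zdb did not report."""
    return sorted(EXPECTED_LABELS - labels_found(zdb_text))


def txg_lag(txg_by_disk):
    """Return how many txgs each disk is behind the newest one seen."""
    newest = max(txg_by_disk.values())
    return {disk: newest - txg for disk, txg in txg_by_disk.items() if txg < newest}
```

A result of [0, 1] from missing_labels points at the start of the disk and [2, 3] at the end. The txg_lag output gives you the per-disk timeline to include when you ask for help.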