I also don’t use interposers, never have…Didn’t even realize it was a thing lol.

I used them in TrueNAS Core for multipath.

Hmmm. That could certainly be relevant here. Those shelves were designed for SAS not SATA. Looks like @QuirkyKirkHax has SATA drives. There could be a weird quirk (pun intended) in how the shelf enumerates the paths on boot that for whatever reason trips up the Linux kernel.

Looks like they are $4 each if you want to give it a try.

NetApp SATA to SAS Interposer DS4243 DS4246 Hard Drive tray L3-25232 500605B | eBay

Thanks for that. I went ahead and ordered a few for this shelf. I’ll make an update post if that seems to help…I am still leaning towards some kind of race condition though. I’ve also got another SFP cable coming in today, I’ll let you know if that helps too.

I used these interposers after upgrading to TrueNAS Scale and had exactly the same problems.

Then I removed them and the problems remained.

From what I know, the interposers are only needed for multipath, when connecting cables to both IOM6 controllers at the same time.

Since TrueNAS Scale does not support multipath anymore, the interposers are useless.

Please feel free to correct me if I am wrong.

I’m also reading that they are only needed for multipath.

I don’t think it is hardware related. I think this is some kernel or software problem, because when I import the pool after the reboot it works without any problems, and I have written terabytes of data to it.

If these errors were hardware related, you could not import and use the pool fine after the reboot.

By all means, I am a noob, don't believe what I say, this is just my two cents.

I guess we will never know, because the tickets got closed with "no JBOD support on community edition" and I am not capable of debugging it myself. As long as no developer is willing to take a look, we are basically screwed.

Can someone maybe point me to where it says there is no support for JBOD?

When I look at the TrueNAS Scale page, it lists SAS JBODs under Hardware Management.

Good catch!

I don't know if this changed something or if I am just lucky at the moment.

For now it reboots fine and imports all pools. I have no idea why, but I am happy.

Here is what I did:

I installed TrueNAS Scale 24.04.1.1 fresh and the server rebooted. Then I imported my backup.config, and the server restarted again WITHOUT ANY PROBLEMS and imported all pools.

Today I rebooted again and it restarted again WITHOUT ANY PROBLEMS and imported all pools.

I don't know if this matters, but out of my 4 pools, 2 are encrypted.

Just wanted to post an update on how it is for now.

After a week of absolute hell, this seems to be working…I had to migrate to Core 13.3 BETA and rebuild all my tasks, and now I migrated back to SCALE 24.04.1.1 and imported my old config.

First boot appears to work fine…Gonna give it a half hour or so of operation, do another few reboots and see if it works consistently…

I hope it works for you…

can’t wait to hear back

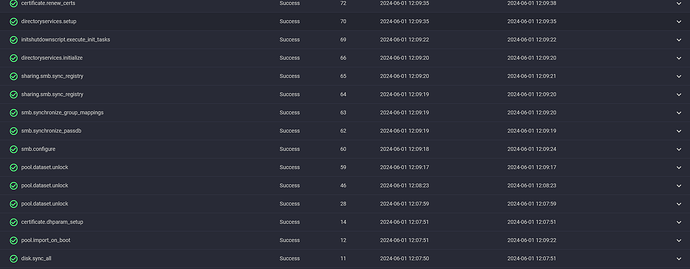

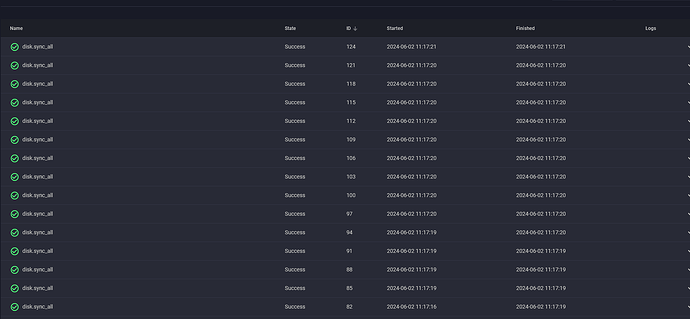

Reboot came back fine, poked around inside on some of the oddities I was seeing, and we only have 1 disk_sync job now:

Also, it could just be my imagination, or perhaps I'm just looking more closely now, but the zfs service seems to be taking a bit longer on boot. That seems good, as I was leaning towards this being some sort of race condition. Gonna go through a couple more reboots because I am paranoid, but this all seems to work at the moment.

Cool to see it works for you as well.

Pools getting I/O errors on import was really not fun at all

Let us know how it works with the next reboots…

I also will report the next reboots

Rebooted this morning after scrubbing everything and it seems to be borked again. Same exact behavior

Oh man…

Is it just the disk sync task or are the pools broken again?

Pools are all borked, disks are all “unassigned”, likely due to this sync issue relabeling them and getting zfs confused. I'm back on CORE 13.3 BETA for now just so I don’t lose any data for these replication tasks…

That is frustrating to hear…

I did another reboot yesterday and it went well, at least for now… maybe just a lucky reboot.

This is really bad.

Did you guys get anywhere on this?

I am having the same issue and just saw this post

I’m running

Dell PowerEdge 720

LSI 9207-8e HBA

DS4246 (24 drives)

But I want to note that I am using interposers and all SATA drives… Originally I had both HBA connections (to I/O 1 and 2 on the shelf) hooked up, but reduced to one while troubleshooting this issue… I have since noticed the redundant path was not supported anyway.

I didn't know about this thread, so I started a new thread today.

Nothing really…I went to CORE 13.3 BETA and it works so far, but I’m unsure what could fix this. I had a friend try setting some rootdelay kernel params to maybe delay the zfs import to when the disks were all initialized, but it didn’t appear to help. I haven’t tried that myself though, been so frustrated I can’t make myself do another install lol
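If anyone wants to poke at the race-condition theory, here is a rough sketch of the idea of "wait until all disks are initialized before importing". It is not anything TrueNAS ships and I have not run it on an affected box. The function names, the `/dev/disk/by-id` path and the `wwn-` filter are my own assumptions about how the disks show up on Linux; it only polls until the expected number of disks is visible, or gives up after a timeout.

```python
import os
import time
from typing import Callable, Iterable, List


def list_by_id(path: str = "/dev/disk/by-id") -> List[str]:
    """List whole-disk WWN links in a udev by-id directory (assumed layout)."""
    try:
        names = os.listdir(path)
    except FileNotFoundError:
        return []
    # Assumption: each disk has exactly one "wwn-..." link; partition links
    # look like "wwn-...-part1" and are skipped so a disk is counted once.
    return [n for n in names if n.startswith("wwn-") and "-part" not in n]


def wait_for_disks(
    expected: int,
    list_disks: Callable[[], Iterable[str]] = list_by_id,
    timeout_s: float = 120.0,
    interval_s: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Return True once at least `expected` disks are visible, False on timeout."""
    deadline = clock() + timeout_s
    while True:
        if len(set(list_disks())) >= expected:
            return True
        if clock() >= deadline:
            return False
        sleep(interval_s)


if __name__ == "__main__":
    # 24 matches a full DS4246 shelf; adjust for your own setup.
    ok = wait_for_disks(expected=24)
    print("all disks visible" if ok else "timed out waiting for disks")
```

Running something like this by hand right after boot would at least show whether the shelf is still enumerating drives when the pools get imported. It would not fix anything by itself.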

I'm considering a Supermicro, but I am not sure if that JBOD would solve the issue.