You have the right answer, but not for the right reason. It isn’t that the parity disks don’t count (RAIDZ doesn’t use dedicated parity disks; I think unRAID is the only modern system that does that); it’s that the smallest disk size in a vdev determines that vdev’s capacity.
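To make the smallest-disk rule concrete, here is a minimal sketch of the usual rough estimate for one RAIDZ vdev. It assumes raw decimal TB and ignores ZFS overhead (metadata, padding, reserved slop space), so TrueNAS will report somewhat less. The function name is just for illustration.

```python
def raidz_usable_tb(disk_sizes_tb: list[float], parity: int) -> float:
    """Rough usable capacity of one RAIDZ vdev, before ZFS overhead.

    Every member only contributes as much as the smallest disk, and
    `parity` disks' worth of space goes to (distributed) parity.
    """
    if parity not in (1, 2, 3):
        raise ValueError("RAIDZ parity must be 1, 2 or 3")
    if len(disk_sizes_tb) <= parity:
        raise ValueError("a RAIDZ vdev needs more disks than its parity level")
    smallest = min(disk_sizes_tb)
    return (len(disk_sizes_tb) - parity) * smallest


# Six disks in RAIDZ2: five old 4TB drives plus one new 8TB drive.
print(raidz_usable_tb([4, 4, 4, 4, 4, 8], parity=2))  # 16
# After all six have been replaced with 8TB drives.
print(raidz_usable_tb([8] * 6, parity=2))  # 32
```

The extra space on the larger disks only becomes usable once every member of the vdev has been replaced and the pool's `autoexpand` property is on.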

My 4TB WD Red Plus drives have a lot of hours on them.

The seller wrote:

5x4TB WD RED (WD40EFRX-68)

Approx. 36,829 hours (they have been in sleep/standby most of the time; I have run badblocks on all of them, and there are no errors)

Is badblocks a reliable way to check drive health?

Does sleep/standby reduce wear?

I mean, does that still hold even though SMART reports 36K Power-On Hours?

I have been looking at new drives and have found these 4 types:

1:

WD-Red-Pro

Pricey, but seems to have the best specs (transfer rate, TBW, MTBF, etc.)

2:

WD-Red-Plus

“Basic NAS”

3:

Toshiba N300

I like Toshiba

4:

Seagate IronWolf

I don’t like Seagate, but I don’t have any experience with their NAS series.

I was leaning towards 6TB disks, but there is only a small price difference ($30) between 6TB and 8TB, so I’ll probably end up with 8TB drives.

What would you buy?

I’m leaning towards Red Pros.

Prices are high in DK (Denmark):

8TB Red Plus = $247

8TB Red Pro = $273

8TB Tosh N300 = $219

8TB Ironwolf = $222

For starters, I’m leaning a bit towards (as mentioned above) getting an 8TB as the 6th drive and beginning to play …

Then maybe I’ll look for a good offer on Black Friday to get the rest.

Is it difficult to swap the drives one at a time and let the pool rebuild (resilver)? Is there a specific order?

40k hours is a drive that’s on the wrong side of the bathtub curve.

I have no issues with Seagate Ironwolf, and they’re normally the cheapest NAS drive when I check.

I just buy the cheapest new drive (by $/TB) that is NAS-rated and the correct size, except that I do not buy WD “Red”.

Which, I think, means the Toshiba in your list.
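The "cheapest by $/TB" rule is easy to check against the 8TB prices quoted earlier in the thread. This is a minimal sketch using only those quoted prices; the dictionary and constant names are illustrative.

```python
# 8TB prices as quoted in the thread (DK prices, shown in $).
prices = {
    "WD Red Plus": 247,
    "WD Red Pro": 273,
    "Toshiba N300": 219,
    "Seagate IronWolf": 222,
}
CAPACITY_TB = 8

# Price per TB for each model, cheapest first.
per_tb = {model: price / CAPACITY_TB for model, price in prices.items()}
for model, cost in sorted(per_tb.items(), key=lambda kv: kv[1]):
    print(f"{model:17s} ${cost:.2f}/TB")

cheapest = min(per_tb, key=per_tb.get)
print("Cheapest by $/TB:", cheapest)  # Cheapest by $/TB: Toshiba N300
```

The N300 and IronWolf end up less than $0.40/TB apart, so in practice either fits the rule.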

except I do not buy WD “Red”

Why not WD “Red” ?

From the specs, it seems like the Red Pro is “golden”.

The price diff between Tosh N300 & IronWolf is minimal.

Any reason to go with IronWolf?

WD Red Plus and Pro are fine.

WD trashed the WD Red brand when they snuck SMR into the drives, so much so that I believe they’ve now retired the range/brand.

Power-on: 36,829 hours is c. 4.2 years. An enterprise might well swap out disks after 4 years, even if they are still operating perfectly, to avoid the hassle and risk of them failing.
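The hours-to-years conversion above, as a tiny sketch. It assumes an average year of 365.25 days; the helper name is illustrative.

```python
HOURS_PER_YEAR = 24 * 365.25  # average year, including leap days


def power_on_years(hours: float) -> float:
    """Convert a SMART Power_On_Hours value into years of operation."""
    return hours / HOURS_PER_YEAR


print(f"{power_on_years(36_829):.1f} years")  # 4.2 years
print(f"{power_on_years(40_000):.1f} years")  # 4.6 years
```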

Flying hours: there is often a SMART attribute 240 that records the time the disks were spinning with the heads not retracted (i.e. flying over the platters). This is probably a better measure of wear than power-on hours, because it better reflects wear on the motors and bearings, and wear on the heads from flying close to the spinning platters.

Reads/writes: SMART attributes 241 and 242 report how much data has been written to and read from the drive, and these can also give you some idea of wear because they reflect actuator movements and changes of magnetic polarity on the disk surface. (These are counted in LBAs, so the actual amount of data depends on the LBA size. For magnetic surface wear, this needs to be compared to the size of the disk, and you probably need some reference stats to compare against before making any assessment.)
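Here is a minimal sketch of the LBA arithmetic described above. It assumes the counter is in 512-byte logical sectors, which is common but not universal (`smartctl -i` shows the drive's logical sector size). The raw value used below is hypothetical, not taken from any real drive.

```python
def lbas_to_tb(lba_count: int, lba_bytes: int = 512) -> float:
    """Convert a SMART LBA counter (e.g. attribute 241 or 242) to decimal TB."""
    return lba_count * lba_bytes / 1e12


def full_disk_passes(lba_count: int, disk_tb: float, lba_bytes: int = 512) -> float:
    """How many times that much data would cover the whole disk surface."""
    return lbas_to_tb(lba_count, lba_bytes) / disk_tb


# Hypothetical raw value for attribute 241, for illustration only.
written_lbas = 150_000_000_000
print(f"{lbas_to_tb(written_lbas):.1f} TB written")                  # 76.8 TB written
print(f"{full_disk_passes(written_lbas, 4):.1f} passes over a 4TB disk")  # 19.2 passes over a 4TB disk
```

As the post says, a number like "19 full passes" only means something when compared with stats from similar drives.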

SMART stats can be obtained with `sudo smartctl -x /dev/sdx`.
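If you want to pull specific attributes (9, 240, 241, 242) from several drives, a script can be easier than reading `smartctl -x` output by eye. This is a sketch that assumes smartmontools 7.0 or newer, which added JSON output via `-j`. The field names (`ata_smart_attributes`, `table`, `raw.value`) reflect my understanding of that format, so check them against your own output first. `/dev/sdx` remains a placeholder.

```python
import json
import subprocess


def parse_attributes(smartctl_json: str) -> dict[int, int]:
    """Map SMART attribute ID -> raw value from `smartctl -A -j` output."""
    data = json.loads(smartctl_json)
    table = data.get("ata_smart_attributes", {}).get("table", [])
    return {row["id"]: row["raw"]["value"] for row in table}


def read_smart_attributes(device: str) -> dict[int, int]:
    """Run smartctl (needs root) and parse its JSON attribute table."""
    # smartctl's exit status is a bit mask that can be non-zero even when
    # it printed valid data, so a non-zero status is not treated as fatal.
    result = subprocess.run(
        ["smartctl", "-A", "-j", device],
        capture_output=True, text=True, check=False,
    )
    return parse_attributes(result.stdout)


# Trimmed sample of the JSON shape, for illustration.
sample = '{"ata_smart_attributes": {"table": [{"id": 9, "name": "Power_On_Hours", "raw": {"value": 36829}}]}}'
attrs = parse_attributes(sample)
print(attrs.get(9))    # 36829
print(attrs.get(240))  # None: attribute 240 is not in this sample
```

On a real system you would call `read_smart_attributes("/dev/sdx")` as root. Drives that don't report an attribute simply won't have that key.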

This is all to do with SMR drives, which (without going into technical details) are absolutely unsuitable for use with ZFS or in write-intensive environments.

There are currently three WD Red brands (Red, Red Plus and Red Pro), but there used to be just Red and Red Pro.

WD introduced SMR drives into their WD Red range without publicising the fact - and when this was discovered it did a LOT of harm to their brand image.

They then decided to rebrand WD Red drives by splitting them into Red and Red Plus, where Red were SMR and Red Plus were CMR, but the brand damage was already done.

When you buy a (used) WD Red drive, you need to check the precise model number to determine whether it is an old CMR drive (now Red Plus) or a newer SMR drive.
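A model-number check like this is easy to script against the "Device Model" line that `smartctl -i` prints. This is a minimal sketch. It only knows about the 2TB to 6TB WD Red models that WD confirmed as SMR in 2020, so a `False` result means "not on this list" rather than "definitely CMR". The suffixes in the example model strings are illustrative.

```python
# WD Red models that WD publicly confirmed in 2020 as using (drive-managed) SMR.
# Not exhaustive: check WD's current documentation for newer models.
KNOWN_WD_RED_SMR = {"WD20EFAX", "WD30EFAX", "WD40EFAX", "WD60EFAX"}


def base_model(device_model: str) -> str:
    """'WDC WD40EFRX-68N32N0' -> 'WD40EFRX' (drop vendor prefix and suffix)."""
    model = device_model.strip().upper()
    if model.startswith("WDC "):
        model = model[len("WDC "):]
    return model.split("-")[0]


def is_known_wd_red_smr(device_model: str) -> bool:
    return base_model(device_model) in KNOWN_WD_RED_SMR


print(is_known_wd_red_smr("WDC WD40EFRX-68N32N0"))  # False: EFRX is CMR (now Red Plus)
print(is_known_wd_red_smr("WDC WD40EFAX-68JH4N0"))  # True: EFAX at 4TB is SMR
```

By this check, the seller's WD40EFRX-68 drives are the older CMR type.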

Since TrueNAS is ZFS based, WD Red SMR drives are completely unsuitable.

I have 5x new Seagate Ironwolf 4TB drives and have not had a single issue with them so far.

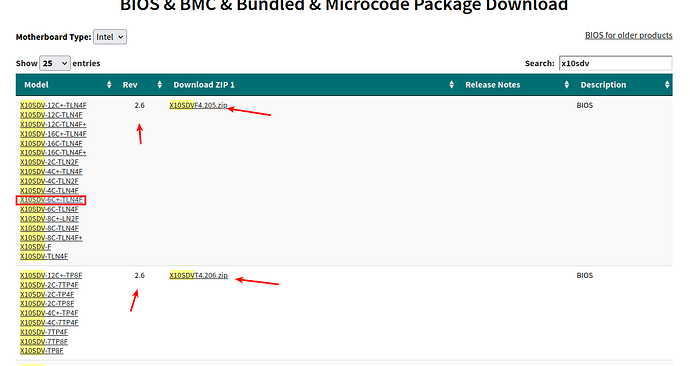

- When you update the BIOS: I see they have 2.6 available. My X10SDV is on 2.3 with a D1518 and it’s working fine. Not sure what the changes are.

- I thought the 10GbE ports on these boards are 1G or 10G only and don’t support 2.5G.

- As you are thinking about your setup, think about your backup. Z2 isn’t a backup.

Re 1:

A bit strange: the page shows 2.6, but the filename and contents are named 2.5.

Re 2:

If/when I use the 10G, it will be at 10G.

Re 3:

I have actually considered making this the backup box.

If it draws close to 80-100 W, the cost of running it 24/7/365 would be quite high in DK.
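Here is a rough way to put numbers on always-on versus on-demand. This is a minimal sketch that assumes a constant 90 W draw (the midpoint of 80-100 W) and a hypothetical tariff of 0.40 per kWh, so substitute your real DK price and measured wattage.

```python
def yearly_energy_cost(watts: float, hours_per_day: float, price_per_kwh: float) -> float:
    """Energy cost per year for a constant load running `hours_per_day`."""
    kwh_per_year = watts / 1000 * hours_per_day * 365
    return kwh_per_year * price_per_kwh


PRICE_PER_KWH = 0.40  # hypothetical tariff; substitute your own

always_on = yearly_energy_cost(90, 24, PRICE_PER_KWH)
weekly_only = yearly_energy_cost(90, 2 / 7, PRICE_PER_KWH)  # ~2 h once a week
print(f"24/7:          {always_on:.0f} per year")
print(f"weekly backup: {weekly_only:.0f} per year")
```

The ratio is the useful part: a box that runs about 2 hours a week uses roughly 1/84 of the energy of one that runs 24/7, whatever the tariff.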

I’m considering having it “just turned on” at select times to make backups.

Maybe even just weekly …

I’d expect WOL to turn it on, and then the last backup job would shut the machine down …
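For the WOL part, the "magic packet" is simple: 6 bytes of `0xFF` followed by the target MAC address repeated 16 times, usually sent as a UDP broadcast (port 9 is conventional). This is a minimal sketch. It assumes WOL is enabled in the BIOS and on the NIC, and the MAC address in the usage line is hypothetical.

```python
import socket


def magic_packet(mac: str) -> bytes:
    """Build a Wake-on-LAN magic packet: 6 x 0xFF, then the MAC 16 times."""
    mac_bytes = bytes.fromhex(mac.replace(":", "").replace("-", ""))
    if len(mac_bytes) != 6:
        raise ValueError(f"not a 6-byte MAC address: {mac!r}")
    return b"\xff" * 6 + mac_bytes * 16


def send_wol(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Broadcast the magic packet on the local network."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(magic_packet(mac), (broadcast, port))


# Usage (hypothetical MAC of the backup box's onboard NIC):
# send_wol("00:25:90:aa:bb:cc")
```

The packet only works on the same broadcast domain. If the backup box sits on another VLAN, the BMC/IPMI route may be simpler.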

How do you guys back up 32TB?

Well … I’m probably not going to have 32TB on the NAS for a while.

My guesstimate would be 4TB, growing to 8TB.

I’m a home user (my wife and I), so I wouldn’t want to go crazy on $$ or electricity.

Daily (important) changes would mostly be e-mails (own server) and, at times, pictures.

Other systems are quite static: Zabbix, Pi-hole, etc.

- I think 2.05/2.06 is just distinguishing the different zip files. You don’t want to use the wrong bundle even if the BIOS level is the same.

- You can save some power, then, by shutting off the two 10G ports. There is a rapidly emerging class of 2.5G routers and switches that take advantage of existing wiring, which is where the “future-proofing” diverges.

- I use mine as a backup box. I have a low-power server with two mirrored drives that I leave on and use actively; weekly, I use IPMI to turn on the backup box, manually run my replications, and then shut it down. Actually, there are two backup boxes, and it takes about 2 minutes. This way my continuous usage is on the order of 30 watts. If the main server fails, I can switch to one of the other two boxes.

- The key thing is to divide your total storage into smaller datasets, for at least two reasons. First, you are absolutely going to redo your dataset structure as you get into this. Second, your snapshot and replication strategy may differ between datasets. So if you have 12TB of storage in Z2, you may be using 6 to 8TB of it. If it is all in a single dataset, you may not have enough room to reorganize it. But if you have a dataset for your data, one for your wife’s data, one for music, one for movies, one for the applications you run, and so on, you have more flexibility. At my main site, I have 20 Mbps upload. Saturating that to transfer 8TB off-site would take more than a month of continuous transfer. So the first replication goes to a USB HDD set up as a pool with matching datasets, which is exported, taken to the second location, imported, replicated locally, and exported again. After that, subsequent replications only take minutes.
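The seeding-over-the-internet problem is plain arithmetic. This is a minimal sketch assuming decimal TB and a constant upload rate. The `efficiency` knob is a hypothetical factor for protocol overhead and for sharing the link with other traffic.

```python
def transfer_days(data_tb: float, uplink_mbps: float, efficiency: float = 1.0) -> float:
    """Days needed to push `data_tb` (decimal TB) through an uplink."""
    bits = data_tb * 1e12 * 8
    seconds = bits / (uplink_mbps * 1e6 * efficiency)
    return seconds / 86_400


print(f"{transfer_days(8, 20):.0f} days")                  # 37 days at full line rate
print(f"{transfer_days(8, 20, efficiency=0.5):.0f} days")  # 74 days at half the line rate
```

Even in the best case, that is weeks of a saturated uplink, which is why a one-time physical seed followed by small incremental replications works so much better.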

In your first post you mentioned 2.5G and 1/10G; I didn’t quite understand it.

But I think you meant that 2.5G is not supported by the onboard 10G NICs, correct?

Re: Low power server

Since you are talking about replications, I suppose you mean ZFS replications, and that you run ZFS on your two mirrored disks … or?

Any pointers to a “low power” server motherboard with ECC RAM?

Thank you for the tips on making several datasets, and on leaving room for a possible reorganization.

Yes, my understanding is that 2.5G isn’t supported, unless perhaps it is part of the updated BIOS …

All TrueNAS is ZFS, so yes, that’s what I run on my main server. It is a J5005 ASRock board with 16GB but no ECC. I’m not counting on it for high usage or long-term data protection; that’s what the X10 is for, fired up when I need it. The J5005 is really for Plex and always-available network storage, so I don’t have to spool up the X10 (or at least not too often). I even thought about repurposing a laptop with an external drive, counting on the other two boxes for data protection, since they are necessary for the backup anyway. The reason I mention datasets is that I’ve reconfigured my systems several times while coming up the learning curve to a decidedly unprofessional place.
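Firing up the backup box over IPMI, as described in the earlier reply, can be scripted with `ipmitool` from any machine that can reach the BMC. This is a minimal sketch. The host name, user and password-file path in the usage comment are hypothetical, and the replication step itself (run from TrueNAS) is not shown. `chassis power soft` asks the OS for a clean ACPI shutdown, while `off` cuts power.

```python
import subprocess


def ipmi_power_cmd(host: str, user: str, password_file: str, action: str) -> list[str]:
    """Build an ipmitool command that sends a chassis power action to a BMC."""
    if action not in {"on", "soft", "off", "status"}:
        raise ValueError(f"unsupported power action: {action!r}")
    return [
        "ipmitool", "-I", "lanplus",   # IPMI v2.0 over the network
        "-H", host, "-U", user,
        "-f", password_file,           # read the password from a file, not argv
        "chassis", "power", action,
    ]


def ipmi_power(host: str, user: str, password_file: str, action: str) -> str:
    """Run the command and return ipmitool's output (raises on failure)."""
    result = subprocess.run(
        ipmi_power_cmd(host, user, password_file, action),
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


# Usage (hypothetical names):
# ipmi_power("backup-bmc.lan", "ADMIN", "/root/.ipmi_pass", "on")
# ... run the replication tasks ...
# ipmi_power("backup-bmc.lan", "ADMIN", "/root/.ipmi_pass", "soft")
```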

Not 100% sure this is correct.

It was certainly the case when the chipset was released.

BUT the 10Gb chipset in these systems is the X552 (I think), which is very closely related to the X550 chipset, and that received a firmware update to support multi-gig (2.5 and 5 GbE).

Thus, a firmware update might add support, maybe together with a BIOS update.

Spent some time today updating the motherboard.

1:

I tried to update the BIOS via IPMI; it “loaded” but returned an error.

I googled the error, and it indicated that the BMC firmware was probably too old.

2:

Downloaded the BMC package bundle (v4.0).

Installed Deb12 and the BMC package on the “box”.

Found the Linux AlUpdate, made it executable, and did a BMC “Config backup” using `-i kcs` (I think that’s what it’s called; it means local access rather than TCP access).

Then I did a “Firmware backup” using the same local access. That was sooo slow, taking around 90 seconds per percent read; it seemed to be reading in 2K or 3K blocks. It took 2+ hours to read the 32MB, but I did let it complete.
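The numbers above hang together. This quick sketch assumes "32MB" means 32 MiB and uses the observed 90 seconds per percent.

```python
IMAGE_BYTES = 32 * 1024 * 1024  # 32MB firmware image (assumed to mean MiB)
SECONDS_PER_PERCENT = 90        # observed rate over local access

total_seconds = SECONDS_PER_PERCENT * 100
throughput = IMAGE_BYTES / total_seconds  # bytes per second

print(f"{total_seconds / 3600:.1f} h")   # 2.5 h
print(f"{throughput / 1024:.1f} KiB/s")  # 3.6 KiB/s
```

Roughly 3.6 KiB/s is consistent with a transfer moving one small block at a time, which fits the "2K or 3K blocks" impression.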

After it completed, I tried (“just for fun”) config and firmware backups via AlUpdate over the LAN (`-i lan`) from my “remote IPMI machine”, and to my surprise that was much, much faster.

BTW, the BMC update itself was done via the IPMI firmware update menu.

3:

Then I tried to update the BIOS via IPMI, but now it was “doing nothing” …

It didn’t even give the BIOS upload error it gave before.

It wouldn’t react to close to anything …

Well, an IPMI “Shutdown machine” command gave a timeout error.

I was using Linux Mint and Firefox (FF) …

At some point during the steps above, the IPMI interface gave me a heads-up that some feature needed Chrome, but back then most things seemed to work with FF.

I switched browser to Vivaldi, and things began to work normally again.

So the new v4.0 BMC firmware does not like my FF setup anymore.

I uploaded the new BIOS and chose “Not to save setting”, as I think I read that saving the settings could cause trouble.

4:

Now I have BIOS v2.6. I can confirm that the xxx205 zip mentioned above, downloaded from SM (Supermicro), contains BIOS v2.6.

But with the BIOS reset to defaults …

And I’m having some issues booting the installed Deb12; I’m quite sure it’s UEFI-related …

To be continued …

Kind of the same setup here.

What originally started as replacing my single-HDD Synology spiraled out of control.

I bought an HP Z440 and put 256GB of DDR4 ECC RAM in it, as I was reading about TrueNAS and ZFS and how large amounts of RAM are good for ARC.

I bought an HP Z Drive Quad Turbo as well and put 4 Samsung 960GB PM963 NVMe SSDs in it, plus 4 Samsung 256GB SATA SSDs and two 4TB WD Red Pro CMR HDDs.

Also I switched the CPU to a Xeon E5-2697A v4.

My plan was to host some dockers and do some virtualization but I quickly realized the setup was idling at 95 Watts.

Too much with the electricity prices in Europe.

I got a little carried away and bought another Z440 with exactly the same specs; only the NVMe SSDs are PM983s instead.

I had a good opportunity to buy four Exos 24 16TB HDDs and a thin client, which I will later use as a streaming device.

My primary goal was to use TrueCharts, but then I read that they no longer support TrueNAS.

At that point I had the chance to buy a Supermicro X10SRL-F server with an HBA.

I pulled out the SAS card because I scrapped my plans to run TrueNAS on Proxmox, and the motherboard has 10 SATA ports. So who needs a 12 W HBA?

My new setup is a dedicated NAS, with the Z440 used to play with Proxmox etc.

The TrueNAS box is a Supermicro X10SRL-F with a Xeon E5-2697A v4, 256 GB RAM, and 2 Supermicro PCIe x8-to-NVMe adapters using bifurcation.

No GPU, a 10 GbE NIC on the way, and 4x 16TB HDDs in 2 mirrored vdevs.
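For reference, this is how a pool of striped mirrors adds up. This is a minimal sketch that ignores ZFS overhead; the function name is illustrative. Each 2-way mirror contributes its smallest member and survives one disk failure, but losing both disks of any one mirror loses the pool.

```python
def mirror_pool_usable_tb(vdevs: list[list[float]]) -> float:
    """A pool of striped mirrors: each mirror vdev adds its smallest member."""
    return sum(min(vdev) for vdev in vdevs)


pool = [[16, 16], [16, 16]]         # 4x 16TB as two 2-way mirrors
print(mirror_pool_usable_tb(pool))  # 32

pool.append([16, 16])               # later: add a third mirror pair
print(mirror_pool_usable_tb(pool))  # 48
```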

I want to use this as a pure NAS and would like to know if I can also set it up as a Proxmox backup server.

I want to use the thin client or Z440 Proxmox for streaming media and as an exit node for a headscale/wireguard tunnel.

I would like the TrueNAS box to be accessible from outside my network, but not through port forwarding; it’s imperative that it is not public-facing. Synology has a nice feature called Synology Drive that syncs folders to my phones and laptops on the move, and I would like the same for my TrueNAS machine. Currently it idles at 56 Watts, so that’s way better than the Z440.

I’m kind of stuck in the setup process because I dedicated 2 mirrored 960 GB SSDs as boot drives. I just read about the Optane M10 and think I’m going to order a few, as they’re dirt cheap on eBay. Should I mirror them as well (as this leaves no room for a possible GPU in the future), or is it overkill to mirror a boot drive? I presume having a backup of the settings is more than enough, but I don’t really know.

I also don’t understand which dataset share type I should choose: Generic or Multiprotocol?

After changing the boot drive to the Optane M10, I have 3 pools and 2 spare new NVMe drives:

- A mirrored pair of NVMe drives for virtual machines and containers.

- A mirrored pair of HDDs for documents, pictures etc., accessed by Android phones, an iPhone, Windows clients and some Debian Linux machines.

- A mirrored pair of HDDs that I would like to use for Proxmox and other VM backups.

Do I need encryption? My machines are inaccessible to anyone other than me. Is a 960 GB enterprise SSD good enough for a ZIL, or too large? Do I benefit from a ZIL at all? Are the default settings good enough for NVMe SSDs?

What is best practice for setting up users? I don’t like the idea of an “admin” login. Do I create a new user and add it to the “builtin_admin” group, and then, after a successful login, disable the admin account by disabling password login? Does this create problems?

Should I virtualize a PBS on this machine once I have all the network parts for a 10 GbE network?

It all boils down to this: what would an experienced TrueNAS user do with my hardware? I’m not ruling out adding a GPU in the future, but right now I want to use this as a NAS for files and media. I want to set this thing up and have as little maintenance as possible, so I can focus on tinkering with my other hardware.

EDIT: Sorry for hijacking this post.

With all that hardware, I would set up in competition with Amazon Web Services!!! JK.

More seriously, in terms of helping you, we need:

- Less of a stream-of-consciousness narrative of your journey so far, and more a clear, bullet-point description of the hardware you have to hand, the power measurements you made, and your LAN environment.

- A clear statement of what you want to use it for, e.g. SMB shares, Plex, VMs for X and Y, and the performance you are looking for.

- A clear and concise set of questions you want answered.

But also on a serious note, some life-advice from someone who has had decades of similar lived experiences…

Sometimes it is better to pick a horse and make progress with it than flip from horse to horse and never actually get anywhere.

In your case this would mean:

- Deciding what your needs are;

- Asking for advice on what hardware to use and how to configure and use it to best advantage;

- Doing the build and making it work, putting it into production.

- Use that server in production for a reasonable period, even if running costs are a bit high;

- Learn from the entire process

Then (say 2 years later) based on all the learning experiences from that process, you can repeat the process starting afresh, building a replacement environment and migrating over to it.

P.S. I have almost the lowest possible hardware configuration (with a few apps but no VMs); see my signature for details. If I wanted to run VMs, I would definitely need bigger hardware, but for what I want it runs brilliantly and can fully utilize my gigabit LAN. IME, how you use SSDs vs HDDs and how you configure the system have the biggest impact, so ask for advice on how you should set up your system.

I’m sorry if my post is rather disorganized.

You’re absolutely right about jumping horses. I got carried away by all the choices of NAS OSes out there and lost track of my main goal, which was to get a functional, future-proof and performant NAS without relying on proprietary hardware and software.

The question I have is exactly what you said: what is the best use for all this hardware? The Proxmox box is not as important as the TrueNAS build. I plan to deploy the TrueNAS build as my main NAS and use my Synology as a backup device.

I would like to be able to sync the 3 laptops I need for my job with this NAS, i.e. the documents and files in a directory on the Windows 11 machines, and also 2 desktops running Windows and Linux. If I save, modify or delete a file locally on one of those machines, I want the change to sync to all the other machines.

I would like the photos on my Android phones to back up automatically to the TrueNAS server.

Perhaps also my wife’s iPhone. (optional)

I’d like to run some containers on this machine, preferably accessible through a Headscale setup: things like Vaultwarden, KeePass, a CalDAV service and some other things I might decide on later.

This is my main goal.

The reason I got this shitload of hardware is that I could buy it for very low prices, except for the NVMe SSDs.

I didn’t come here to show off all the stuff I bought and I apologize if that’s what I brought across.

After I set up this server, I would like it to be as maintenance-free as possible. It’s going to contain files, documents and photographs that are really important. My backup strategy is to keep local copies on all of my computers (five of them), plus rsync to the Synology for my ca. 3TB of most important files. I chose 2 mirrored vdevs so I can add another disk when needed, or pull one out to build a new mirror on another ZFS server (which I plan on doing in the future).

Another question I have is whether I should use a ZIL for the datasets of the VMs or containers I plan to run. I read in another thread that you recently advised someone to either use a ZIL or move the entire VM pool to NVMe, depending on the size of the data written to disk. This makes sense for small amounts of data written inside a container. But what if, for example, I run Nextcloud and have it save files to my 16TB pool: would I benefit much from a ZIL, or is the performance gain negligible? The Synology I currently use as my main NAS doesn’t have one, and it syncs files immediately over the internet. Since I have all these SSDs, I might as well use them. I’ve thought about a special metadata vdev, but as I understand it, if that fails you lose the entire pool, which of course is not something I want to risk. The SSDs all have PLP and 100% health. Is that a good use case for them? I have about 256 GB of RAM, and most files I need to access fast (e.g. my customers’ invoices) fit well into ARC.

The last question I have is: what is best practice for hardening the server? I have two 1000BASE-T NICs, and the server is connected to a VLAN-capable switch and a VLAN-capable router.

Should I dedicate one of them to administration, i.e. connect it to an admin-only VLAN? To be honest, I have no idea what to do with the third port, the IPMI LAN port. I’m going to add a 10 GbE card in the future that will be used for connecting another server and my workstations.

IMO the best tool for bidirectional syncing is Syncthing, which is available for Windows, Linux, Mac and Android, and (via a third-party app) iPhone.

If you want a simple backup tool for Windows, SyncBack.

Syncthing again, but one-way (phone->server). You can set it to run only when charging at night, so it backs up overnight.

TrueNAS VM support is excellent. Run production VMs on TrueNAS.

If you want to virtualise for experimentation, use either VirtualBox on your normal PCs or Proxmox on a server.

- In TrueNAS, automate SMART tests and snapshots. For basic NAS functionality, not much more “maintenance” is needed.

- For monitoring and alerts, set up TrueNAS email alerts and add @joeschmuck’s Multi-Report script for additional checks.

A ZIL is only used by ZFS for synchronous writes, and you only really need these in specific cases where you cannot afford any data loss on a power failure or O/S crash (e.g. transactional databases, VM O/S disks). And a separate ZIL device (SLOG) is only helpful if it is substantially faster than the vDev(s) the data is on.
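On the question of whether a 960 GB SSD is "too large" for a ZIL: a separate log device only holds sync writes until the next transaction group commits. Those writes cannot arrive faster than the network delivers them. A common rule of thumb is to size for link speed times a couple of txg intervals. This sketch assumes the OpenZFS default `zfs_txg_timeout` of 5 seconds and 2 outstanding txgs; both are assumptions, not measured values.

```python
def slog_size_gb(link_gbit_s: float, txg_timeout_s: float = 5.0, txgs: int = 2) -> float:
    """Rule-of-thumb SLOG capacity: link speed x a couple of txg intervals."""
    bytes_per_second = link_gbit_s * 1e9 / 8
    return bytes_per_second * txg_timeout_s * txgs / 1e9


print(slog_size_gb(1))   # 1.25  (1 GbE)
print(slog_size_gb(10))  # 12.5  (10 GbE)
```

By this estimate, even 10 GbE only needs tens of GB, so almost all of a 960 GB SSD would sit unused as a SLOG.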

Nextcloud data is normally mostly “at rest”, i.e. stored and inactive, so RAIDZ HDDs are normally the most suitable storage. Writes can almost always be asynchronous, so a ZIL will not be used.

For VMs and apps and some of their data, use mirrored SSD/NVMe pools. For VM O/S and transactional database storage, use zvols. For VM and app data, use normal datasets. Although VMs will use synchronous writes for their O/S zvols, and thus use the ZIL for those writes, they will already be on a fast disk, so an SLOG for the ZIL probably won’t add much performance.

Special metadata vDevs are only of benefit when ultimate performance is needed for locating large numbers of files on very large HDD storage. Metadata already accessed from HDDs will be cached anyway, so it only speeds up the first access. Unless you have a known need, IMO don’t bother because it adds complexity and risk for little or no noticeable benefit.

If my low-spec system can perform brilliantly without NVMe, SLOG or a special metadata vDev, then unless you have a known use case, why bother with these for your system?

No - if the entire vDev fails, you lose the entire pool, so a special metadata vDev needs to be a 2x or 3x mirror.

This is NOT the definition of “fast” that relates to ZIL. You need to access your customer invoices in (say) < 1sec from a single. Fast for ZIL relates to:

- Synchronous writes not reads or asynchronous writes.

- Performance improvements of hundredths of a second, only visible when thousands of them are needed for a single action.

- ARC only applies for documents already recently read or for sequential reads of large files (for streaming) where read-ahead happens.

Use your SSDs for mirror vDevs for Apps and VMs.

Only if you think the administrative LAN is more secure and less risky than the normal LAN. A LAN is only as secure as the physical security, so in a normal home where e.g. you don’t have a secure datacentre and don’t have advanced VPNs and firewalls to secure access to an administrative LAN, it probably won’t be any more secure and will thus add unnecessary complexity.

Connect this to a switch so you can access the BIOS over the network, but do make sure it has some protection from, e.g., kids accessing it and screwing things up.

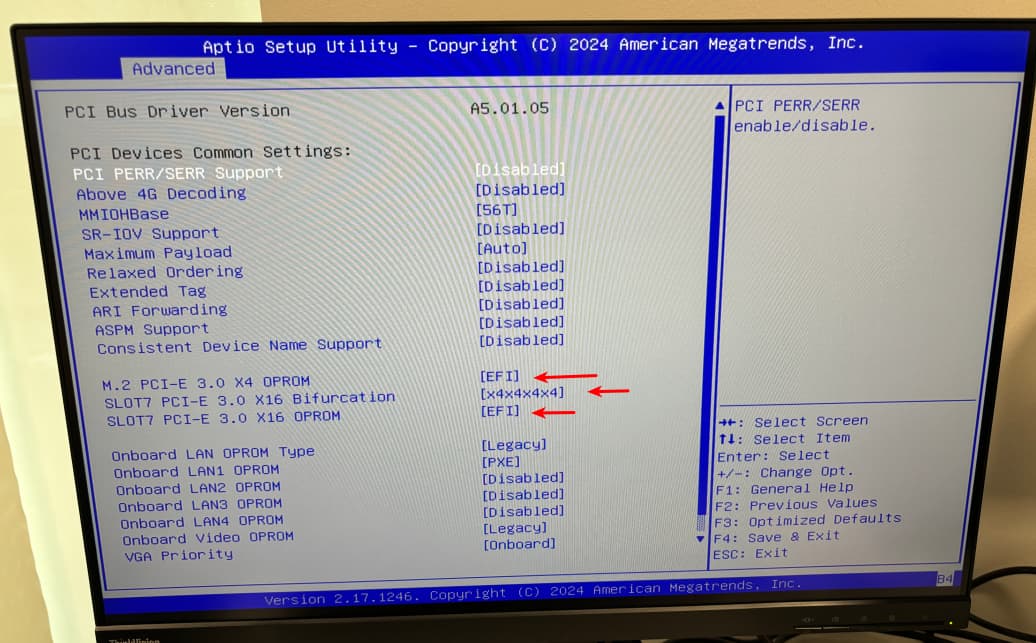

Well … regarding my UEFI/boot issue:

I changed some EFI settings in the BIOS, under PCIe …

And forced Secure Boot “On”.

Then I had to install/deploy the BIOS default EFI keys.

I had no luck booting the previously installed Deb12, but a reinstall with UEFI active made it bootable.

Now I’m back to the functionality I had before the BMC and BIOS updates.

Next: remove the M.2 SATA drive and install an NVMe drive (to free up the 6th SATA channel for my 6th drive), then install Deb12 again to see if it will boot from the NVMe.

I’ll order a Toshiba N300 8TB as my 6th drive tomorrow, in preparation for eventually phasing out the 5 old 4TB WD Red Plus drives currently installed and replacing them all with Toshiba N300 8TBs.