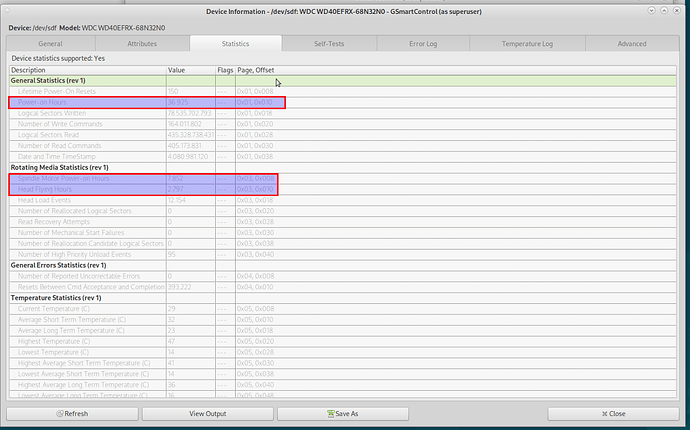

Regarding my “old” WD Red Plus drives:

It seems the seller was telling the truth when he said the disks had mostly been “inactive”.

The drives have seen approx. 37K power-on hours, but “only” 7.8K spindle-motor power-on hours and 2.8K head-flying hours.
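To put numbers on “mostly inactive”, here is a quick ratio check using the Power-on Hours (36925), Spindle Motor Power-on Hours (7852) and Head Flying Hours (2797) values from the device statistics. `duty_fractions` is just an illustrative helper name, and the sketch assumes all three counters cover the same drive lifetime.

```python
def duty_fractions(power_on_h: int, spindle_h: int, head_flying_h: int) -> dict[str, float]:
    """Return the share of power-on time spent spinning, with heads loaded, and spun down."""
    return {
        "spinning": spindle_h / power_on_h,
        "heads_flying": head_flying_h / power_on_h,
        "spun_down": 1 - spindle_h / power_on_h,
    }

# Values taken from the GP Log 0x04 output of one drive
fractions = duty_fractions(36925, 7852, 2797)
for name, share in fractions.items():
    print(f"{name}: {share:.1%}")
```

This prints roughly 21.3% spinning, 7.6% heads flying and 78.7% spun down. It only shows how the hours split; it says nothing about how hard the drive was worked while it was spinning.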

Device Statistics (GP Log 0x04):

| Page | Offset | Size | Value | Flags | Description |
|------|--------|------|------:|-------|-------------|
| 0x01 | | | | | **General Statistics (rev 1)** |
| 0x01 | 0x008 | 4 | 150 | `---` | Lifetime Power-On Resets |
| 0x01 | 0x010 | 4 | 36925 | `---` | Power-on Hours |
| 0x01 | 0x018 | 6 | 78535702790 | `---` | Logical Sectors Written |
| 0x01 | 0x020 | 6 | 164011802 | `---` | Number of Write Commands |
| 0x01 | 0x028 | 6 | 435328738408 | `---` | Logical Sectors Read |
| 0x01 | 0x030 | 6 | 405173831 | `---` | Number of Read Commands |
| 0x01 | 0x038 | 6 | 4080981120 | `---` | Date and Time TimeStamp |
| 0x03 | | | | | **Rotating Media Statistics (rev 1)** |
| 0x03 | 0x008 | 4 | 7852 | `---` | Spindle Motor Power-on Hours |
| 0x03 | 0x010 | 4 | 2797 | `---` | Head Flying Hours |
| 0x03 | 0x018 | 4 | 12154 | `---` | Head Load Events |
| 0x03 | 0x020 | 4 | 0 | `---` | Number of Reallocated Logical Sectors |
| 0x03 | 0x028 | 4 | 0 | `---` | Read Recovery Attempts |
| 0x03 | 0x030 | 4 | 0 | `---` | Number of Mechanical Start Failures |
| 0x03 | 0x038 | 4 | 0 | `---` | Number of Realloc. Candidate Logical Sectors |
| 0x03 | 0x040 | 4 | 95 | `---` | Number of High Priority Unload Events |
| 0x04 | | | | | **General Errors Statistics (rev 1)** |
| 0x04 | 0x008 | 4 | 0 | `---` | Number of Reported Uncorrectable Errors |
| 0x04 | 0x010 | 4 | 393222 | `---` | Resets Between Cmd Acceptance and Completion |
| 0x05 | | | | | **Temperature Statistics (rev 1)** |
| 0x05 | 0x008 | 1 | 29 | `---` | Current Temperature |
| 0x05 | 0x010 | 1 | 32 | `---` | Average Short Term Temperature |
| 0x05 | 0x018 | 1 | 23 | `---` | Average Long Term Temperature |
| 0x05 | 0x020 | 1 | 47 | `---` | Highest Temperature |
| 0x05 | 0x028 | 1 | 14 | `---` | Lowest Temperature |
| 0x05 | 0x030 | 1 | 41 | `---` | Highest Average Short Term Temperature |
| 0x05 | 0x038 | 1 | 14 | `---` | Lowest Average Short Term Temperature |
| 0x05 | 0x040 | 1 | 36 | `---` | Highest Average Long Term Temperature |
| 0x05 | 0x048 | 1 | 16 | `---` | Lowest Average Long Term Temperature |
| 0x05 | 0x050 | 4 | 0 | `---` | Time in Over-Temperature |
| 0x05 | 0x058 | 1 | 65 | `---` | Specified Maximum Operating Temperature |
| 0x05 | 0x060 | 4 | 0 | `---` | Time in Under-Temperature |
| 0x05 | 0x068 | 1 | 0 | `---` | Specified Minimum Operating Temperature |
| 0x06 | | | | | **Transport Statistics (rev 1)** |
| 0x06 | 0x008 | 4 | 828 | `---` | Number of Hardware Resets |
| 0x06 | 0x010 | 4 | 307 | `---` | Number of ASR Events |
| 0x06 | 0x018 | 4 | 0 | `---` | Number of Interface CRC Errors |

Flags: 1st character `N` = normalized value, 2nd `D` = supports DSN, 3rd `C` = monitored condition met (`---` = none set). Temperatures are in °C.
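To compare all five drives without eyeballing each report, a small parser over the raw smartctl text (e.g. the attached .txt) is enough. This is a sketch that assumes rows are laid out as smartctl prints them, e.g. `0x01 0x010 4 36925 --- Power-on Hours`; `parse_devstat` is a made-up helper, not part of smartctl, and the 512-byte logical sector size is an assumption (check the "Sector Sizes" line of `smartctl -i` for your own drives).

```python
import re

# Data rows look like "0x01 0x010 4 36925 --- Power-on Hours".
# Section-header rows ("0x01 ===== = = === == ...") have no numeric offset and are skipped,
# as are rows whose value is not a plain integer.
ROW = re.compile(
    r"^(0x[0-9a-fA-F]{2})\s+(0x[0-9a-fA-F]{3})\s+(\d+)\s+(\d+)\s+([-NDC]{3})\s+(.+?)\s*$"
)

LOGICAL_SECTOR_BYTES = 512  # assumption: 512-byte logical sectors


def parse_devstat(text: str) -> dict[str, int]:
    """Map each statistic's description to its integer value."""
    stats = {}
    for line in text.splitlines():
        m = ROW.match(line.strip())
        if m:
            stats[m.group(6)] = int(m.group(4))
    return stats


sample = """\
0x01 ===== = = === == General Statistics (rev 1) ==
0x01 0x010 4 36925 --- Power-on Hours
0x01 0x018 6 78535702790 --- Logical Sectors Written
0x01 0x028 6 435328738408 --- Logical Sectors Read
0x03 0x008 4 7852 --- Spindle Motor Power-on Hours
"""

stats = parse_devstat(sample)
written_tb = stats["Logical Sectors Written"] * LOGICAL_SECTOR_BYTES / 1e12
read_tb = stats["Logical Sectors Read"] * LOGICAL_SECTOR_BYTES / 1e12
print(f"written ~{written_tb:.1f} TB, read ~{read_tb:.1f} TB")
```

For this drive it prints `written ~40.2 TB, read ~222.9 TB` (decimal terabytes), under the 512-byte sector assumption. Running the same function over each drive's report gives a dictionary per drive that is easy to line up side by side.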

I have attached the SMART statistics of one of the drives; all 5 WD Red Plus drives look quite “alike”.

Graphics

And as text:

WDC_WD40EFRX-68N32N0_WD-WCC7K3RUxxxx_2024-09-26_1128.txt (14.1 KB)


Could I still use the 4 TB Reds for a while?

They seem to have been “idle” for much of their power-on time.

TIA

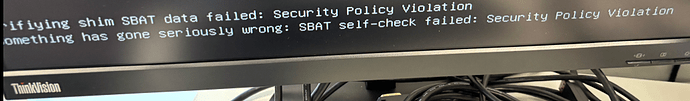

Bingo