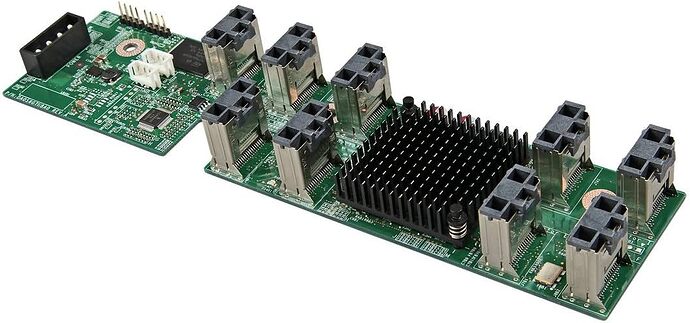

I’m using an Intel RES2CV360 36 Port SAS2 expander. I got it cheap. They’re expensive again now!

The 36-port expander means I can dual-link up to 28 bays off a single HBA (36 ports, minus the 8 taken by the two x4 uplinks), reclaiming a PCIe3 x8 slot and an HBA too… and I have other things to do with a spare HBA.

And the 36-port expander was pretty much the same price as two HBAs… so it's even.

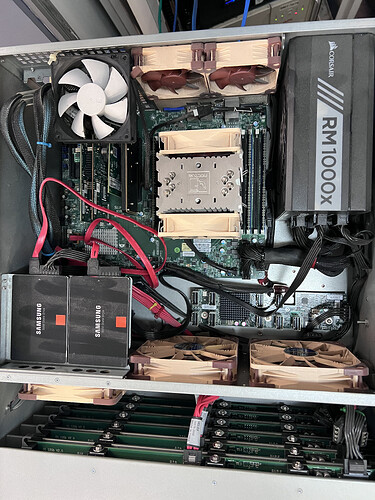

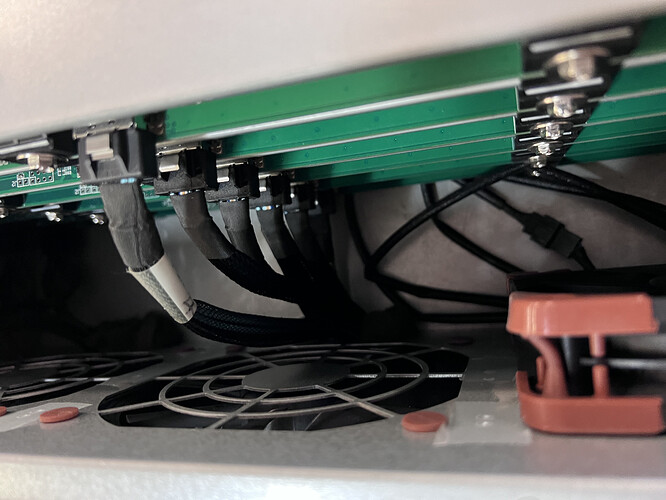

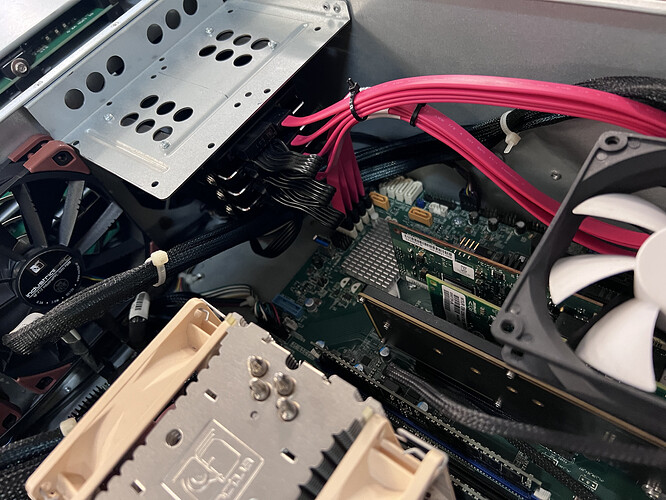

The current PCIe2 x8 adapter has about 4GB/s of bandwidth, which works out to roughly 166MB/s per disk across the front 24 bays, which is fine. This also leaves an extra 4 ports for something else if I wish, and it frees up the 10 SATA ports on the motherboard.

One day, if I wanted, I could replace this with the gen3 adapter and only need an x4 slot.
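For anyone who wants to check the numbers, here is a rough sketch of the port and bandwidth arithmetic in Python. The per-lane figures are the theoretical PCIe rates after line encoding (real-world throughput will be lower), and treating the dual link as two x4 wide ports (8 expander ports) is my assumption about the cabling. The function and variable names are made up for illustration.

```python
# Theoretical per-lane throughput in MB/s after line encoding.
# PCIe 2.0: 5 GT/s with 8b/10b encoding; PCIe 3.0: 8 GT/s with 128b/130b.
PCIE_LANE_MBPS = {
    2: 5000 * 8 / 10 / 8,      # 500.0 MB/s per lane
    3: 8000 * 128 / 130 / 8,   # roughly 984.6 MB/s per lane
}


def slot_bandwidth_mbps(gen, lanes):
    """Theoretical bandwidth of a PCIe slot in MB/s (before protocol overhead)."""
    return PCIE_LANE_MBPS[gen] * lanes


def per_disk_mbps(total_mbps, disks):
    """Even share of the uplink if every disk streams at the same time."""
    return total_mbps / disks


EXPANDER_PORTS = 36
UPLINK_PORTS = 8   # assumption: dual link = two x4 wide ports to the HBA
FRONT_BAYS = 24

drive_ports = EXPANDER_PORTS - UPLINK_PORTS   # ports left for drives
spare_ports = drive_ports - FRONT_BAYS        # ports left after the front bays

gen2_x8 = slot_bandwidth_mbps(2, 8)
gen3_x4 = slot_bandwidth_mbps(3, 4)

print(f"drive ports: {drive_ports}, spare after front bays: {spare_ports}")
print(f"PCIe2 x8: {gen2_x8:.0f} MB/s, per disk: {per_disk_mbps(gen2_x8, FRONT_BAYS):.1f} MB/s")
print(f"PCIe3 x4: {gen3_x4:.0f} MB/s, per disk: {per_disk_mbps(gen3_x4, FRONT_BAYS):.1f} MB/s")
```

The gen3 x4 figure comes out just under the gen2 x8 one, which is why a gen3 HBA in an x4 slot would be close to a like-for-like swap.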

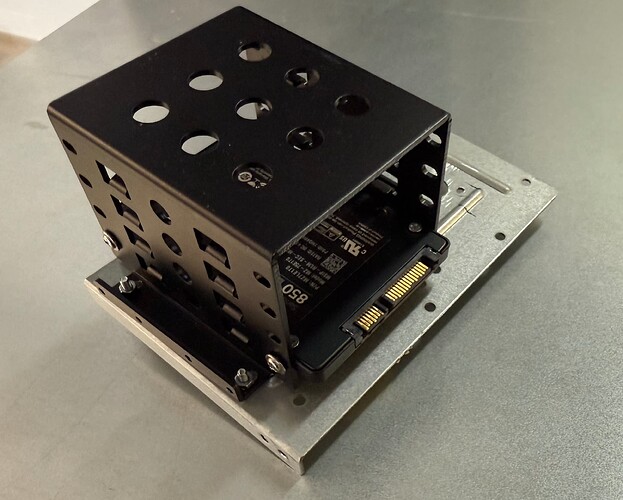

I plan to use the SATA ports on the motherboard for some SSDs, and upgrade from the USB boot mirrors to some 120GB SATA SSDs.

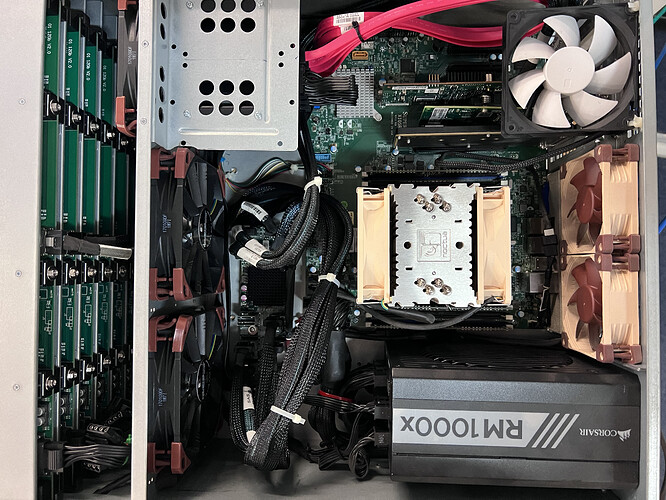

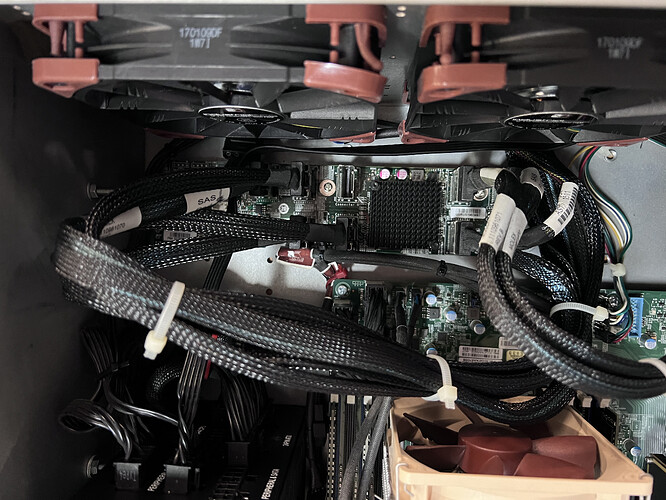

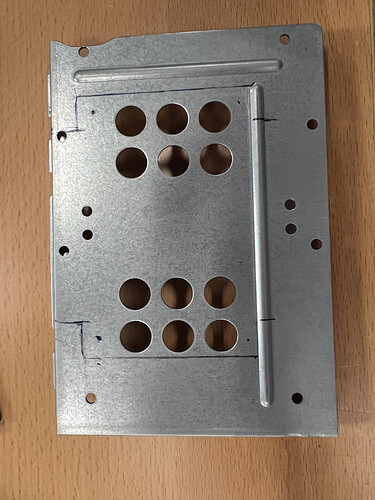

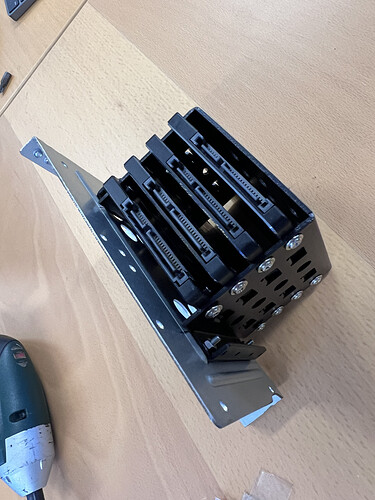

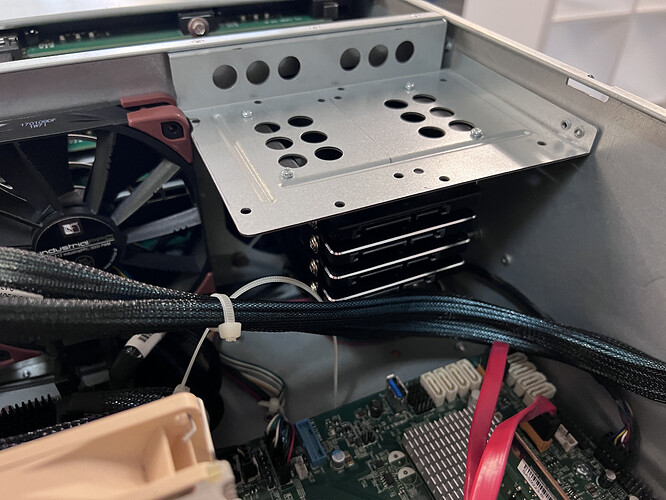

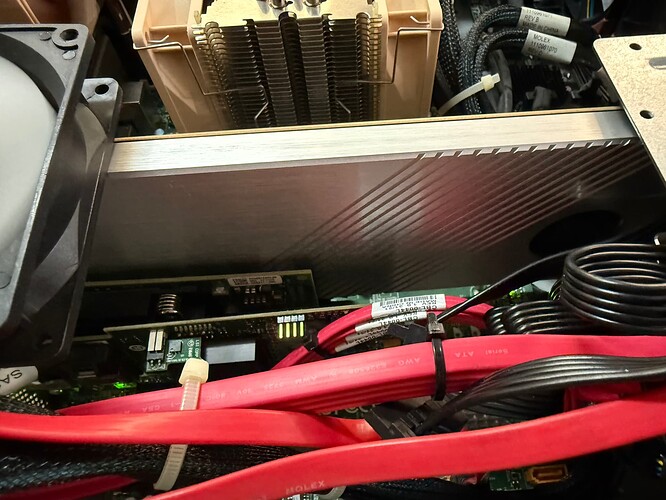

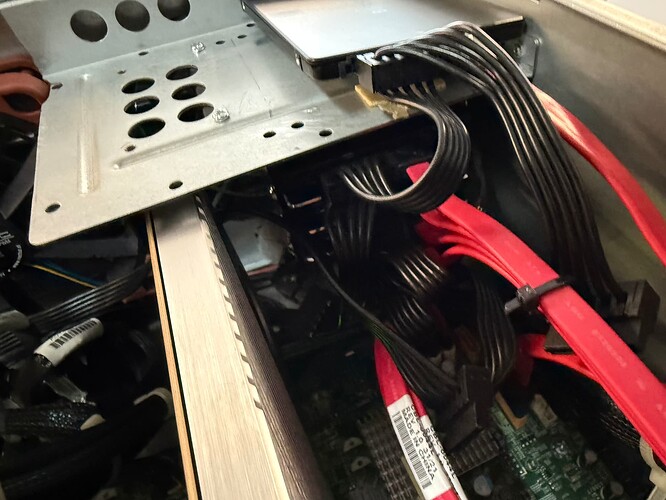

When the Intel RES2CV360 arrived I tested its functionality and mapped out where it would need to be installed…

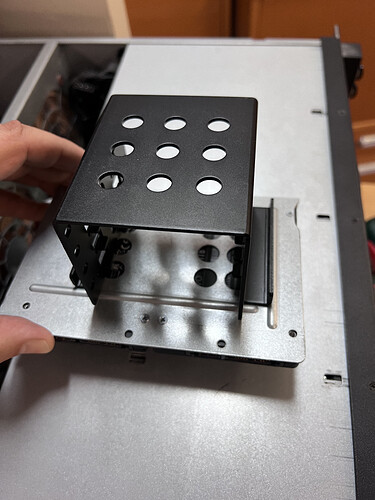

Think I will mount it around about here, between the motherboard and fan bulkhead. I’ve already pulled one of the Molex connectors back out from the drive bay section and it will reach the power input on the SAS expander nicely. The heat sink gets hot, so being in the airflow path is not a bad thing. Unfortunately the short cables that come with the expander won’t be much good.

While testing/setting up, I found that this section from the RES2CV360 manual

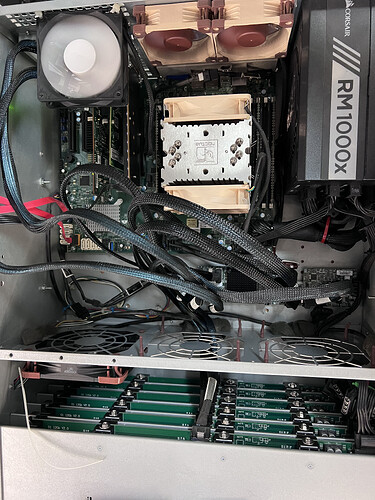

Is fairly important. If you don’t cable the drive bays A->F (and presumably G) then the slot# in sas2ircu <#> display gets confused.

If you do, then you get a nice display with the sasexpander and connected drives, listed with increasing slot numbers and enclosure number. (each HBA is an enclosure and each Expander seems to be an “enclosure”), so 0 and 1 for my two HBAs, and then the expander is Enclosure 2 connected to enclosure 1.
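If you want to check the slot ordering without eyeballing the whole listing, something like this Python sketch could do it. It assumes the display output uses "Device is a …" headers followed by "Enclosure #" and "Slot #" lines. The SAMPLE text is made up for illustration (not captured from my box), and the helper names are hypothetical.

```python
# Made-up sample in the general shape of a sas2ircu display device list.
SAMPLE = """\
Device is a Hard disk
  Enclosure #                             : 2
  Slot #                                  : 0
  Serial No                               : EXAMPLE0001

Device is a Hard disk
  Enclosure #                             : 2
  Slot #                                  : 1
  Serial No                               : EXAMPLE0002

Device is a Hard disk
  Enclosure #                             : 2
  Slot #                                  : 2
  Serial No                               : EXAMPLE0003
"""


def parse_devices(text):
    """Collect the 'key : value' lines under each 'Device is a ...' header."""
    devices = []
    current = None
    for line in text.splitlines():
        stripped = line.strip()
        if stripped.startswith("Device is a"):
            current = {"type": stripped[len("Device is a"):].strip()}
            devices.append(current)
        elif not stripped:
            current = None  # a blank line ends the current device block
        elif current is not None:
            key, sep, value = stripped.partition(":")
            if sep:
                current[key.strip()] = value.strip()
    return devices


def slots_by_enclosure(devices):
    """Map enclosure number -> slot numbers, in the order they were listed."""
    result = {}
    for dev in devices:
        if "Enclosure #" in dev and "Slot #" in dev:
            result.setdefault(int(dev["Enclosure #"]), []).append(int(dev["Slot #"]))
    return result


def slots_increasing(slots):
    """True if each slot number is larger than the one listed before it."""
    return all(a < b for a, b in zip(slots, slots[1:]))


for enclosure, slots in slots_by_enclosure(parse_devices(SAMPLE)).items():
    print(enclosure, slots, "ok" if slots_increasing(slots) else "out of order")
```

To run it against a real controller you would feed it the text from sas2ircu <#> display instead of SAMPLE, for example by capturing the command with subprocess.run. The field labels may differ between utility versions, so check them against your own output first.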

So, the next thing to do was test if I can set a drive to boot off the expander…

Which apparently worked fine. You need to ensure the HBA has its option ROM installed, and that option ROMs are enabled for the PCIe slot it’s installed in. You can then configure the HBA boot order in the Avago BIOS utility, and then select any of the devices attached to the entire SAS topology (apparently) from the SM BIOS boot screen, under HD disk priority.
