Hi,

I successfully mounted the pool by first turning off the file-sharing services (SMB/NFS) and unsetting the Apps pool.

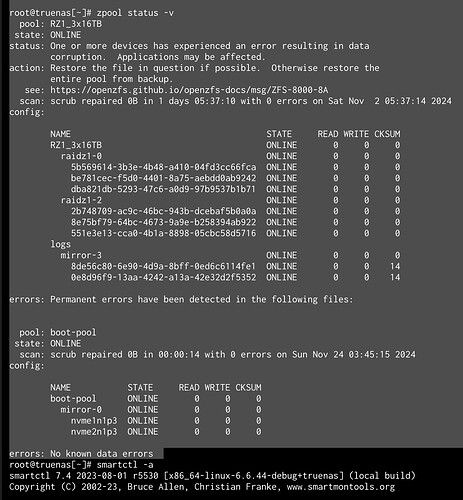

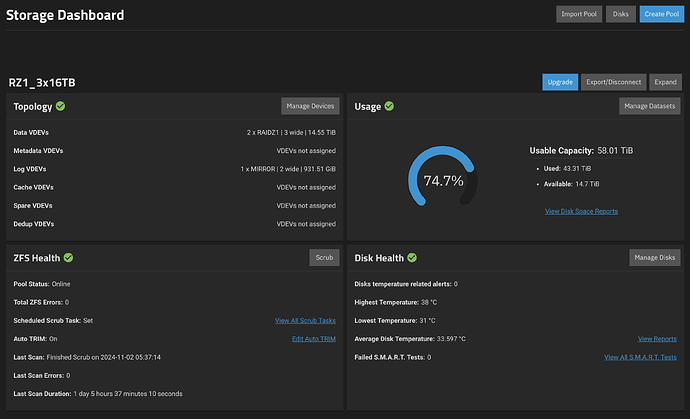

I ran the commands, and here are the most up-to-date results:

```
truenas:~$ lsblk -bo NAME,MODEL,ROTA,PTTYPE,TYPE,START,SIZE,PARTTYPENAME,PARTUUID
NAME        MODEL       ROTA PTTYPE TYPE   START           SIZE PARTTYPENAME             PARTUUID
sda         ST16000NT00    1 gpt    disk         16000900661248
├─sda1                     1 gpt    part    2048     2147484160 Linux swap               190604cc-2425-483d-af9a-cfc0869cc182
└─sda2                     1 gpt    part 4198400 15998750032384 Solaris /usr & Apple ZFS 2b748709-ac9c-46bc-943b-dcebaf5b0a0a
sdb         ST16000NT00    1 gpt    disk         16000900661248
├─sdb1                     1 gpt    part    2048     2147484160 Linux swap               65c7ad43-7b2c-4e3b-90e2-5c4c8c82d1af
└─sdb2                     1 gpt    part 4198400 15998750032384 Solaris /usr & Apple ZFS be781cec-f5d0-4401-8a75-aebdd0ab9242
sdc         ST16000NT00    1 gpt    disk         16000900661248
├─sdc1                     1 gpt    part    2048     2147484160 Linux swap               246c007e-1124-4f64-ada2-e630b1203ae5
└─sdc2                     1 gpt    part 4198400 15998750032384 Solaris /usr & Apple ZFS 8e75bf79-64bc-4673-9a9e-b258394ab922
sdd         ST16000NT00    1 gpt    disk         16000900661248
├─sdd1                     1 gpt    part    2048     2147484160 Linux swap               82051eb8-ea65-4ff7-b803-d416fd55b5a6
└─sdd2                     1 gpt    part 4198400 15998750032384 Solaris /usr & Apple ZFS dba821db-5293-47c6-a0d9-97b9537b1b71
sde         ST16000NT00    1 gpt    disk         16000900661248
├─sde1                     1 gpt    part    2048     2147484160 Linux swap               05ff11e5-da76-448e-be26-630ee464a6c0
└─sde2                     1 gpt    part 4198400 15998750032384 Solaris /usr & Apple ZFS 5b569614-3b3e-4b48-a410-04fd3cc66fca
sdf         ST16000NT00    1 gpt    disk         16000900661248
├─sdf1                     1 gpt    part    2048     2147484160 Linux swap               723de58f-d644-4e98-824a-3b21c8b6c154
└─sdf2                     1 gpt    part 4198400 15998750032384 Solaris /usr & Apple ZFS 551e3e13-cca0-4b1a-8898-05cbc58d5716
zd0                        0 gpt    disk           536870912000
zd16                       0 gpt    disk           536870912000
nvme3n1     WDBRPG5000A    0 gpt    disk           500107862016
├─nvme3n1p1                0 gpt    part    4096        1048576 BIOS boot                492b305f-8733-4c74-98da-c97721af04bd
├─nvme3n1p2                0 gpt    part    6144      536870912 EFI System               1e7d328b-04d9-43a6-96b1-6f7d42a13841
└─nvme3n1p3                0 gpt    part 1054720   499567828480 Solaris /usr & Apple ZFS 5527c7ae-b7df-4786-8067-9f7116a590ed
nvme0n1     WDS500G3X0C    0 gpt    disk           500107862016
├─nvme0n1p1                0 gpt    part    4096        1048576 BIOS boot                6d1529c4-970f-4924-bdcd-58d2b5c58485
├─nvme0n1p2                0 gpt    part    6144      536870912 EFI System               2e93d4e2-0a57-4da7-9ffa-fb915442f1f5
└─nvme0n1p3                0 gpt    part 1054720   499567828480 Solaris /usr & Apple ZFS 9afd2a95-908d-4bcd-946a-7cfcba2d2d99
nvme2n1     Samsung SSD    0 gpt    disk          1000204886016
└─nvme2n1p1                0 gpt    part    4096  1000202043904 Solaris /usr & Apple ZFS 8de56c80-6e90-4d9a-8bff-0ed6c6114fe1
nvme1n1     Samsung SSD    0 gpt    disk          1000204886016
└─nvme1n1p1                0 gpt    part    4096  1000202043904 Solaris /usr & Apple ZFS 0e8d96f9-13aa-4242-a13a-42e32d2f5352
```
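To pull just the ZFS data partitions and their PARTUUIDs out of a listing like that, a small filter helps. This is a sketch: `zfs_parts` is a hypothetical helper name, and it assumes the input comes from `lsblk -b -P -o NAME,PARTTYPENAME,SIZE,PARTUUID`. The `-P` key="value" form keeps a partition-type name that contains spaces in one field. The demo rows are transcribed from the sda lines above.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): list ZFS data partitions from lsblk pair output.
# Assumed input, one device per line, e.g. produced on the NAS by:
#   lsblk -b -P -o NAME,PARTTYPENAME,SIZE,PARTUUID | zfs_parts
zfs_parts() {
  # Keep rows whose GPT type name ends in "ZFS", extract NAME, SIZE and PARTUUID,
  # then print the size in TB (10^12 bytes, the unit the drive label uses).
  grep -E 'PARTTYPENAME="[^"]*ZFS"' |
    sed -E 's/^NAME="([^"]*)".*SIZE="([0-9]+)".*PARTUUID="([^"]*)".*$/\1 \2 \3/' |
    awk '{ printf "%s %.2f TB %s\n", $1, $2 / 1e12, $3 }'
}

# Demo on three rows transcribed from the listing above.
zfs_parts <<'EOF'
NAME="sda" PARTTYPENAME="" SIZE="16000900661248" PARTUUID=""
NAME="sda1" PARTTYPENAME="Linux swap" SIZE="2147484160" PARTUUID="190604cc-2425-483d-af9a-cfc0869cc182"
NAME="sda2" PARTTYPENAME="Solaris /usr & Apple ZFS" SIZE="15998750032384" PARTUUID="2b748709-ac9c-46bc-943b-dcebaf5b0a0a"
EOF
# prints: sda2 16.00 TB 2b748709-ac9c-46bc-943b-dcebaf5b0a0a
```

The PARTUUIDs it prints can then be compared against the member devices ZFS reports for the pool.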

---

```
truenas:~$ lspci
00:00.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Root Complex
00:00.2 IOMMU: Advanced Micro Devices, Inc. [AMD] Starship/Matisse IOMMU
00:01.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
00:01.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse GPP Bridge
00:02.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
00:03.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
00:03.5 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse GPP Bridge
00:04.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
00:05.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
00:07.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
00:07.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Internal PCIe GPP Bridge 0 to bus[E:B]
00:08.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
00:08.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Internal PCIe GPP Bridge 0 to bus[E:B]
00:14.0 SMBus: Advanced Micro Devices, Inc. [AMD] FCH SMBus Controller (rev 61)
00:14.3 ISA bridge: Advanced Micro Devices, Inc. [AMD] FCH LPC Bridge (rev 51)
00:18.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship Device 24; Function 0
00:18.1 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship Device 24; Function 1
00:18.2 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship Device 24; Function 2
00:18.3 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship Device 24; Function 3
00:18.4 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship Device 24; Function 4
00:18.5 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship Device 24; Function 5
00:18.6 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship Device 24; Function 6
00:18.7 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship Device 24; Function 7
01:00.0 Non-Volatile memory controller: Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO
02:00.0 Non-Volatile memory controller: Sandisk Corp WD Black SN750 / PC SN730 NVMe SSD
03:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Function
03:00.2 Encryption controller: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PTDMA
04:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Reserved SPP
04:00.2 Encryption controller: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PTDMA
04:00.3 USB controller: Advanced Micro Devices, Inc. [AMD] Starship USB 3.0 Host Controller
40:00.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Root Complex
40:00.2 IOMMU: Advanced Micro Devices, Inc. [AMD] Starship/Matisse IOMMU
40:01.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
40:01.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse GPP Bridge
40:01.3 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse GPP Bridge
40:01.4 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse GPP Bridge
40:01.5 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse GPP Bridge
40:02.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
40:03.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
40:03.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse GPP Bridge
40:04.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
40:05.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
40:07.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
40:07.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Internal PCIe GPP Bridge 0 to bus[E:B]
40:08.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
40:08.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Internal PCIe GPP Bridge 0 to bus[E:B]
40:08.2 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Internal PCIe GPP Bridge 0 to bus[E:B]
40:08.3 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Internal PCIe GPP Bridge 0 to bus[E:B]
41:00.0 Non-Volatile memory controller: Sandisk Corp WD Black SN750 / PC SN730 NVMe SSD
42:00.0 Ethernet controller: Intel Corporation Ethernet Controller X550 (rev 01)
42:00.1 Ethernet controller: Intel Corporation Ethernet Controller X550 (rev 01)
44:00.0 USB controller: ASMedia Technology Inc. ASM2142/ASM3142 USB 3.1 Host Controller
45:00.0 PCI bridge: ASPEED Technology, Inc. AST1150 PCI-to-PCI Bridge (rev 04)
46:00.0 VGA compatible controller: ASPEED Technology, Inc. ASPEED Graphics Family (rev 41)
47:00.0 Non-Volatile memory controller: Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO
48:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Function
48:00.2 Encryption controller: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PTDMA
49:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Reserved SPP
49:00.1 Encryption controller: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Cryptographic Coprocessor PSPCPP
49:00.2 Encryption controller: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PTDMA
49:00.3 USB controller: Advanced Micro Devices, Inc. [AMD] Starship USB 3.0 Host Controller
49:00.4 Audio device: Advanced Micro Devices, Inc. [AMD] Starship/Matisse HD Audio Controller
4a:00.0 SATA controller: Advanced Micro Devices, Inc. [AMD] FCH SATA Controller [AHCI mode] (rev 51)
4b:00.0 SATA controller: Advanced Micro Devices, Inc. [AMD] FCH SATA Controller [AHCI mode] (rev 51)
80:00.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Root Complex
80:00.2 IOMMU: Advanced Micro Devices, Inc. [AMD] Starship/Matisse IOMMU
80:01.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
80:01.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse GPP Bridge
80:02.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
80:03.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
80:04.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
80:05.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
80:07.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
80:07.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Internal PCIe GPP Bridge 0 to bus[E:B]
80:08.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
80:08.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Internal PCIe GPP Bridge 0 to bus[E:B]
80:08.2 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Internal PCIe GPP Bridge 0 to bus[E:B]
80:08.3 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Internal PCIe GPP Bridge 0 to bus[E:B]
81:00.0 Ethernet controller: Intel Corporation I210 Gigabit Network Connection (rev 03)
82:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Function
82:00.2 Encryption controller: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PTDMA
83:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Reserved SPP
83:00.2 Encryption controller: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PTDMA
84:00.0 SATA controller: Advanced Micro Devices, Inc. [AMD] FCH SATA Controller [AHCI mode] (rev 51)
85:00.0 SATA controller: Advanced Micro Devices, Inc. [AMD] FCH SATA Controller [AHCI mode] (rev 51)
c0:00.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Root Complex
c0:00.2 IOMMU: Advanced Micro Devices, Inc. [AMD] Starship/Matisse IOMMU
c0:01.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
c0:01.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse GPP Bridge
c0:02.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
c0:03.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
c0:04.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
c0:05.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
c0:07.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
c0:07.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Internal PCIe GPP Bridge 0 to bus[E:B]
c0:08.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Host Bridge
c0:08.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Internal PCIe GPP Bridge 0 to bus[E:B]
c1:00.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] Vega 10 PCIe Bridge (rev c3)
c2:00.0 PCI bridge: Advanced Micro Devices, Inc. [AMD] Vega 10 PCIe Bridge
c3:00.0 VGA compatible controller: Advanced Micro Devices, Inc. [AMD/ATI] Vega 10 XL/XT [Radeon RX Vega 56/64] (rev c3)
c3:00.1 Audio device: Advanced Micro Devices, Inc. [AMD/ATI] Vega 10 HDMI Audio [Radeon Vega 56/64]
c4:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PCIe Dummy Function
c4:00.2 Encryption controller: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PTDMA
c5:00.0 Non-Essential Instrumentation [1300]: Advanced Micro Devices, Inc. [AMD] Starship/Matisse Reserved SPP
c5:00.2 Encryption controller: Advanced Micro Devices, Inc. [AMD] Starship/Matisse PTDMA
```
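Because both flash utilities below report no LSI/Avago adapter, it can help to cut that lspci listing down to storage controllers only. This is a sketch: `storage_controllers` is a hypothetical helper that matches lspci's class names for SATA, SAS (`Serial Attached SCSI controller`), RAID and NVMe devices. On the full listing above, it should show the four onboard AMD FCH SATA controllers (4a, 4b, 84, 85) and the four NVMe controllers, with no SAS controller at all.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): keep only storage-class devices from lspci output.
# On the NAS: lspci | storage_controllers
storage_controllers() {
  grep -E 'SATA controller|Serial Attached SCSI controller|RAID bus controller|Non-Volatile memory controller'
}

# Demo on three lines copied from the listing above;
# prints the NVMe and SATA lines but not the Ethernet one.
storage_controllers <<'EOF'
01:00.0 Non-Volatile memory controller: Samsung Electronics Co Ltd NVMe SSD Controller PM9A1/PM9A3/980PRO
42:00.0 Ethernet controller: Intel Corporation Ethernet Controller X550 (rev 01)
4a:00.0 SATA controller: Advanced Micro Devices, Inc. [AMD] FCH SATA Controller [AHCI mode] (rev 51)
EOF
```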

---

```
truenas:~$ sudo sas2flash -list
LSI Corporation SAS2 Flash Utility
Version 20.00.00.00 (2014.09.18)
Copyright (c) 2008-2014 LSI Corporation. All rights reserved
No LSI SAS adapters found! Limited Command Set Available!
ERROR: Command Not allowed without an adapter!
ERROR: Couldn't Create Command -list
Exiting Program.
```

```
truenas:~$ sudo sas3flash - list
Avago Technologies SAS3 Flash Utility
Version 16.00.00.00 (2017.05.02)
Copyright 2008-2017 Avago Technologies. All rights reserved.
No Avago SAS adapters found! Limited Command Set Available!
ERROR: Invalid command -
Exiting Program.
```
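The `ERROR: Invalid command -` from sas3flash comes from a stray space. With `- list`, the shell passes `-` and `list` as two separate arguments, so the tool takes `-` as the command. The intended invocation is `sudo sas3flash -list`. Judging by the `No Avago SAS adapters found!` line, it would most likely report no adapter either. The snippet below just makes the tokenisation visible. `show_args` is a hypothetical stand-in that prints each argument it receives in brackets; it is not part of sas3flash.

```shell
#!/usr/bin/env bash
# Hypothetical stand-in for sas3flash: print each argument it receives in brackets.
show_args() {
  printf '[%s]' "$@"
  printf '\n'
}

show_args - list   # prints [-][list]  (two arguments; "-" is read as the command)
show_args -list    # prints [-list]    (one argument, the form sas3flash expects)
# Real command on the NAS (needs root): sudo sas3flash -list
```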