Good morning,

My primary NAS is struggling to run a replication to a remote NAS, and I am getting the following messages, which make little sense to me. The replication appears to start normally and all the changes are collected, but then the replication hangs on a 2025-02-06 snapshot of a Time Machine dataset.

Error: [2025/03/08 01:01:00] INFO [Thread-144] [zettarepl.paramiko.replication_task__task_1] Connected (version 2.0, client OpenSSH_9.2p1)

…

[2025/03/08 02:04:08] INFO [replication_task__task_1] [zettarepl.replication.run] For replication task ‘task_1’: doing push from ‘local/Time Machine/TimeMachine_CvW’ to ‘remote/local_Backup/Time Machine/TimeMachine_CvW’ of snapshot=None incremental_base=None include_intermediate=None receive_resume_token=‘[EDIT: token ID]’ encryption=False

[2025/03/08 02:04:09] INFO [replication_task__task_1] [zettarepl.transport.ssh_netcat] Automatically chose connect address ‘EDIT: local IP’

[2025/03/08 02:04:09] WARNING [replication_task__task_1] [zettarepl.replication.partially_complete_state] Specified receive_resume_token, but received an error: contains partially-complete state. Allowing ZFS to catch up

[2025/03/08 02:04:09] ERROR [replication_task__task_1] [zettarepl.replication.run] For task ‘task_1’ non-recoverable replication error ContainsPartiallyCompleteState()

I have seen posts in the old forum suggesting that the way to deal with this error is to wait it out, kill the errant process, or reboot the remote machine. Surely there must be a better way?

Waiting it out does not seem to be an option, because my NASes fail nightly to complete the task. I have now rebooted the remote machine twice while trying to resolve this issue. What else can I try?
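
Before killing anything, it helps to confirm that a stuck `zfs receive` really is still running on the remote. A minimal sketch, assuming a Linux-based remote where `ps -eo pid,args` is available; `find_zfs_receive_pids` is a hypothetical helper, not part of TrueNAS or zettarepl, and it only reports PIDs rather than killing anything.

```python
import os


def find_zfs_receive_pids(ps_output: str) -> list[int]:
    """Return PIDs whose command line is a `zfs recv` / `zfs receive` call.

    Expects the text printed by `ps -eo pid,args`: one header line,
    then one "PID PROGRAM ARGS..." row per process.
    """
    pids = []
    for line in ps_output.splitlines()[1:]:  # skip the "PID COMMAND" header
        parts = line.split()
        if len(parts) < 3:
            continue  # no subcommand to inspect
        pid, program, subcommand = parts[0], parts[1], parts[2]
        # basename() also matches full paths such as /usr/sbin/zfs
        if os.path.basename(program) == "zfs" and subcommand in ("recv", "receive"):
            pids.append(int(pid))
    return pids
```

On the remote, the input could come from `subprocess.run(["ps", "-eo", "pid,args"], capture_output=True, text=True).stdout`. Whether a matching process is actually hung, rather than slowly receiving, still has to be judged by hand.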

Does this mean that there is no common snapshot to facilitate the replication of the child dataset “TimeMachine_CvW”?
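
Incremental replication needs a snapshot that exists on both sides to serve as the base. A minimal sketch of that check, assuming both snapshot lists come oldest-first (e.g. from `zfs list -H -t snapshot -o name -s creation`) and matching on the snapshot name after `@`; the function name is hypothetical. ZFS itself identifies snapshots by GUID, so matching by name is a simplification.

```python
from typing import Optional


def latest_common_snapshot(source: list[str], target: list[str]) -> Optional[str]:
    """Return the newest snapshot name (the part after '@') present on both sides.

    Both lists hold full names like "pool/ds@name", ordered oldest to newest.
    """
    target_names = {snap.split("@", 1)[1] for snap in target}
    # Walk the source newest-first so the first hit is the latest common one.
    for snap in reversed(source):
        name = snap.split("@", 1)[1]
        if name in target_names:
            return name
    return None  # no common base for an incremental send
```

If this returns `None`, there is no base from which an incremental send could start.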


The curious thing is that this system has worked for over a year. As best I can tell, the current issue is a familiar problem, namely that:

- Some event beyond my control breaks the pipe between the two systems before a given snapshot transfer has completed. This usually happens when a large snapshot is generated (i.e. lots of changes on my laptop → Time Machine → local NAS → replication to remote NAS).

- The supposedly available replication resume function is not being invoked by 24.10.2. Instead, the replication keeps failing, and only a restart of the remote NAS reliably kills the hung process.
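
In plain ZFS, the resume mechanism works roughly like this: the receiver runs `zfs receive -s`, so an interrupted stream leaves a `receive_resume_token` on the target, and the sender then restarts with `zfs send -t <token>` instead of starting over. A minimal sketch of that decision, assuming the token has already been read from the target (for example with `zfs get -H -o value receive_resume_token <dataset>`, which prints `-` when there is none); `plan_push` is hypothetical and is not how zettarepl is implemented internally.

```python
from typing import List, Optional, Tuple


def plan_push(source_ds: str, target_ds: str, snapshot: str,
              incremental_base: Optional[str],
              resume_token: Optional[str]) -> Tuple[List[str], List[str]]:
    """Return (send_argv, receive_argv) for one resumable ZFS push."""
    # -s: keep partially received state on the target if the stream breaks.
    receive = ["zfs", "receive", "-s", target_ds]
    # "-" is what `zfs get -o value` prints when no token is set.
    if resume_token and resume_token != "-":
        # Resume the interrupted stream; the token encodes what to send.
        return ["zfs", "send", "-t", resume_token], receive
    if incremental_base:
        # Send only the changes between the common base and the new snapshot.
        return (["zfs", "send", "-i", f"{source_ds}@{incremental_base}",
                 f"{source_ds}@{snapshot}"], receive)
    # No common base: full stream (only suitable for a target that does not exist yet).
    return ["zfs", "send", f"{source_ds}@{snapshot}"], receive
```

The two argument lists would be connected by a pipe, with the send running on the source and the receive running on the target over SSH.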

I doubt iXsystems encounters this issue much, since their customers have high-speed connections between local and remote systems. As such, a missing resume function, or ZFS replication middleware with its hair on fire, is likely an edge case for the tech support team.

Not knowing your schedule, I have to ask: is it possible that pruning is occurring before a replication can complete?


Have you tried removing one or two of the most recent snapshots on the receive system to see if it can start again from an earlier point in time?


That’s a really good point. I doubt it, as I let this error fester for a while, since replications usually fix themselves (e.g. the remote system has a power outage, so the task fails today but completes tomorrow).

In this case, the replications keep failing, and the task backlog has grown to about 500 MB of content, which suggests a very long transfer time thanks to Comcast. Without a working resume function, any interruption kills the entire snapshot transfer and appears to put the remote machine in a funk.
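
Whether a transfer can finish without resume comes down to simple arithmetic: the time needed to push the backlog versus how long the link stays up. A sketch, assuming decimal megabytes, no protocol overhead or compression, and an upload rate and drop interval that you would have to measure yourself; both function names are hypothetical.

```python
def transfer_hours(size_bytes: float, upload_mbit_per_s: float) -> float:
    """Hours needed to push size_bytes over the uplink, ignoring overhead."""
    bits = size_bytes * 8
    return bits / (upload_mbit_per_s * 1_000_000) / 3600


def completes_without_resume(size_bytes: float, upload_mbit_per_s: float,
                             hours_between_drops: float) -> bool:
    """Without resume, a stream only finishes if it fits between two link drops."""
    return transfer_hours(size_bytes, upload_mbit_per_s) < hours_between_drops
```

For example, `transfer_hours(500e6, 10)` is about 0.11 h (400 s) at an assumed 10 Mbit/s uplink; a much slower effective rate or a far larger backlog quickly pushes that past the time between drops.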

I can certainly do that. I did check the receiving machine for any 2025-02-06 snapshots of the Time Machine dataset in question, and there were none. Otherwise, I would have deleted them, since this issue has affected me in the past.

Do I have to do anything special in TrueNAS to “legally” delete the 2025-02-05 Time Machine snapshot so that it knows to retransmit it, or can I just delete the remote snapshot and let ZFS replicate it again?

What’s your snapshot schedule on your primary system for the dataset in question?


Nightly with two-week retention, weekly with three-month retention.

The remote sets follow the local cadence.
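
This schedule can be checked against the dates in the log. A sketch that computes when a snapshot becomes eligible for pruning, assuming retention is counted from the snapshot date and approximating “3 months” as 13 weeks; the function names and the `RETENTION` table are hypothetical, and TrueNAS's actual pruning rules may differ.

```python
from datetime import date, timedelta

# Assumed windows from the schedule above; timedelta has no month unit,
# so "3 months" is approximated as 13 weeks.
RETENTION = {"nightly": timedelta(weeks=2), "weekly": timedelta(weeks=13)}


def expiry(taken: date, kind: str) -> date:
    """Date from which a snapshot of this kind becomes eligible for pruning."""
    return taken + RETENTION[kind]


def pruned_before(taken: date, kind: str, today: date) -> bool:
    """True if the snapshot's retention has already run out by `today`."""
    return today >= expiry(taken, kind)
```

Under these assumptions, a nightly snapshot from 2025-02-06 expires on 2025-02-20, well before the 2025-03-08 log entry, while a weekly one would last until 2025-05-08.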

OK, so can you see any snapshots on the receive system relating to this dataset?

I presume the receive side is not being limited by space/quota?

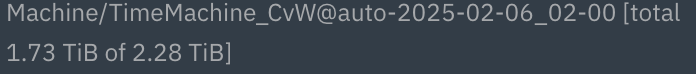

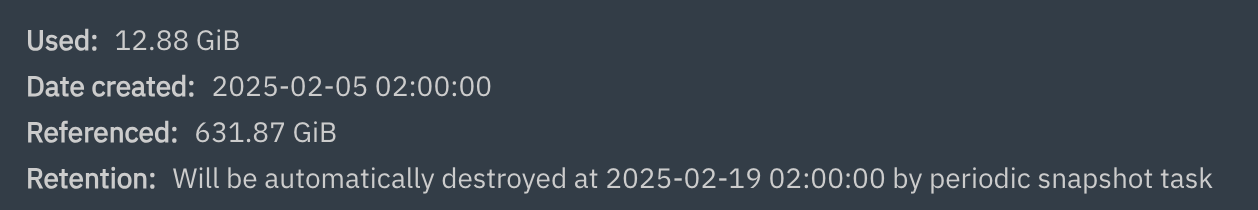

Sure can… and lookie here, the 2025-02-05 snapshot is showing zero bytes, while the local machine is currently trying to upload the snapshot for 2025-02-06.

28% full, oodles of space, no quota. I’m guessing the mangled 2025-02-05 snapshot is causing unhappiness. Can I simply

- stop the replication process on the local machine

- delete the 2025-02-05 snapshot on the remote system via the GUI

- restart the local replication process?

That only means that there are 0 bytes worth of “differences” between this snapshot and all other snapshots (including the live filesystem), i.e. no blocks are held by this snapshot alone.
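
A toy model of what the USED column reports for a snapshot, and of why the same snapshot can show 0 bytes on the receive side but more than zero locally. Each snapshot or live filesystem is modelled as a set of block IDs, which is a simplification (real accounting is in bytes and includes metadata), and `unique_blocks` is a hypothetical helper. On a replication target, the live filesystem typically still references everything the newest received snapshot does, so nothing is unique to it; on the source, later changes free blocks that only that snapshot still holds.

```python
def unique_blocks(views: dict[str, set[int]], snapshot: str) -> set[int]:
    """Blocks referenced by `snapshot` and by no other snapshot or live filesystem.

    A snapshot's USED roughly corresponds to the size of this set.
    """
    others = set().union(*(blocks for name, blocks in views.items() if name != snapshot))
    return views[snapshot] - others


# Receive side: the live filesystem still matches the newest received snapshot.
remote = {"auto-2025-02-05": {1, 2, 3}, "live": {1, 2, 3}}
# Source side: block 3 was freed after 02-05, so only that snapshot keeps it.
local = {"auto-2025-02-05": {1, 2, 3}, "auto-2025-02-06": {1, 2, 4}, "live": {1, 4, 5}}
```

Here `unique_blocks(remote, "auto-2025-02-05")` is empty while `unique_blocks(local, "auto-2025-02-05")` is `{3}`.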


But the local system shows more than zero bytes for this particular snapshot… or am I reading this wrong?

I’d be tempted to delete the most recent snap on the receive side and then let replication try to untwist its knickers. I find it’s normally very good at that given the chance.

If you are able to, a reboot on the receive side wouldn’t hurt either, to force the send to fail and start again.
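
Deleting the most recent snapshot on the receive side, as suggested above, can be previewed before anything is removed. A minimal sketch, assuming the snapshot list comes from `zfs list -H -t snapshot -o name -s creation` run on the receive system (oldest first); the helper names are hypothetical. `zfs destroy -nv` performs a dry run and prints what would be destroyed.

```python
from typing import Optional


def newest_snapshot(zfs_list_output: str, dataset: str) -> Optional[str]:
    """Pick the newest snapshot of `dataset` from oldest-first `zfs list` output."""
    prefix = dataset + "@"  # excludes snapshots of child datasets
    snaps = [line for line in zfs_list_output.splitlines() if line.startswith(prefix)]
    return snaps[-1] if snaps else None


def destroy_dry_run(snapshot: str) -> list[str]:
    """argv for a dry run (-n) that reports (-v) what `zfs destroy` would remove."""
    return ["zfs", "destroy", "-nv", snapshot]
```

Building the command as an argument list avoids shell quoting problems with dataset names that contain spaces, such as `Time Machine`.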


Is this Core? I know that Core has a longstanding bug in the GUI where you cannot always trust the information about snapshots.

What is revealed if you use the command-line?

`zfs list -o name,used,refer <pool>/<dataset>/<child>@auto-2025-02-05_02-00`
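
Since the GUI figures may not be trustworthy, the same query can also be read in scripted form. With `-H` (no header, tab-separated) and `-p` (exact byte values instead of rounded ones), the output of `zfs list -Hp -o name,used,refer <snapshot>` is easy to parse. A minimal sketch; `parse_zfs_list` is a hypothetical helper.

```python
def parse_zfs_list(output: str) -> dict[str, dict[str, int]]:
    """Parse `zfs list -Hp -o name,used,refer` output into {name: {"used", "refer"}}.

    Splitting on tabs keeps dataset names that contain spaces intact.
    """
    rows = {}
    for line in output.splitlines():
        if not line:
            continue  # ignore blank lines
        name, used, refer = line.split("\t")
        rows[name] = {"used": int(used), "refer": int(refer)}
    return rows
```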


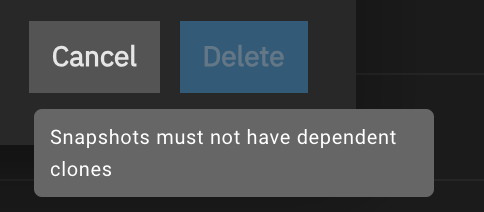

Nope. It now claims it has dependent sets.

Interesting, so trying to delete that most recent snapshot on the receive side presents this message?

If so, I would disable replication on the primary, restart the receive system, and then try deleting it again.
