So I have a couple of Debian VMs that are highly configured and working great. Correct me if I am wrong, but these won’t run natively in Fangtooth, and to run them on Fangtooth I would have to totally rebuild them in the new environment. Is that true?

You will have to reconfigure the VM, but you can migrate the existing zvol.

So here is the way I am reading this. If I have an EEL system with a Debian VM running specialty apps, then the only thing that will convert over will be the zvol where the VM was running. From there I will have to load a new Debian image, configure it, and load and configure all my specialty apps. Right?

Not as I understand it. Your Debian is in a zvol, that’s where the storage of the VM lives.

So configure a new VM with cores, memory and devices as you need them, attach the Debian zvol, and start.

Debian will come up, and with it all the apps installed on it.
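To recreate the VM with matching cores, memory and devices, it helps to write down the old settings before updating. Here is a minimal sketch that summarises them from a JSON dump of the old VM list. It assumes the dump comes from something like `midclt call vm.query` on the pre-Fangtooth release, and that each entry has `name`, `vcpus`, `cores`, `threads`, `memory`, `bootloader` and a `devices` list with `dtype` and `attributes`. Those field names are assumptions and may differ on your version, and the sample data is made up.

```python
import json


def summarize_vms(vm_query_json):
    """Pull out the settings needed to recreate each VM by hand.

    The field names below are assumptions about the JSON layout, not a
    documented contract; missing fields simply come back as None.
    """
    summaries = []
    for vm in json.loads(vm_query_json):
        devices = vm.get("devices", [])
        summaries.append({
            "name": vm.get("name"),
            "vcpus": vm.get("vcpus"),
            "cores": vm.get("cores"),
            "threads": vm.get("threads"),
            "memory": vm.get("memory"),
            "bootloader": vm.get("bootloader"),
            # zvol paths to re-attach under Disks in the new instance
            "disks": [d.get("attributes", {}).get("path")
                      for d in devices if d.get("dtype") == "DISK"],
            # old NIC MAC addresses, useful when the guest's network changes
            "macs": [d.get("attributes", {}).get("mac")
                     for d in devices if d.get("dtype") == "NIC"],
        })
    return summaries


# Hypothetical sample data, shaped like the assumed layout.
SAMPLE = json.dumps([{
    "name": "debian",
    "vcpus": 1, "cores": 4, "threads": 1,
    "memory": 8192,
    "bootloader": "UEFI",
    "devices": [
        {"dtype": "DISK", "attributes": {"path": "/dev/zvol/tank/vms/debian"}},
        {"dtype": "NIC", "attributes": {"mac": "00:a0:98:11:22:33"}},
    ],
}])

if __name__ == "__main__":
    for summary in summarize_vms(SAMPLE):
        print(summary)
```

Saving the printed summary somewhere off the NAS before the upgrade gives you the zvol path and hardware settings to re-enter in the new instance.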

If that is the way it works, I was reading the conversion process totally wrong. The back end of the VM is what needs to be converted. Then point the zvol with the Debian image to it and boot…

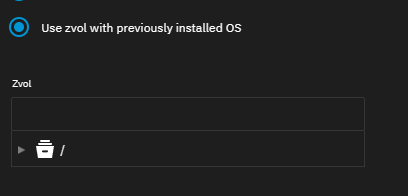

Correct, and actually the process has been simplified a little since that migration process was written, by the addition of this image option.

Cool, that will work. I appreciate all the work that has been put into this update. I know it has been hours and hours in development.

I’m stumbling over this too, because of this part of the manual migration instructions in the release notes:

1. Recreate the VM

* Click **Create New Instance**.

* Select **VM** as the **Virtualization Method**.

* Upload or select the ISO file for installation.

* Reconfigure the VM using the settings you recorded before updating.

* Under **Disks**, select the existing zvol for storage.

* Click **Create**.

This seems to me to imply you have to reinstall the OS from the ISO, rather than just attach the zvol with the existing installed OS.

That was the case in BETA, but it has been improved. For now, until it gets merged, you can see the updated release-notes process in “Release note clarifications” by DjP-iX (Pull Request #3584, truenas/documentation on GitHub).

- After upgrading to TrueNAS 25.04, go to Instances (formerly Virtualization).

- Click Select Pool to open Global Settings.

- Use the Pool dropdown to select a storage pool for virtualization.

- Accept default networking settings or modify as needed, then click Save.

I just wanted to check: we can choose more than one pool, right?

I have 2x W11 VMs, each on its own pool.

Not currently.

That feature is coming one day.

But when you “create” a VM, you can select an already existing zvol to hook up.

I’m still stumbling over moving my Windows VM (set up with virtio drivers) to Fangtooth. On EE, the VM pulls an IP from my router, and I can access the VM with RDP. Works fine. On Fangtooth, I use the same zvol, and the VM is “running” with the default bridge. But I can’t figure out how to access it with RDP. I’m stuck on the “Accept default networking settings or modify as needed” step.

OK, I may need to move some zvols and fiddle with my pools by the looks of it, then.

I don’t know how Windows reacts, but on Linux, after mounting the volume on a new virtual instance, I noticed the underlying virtual hardware is slightly different.

I was more concerned about UEFI vs BIOS config and secure boot, but that just worked for me. In the past I’ve had to drop into UEFI and configure boot from its UEFI CLI.

On the network side, I had to reconfigure the interfaces, so I’d check whether the devices are installed correctly on Windows. You might need to uninstall the old NICs on Windows, if you didn’t do it beforehand.

At the moment, TrueNAS’s UI doesn’t have much info for troubleshooting. I’d suggest running “incus config show [VM NAME]” and comparing the MAC addresses with those seen inside the VM.

Hope this helps.
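The comparison can be scripted. A minimal sketch, assuming the YAML printed by `incus config show` contains lines ending in `hwaddr:` followed by a MAC (for example a `volatile.eth0.hwaddr` key); the sample text and the helper names are made up for illustration, and your output may look different.

```python
import re

# Matches lines such as "  volatile.eth0.hwaddr: 00:16:3e:aa:bb:cc",
# with or without quotes around the MAC address.
HWADDR_RE = re.compile(
    r'^[ \t]*(\S*hwaddr):[ \t]*"?([0-9A-Fa-f]{2}(?::[0-9A-Fa-f]{2}){5})"?[ \t]*$',
    re.MULTILINE,
)


def find_hwaddrs(config_text):
    """Return {config key: lowercase MAC} for every hwaddr line found."""
    return {key: mac.lower() for key, mac in HWADDR_RE.findall(config_text)}


def missing_in_guest(config_text, guest_macs):
    """Return the MACs Incus reports that the guest does not show."""
    seen = {mac.lower() for mac in guest_macs}
    return sorted(set(find_hwaddrs(config_text).values()) - seen)


# Hypothetical output; paste the real text from your own system instead.
SAMPLE = """\
architecture: x86_64
config:
  limits.cpu: "4"
  volatile.eth0.hwaddr: 00:16:3e:aa:bb:cc
devices:
  eth0:
    nictype: bridged
    parent: br0
    type: nic
"""

if __name__ == "__main__":
    print(find_hwaddrs(SAMPLE))
    # MACs as listed inside the guest (e.g. from "ip link" or "ipconfig /all")
    print(missing_in_guest(SAMPLE, ["00:16:3E:AA:BB:CC"]))
```

An empty result from `missing_in_guest` means every MAC in the Incus config also shows up in the guest; anything left over points at a NIC the guest has not picked up.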

Very helpful, thanks. A little trial and error was needed: the MAC addresses of the four ethernet ports reported by the IPMI, the MAC address reported by Incus, and the EN0 options are all different, but it’s working now (and the MAC address in the Incus config is different from the MAC address that the router is seeing).

The current implementation is nearly unusable for VMs… it’s not even possible to define the MAC address for each adapter. This is a huge step back.

In some situations it can change the interface name in the guest, and with it some IP configuration and routing… it’s a pain to change it in the guest via VNC or serial console.

Yeah, I decided to hold off and stay with my stable EEL VM environment. I would suggest others do the same if you have VMs that are of critical use. Hopefully they will release some fixes soon.

The more or less official advice for those with critical VMs is to wait for 25.10, where the API is expected to stabilise and some automatic VM conversion might be implemented.

Yep, I am staying on EEL till then, and even after 25.10 is released I will give it some time to prove itself. I have a feeling that the developers did not realize the need for a stable VM environment when they chose to break the heck out of it. I am seeing lots of “Oh crap, I did not realize when I upgraded it would break all my VMs” …

I’d say the developers do realise that stability is required, but many “Community” users do not realise that they are beta testers even when installing a “release” version.