The GPU (0a:00.0) and its audio device (0a:00.1) each have their own IOMMU group, if that’s what you’re looking for:

IOMMU groups

IOMMU Group 0 00:01.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Renoir PCIe Dummy Host Bridge [1022:1632]

IOMMU Group 1 00:01.1 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Renoir PCIe GPP Bridge [1022:1633]

IOMMU Group 10 02:00.0 USB controller [0c03]: Advanced Micro Devices, Inc. [AMD] 400 Series Chipset USB 3.1 xHCI Compliant Host Controller [1022:43d5] (rev 01)

IOMMU Group 10 02:00.2 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] 400 Series Chipset PCIe Bridge [1022:43c6] (rev 01)

IOMMU Group 10 03:00.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] 400 Series Chipset PCIe Port [1022:43c7] (rev 01)

IOMMU Group 10 03:04.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] 400 Series Chipset PCIe Port [1022:43c7] (rev 01)

IOMMU Group 10 03:05.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] 400 Series Chipset PCIe Port [1022:43c7] (rev 01)

IOMMU Group 10 03:06.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] 400 Series Chipset PCIe Port [1022:43c7] (rev 01)

IOMMU Group 10 03:07.0 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] 400 Series Chipset PCIe Port [1022:43c7] (rev 01)

IOMMU Group 10 05:00.0 Ethernet controller [0200]: Realtek Semiconductor Co., Ltd. RTL8125 2.5GbE Controller [10ec:8125] (rev 05)

IOMMU Group 10 07:00.0 VGA compatible controller [0300]: NVIDIA Corporation GT218 [NVS 300] [10de:10d8] (rev a2)

IOMMU Group 10 07:00.1 Audio device [0403]: NVIDIA Corporation High Definition Audio Controller [10de:0be3] (rev a1)

IOMMU Group 10 08:00.0 Ethernet controller [0200]: Realtek Semiconductor Co., Ltd. RTL8111/8168/8411 PCI Express Gigabit Ethernet Controller [10ec:8168] (rev 15)

IOMMU Group 11 09:00.0 Non-Volatile memory controller [0108]: Intel Corporation NVMe Optane Memory Series [8086:2522]

IOMMU Group 12 0a:00.0 VGA compatible controller [0300]: Advanced Micro Devices, Inc. [AMD/ATI] Renoir [1002:1636] (rev d9)

IOMMU Group 13 0a:00.1 Audio device [0403]: Advanced Micro Devices, Inc. [AMD/ATI] Renoir Radeon High Definition Audio Controller [1002:1637]

IOMMU Group 14 0a:00.2 Encryption controller [1080]: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 10h-1fh) Platform Security Processor [1022:15df]

IOMMU Group 15 0a:00.3 USB controller [0c03]: Advanced Micro Devices, Inc. [AMD] Renoir/Cezanne USB 3.1 [1022:1639]

IOMMU Group 16 0a:00.4 USB controller [0c03]: Advanced Micro Devices, Inc. [AMD] Renoir/Cezanne USB 3.1 [1022:1639]

IOMMU Group 17 0a:00.6 Audio device [0403]: Advanced Micro Devices, Inc. [AMD] Family 17h/19h HD Audio Controller [1022:15e3]

IOMMU Group 2 00:02.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Renoir PCIe Dummy Host Bridge [1022:1632]

IOMMU Group 3 00:02.1 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Renoir/Cezanne PCIe GPP Bridge [1022:1634]

IOMMU Group 4 00:02.2 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Renoir/Cezanne PCIe GPP Bridge [1022:1634]

IOMMU Group 5 00:08.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Renoir PCIe Dummy Host Bridge [1022:1632]

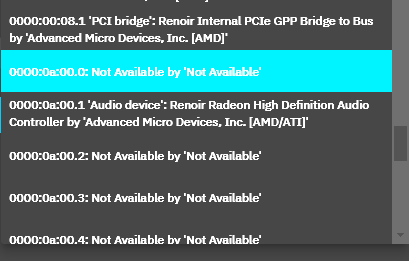

IOMMU Group 6 00:08.1 PCI bridge [0604]: Advanced Micro Devices, Inc. [AMD] Renoir Internal PCIe GPP Bridge to Bus [1022:1635]

IOMMU Group 7 00:14.0 SMBus [0c05]: Advanced Micro Devices, Inc. [AMD] FCH SMBus Controller [1022:790b] (rev 51)

IOMMU Group 7 00:14.3 ISA bridge [0601]: Advanced Micro Devices, Inc. [AMD] FCH LPC Bridge [1022:790e] (rev 51)

IOMMU Group 8 00:18.0 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Renoir Device 24: Function 0 [1022:1448]

IOMMU Group 8 00:18.1 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Renoir Device 24: Function 1 [1022:1449]

IOMMU Group 8 00:18.2 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Renoir Device 24: Function 2 [1022:144a]

IOMMU Group 8 00:18.3 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Renoir Device 24: Function 3 [1022:144b]

IOMMU Group 8 00:18.4 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Renoir Device 24: Function 4 [1022:144c]

IOMMU Group 8 00:18.5 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Renoir Device 24: Function 5 [1022:144d]

IOMMU Group 8 00:18.6 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Renoir Device 24: Function 6 [1022:144e]

IOMMU Group 8 00:18.7 Host bridge [0600]: Advanced Micro Devices, Inc. [AMD] Renoir Device 24: Function 7 [1022:144f]

IOMMU Group 9 01:00.0 RAID bus controller [0104]: Broadcom / LSI SAS2008 PCI-Express Fusion-MPT SAS-2 [Falcon] [1000:0072] (rev 03)
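A listing like this is presumably produced by the usual loop over `/sys/kernel/iommu_groups` combined with `lspci -nns`. As a minimal sketch (assuming a Linux host with the IOMMU enabled; `iommu_groups` and `is_isolated` are hypothetical helper names), the same grouping can be read straight from sysfs and used to check whether a given PCI function is alone in its group:

```python
from pathlib import Path

SYSFS_IOMMU_GROUPS = "/sys/kernel/iommu_groups"


def iommu_groups(root=SYSFS_IOMMU_GROUPS):
    """Map IOMMU group number -> sorted list of PCI addresses in that group."""
    groups = {}
    root_path = Path(root)
    if not root_path.is_dir():  # IOMMU disabled, or not running on Linux
        return groups
    for group_dir in root_path.iterdir():
        devices_dir = group_dir / "devices"
        if devices_dir.is_dir():
            # Entries are named after the full PCI address, e.g. 0000:0a:00.0
            groups[int(group_dir.name)] = sorted(d.name for d in devices_dir.iterdir())
    return groups


def is_isolated(groups, address):
    """True if `address` is the only device in its IOMMU group."""
    for members in groups.values():
        if address in members:
            return members == [address]
    raise LookupError(f"{address} is not in any IOMMU group")


if __name__ == "__main__":
    groups = iommu_groups()
    for number in sorted(groups):  # numeric order (0, 1, 2, ...), not 0, 1, 10, ...
        for address in groups[number]:
            print(f"IOMMU Group {number} {address}")
    # The two Renoir iGPU functions being passed through in this thread
    for address in ("0000:0a:00.0", "0000:0a:00.1"):
        try:
            print(address, "isolated:", is_isolated(groups, address))
        except LookupError as err:
            print(err)
```

Device names are left out here; `lspci -nns <address>` adds them. In the listing above, the NVIDIA NVS 300 (07:00.x) shares group 10 with the chipset devices, while the Renoir functions 0a:00.0 and 0a:00.1 sit alone in groups 12 and 13.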

I had already tried adding it to an existing VM, but through Edit → GPU.

I have now disabled “Ensure Display Device” and manually added the PCIe devices 0a:00.0 and 0a:00.1 as passthrough devices. This does not produce a warning in the UI, but I still get these errors in the messages log:

```
Aug 2 08:14:37 truenas1 kernel: pcieport 0000:00:08.1: broken device, retraining non-functional downstream link at 2.5GT/s
Aug 2 08:14:38 truenas1 kernel: pcieport 0000:00:08.1: retraining failed
Aug 2 08:14:40 truenas1 kernel: pcieport 0000:00:08.1: broken device, retraining non-functional downstream link at 2.5GT/s
Aug 2 08:14:41 truenas1 kernel: pcieport 0000:00:08.1: retraining failed
Aug 2 08:14:41 truenas1 kernel: vfio-pci 0000:0a:00.0: not ready 1023ms after bus reset; waiting
Aug 2 08:14:42 truenas1 kernel: vfio-pci 0000:0a:00.0: not ready 2047ms after bus reset; waiting
Aug 2 08:14:44 truenas1 kernel: vfio-pci 0000:0a:00.0: not ready 4095ms after bus reset; waiting
Aug 2 08:14:48 truenas1 kernel: vfio-pci 0000:0a:00.0: not ready 8191ms after bus reset; waiting
Aug 2 08:14:57 truenas1 kernel: vfio-pci 0000:0a:00.0: not ready 16383ms after bus reset; waiting
Aug 2 08:15:14 truenas1 kernel: vfio-pci 0000:0a:00.0: not ready 32767ms after bus reset; waiting
Aug 2 08:15:49 truenas1 kernel: vfio-pci 0000:0a:00.0: not ready 65535ms after bus reset; giving up
Aug 2 08:15:50 truenas1 kernel: vfio-pci 0000:0a:00.0: vgaarb: VGA decodes changed: olddecodes=io+mem,decodes=io+mem:owns=none
Aug 2 08:15:50 truenas1 kernel: [drm] initializing kernel modesetting (RENOIR 0x1002:0x1636 0x1043:0x87E1 0xD9).
Aug 2 08:15:50 truenas1 kernel: [drm] register mmio base: 0xF5F00000
Aug 2 08:15:50 truenas1 kernel: [drm] register mmio size: 524288
Aug 2 08:15:50 truenas1 kernel: amdgpu 0000:0a:00.0: amdgpu: amdgpu: finishing device.
Aug 2 08:15:50 truenas1 kernel: amdgpu: probe of 0000:0a:00.0 failed with error -22
Aug 2 08:15:50 truenas1 kernel: snd_hda_intel 0000:0a:00.1: Handle vga_switcheroo audio client
Aug 2 08:15:50 truenas1 kernel: snd_hda_intel 0000:0a:00.1: number of I/O streams is 30, forcing separate stream tags
```
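In this log, the bridge 0000:00:08.1 (handled by `pcieport`) fails to retrain its link just before 0000:0a:00.0 stops responding after the bus reset. Connecting the two assumes that 00:08.1 is the bridge directly above bus 0a; its name in the IOMMU listing ("Internal PCIe GPP Bridge to Bus") suggests so, and the device's sysfs path can confirm it. A minimal sketch, assuming a Linux host (`upstream_bridges` is a hypothetical helper name):

```python
import re
from pathlib import Path

# Full PCI address: domain:bus:device.function, e.g. 0000:00:08.1
PCI_ADDRESS = re.compile(r"^[0-9a-f]{4,}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]$")


def upstream_bridges(address, devices_root="/sys/bus/pci/devices"):
    """Return the PCI bridges above `address`, nearest first.

    /sys/bus/pci/devices/<address> is a symlink into /sys/devices/pci<domain>:<bus>/...,
    and every PCI-address component along that path is a bridge above the device.
    """
    real_path = (Path(devices_root) / address).resolve()
    return [p.name for p in real_path.parents if PCI_ADDRESS.match(p.name)]


if __name__ == "__main__":
    # Empty list if the device does not exist or sits directly on the root bus
    print(upstream_bridges("0000:0a:00.0"))
```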

Also, I have no idea why it mentions snd_hda_intel.
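`snd_hda_intel` is the kernel's HD Audio controller driver, which also serves the HDMI/DP audio functions of AMD and NVIDIA GPUs. In the log it is reporting on 0000:0a:00.1, which the IOMMU listing identifies as the Renoir Radeon High Definition Audio Controller, i.e. the iGPU's audio function. To check which driver owns each function at a given moment (for example `vfio-pci` versus `amdgpu` or `snd_hda_intel`), the `driver` symlink in sysfs can be read. A minimal sketch, assuming a Linux host (`bound_driver` is a hypothetical helper name):

```python
import os
from pathlib import Path


def bound_driver(address, devices_root="/sys/bus/pci/devices"):
    """Name of the kernel driver bound to a PCI function, or None if unbound."""
    link = Path(devices_root) / address / "driver"
    if not link.is_symlink():  # no "driver" link means no driver is bound
        return None
    # The link points at .../bus/pci/drivers/<driver name>
    return Path(os.readlink(link)).name


if __name__ == "__main__":
    for address in ("0000:0a:00.0", "0000:0a:00.1"):
        print(address, "->", bound_driver(address))
```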

The VM can’t be started and logs this:

```
2024-08-02T06:14:36.043655Z qemu-system-x86_64: VFIO_MAP_DMA failed: Bad address
2024-08-02T06:14:36.044566Z qemu-system-x86_64: vfio_dma_map(0x5608b31db850, 0xf4000000, 0x4000000, 0x7fa423e00000) = -14 (Bad address)
qemu: hardware error: vfio: DMA mapping failed, unable to continue
```
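The numbers in the `vfio_dma_map` error can be decoded. Assuming the four arguments are, in order, the VFIO container pointer, the IOVA, the mapping size, and the host virtual address (this matches QEMU's error message format as far as I know, but treat it as an assumption), the failed mapping covers a 64 MiB window, and the return value -14 is `EFAULT`, which is where "Bad address" comes from. A small worked check (`decode_dma_map` is a hypothetical helper):

```python
import errno


def decode_dma_map(iova, size):
    """Return (first_byte, last_byte, size_in_MiB) for a vfio_dma_map() call."""
    return iova, iova + size - 1, size / (1024 * 1024)


first, last, mib = decode_dma_map(0xF4000000, 0x4000000)
print(f"IOVA {first:#x}-{last:#x}, {mib:.0f} MiB")  # IOVA 0xf4000000-0xf7ffffff, 64 MiB

# -14 is a negated errno value; 14 is EFAULT on Linux
print(errno.errorcode[14])
```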