Hello, I experienced the exact same problem as described here and managed to fix it fairly quickly, in much the same way as described by user cgfrost. Since that answer was pretty short, I wanted to write a guide for less experienced users. The main difference is that a quick wipe should be enough to solve the issue; a wipe to zeros certainly also works but takes much longer.

In my setup I am using a simple mirror with two HDDs. I imagine the process is very similar for a RAID setup, but please only follow these instructions if you also have a mirror setup.

The problem:

############

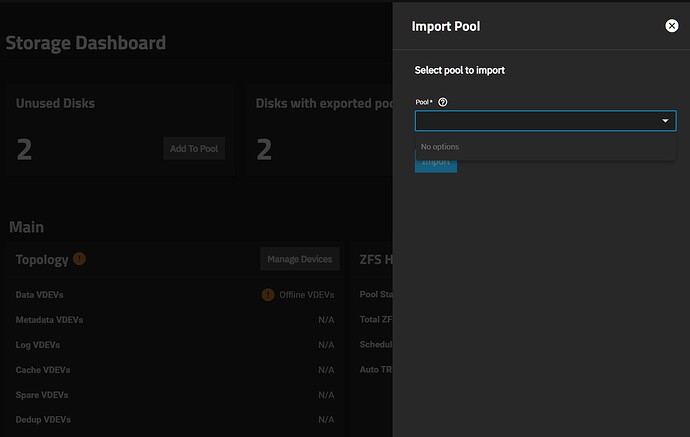

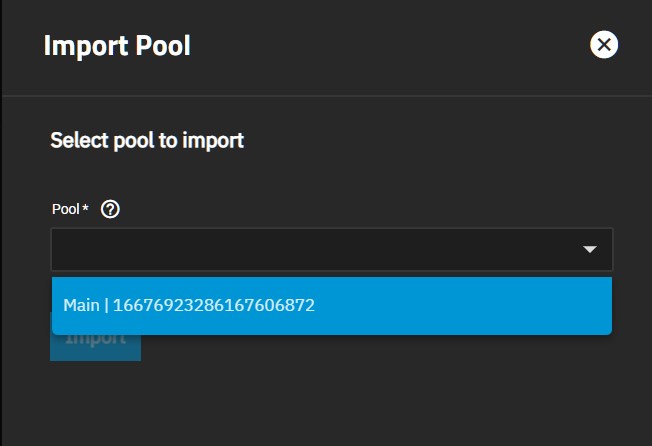

It seems that the drives that show up as exported after a reboot have multiple filesystem entries, which confuses TrueNAS so that it does not automatically mount the pool. The pool is still present, though, and can be manually exported and reimported.

For my drives, the command sudo blkid --probe /dev/sda1 gives the following output:

blkid: /dev/sda1: ambivalent result (probably more filesystems on the device, use wipefs(8) to see more details)
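If you want to check several partitions in one go, something like the following might help. It is only a minimal sketch: the function name classify_blkid is made up for illustration, and all it does is look for the "ambivalent result" message shown above, or for a TYPE="zfs_member" entry, in whatever text it is given.

```shell
# Hypothetical helper (not part of blkid or TrueNAS): classify the text
# printed by `blkid --probe`, read from stdin.
# Prints "ambivalent", "zfs_member", or "other".
classify_blkid() {
    cb_text=$(cat)
    case "$cb_text" in
        *"ambivalent result"*)  echo "ambivalent" ;;
        *'TYPE="zfs_member"'*)  echo "zfs_member" ;;
        *)                      echo "other" ;;
    esac
}

# Possible usage. Assumption: the warning is printed on stderr, hence 2>&1.
#   for part in /dev/sda1 /dev/sdb1; do
#       printf '%s: ' "$part"
#       sudo blkid --probe "$part" 2>&1 | classify_blkid
#   done
```

A partition reported as "ambivalent" would be one that still needs the procedure below.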

The Solution:

#############

Before doing anything, make sure to back up your data, as a mistake in following these instructions can lead to data loss!

Repeat the following for each affected disk, one disk at a time (in my case first for disk sda and then for disk sdb):

########################

Wipe the drive:

###############

Go to “Storage” and click on “Disks”.

Select the disk (sda).

Select “Wipe”.

Choose the option “Quick”.

After the drive has been wiped, you need to resilver it so that your files are copied back from the other disk onto the wiped one.

Resilvering:

############

Go to “Storage”.

On your data pool, under “Topology”, click on “Manage Devices”.

Select the drive that we just wiped (you should see two drives, one named sdb and another one that was earlier named sda) and click on “Replace”.

In the drop-down menu you can select the name of the previously wiped drive, sda.

Select it and go back to the “Storage” menu.

You should see that the resilvering process has started under the “ZFS Health” tab.

After the drive is resilvered, reload the page: everything on “Storage” should be green, indicating that both disks in your mirror are working fine.
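If you prefer the shell over the web UI, the resilver progress can also be read from the output of zpool status. The following is just a sketch under a few assumptions: resilver_state is a made-up helper name, the pool name Data-Pool is taken from my setup, and the exact wording of the "scan:" line may differ between OpenZFS versions.

```shell
# Hypothetical helper: read `zpool status` output from stdin and report
# whether a resilver is running or has finished.
# Prints "in-progress", "done", or "unknown".
resilver_state() {
    rs_text=$(cat)
    case "$rs_text" in
        *"resilver in progress"*)  echo "in-progress" ;;
        *"scan: resilvered"*)      echo "done" ;;
        *)                         echo "unknown" ;;
    esac
}

# Possible usage (pool name is an assumption, replace it with yours):
#   sudo zpool status Data-Pool | resilver_state
```

Only when this reports "done" (and the UI is green) would I move on to the confirmation step.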

Confirm that the procedure has worked

You can confirm by going to “Storage” and clicking on “Disks” to check that all disks (for me sda and sdb) are showing the correct data pool instead of something like unassigned.

Since the drive was completely wiped and only the partition with your data pool was copied over in the resilvering process, the issue with the multiple filesystems should now be fixed. You can check by running the command sudo blkid --probe /dev/sda1 again. For partition sdb1, which has not been processed yet, the command still shows the same error mentioned above. But for the now fixed partition sda1 I get:

/dev/sda1: VERSION="5000" LABEL="Data-Pool" UUID="7327691878511260953" UUID_SUB="1860526013766648308" BLOCK_SIZE="4096" TYPE="zfs_member" USAGE="filesystem" PART_ENTRY_SCHEME="gpt" PART_ENTRY_NAME="data" PART_ENTRY_UUID="da453a01-5237-4af1-802e-0e7ab8f52530" PART_ENTRY_TYPE="6a898cc3-1dd2-11b2-99a6-080020736631" PART_ENTRY_NUMBER="1" PART_ENTRY_OFFSET="2048" PART_ENTRY_SIZE="15628050432" PART_ENTRY_DISK="8:0"
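That output line is long, so it can be handy to pull out just the fields you care about. Here is a small sketch of how that could look; blkid_field is a made-up helper name, and it assumes the KEY="value" layout shown above, with a space in front of each key.

```shell
# Hypothetical helper: print the value of one KEY="value" pair from a
# blkid output line read from stdin. The leading space in the pattern
# keeps TYPE from matching PART_ENTRY_TYPE, UUID from matching
# PART_ENTRY_UUID, and so on.
blkid_field() {
    sed -n "s/.* $1=\"\([^\"]*\)\".*/\1/p"
}

# Possible usage:
#   sudo blkid --probe /dev/sda1 | blkid_field TYPE
#   sudo blkid --probe /dev/sda1 | blkid_field LABEL
```

For the output above, the TYPE field is zfs_member and the LABEL field is Data-Pool, which is what a healthy member of my pool should report.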

Only once you are very sure that everything worked fine, repeat the instructions above for each remaining affected disk.

########################

After the procedure you should see the output from blkid indicating only one filesystem for each of your drives.

Solutions suggested by others either take longer or require you to back up your data, wipe all drives and set up the pool from scratch.

For anyone experiencing this issue, the discussion on this forum named "Pool Offline After Every Reboot" might also be interesting.