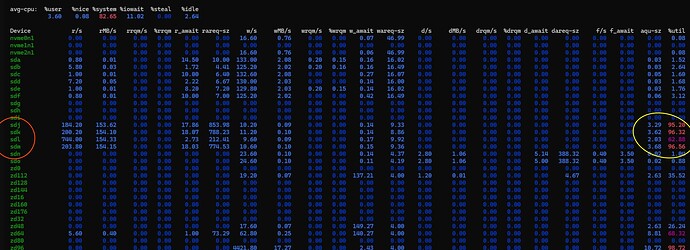

From my perspective here, I see that iostat reports writes being issued based on the block size of the “disk” itself. I believe this is correct and expected behavior. In this case it’s 512 bytes; in yours it is 4k. See wareq-sz in my output from both the guest and host perspectives. I don’t think this is the issue, but I’ll keep digging.

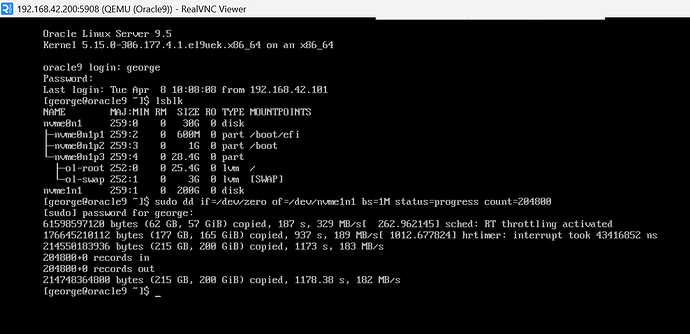

As a note, I have written a lot of data in a loop to work around the fact that this disk is so small, and I have not been able to reproduce the issue on Ubuntu 24.04.

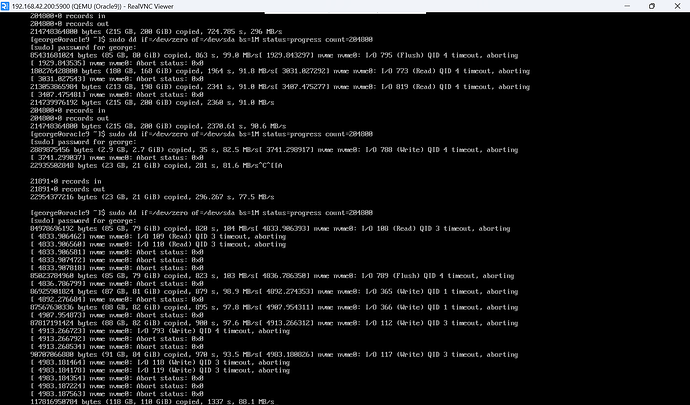

while true; do dd if=/dev/zero of=/dev/nvme0n1 bs=1M status=progress; done

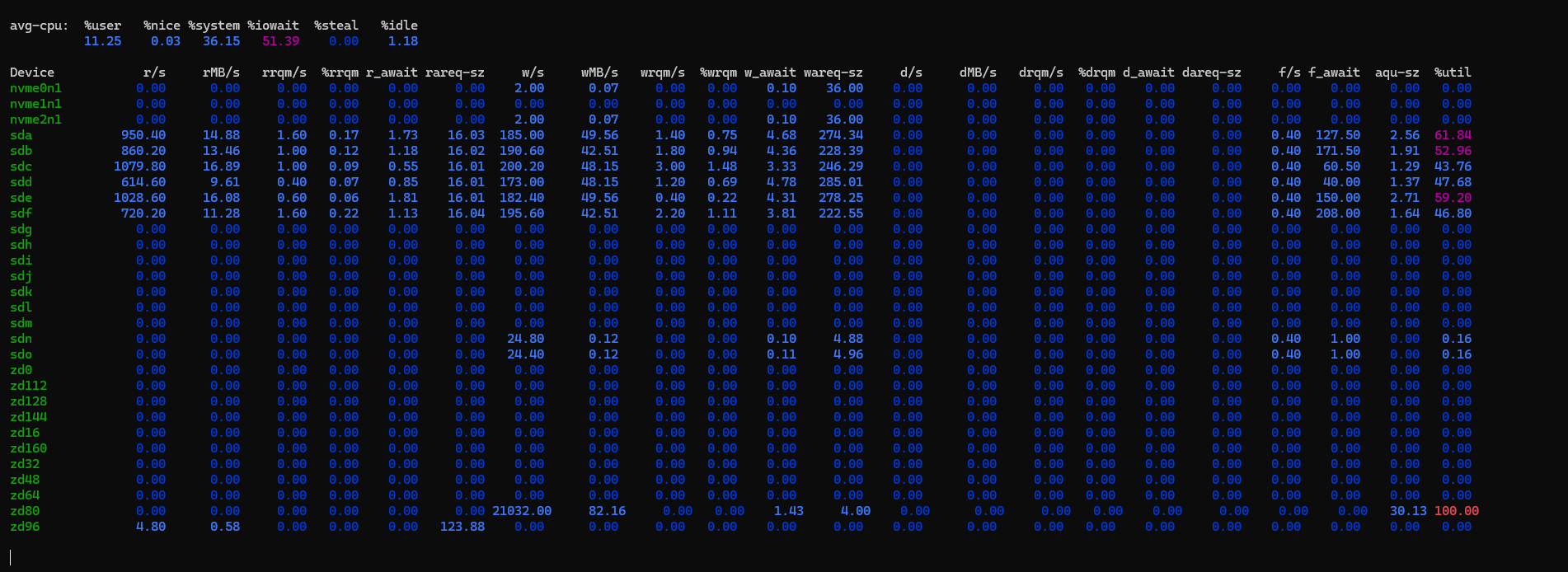

iostat TrueNAS side

Device r/s rkB/s rrqm/s %rrqm r_await rareq-sz w/s wkB/s wrqm/s %wrqm w_await wareq-sz d/s dkB/s drqm/s %drqm d_await dareq-sz f/s f_await aqu-sz %util

zd160 0.00 0.00 0.00 0.00 0.00 0.00 1628.00 833536.00 0.00 0.00 0.51 512.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.83 58.84

iostat client side

nickf@orion:~$ iostat -x nvme0n1 10

Linux 6.11.0-21-generic (orion) 04/07/2025 _x86_64_ (4 CPU)

avg-cpu: %user %nice %system %iowait %steal %idle

2.89 0.00 0.63 0.00 0.25 96.22

Device r/s rkB/s rrqm/s %rrqm r_await rareq-sz w/s wkB/s wrqm/s %wrqm w_await wareq-sz d/s dkB/s drqm/s %drqm d_await dareq-sz f/s f_await aqu-sz %util

nvme0n1 0.00 0.07 0.00 0.00 0.36 17.11 2.34 1155.71 286.59 99.19 109.07 493.94 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.26 0.11

avg-cpu: %user %nice %system %iowait %steal %idle

1.69 0.00 35.00 0.03 0.10 63.18

Device r/s rkB/s rrqm/s %rrqm r_await rareq-sz w/s wkB/s wrqm/s %wrqm w_await wareq-sz d/s dkB/s drqm/s %drqm d_await dareq-sz f/s f_await aqu-sz %util

nvme0n1 0.00 0.00 0.00 0.00 0.00 0.00 999.20 511641.60 126932.00 99.22 88.71 512.05 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 88.64 49.30
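As a sanity check on the iostat numbers, here is a rough sketch that recomputes the average write request size from the other columns. Per the iostat man page, wareq-sz is the average write request size in kilobytes (wkB/s divided by w/s), so the 512.00 shown there is a request size in kB, which is a separate figure from the 512-byte sector size of the device. The function names `avg_req_kb` and `pre_merge_req_kb` are made up for this example, and the "before merging" estimate assumes every merge counted in wrqm/s folds exactly one submitted request into another.

```shell
# avg_req_kb KB_PER_S REQS_PER_S
# Average request size in kB, i.e. what iostat shows as wareq-sz.
avg_req_kb() {
    awk -v kb="$1" -v reqs="$2" 'BEGIN {
        if (reqs + 0 == 0) { print "n/a"; exit }
        printf "%.2f\n", kb / reqs
    }'
}

# pre_merge_req_kb KB_PER_S REQS_PER_S MERGES_PER_S
# Estimated request size before the block layer merged adjacent writes.
# Assumption: submitted requests = w/s + wrqm/s.
pre_merge_req_kb() {
    awk -v kb="$1" -v reqs="$2" -v merges="$3" 'BEGIN {
        total = reqs + merges
        if (total == 0) { print "n/a"; exit }
        printf "%.2f\n", kb / total
    }'
}

# TrueNAS side, zd160: wkB/s=833536.00, w/s=1628.00
avg_req_kb 833536.00 1628.00                 # 512.00

# Client side, nvme0n1, second sample: wkB/s=511641.60, w/s=999.20
avg_req_kb 511641.60 999.20                  # 512.05

# Same sample, including wrqm/s=126932.00
pre_merge_req_kb 511641.60 999.20 126932.00  # 4.00
```

If that assumption holds, the client is submitting roughly 4 kB writes that get merged into roughly 512 kB requests before they reach the device, which lines up with the ~99% %wrqm in the output.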

Client side:

root@orion:/home/nickf# lsblk -o NAME,PHY-SEC,LOG-SEC,SIZE /dev/nvme0n1

NAME PHY-SEC LOG-SEC SIZE

nvme0n1 512 512 10G

root@orion:/home/nickf#
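If you want to cross-check what lsblk reports, the same sector sizes can be read straight from sysfs. This is only a minimal sketch: `sector_sizes` is a helper name I made up, and the optional second argument (an alternate sysfs root) exists purely so the function can be tried against a fake directory tree.

```shell
# sector_sizes DEVICE [SYSFS_ROOT]
# Prints the logical and physical block sizes the kernel exposes for DEVICE.
sector_sizes() {
    ss_queue="${2:-/sys/block}/$1/queue"
    printf 'logical=%s physical=%s\n' \
        "$(cat "$ss_queue/logical_block_size")" \
        "$(cat "$ss_queue/physical_block_size")"
}

# Only query the real device if it exists on this machine.
if [ -d /sys/block/nvme0n1 ]; then
    sector_sizes nvme0n1
fi
```

On the client above this should agree with the 512/512 that lsblk shows. `blockdev --getss --getpbsz /dev/nvme0n1` is another way to get the same two values.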

root@orion:/home/nickf# while true; do

dd if=/dev/zero of=/dev/nvme0n1 bs=1M status=progress

done

10633609216 bytes (11 GB, 9.9 GiB) copied, 13 s, 818 MB/s

dd: error writing '/dev/nvme0n1': No space left on device

10241+0 records in

10240+0 records out

10737418240 bytes (11 GB, 10 GiB) copied, 14.0427 s, 765 MB/s

10200547328 bytes (10 GB, 9.5 GiB) copied, 12 s, 850 MB/s

dd: error writing '/dev/nvme0n1': No space left on device

10241+0 records in

10240+0 records out

10737418240 bytes (11 GB, 10 GiB) copied, 13.6552 s, 786 MB/s

10583277568 bytes (11 GB, 9.9 GiB) copied, 12 s, 882 MB/s

dd: error writing '/dev/nvme0n1': No space left on device

10241+0 records in

10240+0 records out

10737418240 bytes (11 GB, 10 GiB) copied, 13.1167 s, 819 MB/s

10042212352 bytes (10 GB, 9.4 GiB) copied, 11 s, 913 MB/s

dd: error writing '/dev/nvme0n1': No space left on device

10241+0 records in

10240+0 records out

10737418240 bytes (11 GB, 10 GiB) copied, 12.8883 s, 833 MB/s

10065281024 bytes (10 GB, 9.4 GiB) copied, 11 s, 915 MB/s

dd: error writing '/dev/nvme0n1': No space left on device

10241+0 records in

10240+0 records out

10737418240 bytes (11 GB, 10 GiB) copied, 12.7005 s, 845 MB/s

10613686272 bytes (11 GB, 9.9 GiB) copied, 12 s, 884 MB/s

dd: error writing '/dev/nvme0n1': No space left on device

10241+0 records in

10240+0 records out
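For anyone reading the dd output: the "No space left on device" error on every pass is expected, since dd keeps writing until the 10G device is full. A quick sketch of the arithmetic, using only numbers from the output above (the `mb_per_s` helper name is made up for this example):

```shell
# Values copied from the dd output.
device_bytes=10737418240      # bytes copied per pass (10 GiB)
block_bytes=$((1024 * 1024))  # bs=1M

# Number of full 1 MiB blocks that fit on the device.
full_blocks=$((device_bytes / block_bytes))
echo "full blocks per pass: $full_blocks"   # 10240

# mb_per_s BYTES SECONDS
# dd reports decimal megabytes per second (1 MB = 10^6 bytes).
mb_per_s() {
    awk -v bytes="$1" -v secs="$2" 'BEGIN {
        printf "%.0f\n", bytes / secs / 1000000
    }'
}

mb_per_s "$device_bytes" 14.0427   # 765
mb_per_s "$device_bytes" 13.6552   # 786
```

That matches "10240+0 records out", and the extra record in "10241+0 records in" is the one block that was read from /dev/zero but could not be written once the device was full.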