It’s “prepend sudo”, since it goes at the start; append is at the end. Or: “You’ll need to put sudo before each command listed below.”

With your feedback, I was able to make a little progress by setting nvidia.runtime on the UI-created Incus instance, running the following commands after creating the instance:

incus config set docker110 nvidia.runtime=true

incus config set docker110 security.nesting=true

incus config set docker110 security.syscalls.intercept.mknod=true

incus config set docker110 security.syscalls.intercept.setxattr=true
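The four settings above can also be applied in one go. This is only a minimal sketch: apply_gpu_nesting_config is a made-up helper name, not part of Incus, and the restart at the end is an assumption (nvidia.runtime is generally only picked up when the instance starts).

```shell
#!/bin/sh
# Hypothetical helper: apply the four settings from this post to one instance.
apply_gpu_nesting_config() {
    instance=$1
    for setting in \
        nvidia.runtime=true \
        security.nesting=true \
        security.syscalls.intercept.mknod=true \
        security.syscalls.intercept.setxattr=true
    do
        incus config set "$instance" "$setting" || return 1
    done
    # Assumption: restart so the NVIDIA runtime hook runs on the next start.
    incus restart "$instance"
}

# Usage (prepend sudo if your user cannot talk to Incus directly):
#   apply_gpu_nesting_config docker110
```

Stopping at the first failed setting keeps the instance from being restarted with a half-applied config.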

This gives me a working instance in which I can install docker and nvidia-container-toolkit, and nvidia-smi works in the Incus instance. But I’m then unable to present the GPU to docker containers under the instance. I get the following error:

root@docker110:~# docker run --rm --runtime=nvidia --gpus all ubuntu nvidia-smi

docker: Error response from daemon: failed to create task for container: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: error during container init: error running prestart hook #0: exit status 1, stdout: , stderr: Auto-detected mode as 'legacy'

nvidia-container-cli: mount error: failed to add device rules: unable to find any existing device filters attached to the cgroup: bpf_prog_query(BPF_CGROUP_DEVICE) failed: operation not permitted: unknown

I also tried using the docker-init-nvidia.yaml to create an instance, which does successfully create an instance with docker and nvidia-container-toolkit running and nvidia-smi works in the instance, but I get the same error as above when trying to run a container using the nvidia runtime under the instance.

Researching the error, I found that the Incus instance is missing a number of folders under /proc/driver/nvidia (including /proc/driver/nvidia/gpus) that apparently need to be present.

root@docker110:~# ls /proc/driver/nvidia

params registry version

root@docker110:~#
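A quick way to test for this condition from inside the instance, as a sketch: check_nvidia_proc is a hypothetical helper, and its optional path argument exists only so it can be tried against a scratch directory.

```shell
#!/bin/sh
# Hypothetical helper: report whether the gpus directory exists and has entries.
check_nvidia_proc() {
    root=${1:-/proc/driver/nvidia}
    if [ -d "$root/gpus" ] && [ -n "$(ls -A "$root/gpus" 2>/dev/null)" ]; then
        echo "ok: $root/gpus is populated"
    else
        echo "missing: $root/gpus is absent or empty"
        return 1
    fi
}

# Usage inside the instance:
#   check_nvidia_proc
```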

Any ideas?

I had it working on the initial beta (see earlier in the thread), but I ran into that blocker as well. Still trying to figure it out in RC1; I didn’t have much time this weekend to look into it. Setting no-cgroups in the Nvidia toolkit requires passing through a new device, but IX blocks that in the middleware. I’m not 100% sure beyond that. I have to go through logs, etc., next week.

Also, removing the count from the GPU config in Docker allowed it to start. That worked with Plex; however, Immich won’t use the GPU without a count.

Sounds good. Thanks for the confirmation. I’m a bit in over my head with doing any real troubleshooting on this but had hoped I would finally be able to move away from using jailmaker (which is all kinds of quirky in electric eel because of /usr/lib/x86_64-linux-gnu folder being read only in the jail when gpu_passthrough_nvidia is enabled).

I’ll keep tinkering on my test box and leave the production box on electriceel+jailmaker, despite its quirks, for now.

@awalkerix I’ve not had much luck getting this working with Docker. From what I can see, the Docker container requires /proc/driver/nvidia/gpus/* to be mounted within the Incus container; however, that’s not happening, as described earlier in the thread above this response and in the post right after it. I think it’s a TNS issue, as straight Incus will let you mount that path in an Incus container. I checked inside my old jailmaker jails with nvidia passed through, and that directory is mounted and HW acceleration is working properly there. That is really the main difference I’m seeing.

Should a bug report be filed for this or has someone else already done so? Thanks.

EDIT:

Libs are very different in Incus vs. TNS and jailmaker, so that’s some of it…

incus:

nvidia-container-cli list|wc -l

23

nvidia-container-cli list

/dev/nvidiactl

/dev/nvidia-uvm

/dev/nvidia-uvm-tools

/dev/nvidia-modeset

/dev/nvidia0

/usr/bin/nvidia-smi

/usr/bin/nvidia-debugdump

/usr/bin/nvidia-persistenced

/usr/bin/nvidia-cuda-mps-control

/usr/bin/nvidia-cuda-mps-server

/usr/lib/x86_64-linux-gnu/libnvidia-ml.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-cfg.so.550.142

/usr/lib/x86_64-linux-gnu/libcuda.so.550.142

/usr/lib/x86_64-linux-gnu/libcudadebugger.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-opencl.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-gpucomp.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-ptxjitcompiler.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-allocator.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-pkcs11.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-pkcs11-openssl3.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-nvvm.so.550.142

/lib/firmware/nvidia/550.142/gsp_ga10x.bin

/lib/firmware/nvidia/550.142/gsp_tu10x.bin

TNS:

nvidia-container-cli list|wc -l

40

nvidia-container-cli list

/dev/nvidiactl

/dev/nvidia-uvm

/dev/nvidia-uvm-tools

/dev/nvidia-modeset

/dev/nvidia0

/usr/bin/nvidia-smi

/usr/bin/nvidia-debugdump

/usr/bin/nvidia-persistenced

/usr/bin/nvidia-cuda-mps-control

/usr/bin/nvidia-cuda-mps-server

/usr/lib/x86_64-linux-gnu/libnvidia-ml.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-cfg.so.550.142

/usr/lib/x86_64-linux-gnu/libcuda.so.550.142

/usr/lib/x86_64-linux-gnu/libcudadebugger.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-opencl.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-gpucomp.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-ptxjitcompiler.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-allocator.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-pkcs11.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-pkcs11-openssl3.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-nvvm.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-ngx.so.550.142

/usr/lib/x86_64-linux-gnu/vdpau/libvdpau_nvidia.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-encode.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-opticalflow.so.550.142

/usr/lib/x86_64-linux-gnu/libnvcuvid.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-eglcore.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-glcore.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-tls.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-glsi.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-fbc.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-rtcore.so.550.142

/usr/lib/x86_64-linux-gnu/libnvoptix.so.550.142

/usr/lib/x86_64-linux-gnu/libGLX_nvidia.so.550.142

/usr/lib/x86_64-linux-gnu/libEGL_nvidia.so.550.142

/usr/lib/x86_64-linux-gnu/libGLESv2_nvidia.so.550.142

/usr/lib/x86_64-linux-gnu/libGLESv1_CM_nvidia.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-glvkspirv.so.550.142

/lib/firmware/nvidia/550.142/gsp_ga10x.bin

/lib/firmware/nvidia/550.142/gsp_tu10x.bin

jailmaker:

nvidia-container-cli list|wc -l

40

nvidia-container-cli list

/dev/nvidiactl

/dev/nvidia-uvm

/dev/nvidia-uvm-tools

/dev/nvidia-modeset

/dev/nvidia0

/usr/bin/nvidia-smi

/usr/bin/nvidia-debugdump

/usr/bin/nvidia-persistenced

/usr/bin/nvidia-cuda-mps-control

/usr/bin/nvidia-cuda-mps-server

/usr/lib/x86_64-linux-gnu/libnvidia-ml.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-cfg.so.550.142

/usr/lib/x86_64-linux-gnu/libcuda.so.550.142

/usr/lib/x86_64-linux-gnu/libcudadebugger.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-opencl.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-gpucomp.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-ptxjitcompiler.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-allocator.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-pkcs11.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-pkcs11-openssl3.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-nvvm.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-ngx.so.550.142

/usr/lib/x86_64-linux-gnu/vdpau/libvdpau_nvidia.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-encode.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-opticalflow.so.550.142

/usr/lib/x86_64-linux-gnu/libnvcuvid.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-eglcore.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-glcore.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-tls.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-glsi.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-fbc.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-rtcore.so.550.142

/usr/lib/x86_64-linux-gnu/libnvoptix.so.550.142

/usr/lib/x86_64-linux-gnu/libGLX_nvidia.so.550.142

/usr/lib/x86_64-linux-gnu/libEGL_nvidia.so.550.142

/usr/lib/x86_64-linux-gnu/libGLESv2_nvidia.so.550.142

/usr/lib/x86_64-linux-gnu/libGLESv1_CM_nvidia.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-glvkspirv.so.550.142

/lib/firmware/nvidia/550.142/gsp_ga10x.bin

/lib/firmware/nvidia/550.142/gsp_tu10x.bin
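Rather than comparing the listings above by eye (23 entries in Incus against 40 in TNS and jailmaker), the difference can be computed. A minimal sketch, assuming each listing was first saved to a file; missing_entries and the file names are made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: print lines present in file B ($2) but absent from file A ($1).
missing_entries() {
    have_sorted=$(mktemp) && want_sorted=$(mktemp) || return 1
    sort -u "$1" > "$have_sorted"
    sort -u "$2" > "$want_sorted"
    # comm -13 suppresses lines unique to the first file and lines common to both.
    comm -13 "$have_sorted" "$want_sorted"
    rm -f "$have_sorted" "$want_sorted"
}

# Usage:
#   nvidia-container-cli list > incus.txt   # inside the Incus instance
#   nvidia-container-cli list > jail.txt    # inside the jailmaker jail
#   missing_entries incus.txt jail.txt
```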

EDIT2:

Found this thread…

So, I see the permissions in the container differ from the host:

ls -l /dev/|grep nvidia

crw-rw-rw- 1 nobody nogroup 236, 0 Mar 11 12:21 nvidia-uvm

crw-rw-rw- 1 nobody nogroup 236, 1 Mar 11 12:21 nvidia-uvm-tools

crw-rw-rw- 1 root root 195, 0 Mar 16 16:50 nvidia0

crw-rw-rw- 1 nobody nogroup 195, 255 Mar 11 12:21 nvidiactl

TNS:

ls -l /dev|grep nvidia

drwxr-xr-x 2 root root 80 Mar 11 12:21 nvidia-caps

crw-rw-rw- 1 root root 236, 0 Mar 11 12:21 nvidia-uvm

crw-rw-rw- 1 root root 236, 1 Mar 11 12:21 nvidia-uvm-tools

crw-rw-rw- 1 root root 195, 0 Mar 11 12:21 nvidia0

crw-rw-rw- 1 root root 195, 255 Mar 11 12:21 nvidiactl

userns_idmap is at work here, hence the nobody:nogroup ownership.
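Ownership shown as nobody:nogroup usually means the node’s real owner has no mapping inside the container’s user namespace. A small sketch for picking those nodes out of an ls -l listing; unmapped_nodes is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read `ls -l` lines on stdin and print the names of
# entries whose owner is nobody or whose group is nogroup.
unmapped_nodes() {
    awk '$3 == "nobody" || $4 == "nogroup" { print $NF }'
}

# Usage inside the container:
#   ls -l /dev | grep nvidia | unmapped_nodes
```

Fed the container listing above, this would print nvidia-uvm, nvidia-uvm-tools and nvidiactl, but not nvidia0.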

Ok, so maybe I’m barking up the wrong tree… HW Transcoding isn’t even working for me on my jailmaker jail on RC1… Hrm. Everything is in place in the jail. I updated it to the latest nvidia-container-toolkit and docker-ce, but nothing.

EDIT: it was DNS… couldn’t contact plex.tv for my license.

It’s not DNS

There’s no way it’s DNS

It was DNS

Got it working again in Incus…

+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 550.142                Driver Version: 550.142        CUDA Version: 12.4     |
|-----------------------------------------+------------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                                         |                        |               MIG M. |
|=========================================+========================+======================|
|   0  NVIDIA GeForce GTX 1080 Ti     Off |   00000000:2B:00.0 Off |                  N/A |
|  0%   58C    P2             77W /  280W |     609MiB /  11264MiB |      0%      Default |
|                                         |                        |                  N/A |
+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+
| Processes:                                                                              |
|  GPU   GI   CI        PID   Type   Process name                              GPU Memory |
|        ID   ID                                                               Usage      |
|=========================================================================================|
|    0   N/A  N/A   3228338      C   ...lib/plexmediaserver/Plex Transcoder        606MiB |
+-----------------------------------------------------------------------------------------+

I’m going to modify the docker-init-nvidia.yaml script in the OP with the workaround, but in short, here is the workaround for double passthrough. Replace the PCI address with your card’s:

mkdir -p /proc/driver/nvidia/gpus && ln -s /dev/nvidia0 /proc/driver/nvidia/gpus/0000:2b:00.0
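To fill in the PCI address for a different card: the nvidia-smi output above reports the bus ID as 00000000:2B:00.0, while the workaround uses 0000:2b:00.0 (four-digit domain, lower case). A sketch of that conversion follows; to_proc_gpu_name is a made-up helper, and the usage lines assume a single GPU exposed as /dev/nvidia0.

```shell
#!/bin/sh
# Hypothetical helper: turn an nvidia-smi bus ID (8-digit domain, upper case)
# into the 4-digit, lower-case form used in the workaround.
to_proc_gpu_name() {
    printf '%s\n' "$1" |
        sed 's/^[0-9A-Fa-f]\{4\}\([0-9A-Fa-f]\{4\}:\)/\1/' |
        tr 'A-F' 'a-f'
}

# Usage inside the instance (nvidia-smi must already work there):
#   bus_id=$(nvidia-smi --query-gpu=pci.bus_id --format=csv,noheader | head -n 1)
#   mkdir -p /proc/driver/nvidia/gpus
#   ln -s /dev/nvidia0 "/proc/driver/nvidia/gpus/$(to_proc_gpu_name "$bus_id")"
```

An address that already has a four-digit domain passes through unchanged apart from lower-casing.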

Updated docker-init-nvidia.yaml cloud init script in OP.

I also need to update the OP to change no-cgroups to true in /etc/nvidia-container-runtime/config.toml. I will work on that here in a bit.

I rebuilt from the updated script, modified that config.toml, and everything is working without any compose file changes.

Modified docker-init-nvidia.yaml with another runcmd to disable cgroups in /etc/nvidia-container-runtime/config.toml.
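For anyone applying the no-cgroups change by hand rather than through the cloud-init script, a minimal sketch is below. set_no_cgroups is a hypothetical helper, and it assumes the file already contains a no-cgroups line (possibly commented out, as in the stock config); if the key is absent, nothing changes.

```shell
#!/bin/sh
# Hypothetical helper: force `no-cgroups = true` in the toolkit config,
# whether the existing line is commented out or not.
set_no_cgroups() {
    cfg=${1:-/etc/nvidia-container-runtime/config.toml}
    tmp=$(mktemp) || return 1
    sed 's/^[[:space:]]*#*[[:space:]]*no-cgroups[[:space:]]*=.*/no-cgroups = true/' "$cfg" > "$tmp" &&
        cat "$tmp" > "$cfg"   # write back in place so file permissions are kept
    status=$?
    rm -f "$tmp"
    return $status
}

# Usage inside the instance:
#   set_no_cgroups
#   grep no-cgroups /etc/nvidia-container-runtime/config.toml
```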

Nvidia should now be working out of the box with that config.

So adding this to my dockge compose works, but I’m not sure why it’s not working by default since the Incus host default DNS server is set to that IP in resolv.conf. All my interfaces are bridge interfaces in Incus from the host. It loads all the Dockge agents instantly like it did in jailmaker.

dns:
  - 192.168.0.8

Since these are system bridges, they are not managed by Incus. More investigation to do…

incus network ls

+-----------------+----------+---------+------+------+-------------+---------+-------+
|      NAME       |   TYPE   | MANAGED | IPV4 | IPV6 | DESCRIPTION | USED BY | STATE |
+-----------------+----------+---------+------+------+-------------+---------+-------+
| bond0           | bond     | NO      |      |      |             | 0       |       |
+-----------------+----------+---------+------+------+-------------+---------+-------+
| br0             | bridge   | NO      |      |      |             | 3       |       |
+-----------------+----------+---------+------+------+-------------+---------+-------+
| br2             | bridge   | NO      |      |      |             | 0       |       |
+-----------------+----------+---------+------+------+-------------+---------+-------+
| br5             | bridge   | NO      |      |      |             | 3       |       |
+-----------------+----------+---------+------+------+-------------+---------+-------+
| enp35s0         | physical | NO      |      |      |             | 0       |       |
+-----------------+----------+---------+------+------+-------------+---------+-------+
| enp36s0         | physical | NO      |      |      |             | 0       |       |
+-----------------+----------+---------+------+------+-------------+---------+-------+
| enxd23daae1515e | physical | NO      |      |      |             | 0       |       |
+-----------------+----------+---------+------+------+-------------+---------+-------+
| lo              | loopback | NO      |      |      |             | 0       |       |
+-----------------+----------+---------+------+------+-------------+---------+-------+
| vlan2           | vlan     | NO      |      |      |             | 0       |       |
+-----------------+----------+---------+------+------+-------------+---------+-------+
| vlan5           | vlan     | NO      |      |      |             | 0       |       |
+-----------------+----------+---------+------+------+-------------+---------+-------+

Quick workaround would be to set the DNS server globally in Docker’s config, then restart docker:

cat /etc/docker/daemon.json

{
  "dns": ["192.168.0.8"]
}
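A cautious sketch of writing that file: write_docker_dns is a hypothetical helper that refuses to touch an existing daemon.json, since the NVIDIA toolkit setup may already have written a runtimes section there and overwriting it would break the nvidia runtime. The restart command in the usage lines assumes Docker runs under systemd.

```shell
#!/bin/sh
# Hypothetical helper: create a daemon.json holding only a global DNS server.
# Refuses to overwrite an existing file; merge the "dns" key by hand instead.
write_docker_dns() {
    dns_ip=$1
    target=${2:-/etc/docker/daemon.json}
    if [ -e "$target" ]; then
        echo "refusing to overwrite existing $target; add a \"dns\" key by hand" >&2
        return 1
    fi
    printf '{\n  "dns": ["%s"]\n}\n' "$dns_ip" > "$target"
}

# Usage inside the instance:
#   write_docker_dns 192.168.0.8 && systemctl restart docker
```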

Ugh… not working again… it worked several times on creating new instances yesterday. I must’ve missed something I did… Looking, again…

This should be the end of needing incus admin recover to use multiple pools.

On update, the middleware will now automatically “recover” all the pools listed in “storage_pools”

This will be great.

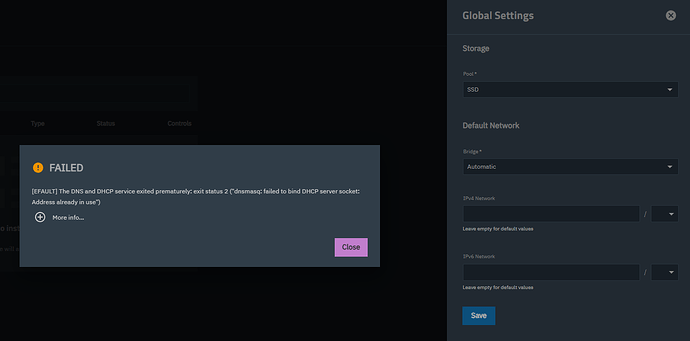

I would like to test Incus but I have the following problem:

I left all the settings at default and I get the error “Address already in use”.

I don’t know anything about Incus, completely new.

Are you running something like pi-hole already? If you only have one interface and you are using the managed interface that Incus creates, then you’re going to have a conflict with that port and Incus’s dnsmasq implementation.

You can move Incus’s dnsmasq port to something else, or create bridges within TNCE and not have Incus manage those interfaces. That should get you around this issue.

I don’t have any managed interfaces within Incus, just TNCE.

I created the bridge and passed this step. Thank you.

I’m moving forward.

Ok, making progress, I think…

[Req#1ff1/Transcode] [FFMPEG] - Cannot load libnvidia-encode.so.1

Missing libs in Incus, how in the world did I have this working yesterday?

nvidia-container-cli list|grep -E 'encode|libnvcuvid'

jailmaker:

nvidia-container-cli list|grep -E 'encode|libnvcuvid'

/usr/lib/x86_64-linux-gnu/libnvidia-encode.so.550.142

/usr/lib/x86_64-linux-gnu/libnvcuvid.so.550.142

ENV Vars are not being set correctly in the Incus container:

printenv |grep -i nvidia

NVIDIA_VISIBLE_DEVICES=none

NVIDIA_REQUIRE_CUDA=

NVIDIA_DRIVER_CAPABILITIES=compute,utility

NVIDIA_REQUIRE_DRIVER=

Setting the nvidia.driver.capabilities config causes the container to fail to start.

Got a little further, but Plex is still barking about missing libs.

nvidia.driver.capabilities: compute,utility,video

vars:

printenv |grep -i nvidia

NVIDIA_VISIBLE_DEVICES=none

NVIDIA_REQUIRE_CUDA=

NVIDIA_DRIVER_CAPABILITIES=compute,utility,video

NVIDIA_REQUIRE_DRIVER=

nvidia-container-cli list

/dev/nvidiactl

/dev/nvidia-uvm

/dev/nvidia-uvm-tools

/dev/nvidia-modeset

/dev/nvidia0

/usr/bin/nvidia-smi

/usr/bin/nvidia-debugdump

/usr/bin/nvidia-persistenced

/usr/bin/nvidia-cuda-mps-control

/usr/bin/nvidia-cuda-mps-server

/usr/lib/x86_64-linux-gnu/libnvidia-ml.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-cfg.so.550.142

/usr/lib/x86_64-linux-gnu/libcuda.so.550.142

/usr/lib/x86_64-linux-gnu/libcudadebugger.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-opencl.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-gpucomp.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-ptxjitcompiler.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-allocator.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-pkcs11.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-pkcs11-openssl3.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-nvvm.so.550.142

/usr/lib/x86_64-linux-gnu/libvdpau_nvidia.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-encode.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-opticalflow.so.550.142

/usr/lib/x86_64-linux-gnu/libnvcuvid.so.550.142

/lib/firmware/nvidia/550.142/gsp_ga10x.bin

/lib/firmware/nvidia/550.142/gsp_tu10x.bin

lib count:

nvidia-container-cli list|wc -l

27

EDIT: moar libs:

nvidia-container-cli list

/dev/nvidiactl

/dev/nvidia-uvm

/dev/nvidia-uvm-tools

/dev/nvidia-modeset

/dev/nvidia0

/usr/bin/nvidia-smi

/usr/bin/nvidia-debugdump

/usr/bin/nvidia-persistenced

/usr/bin/nvidia-cuda-mps-control

/usr/bin/nvidia-cuda-mps-server

/usr/lib/x86_64-linux-gnu/libnvidia-ml.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-cfg.so.550.142

/usr/lib/x86_64-linux-gnu/libcuda.so.550.142

/usr/lib/x86_64-linux-gnu/libcudadebugger.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-opencl.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-gpucomp.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-ptxjitcompiler.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-allocator.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-pkcs11.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-pkcs11-openssl3.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-nvvm.so.550.142

/usr/lib/x86_64-linux-gnu/libvdpau_nvidia.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-encode.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-opticalflow.so.550.142

/usr/lib/x86_64-linux-gnu/libnvcuvid.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-eglcore.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-glcore.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-tls.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-glsi.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-fbc.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-rtcore.so.550.142

/usr/lib/x86_64-linux-gnu/libnvoptix.so.550.142

/usr/lib/x86_64-linux-gnu/libGLX_nvidia.so.550.142

/usr/lib/x86_64-linux-gnu/libEGL_nvidia.so.550.142

/usr/lib/x86_64-linux-gnu/libGLESv2_nvidia.so.550.142

/usr/lib/x86_64-linux-gnu/libGLESv1_CM_nvidia.so.550.142

/usr/lib/x86_64-linux-gnu/libnvidia-glvkspirv.so.550.142

/lib/firmware/nvidia/550.142/gsp_ga10x.bin

/lib/firmware/nvidia/550.142/gsp_tu10x.bin

count:

nvidia-container-cli list|wc -l

39

nvidia.driver.capabilities: compute,graphics,utility,video

That was it. I updated the OP and the docker-init-nvidia.yaml config. I would use “all” here, but the display capability causes the instance to fail to start. More configs here.

nvidia.driver.capabilities: compute,graphics,utility,video
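Applied from the host, the setting above might look like the sketch below. Both function names are made up, the instance name apps1 is taken from the prompt shown in this thread, and the restart is an assumption. has_capability is there to check the NVIDIA_DRIVER_CAPABILITIES variable printed earlier.

```shell
#!/bin/sh
# Hypothetical helper: set the capability list on an instance and restart it.
set_gpu_capabilities() {
    instance=$1
    caps=${2:-compute,graphics,utility,video}
    incus config set "$instance" nvidia.driver.capabilities="$caps" &&
        incus restart "$instance"
}

# Hypothetical helper: succeed if NAME ($2) appears in comma-separated LIST ($1).
has_capability() {
    case ",$1," in
        *",$2,"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Usage on the host:
#   set_gpu_capabilities apps1
# Usage inside the instance:
#   has_capability "$NVIDIA_DRIVER_CAPABILITIES" video || echo "video capability missing"
```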

Working output, again…

+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 550.142                Driver Version: 550.142        CUDA Version: 12.4     |
|-----------------------------------------+------------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                                         |                        |               MIG M. |
|=========================================+========================+======================|
|   0  NVIDIA GeForce GTX 1080 Ti     Off |   00000000:2B:00.0 Off |                  N/A |
|  0%   54C    P2            102W /  280W |     609MiB /  11264MiB |     15%      Default |
|                                         |                        |                  N/A |
+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+
| Processes:                                                                              |
|  GPU   GI   CI        PID   Type   Process name                              GPU Memory |
|        ID   ID                                                               Usage      |
|=========================================================================================|
|    0   N/A  N/A   3995473      C   ...lib/plexmediaserver/Plex Transcoder        606MiB |
+-----------------------------------------------------------------------------------------+

I also modified my plex docker compose from:

devices:
  - /dev/dri:/dev/dri
runtime: nvidia
deploy:
  resources:
    reservations:
      devices:
        - driver: nvidia
          count: 1
          capabilities:
            - gpu

to:

deploy:
  resources:
    reservations:
      devices:
        - driver: nvidia
          count: 1
          capabilities:
            - gpu
            - compute
            - utility
            - video

Needed libs for this use case are loading now:

root@apps1:~# nvidia-container-cli list|grep -E 'encode|libnvcuvid'

/usr/lib/x86_64-linux-gnu/libnvidia-encode.so.550.142

/usr/lib/x86_64-linux-gnu/libnvcuvid.so.550.142
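The same check can be made to fail loudly when a library is absent, which is handy after rebuilding an instance. A minimal sketch: check_transcode_libs is a hypothetical helper, and the two library names are simply the ones grepped for above.

```shell
#!/bin/sh
# Hypothetical helper: read `nvidia-container-cli list` output on stdin and
# report whether the encode/decode libraries are present.
check_transcode_libs() {
    listing=$(cat)
    missing=0
    for lib in libnvidia-encode.so libnvcuvid.so; do
        if printf '%s\n' "$listing" | grep -qF "/$lib"; then
            echo "found:   $lib"
        else
            echo "missing: $lib"
            missing=1
        fi
    done
    return $missing
}

# Usage inside the instance:
#   nvidia-container-cli list | check_transcode_libs
```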