Hi, I recently expanded my ZFS RAIDZ2 pool (8 wide) from 1TB drives to 8TB drives.

I was able to easily replace each of the first 7 drives, but the last one, sde, gave me issues. It failed one short SMART test, but it has passed several long tests and many more short tests since, so I’m not sure if it’s a hardware issue. It was finicky, but I was eventually able to replace it successfully. When I then tried to expand the pool to fill the drives, I consistently got errors. I ended up manually expanding the data partition on each drive, and with autoexpand on, the capacity was updated. For reference, I used the command found here (discussion) and here. sdi shows what a drive looked like before being expanded (I used a 2TB drive for testing while troubleshooting getting sde working).

When using lsblk, I noticed that one of my drives (sde) is missing the raid1 and crypt partitions (see paste below). Some early research, mainly posts on the old forum, indicates that it shouldn’t be a problem and is common for arrays with an odd/prime number of disks. This pool, however, has 8, and they say raid1 partitions instead of raid2 partitions. Is this something I should be worried about with the drive or is this expected behavior?

admin@truenas[~]$ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 1M 0 part

├─sda2 8:2 0 512M 0 part

└─sda3 8:3 0 19.5G 0 part

sdb 8:16 0 7.3T 0 disk

├─sdb1 8:17 0 2G 0 part

│ └─md127 9:127 0 2G 0 raid1

│ └─md127 253:1 0 2G 0 crypt

└─sdb2 8:18 0 7.3T 0 part

sdc 8:32 0 7.3T 0 disk

├─sdc1 8:33 0 2G 0 part

│ └─md127 9:127 0 2G 0 raid1

│ └─md127 253:1 0 2G 0 crypt

└─sdc2 8:34 0 7.3T 0 part

sdd 8:48 0 7.3T 0 disk

├─sdd1 8:49 0 2G 0 part

│ └─md126 9:126 0 2G 0 raid1

│ └─md126 253:0 0 2G 0 crypt

└─sdd2 8:50 0 7.3T 0 part

sde 8:64 0 7.3T 0 disk

├─sde1 8:65 0 2G 0 part

└─sde2 8:66 0 7.3T 0 part

sdf 8:80 0 7.3T 0 disk

├─sdf1 8:81 0 2G 0 part

│ └─md126 9:126 0 2G 0 raid1

│ └─md126 253:0 0 2G 0 crypt

└─sdf2 8:82 0 7.3T 0 part

sdg 8:96 0 7.3T 0 disk

├─sdg1 8:97 0 2G 0 part

│ └─md127 9:127 0 2G 0 raid1

│ └─md127 253:1 0 2G 0 crypt

└─sdg2 8:98 0 7.3T 0 part

sdh 8:112 0 7.3T 0 disk

├─sdh1 8:113 0 2G 0 part

│ └─md126 9:126 0 2G 0 raid1

│ └─md126 253:0 0 2G 0 crypt

└─sdh2 8:114 0 7.3T 0 part

sdi 8:128 0 1.8T 0 disk

├─sdi1 8:129 0 2G 0 part

└─sdi2 8:130 0 929.5G 0 part

sdj 8:144 0 7.3T 0 disk

├─sdj1 8:145 0 2G 0 part

│ └─md127 9:127 0 2G 0 raid1

│ └─md127 253:1 0 2G 0 crypt

└─sdj2 8:146 0 7.3T 0 part

sr0 11:0 1 1024M 0 rom
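To spot this kind of gap without reading the tree by eye, the lsblk text can be scanned for disks that have partitions but no md raid device beneath them. The following is a minimal Python sketch, assuming the flattened output format pasted above; the function name `disks_without_md` and its `size` filter are illustrative and not part of any TrueNAS tooling.

```python
TREE_CHARS = "├─└│ "  # tree-drawing glyphs lsblk puts in front of child devices


def disks_without_md(lsblk_text, size=None):
    """Return names of disks that have partitions but no md raid child.

    If `size` is given (e.g. "7.3T"), only disks whose SIZE column matches
    exactly are considered.
    """
    current = None   # top-level disk whose subtree is being read
    has_part = {}    # disk name -> saw at least one partition
    has_raid = {}    # disk name -> saw a raid device beneath it
    for raw in lsblk_text.splitlines():
        line = raw.strip()
        stripped = line.lstrip(TREE_CHARS)
        fields = stripped.split()
        if len(fields) < 6 or fields[0] == "NAME":
            continue  # blank line, header, or not a device row
        name, dev_size, dev_type = fields[0], fields[3], fields[5]
        if stripped == line:  # no tree glyphs, so this is a top-level device
            wanted = dev_type == "disk" and (size is None or dev_size == size)
            current = name if wanted else None
            if current is not None:
                has_part[current] = False
                has_raid[current] = False
        elif current is not None:
            if dev_type == "part":
                has_part[current] = True
            elif dev_type.startswith("raid"):
                has_raid[current] = True
    return [d for d in has_part if has_part[d] and not has_raid[d]]


SAMPLE = """\
NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS
sdd 8:48 0 7.3T 0 disk
├─sdd1 8:49 0 2G 0 part
│ └─md126 9:126 0 2G 0 raid1
│ └─md126 253:0 0 2G 0 crypt
└─sdd2 8:50 0 7.3T 0 part
sde 8:64 0 7.3T 0 disk
├─sde1 8:65 0 2G 0 part
└─sde2 8:66 0 7.3T 0 part
"""

print(disks_without_md(SAMPLE, size="7.3T"))
```

Run against the full paste with `size="7.3T"`, this should report only sde. Without the filter it also lists sda and sdi, which likewise show no md members in the paste.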

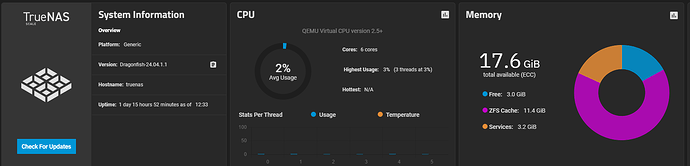

OS Version: TrueNAS-SCALE-24.04.1.1

Product: Standard PC (i440FX + PIIX, 1996)

Model: QEMU Virtual CPU version 2.5+

Memory: 6 GiB

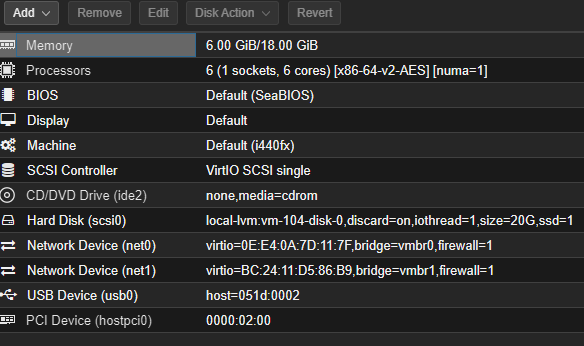

Running as a VM on Proxmox

PCI passthrough of a Dell/**LSI-IT-mode** HBA card for storage drives.

You need to post your complete hardware specs, Proxmox setup, and pool setup. That will help us spot any other issues, such as unsuitable hard drives (SMR vs. CMR), etc.

Memory: 6 GiB is below the minimum shown in the hardware guide:

https://www.truenas.com/docs/scale/24.04/gettingstarted/scalehardwareguide/

For the RAM, I think that is because I have it running with ballooning memory, with a minimum of 6GB. It is actually running with 18GB (see the pictures below).

I have a Dell PowerEdge R720XD running Proxmox. TrueNAS is running as a VM in Proxmox (boot drive is an SSD directly on Proxmox) and the PERC H310 Mini (in IT mode) is passed through to the VM directly, so Proxmox doesn’t see the 12 bays at all; only TrueNAS does. The model for all of the drives is HUH728080AL4200, an HGST 7.28TiB model.
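The 7.28TiB figure and the 7.3T shown by lsblk are the same 8TB drive, measured in binary rather than decimal units. The short Python sketch below works through that conversion and gives a rough upper bound for an 8-wide RAIDZ2 vdev. It uses the User Capacity value that smartctl reports later in this thread, and it ignores the 2G partition on each disk as well as ZFS metadata and padding overhead, so the usable space reported by the pool will be lower. The helper names are illustrative.

```python
TIB = 2**40  # bytes in one tebibyte


def to_tib(n_bytes):
    """Convert a byte count to TiB."""
    return n_bytes / TIB


def raidz_data_capacity_bytes(width, parity, disk_bytes):
    """Rough upper bound: (width - parity) disks' worth of raw space."""
    return (width - parity) * disk_bytes


DISK_BYTES = 8_001_563_222_016  # "User Capacity" from the smartctl output

per_disk = to_tib(DISK_BYTES)
pool = to_tib(raidz_data_capacity_bytes(8, 2, DISK_BYTES))
print(f"per disk: {per_disk:.2f} TiB, RAIDZ2 upper bound: {pool:.2f} TiB")
```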

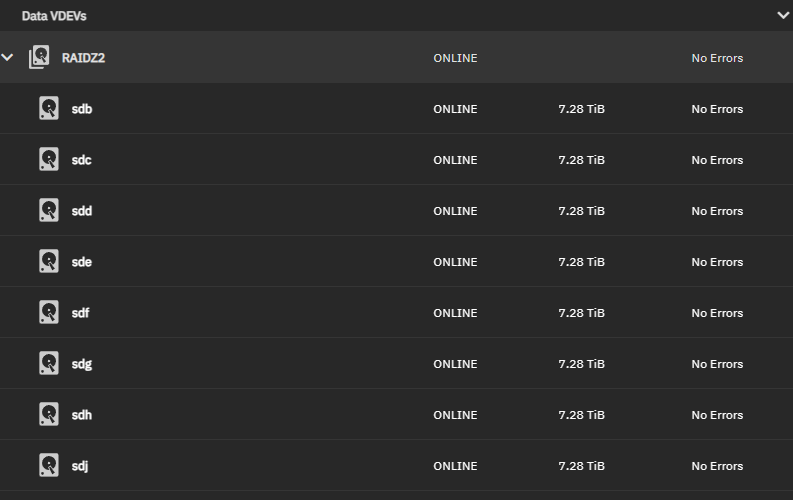

The pool has a single 8-wide vdev as shown below. With the exception of the increased recordsize for the dataset on the pool (128KiB → 1MiB), everything should be default settings.

What other information about the system would be useful?

Just seeing that RAM figure and the passthrough setup is helpful. Did you follow the guides for setting up TrueNAS as a VM? I am not sure whether ballooning memory is okay or not.

See 4.3 in this link:

https://www.truenas.com/blog/yes-you-can-virtualize-freenas/

What was the SMART error you saw? Have you tried removing, wiping, and resilvering the ‘bad’ drive, or anything else? Did you do a drive burn-in before using them, or are these just out of the box, so to speak? Have you checked or swapped the cables to the disks?

After digging around a little bit for the ballooning memory specifically, I’ll probably disable it at the next restart. That article you linked is good too.

I have removed and wiped and resilvered that drive several times. The drives are all used enterprise drives and I have never had any issues with the chassis they are mounted in, but I could try putting it in a different drive bay.

smartctl -a gives the following:

=== START OF INFORMATION SECTION ===

Vendor: HGST

Product: HUH728080AL4200

Revision: A7J0

Compliance: SPC-4

User Capacity: 8,001,563,222,016 bytes [8.00 TB]

Logical block size: 4096 bytes

LU is fully provisioned

Rotation Rate: 7200 rpm

Form Factor: 3.5 inches

Logical Unit id: 0x5000cca3b4002ddc

Serial number: -----1PX [redacted]

Device type: disk

Transport protocol: SAS (SPL-4)

Local Time is: Tue Jul 9 13:27:15 2024 CDT

SMART support is: Available - device has SMART capability.

SMART support is: Enabled

Temperature Warning: Enabled

=== START OF READ SMART DATA SECTION ===

SMART Health Status: OK

Current Drive Temperature: 51 C

Drive Trip Temperature: 85 C

Accumulated power on time, hours:minutes 43155:54

Manufactured in week 13 of year 2016

Specified cycle count over device lifetime: 50000

Accumulated start-stop cycles: 31

Specified load-unload count over device lifetime: 600000

Accumulated load-unload cycles: 1409

Elements in grown defect list: 0

Vendor (Seagate Cache) information

Blocks sent to initiator = 28868108475170816

Error counter log:

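| | Errors corrected by ECC (fast) | Errors corrected by ECC (delayed) | Errors corrected by rereads/rewrites | Total errors corrected | Correction algorithm invocations | Gigabytes processed [10^9 bytes] | Total uncorrected errors |
| --- | --- | --- | --- | --- | --- | --- | --- |
| read: | 0 | 42 | 0 | 42 | 20588656 | 168782.203 | 0 |
| write: | 0 | 3 | 0 | 3 | 3376402 | 74228.360 | 0 |
| verify: | 0 | 0 | 0 | 0 | 94599 | 0.016 | 0 |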

Non-medium error count: 0

SMART Self-test log

| Num | Test Description | Status | segment number | LifeTime (hours) | LBA_first_err | [SK ASC ASQ] |
| --- | --- | --- | --- | --- | --- | --- |
| # 1 | Background short | Completed | - | 43056 | - | [- - -] |
| # 2 | Background short | Completed | - | 43040 | - | [- - -] |
| # 3 | Background long | Completed | - | 43034 | - | [- - -] |
| # 4 | Background short | Completed | - | 43015 | - | [- - -] |
| # 5 | Background short | Completed | - | 43015 | - | [- - -] |
| # 6 | Background short | Completed | - | 43015 | - | [- - -] |
| # 7 | Background short | Completed | - | 43015 | - | [- - -] |
| # 8 | Background long | Completed | - | 43012 | - | [- - -] |
| # 9 | Background short | Failed in segment --> | 3 | 42994 | - | [0x1 0x5d 0xfd] |
| #10 | Background short | Completed | - | 42975 | - | [- - -] |

Long (extended) Self-test duration: 75960 seconds [21.1 hours]
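For anyone who wants to pull the failed entry out of a self-test log like this programmatically, here is a minimal Python sketch. It assumes the plain-text row layout that `smartctl -a` prints for SAS drives (as in the log above); the regular expression and the function name `failed_self_tests` are illustrative. The sense key names are the standard SCSI ones, so 0x1 maps to RECOVERED ERROR. ASC 0x5d belongs to the "failure prediction threshold exceeded" family, but the exact meaning of the 0xfd qualifier is not decoded here and may be vendor-specific.

```python
import re

# Standard SCSI sense key names (subset).
SENSE_KEYS = {
    0x0: "NO SENSE",
    0x1: "RECOVERED ERROR",
    0x2: "NOT READY",
    0x3: "MEDIUM ERROR",
    0x4: "HARDWARE ERROR",
    0x5: "ILLEGAL REQUEST",
    0x6: "UNIT ATTENTION",
    0xB: "ABORTED COMMAND",
}

# One self-test row: "# 9 Background short Failed in segment --> 3 42994 - [0x1 0x5d 0xfd]"
ROW = re.compile(
    r"^#\s*(?P<num>\d+)\s+(?P<test>Background \w+)\s+(?P<status>.+?)\s+"
    r"(?P<segment>-|\d+)\s+(?P<hours>\d+)\s+(?P<lba>\S+)\s+\[(?P<sense>[^\]]*)\]\s*$"
)


def failed_self_tests(log_text):
    """Return one dict per self-test row whose status is not 'Completed'."""
    failures = []
    for line in log_text.splitlines():
        m = ROW.match(line.strip())
        if not m or m["status"].startswith("Completed"):
            continue
        sk = m["sense"].split()[0]  # first value inside the brackets is the sense key
        failures.append({
            "num": int(m["num"]),
            "test": m["test"],
            "hours": int(m["hours"]),
            "sense": m["sense"],
            "sense_key": None if sk == "-" else SENSE_KEYS.get(int(sk, 16), "unknown"),
        })
    return failures


LOG = """\
# 8 Background long Completed - 43012 - [- - -]
# 9 Background short Failed in segment --> 3 42994 - [0x1 0x5d 0xfd]
#10 Background short Completed - 42975 - [- - -]
"""

for entry in failed_self_tests(LOG):
    print(entry)
```

Comparing the `hours` value of a failed row against the drive's accumulated power-on time shows how long ago the failure happened relative to the later passing tests.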

If you have tried that, I am hoping someone else will make some comments.