I managed to fix Immich in Docker on this new NAS build.

The mistake I made:

- Now that I no longer use an NVIDIA graphics card, I forgot to edit the docker compose to remove the -cuda suffix from the machine learning image:

image: ghcr.io/immich-app/immich-machine-learning:${IMMICH_VERSION:-release}-cuda

The correct entry is the same line without -cuda. Immich will then use the CPU for machine learning, which is supposedly slower than using a graphics card:

image: ghcr.io/immich-app/immich-machine-learning:${IMMICH_VERSION:-release}
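For context, here is a minimal sketch of how the machine learning service might look once the GPU bits are gone. The commented-out `extends` block is an assumption: it only applies if your old compose followed the upstream hardware acceleration instructions, and the setup in this post may never have had it.

```yaml
services:
  immich-machine-learning:
    container_name: immich_machine_learning
    # No "-cuda" suffix, so inference runs on the CPU.
    image: ghcr.io/immich-app/immich-machine-learning:${IMMICH_VERSION:-release}
    # Assumption: if your old compose had a block like this for the GPU,
    # remove it (or leave it commented out) as well.
    # extends:
    #   file: hwaccel.ml.yml
    #   service: cuda
```

After editing, the container has to be recreated so that the new image is actually pulled and used.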

So now machine learning works in Immich again and I can run a search, say "cars", and it will show any pics resembling a car in the results.

The other feature is People: it detects the people in your pics and groups images by person, so it’s easier to find pics that way.

The point here is that this build, without a graphics card, still works for Immich machine learning and people grouping.

I'm also sharing what I did to troubleshoot this, in case you are like me and plan to remove a graphics card from your Immich setup but miss a step like I did.

This is the docker compose I am using, which works:

    name: immich
    services:
      immich-server:
        container_name: immich_server
        image: ghcr.io/immich-app/immich-server:${IMMICH_VERSION:-release}
        volumes:
          - ${UPLOAD_LOCATION}:/usr/src/app/upload
          - /etc/localtime:/etc/localtime:ro
          - /mnt/Storage/Pictures/Avatars:/mnt/media/Avatars:ro
        env_file:
          - .env
        ports:
          - 2283:2283
        depends_on:
          - redis
          - database
        restart: unless-stopped
        healthcheck:
          disable: false
      immich-machine-learning:
        container_name: immich_machine_learning
        image: ghcr.io/immich-app/immich-machine-learning:${IMMICH_VERSION:-release}
        volumes:
          - /mnt/docker/data/immich/model-cache:/cache
        env_file:
          - .env
        restart: unless-stopped
        healthcheck:
          disable: false
      redis:
        container_name: immich_redis
        image: redis:alpine
        healthcheck:
          test: redis-cli ping || exit 1
        restart: unless-stopped
      database:
        container_name: immich_postgres
        image: tensorchord/pgvecto-rs:pg14-v0.2.0
        env_file:
          - .env
        environment:
          POSTGRES_PASSWORD: ${DB_PASSWORD}
          POSTGRES_USER: ${DB_USERNAME}
          POSTGRES_DB: ${DB_DATABASE_NAME}
          POSTGRES_INITDB_ARGS: --data-checksums
        volumes:
          # Do not edit the next line. If you want to change the database storage location on your system, edit the value of DB_DATA_LOCATION in the .env file
          - ${DB_DATA_LOCATION}:/var/lib/postgresql/data
        healthcheck:
          test: pg_isready --dbname="$${POSTGRES_DB}" --username="$${POSTGRES_USER}" ||
            exit 1; Chksum="$$(psql --dbname="$${POSTGRES_DB}"
            --username="$${POSTGRES_USER}" --tuples-only --no-align
            --command='SELECT COALESCE(SUM(checksum_failures), 0) FROM
            pg_stat_database')"; echo "checksum failure count is $$Chksum"; [
            "$$Chksum" = '0' ] || exit 1
          interval: 5m
          start_interval: 30s
          start_period: 5m
        command: postgres -c shared_preload_libraries=vectors.so -c
          'search_path="$$user", public, vectors' -c logging_collector=on -c
          max_wal_size=2GB -c shared_buffers=512MB -c wal_compression=on
        restart: unless-stopped
    #volumes:
    #  model-cache: null
    networks: {}

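Notice the top-level volumes section I commented out at the bottom. That was the default (a model-cache volume), but I changed the machine learning service to point at a location I set manually instead (/mnt/docker/data/immich/model-cache). Tested as working with my edits.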
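To make the difference concrete, here is a small sketch of the two variants side by side. The named-volume lines reflect the upstream default as I understand it; the bind-mount path is the one from this post, so swap in your own.

```yaml
services:
  immich-machine-learning:
    volumes:
      # Upstream default: a named volume managed by Docker.
      # - model-cache:/cache
      # Variant used in this post: a bind mount to a fixed path.
      - /mnt/docker/data/immich/model-cache:/cache

# Only needed for the named-volume variant, so it is commented out here.
# volumes:
#   model-cache:
```

With the bind mount, the downloaded models live in a folder you can see and back up, which is presumably why the top-level section is no longer needed.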

The other part is the .env file, where you make the rest of your edits.

If you go to the immich-app/immich repo on GitHub, look for the example .env file as a reference for what to add there.

I deployed mine using Dockge, so I added the .env entries manually in Dockge, which then saves them into the stacks location you specified during the Dockge setup.

For this part, change it to where your media is located. I added a couple of entries similar to this one:

- /mnt/Storage/Pictures/Avatars:/mnt/media/Avatars:ro

Note: the path on the left side is what I set in jailmaker. So if you are using jailmaker, make sure it’s the same as in your jailmaker setup, and not the actual path on your NAS. If you are not using jailmaker, use the full path instead. The path on the right side is the one you will use when adding external libraries in the Immich UI. Hope that clarifies it.
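The left/right split can be sketched like this. The first entry is the one from this post; the second is a made-up example (the Holidays folder is hypothetical) just to show how further libraries follow the same pattern.

```yaml
services:
  immich-server:
    volumes:
      # <path as Docker sees it>:<path inside the container>:<ro = read-only>
      - /mnt/Storage/Pictures/Avatars:/mnt/media/Avatars:ro
      # Hypothetical second library, same pattern:
      - /mnt/Storage/Pictures/Holidays:/mnt/media/Holidays:ro
```

Only the right-hand paths (here /mnt/media/Avatars and the hypothetical /mnt/media/Holidays) are what you would type into the Immich UI.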

Then, when you’ve got Immich up and running, go to admin, external libraries, edit import paths.

Start adding paths like this, and so on:

/mnt/media/Avatars

If you want to be able to browse the contents of the media locations you added, go to user settings, features, enable folders, and add it to the sidebar.

Then go to admin, jobs, and run scan library, smart detection, face detection, and facial recognition.

At this point your Immich is fully set up and usable. Enjoy!

PS: For Immich maintenance, check their GitHub every now and then in case you need to update your docker compose and .env to match their latest versions. Immich releases sometimes include breaking changes that require editing your compose to match theirs. It doesn’t happen often, but it does happen, so if your Immich is set to auto-update (I use Watchtower for that) and it breaks, this could be why. Just a heads up.
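If you would rather update Immich by hand after reading the release notes, one option is to tell Watchtower to skip the Immich containers. This is only a sketch, assuming Watchtower is running in its default mode where it watches every container; it is not part of the compose in this post.

```yaml
services:
  immich-server:
    labels:
      # Watchtower skips containers that carry this label set to false.
      - com.centurylinklabs.watchtower.enable=false
  immich-machine-learning:
    labels:
      - com.centurylinklabs.watchtower.enable=false
```

The server and machine learning images should stay on the same version, so it makes sense to label both the same way.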

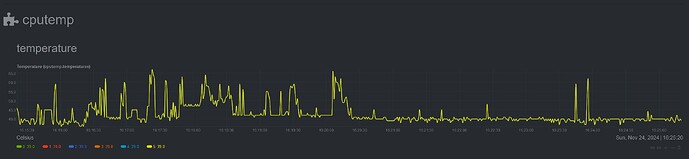

So anyway, on this NAS build, when running the Immich jobs, CPU load stays under 45% and CPU temps hover between 60 and 70°C at most. No issues.

Even without the NVIDIA graphics card (I was using a GTX 1050 before), I can still use Immich and the machine learning and people features just fine.