Ok i already ordered.

leo seller didn't get back to me, and tbh i'm a bit doubtful i could get that to work. it's good for nas and good value, i'm just not confident i can navigate around its quirks

settled on this, already ordered and on the way.

MAG B650 TOMAHAWK WIFI + 7600 am5 cpu

silverstone 4u case

KINGSTON FURY BEAST BLACK 32GB (2*16GB) DDR5 6000MHZ CL30

SEASONIC Focus+ GX Series 80+ 750w (checked the mobo compatibility and also confirmed with seller this works)

scythe mugen 6 black edition single tower (i wanted the single fan, but no stock, so went with this. it was also cheaper than the noctua)

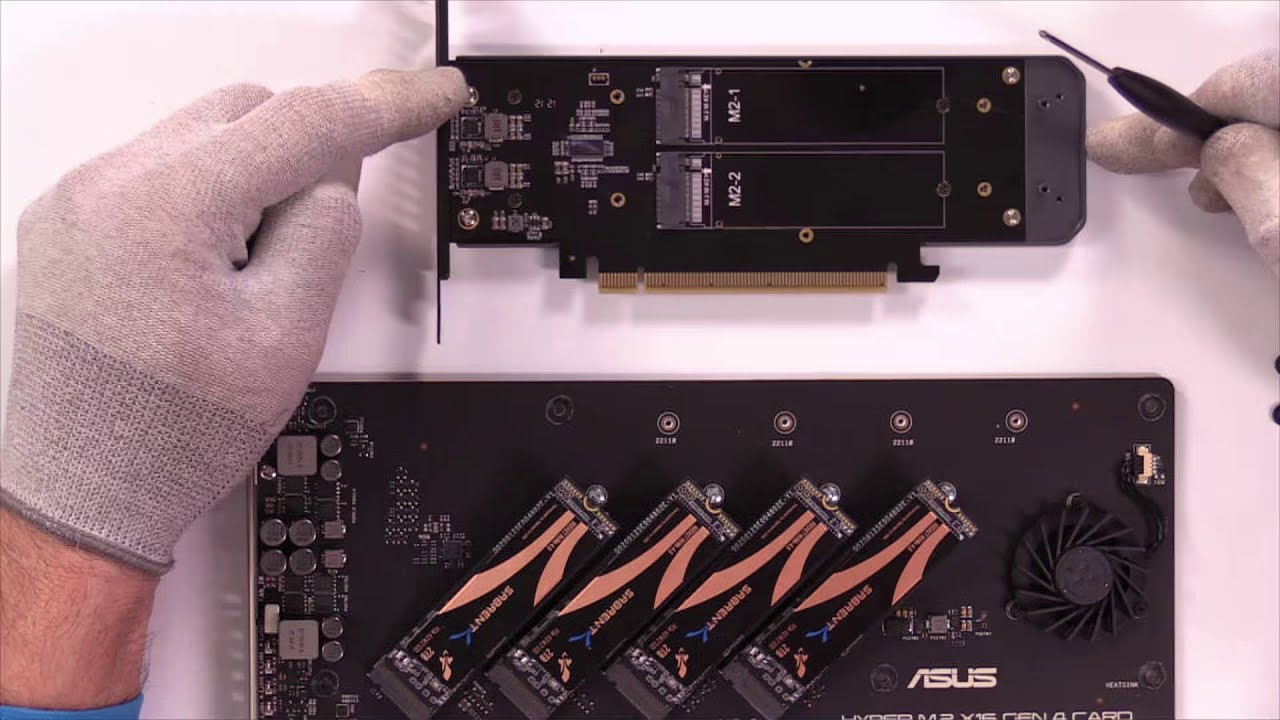

cooleo m.2 heatsink (one of the m.2 ssd slots does not have a heatsink, so using this for that)

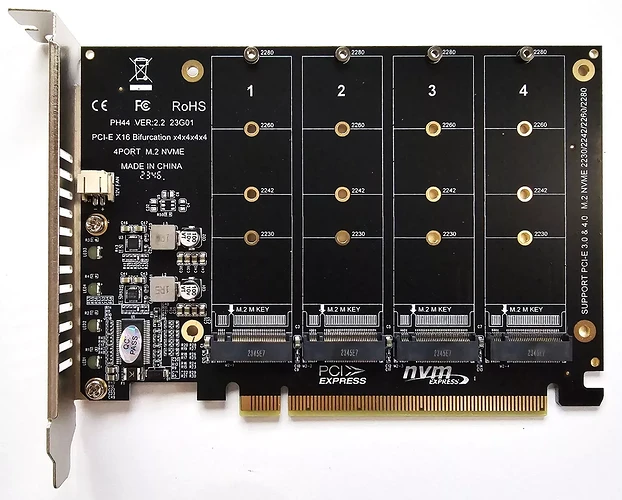

Silicon Power NVMe PCIe Gen4x4 M.2 2280 SSD UD90 500gb (didn't cost much more than the 256gb)

Silicon Power NVMe PCIe Gen4x4 M.2 2280 SSD US75 1tb x2

i'll order the seagate x12 12tb x4 at a later date. i just want to install truenas first before i commit (i'm already balls deep in … )

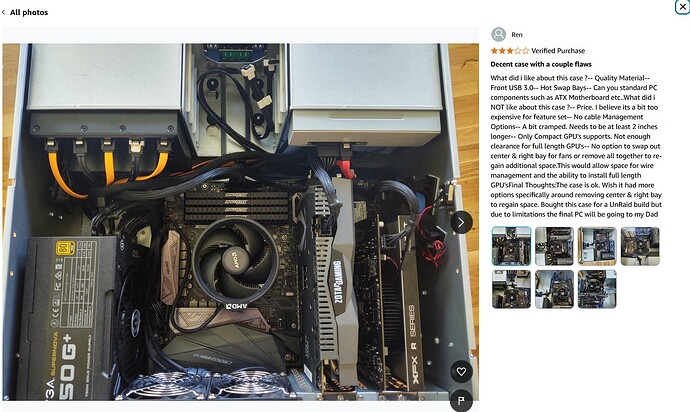

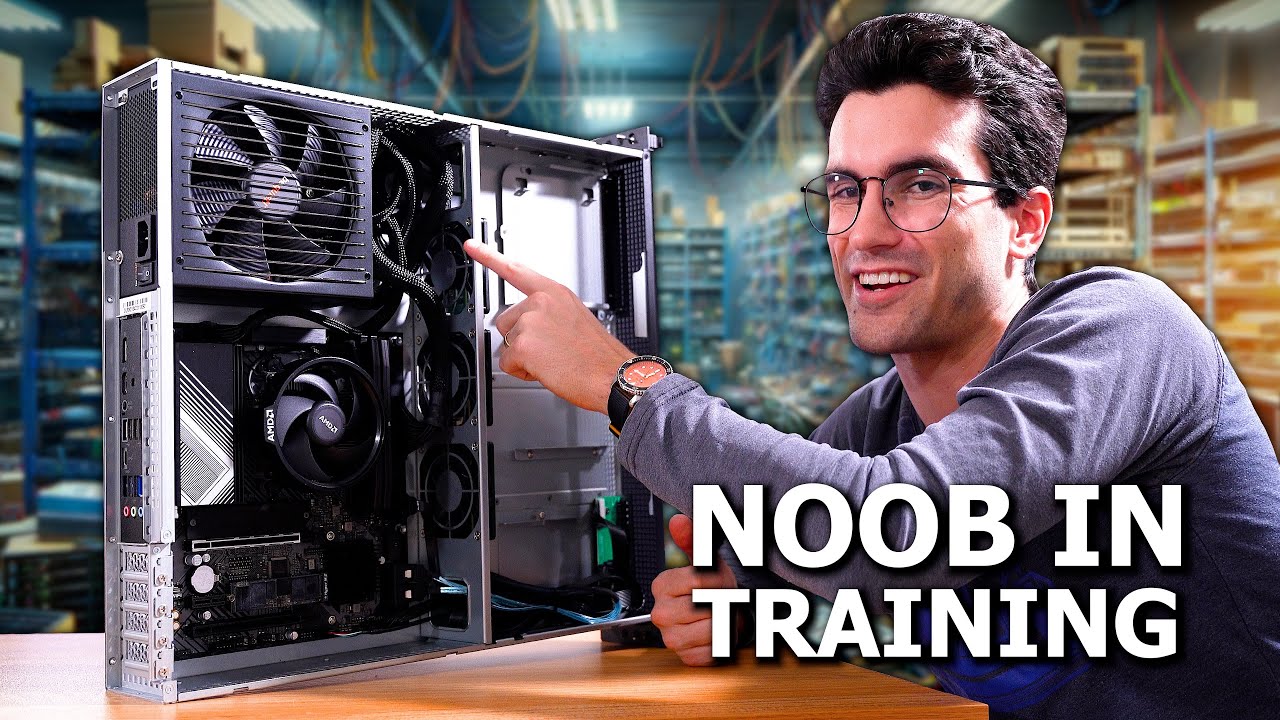

inspired by Greg Salazar’s build (he made 2 videos. 1 was using the same case i ordered, and the 2nd was using a ryzen 7600 cpu. i went with a diff motherboard since i wanted more sata ports, but his pick was good as well). It’s not the perfect build, but it’s what i can work with without too much hassle. i don’t think i did too badly i hope. plz be kind

with the youtube videos i'll try to install this myself

i want to thank etorix and every1 else for their useful tips. i learned a lot. i wasn’t even aware ipmi was a thing before this

i'll have to order the 10gtek sfp+ pcie card, a sfp+ transceiver, and om5 optic cable once the seller is back from their vacation.

till then i can use the 2.5gb port (yes it might be an awful ethernet port, but once my 10gtek arrives, i'll be using that full time like i am for my qnap. no big deal)

i don't have much experience with proxmox, and it will probably just add another layer of complexity, so i'll stick to installing truenas baremetal for dedicated use. i'm aware that with proxmox you don't limit yourself to truenas, you can also install other stuff like vms, e.g. a linux os. with my rack build that may be possible. i'll look it up another time, but for now i'm sticking to what i know.

did ample research, and others are also using something like this, if not the exact same build

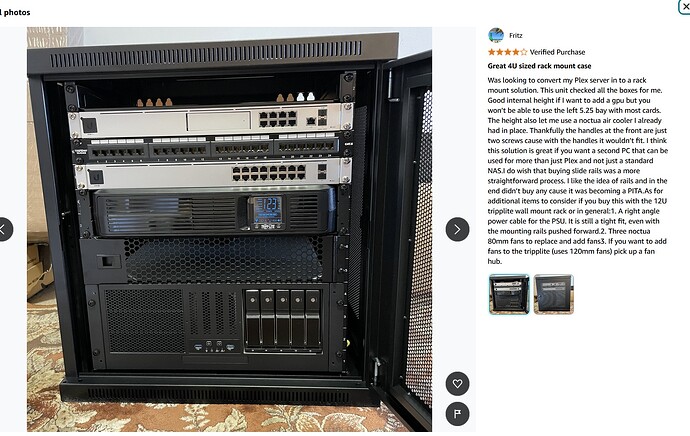

The Ryzen 5700 plays quite nicely for me.

I disabled PBO and put it into eco mode, so it's running very efficiently.

My whole rack consumes about 160W.

Server:

5 HDDs 16TB each,

4 SSDs,

Intel X550-T2

And the 5700 + 128GB DDR4 ECC consuming probably less than half of that.

My Netgear 12 port 10GbE PoE switch, the Router and UPS using the other half.

Is there a command to check power consumption of the CPU?

I would assume the switch uses the most significant share, as it's fully populated and running 4 Access Points off of PoE.
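On the CPU power question, one possible approach is to read the kernel's powercap (RAPL) energy counter twice and divide the difference by the elapsed time. The following is only a rough sketch, not a confirmed TrueNAS feature: it assumes a Linux-based system that exposes the package counter at `/sys/class/powercap/intel-rapl:0` (the path can differ or be missing, especially on AMD), and the names `average_watts`, `read_uj`, and `sample` are made up for illustration.

```python
from pathlib import Path
import time

# Assumed location of the CPU package energy counter; may differ or not exist.
RAPL_DIR = Path("/sys/class/powercap/intel-rapl:0")


def average_watts(start_uj, end_uj, seconds, max_range_uj):
    """Average power in watts between two energy readings in microjoules."""
    delta = end_uj - start_uj
    if delta < 0:
        # The counter wrapped around between the two readings.
        delta += max_range_uj
    return delta / 1_000_000 / seconds


def read_uj(name):
    """Read one integer value (microjoules) from the powercap directory."""
    return int((RAPL_DIR / name).read_text())


def sample(interval=1.0):
    """Measure average CPU package power over `interval` seconds."""
    max_range = read_uj("max_energy_range_uj")
    start = read_uj("energy_uj")
    time.sleep(interval)
    end = read_uj("energy_uj")
    return average_watts(start, end, interval, max_range)
```

Calling `sample()` usually needs root, since `energy_uj` is not world-readable on recent kernels. It only covers the CPU package, so it says nothing about drives, the NIC, or the switch; a wall-socket power meter is still the way to check the whole rack.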
