Hello,

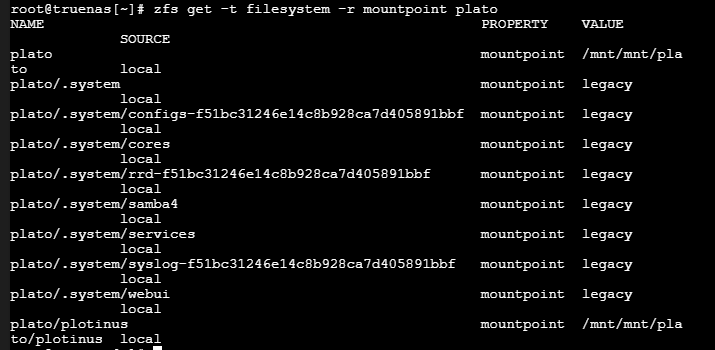

When I first set up my NAS, I had two mountpoints:

/mnt/plato

/mnt/plato/plotinus

I had to reimport my pool, and it created two new mountpoints, possibly because of conflicts.

/plato

/plato/plotinus

I tried remounting my pool to the old mountpoints, but now all my files are hidden and I can’t access them.

How can I correctly mount my pool so that my files are accessible again?

System Specs

Part List - AMD Ryzen 3 3100, Fractal Design Node 804 MicroATX Mid Tower - PCPartPicker

LSI 9302-8i ServeRAID N2215 12Gbps SAS HBA P16 IT mode for ZFS TrueNAS unRAID | eBay

TrueNAS-13.0-U6.1

What do the pools look like in the GUI?

How did you reimport them?

It looks like you may have done so using the shell.

If you import from the shell without the -R /mnt flag (the correct form is: zpool import -R /mnt example_pool), you often end up with broken mount points.

In fact, normally it would fail to mount because the root is usually set to read-only, unless that protection has been manually disabled by the user.


Here we go again!

Have you tried exporting the pool and reimporting it properly through the GUI?


Yes, I did it in the shell without the -R flag. Is there a way to fix it?

No, I haven’t. Is it safe to export the whole pool?

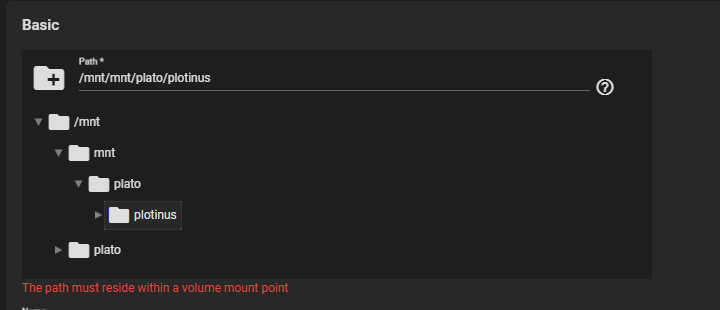

When I reimport the pool, it creates new mountpoints at

/mnt/mnt/plato/plotinus

I assume because

/mnt/plato/plotinus

is already taken.
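Another possible explanation for the extra /mnt, offered as a sketch rather than a diagnosis: ZFS prepends the pool's altroot to each dataset's mountpoint property. If an earlier remount attempt left the property set to /mnt/plato/plotinus, then importing with an altroot of /mnt would give /mnt/mnt/plato/plotinus. The helper name effective_mountpoint below is hypothetical and only mimics that path joining; it does not query ZFS.

```shell
#!/bin/sh
# Hypothetical helper (not a ZFS command): mimics how an altroot is
# prepended to a dataset's mountpoint property to form the real path.
effective_mountpoint() {
    altroot=$1
    mountpoint=$2

    # "none" and "legacy" are not paths, so nothing is prepended.
    case "$mountpoint" in
        none|legacy) printf '%s\n' "$mountpoint"; return 0 ;;
    esac

    # No altroot ("-" is how zpool get displays an unset value).
    case "$altroot" in
        ""|"-"|"/") printf '%s\n' "$mountpoint"; return 0 ;;
    esac

    # Strip one trailing slash from the altroot, then join.
    printf '%s%s\n' "${altroot%/}" "$mountpoint"
}

# Property as the GUI would normally leave it, imported with -R /mnt:
effective_mountpoint /mnt /plato/plotinus       # /mnt/plato/plotinus

# Property manually set to the full path (assumed here), imported with -R /mnt:
effective_mountpoint /mnt /mnt/plato/plotinus   # /mnt/mnt/plato/plotinus

# Imported from the shell without -R:
effective_mountpoint - /plato/plotinus          # /plato/plotinus
```

If that is what happened, the directories under /mnt being "taken" would not be the cause; the stored mountpoint property would be.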

The new mountpoint has all my files, but I can’t add this mountpoint to SMB.

If this worked with SMB then I’d be okay with it. I’m assuming I have to free up my original /mnt/plato/plotinus mountpoint somehow? I don’t know how to delete my original mountpoint to free it up.

What else did you do?

At any point, did you issue any zfs commands against your pool or datasets?

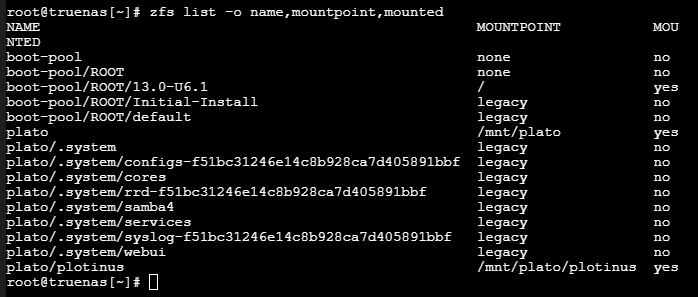

What does this show?

zfs get -t filesystem -r mountpoint plato

I’ve used mount and unmount commands, but not since I reimported. While the pool was exported, I tried deleting the mountpoint directories so they’d be available, but it still appended an extra /mnt when I reimported.

If I could get this mountpoint to work with SMB, then I’d be happy. Do you know why it says “the path must reside within a volume mount point”?

You really should be using an SSH session, rather than the broken “Shell” in Core.

zfs set -u mountpoint=none plato/plotinus

zfs set -u mountpoint=none plato

Now export and reimport the pool via the GUI.

Then report back what the result is for:

zpool get altroot plato

zfs get -t filesystem -r mountpoint plato
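To make the output of that last command easier to read, here is a minimal sketch of a filter that flags mountpoints that look wrong. The function name check_mountpoints and the OK/DOUBLED/OUTSIDE/SKIP labels are made up for illustration. It assumes the pool is meant to live under /mnt and that the input is tab-separated name and value pairs, as produced by adding -H -o name,value to zfs get. The sample lines fed to it below are illustrative, not real output from this system.

```shell
#!/bin/sh
# Hypothetical filter: reads "dataset<TAB>mountpoint" lines on stdin and
# labels each one. Returns non-zero if any mountpoint looks misplaced.
check_mountpoints() {
    status=0
    tab=$(printf '\t')
    while IFS="$tab" read -r name value; do
        case "$value" in
            /mnt/mnt/*)    echo "DOUBLED $name $value"; status=1 ;;
            /mnt/*)        echo "OK $name $value" ;;
            none|legacy|-) echo "SKIP $name $value" ;;
            *)             echo "OUTSIDE $name $value"; status=1 ;;
        esac
    done
    return "$status"
}

# On the NAS itself, the intended use would be something like:
#   zfs get -H -o name,value -t filesystem -r mountpoint plato | check_mountpoints

# Illustrative input only (made-up values, not from the poster's system):
printf 'plato\t/mnt/plato\nplato/plotinus\t/mnt/mnt/plato/plotinus\n' \
    | check_mountpoints || true
```

Anything labeled DOUBLED or OUTSIDE would be worth posting back to the thread along with the altroot value.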

