Multi-Report is a script that was originally designed to monitor key Hard Drive and Solid State Drive data and generate a nice email with a chart depicting the drives and their SMART data, and of course to sound the warning when something is worth noting.

Features:

- Easy To Run (Depends on your level of knowledge)

- Very Customizable

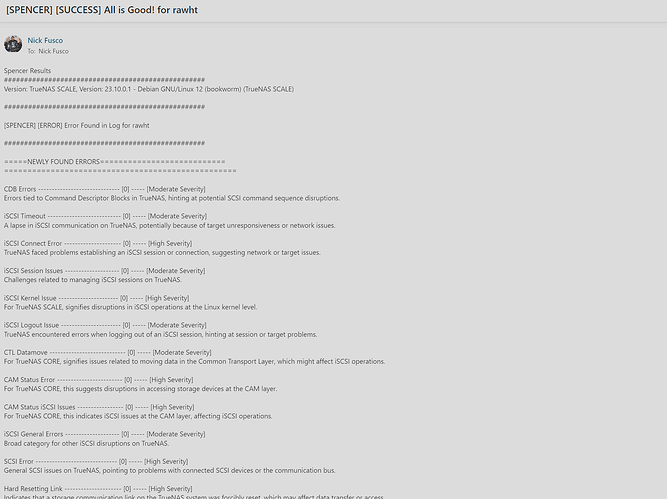

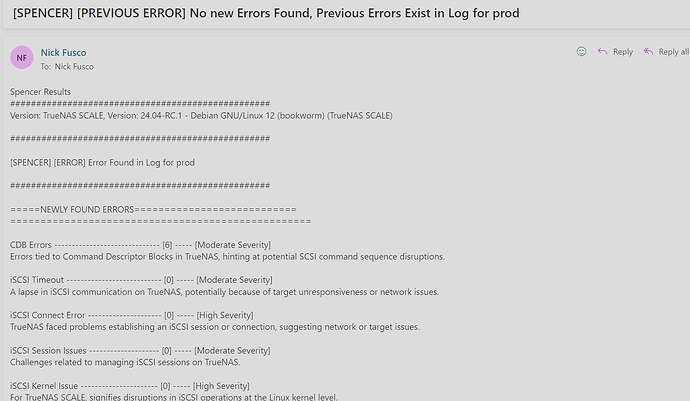

- Sends an Email clearly defining the status of your system

- Runs SMART Self-tests on NVMe (CORE cannot do this yet)

- Online Updates (Manual or Automatic)

- Has Human Support (when I am not living my life)

- Saves Statistical Data in a CSV file that can be used with common spreadsheets

- Sets NVMe to Low Power in CORE (SCALE does this automatically)

- Sets TLER

- And many other smaller things
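Since the statistical data is saved as a CSV file, it can be peeked at from the shell before loading it into a spreadsheet. Here is a minimal sketch, assuming the file starts with a header row and uses plain comma-separated fields with no embedded commas. The helper name `csv_columns` is made up for illustration and is not part of Multi-Report.

```shell
# Hypothetical helper (not part of Multi-Report): list the column names
# of a CSV file, one per line, numbered.
# Assumption: the first line is a header row with simple comma-separated fields.
csv_columns() {
    head -n 1 "$1" | tr ',' '\n' | nl
}
```

Call it with the path to your own statistics file, for example `csv_columns your_statistics_file.csv` (the file name here is a placeholder).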

SMART was designed to try to give the user up to 24 hours of warning before pending doom. Predicting a failure is very difficult, but with a few exceptions SMART works pretty well. Keep in mind that "up to 24 hours" means that once you find out about a problem, the failure could happen at any moment. Just heed the warning.

Multi-Report has been expanded to also run SMART Self-tests on NVMe drives for anyone running TrueNAS 13.0 or TrueNAS 24.04 and below, as these platforms do not have the ability to run and/or configure NVMe Self-tests. This script gives you the option to run these tests if your NVMe supports SMART Self-tests.

Another key feature is emailing you a copy of the TrueNAS configuration file, weekly by default. How many times have you seen someone lose that darn configuration file?

There is an Automatic Update feature that by default will notify you when an update to the script exists. There is another option to have the update applied automatically if you desire.

I have built in troubleshooting help: if you specifically command it, the script can send me (joeschmuck) an email that contains your drive(s) SMART data. I can then figure out whether you need to make a small configuration change or I need to fix the script.

All the files are here on GitHub. I retain a few previous versions in case someone wants to roll back. The files are all dated. Grab the multi_report_vXXXX.txt script and the Multi_Report_User_Guide.pdf; that should get you started.

There is a nice thread on the old TrueNAS Forums here that gives a good history.

Download the script and take it for a spin. Sorry, this forum does not allow uploading of PDF files, so grab the user guide from GitHub, or run the script with the -update switch and it will grab the most current files.

multi_report_v3.0.1_2024_04_13.sh (407.7 KB)
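As a rough sketch of the `-update` route, assuming the script was saved in the current directory under the file name shown above (adjust the path to wherever you keep it). The `update_status` variable is only there for illustration.

```shell
# Assumption: the downloaded script sits in the current directory
# under the file name from this post.
script="./multi_report_v3.0.1_2024_04_13.sh"

if [ -f "$script" ]; then
    chmod +x "$script"      # make sure the script is executable
    "$script" -update       # -update fetches the most current files
    update_status="ran"
else
    echo "Script not found: $script" >&2
    update_status="missing"
fi
```

On TrueNAS you would normally run this as a user with enough privileges to read SMART data; see the user guide for the details.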

An alternative to Multi-Report is FreeNAS-Report, also found in this resource section. Both are based on my code (and that of others who modified the script over the years; their names are at the top of the script), and the code is freeware to share.