Hi All,

I’m racking my brains trying to work out how to get my NVIDIA GTX 1660 working with Plex (transcoding) and Immich (machine learning). I recently upgraded from TrueNAS-Core to Scale (Dragonfish).

I can confirm that Plex is not using my GPU for encoding: my CPU usage spikes considerably when it’s transcoding, and no processes are listed when I run nvidia-smi while transcoding.
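A per-second view of encoder load can make this check less dependent on catching the process list at the right moment. The following is only a sketch under stated assumptions: `enc_busy` is a hypothetical helper name, and it assumes `nvidia-smi dmon -s u` prints a `# gpu ...` header row that includes an `enc` column, which may differ between driver versions.

```shell
# enc_busy: read `nvidia-smi dmon -s u` style output on stdin and exit 0
# if any sample shows encoder utilisation above 0%, otherwise exit 1.
# Assumption: the header row looks like "# gpu  sm  mem  enc  dec ...".
enc_busy() {
  awk '
    /^#/ {
      sub(/^#[ \t]*/, "")            # drop the comment marker; awk re-splits fields
      if ($1 == "gpu")               # column-name header (the units row is skipped)
        for (i = 1; i <= NF; i++) if ($i == "enc") col = i
      next
    }
    col && ($col + 0) > 0 { busy = 1 }   # data row with a non-zero enc value
    END { exit busy ? 0 : 1 }
  '
}

# Example, run while Plex is transcoding (10 one-second samples):
#   nvidia-smi dmon -s u -c 10 | enc_busy && echo "encoder in use" || echo "encoder idle"
```

If the encoder column stays at 0 for the whole transcode, that would be consistent with Plex falling back to software encoding.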

When I run the machine learning pods in Immich, I get a continual ERROR Worker was sent code 139, which is a SIGSEGV memory violation error.

I think the issue is that my GPU is being used by something and is not available to the system, as [VGA Controller] is listed after the GPU when I run lspci – if I understand the meaning of that correctly.
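For what it's worth, the `[VGA controller]` text in `lspci` is the device's PCI class/programming-interface label and is printed whether or not anything is using the card; the `Kernel driver in use` line is what shows which driver has claimed it. A minimal sketch for pulling that line out, where `bound_driver` is a hypothetical helper name:

```shell
# bound_driver: read `lspci -k` (or `lspci -v`) output for one device on
# stdin and print the kernel driver currently bound to it.
# Prints nothing if the device has no "Kernel driver in use" line.
bound_driver() {
  awk -F': ' '/Kernel driver in use/ { print $2; exit }'
}

# Example (01:00.0 is the address from the lspci output in this post):
#   lspci -k -s 01:00.0 | bound_driver
```

A result of `nvidia` would suggest the host driver owns the card, whereas `vfio-pci` would suggest it has been isolated for VM passthrough.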

TrueNAS Scale Version: ElectricEel-24.10.0

Plex Version: 1.0.24

Immich Version: 1.6.24

I do not have any displays connected.

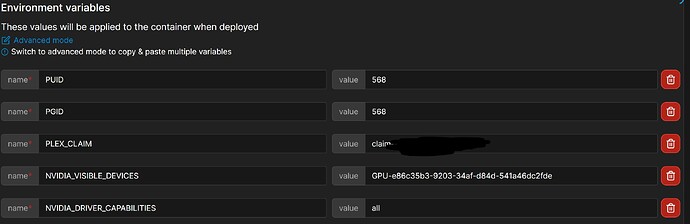

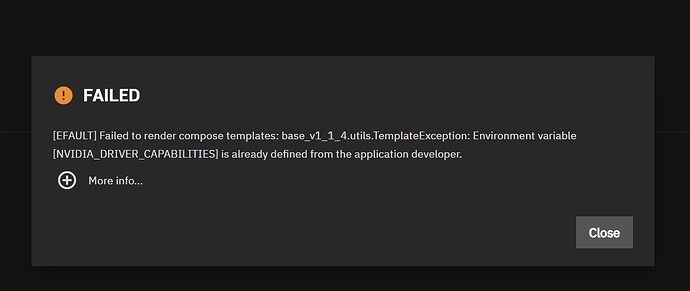

I have followed this post which details adding the following code…

    resources:
      gpus:
        nvidia_gpu_selection:
          '0000:07:00.0':
            use_gpu: true
            uuid: ''  # <<-- the problem
        use_all_gpus: false

… to the user_config.yaml file, located in the ixVolume volume, found at /mnt/.ix-apps/user_config.yaml, and setting the IOMMU and UUID values correctly, which I have done.
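Since `nvidia-smi` prints the bus ID with an 8-digit domain (`00000000:01:00.0` in the output further down) while the YAML key in the example uses a 4-digit domain (`0000:07:00.0`), a small helper can print the address and UUID in the shape the file seems to expect. This is only a sketch: `normalize_bus_id` and `print_gpu_ids` are hypothetical names, and it assumes the key should be the lowercase 4-digit-domain address.

```shell
# normalize_bus_id: convert an nvidia-smi style bus ID such as
# 00000000:01:00.0 to the 4-digit-domain, lowercase form 0000:01:00.0.
# IDs that already use a 4-digit domain pass through unchanged.
normalize_bus_id() {
  printf '%s\n' "$1" | tr 'A-F' 'a-f' | sed 's/^0000\([0-9a-f]\{4\}:\)/\1/'
}

# print_gpu_ids: read "bus_id, uuid" lines on stdin and print
# "<normalized bus id> <uuid>" for each GPU.
print_gpu_ids() {
  while IFS=', ' read -r bus uuid; do
    [ -n "$bus" ] || continue        # skip empty lines
    printf '%s %s\n' "$(normalize_bus_id "$bus")" "$uuid"
  done
}

# Example:
#   nvidia-smi --query-gpu=pci.bus_id,uuid --format=csv,noheader | print_gpu_ids
```

The first field would be the candidate key under `nvidia_gpu_selection` and the second the value for `uuid`.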

I also came across this post. However, I’m able to run the nvidia-smi command without errors.

Interestingly, I don’t have any of the following files on my system:

/etc/modprobe.d/kvm.conf

/etc/modprobe.d/nvidia.conf

/etc/modprobe.d/vfio.conf

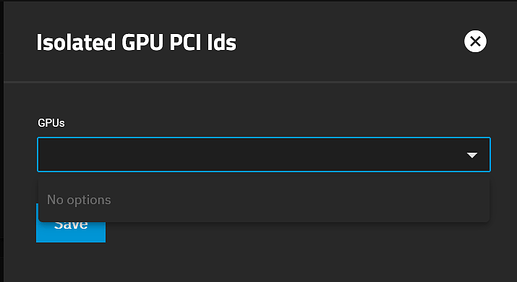

My system also doesn’t present me with any GPUs available for isolation, as shown in the screenshot further below.

Is anyone able to point me in the right direction as to what I should do?

— — — Additional Info — — —

nvidia-smi Output

    root@truenas[~]# nvidia-smi
    Thu Oct 31 12:24:16 2024
    +-----------------------------------------------------------------------------------------+
    | NVIDIA-SMI 550.127.05             Driver Version: 550.127.05     CUDA Version: 12.4     |
    |-----------------------------------------+------------------------+----------------------+
    | GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
    |                                         |                        |               MIG M. |
    |=========================================+========================+======================|
    |   0  NVIDIA GeForce GTX 1660 ...    Off |   00000000:01:00.0 Off |                  N/A |
    | 28%   43C    P0             N/A /  125W |       1MiB /   6144MiB |      0%      Default |
    |                                         |                        |                  N/A |
    +-----------------------------------------+------------------------+----------------------+

    +-----------------------------------------------------------------------------------------+
    | Processes:                                                                              |
    |  GPU   GI   CI        PID   Type   Process name                              GPU Memory |
    |        ID   ID                                                               Usage      |
    |=========================================================================================|
    |  No running processes found                                                             |
    +-----------------------------------------------------------------------------------------+

modprobe Output

    root@truenas[~]# modprobe nvidia_current_drm
    modprobe: FATAL: Module nvidia_current_drm not found in directory /lib/modules/6.6.44-production+truenas
    root@truenas[~]# modprobe nvidia-current
    modprobe: FATAL: Module nvidia-current not found in directory /lib/modules/6.6.44-production+truenas

lsmod Output

    root@truenas[~]# lsmod | grep nvidia
    nvidia_uvm           4911104  0
    nvidia_drm            118784  0
    nvidia_modeset       1605632  1 nvidia_drm
    nvidia              60620800  2 nvidia_uvm,nvidia_modeset
    drm_kms_helper        249856  4 ast,nvidia_drm
    drm                   757760  6 drm_kms_helper,ast,drm_shmem_helper,nvidia,nvidia_drm
    video                  73728  1 nvidia_modeset

lspci Output

    root@truenas[~]# lspci -v
    ...
    01:00.0 VGA compatible controller: NVIDIA Corporation TU116 [GeForce GTX 1660 SUPER] (rev a1) (prog-if 00 [VGA controller])
            Subsystem: NVIDIA Corporation TU116 [GeForce GTX 1660 SUPER]
            Flags: bus master, fast devsel, latency 0, IRQ 16, IOMMU group 1
            Memory at f6000000 (32-bit, non-prefetchable) [size=16M]
            Memory at e0000000 (64-bit, prefetchable) [size=256M]
            Memory at f0000000 (64-bit, prefetchable) [size=32M]
            I/O ports at e000 [size=128]
            Expansion ROM at f7000000 [virtual] [disabled] [size=512K]
            Capabilities: [60] Power Management version 3
            Capabilities: [68] MSI: Enable- Count=1/1 Maskable- 64bit+
            Capabilities: [78] Express Legacy Endpoint, MSI 00
            Capabilities: [100] Virtual Channel
            Capabilities: [250] Latency Tolerance Reporting
            Capabilities: [258] L1 PM Substates
            Capabilities: [128] Power Budgeting <?>
            Capabilities: [420] Advanced Error Reporting
            Capabilities: [600] Vendor Specific Information: ID=0001 Rev=1 Len=024 <?>
            Capabilities: [900] Secondary PCI Express
            Capabilities: [bb0] Physical Resizable BAR
            Kernel driver in use: nvidia
            Kernel modules: nouveau, nvidia_drm, nvidia

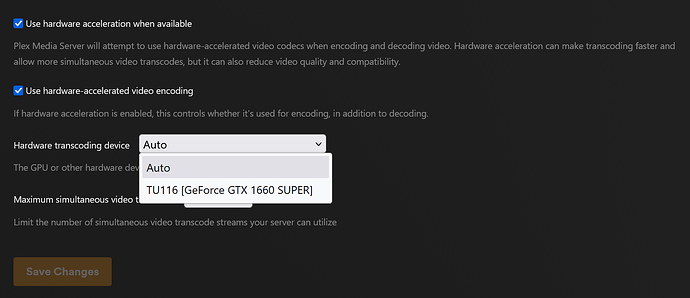

No GPUs to Isolate

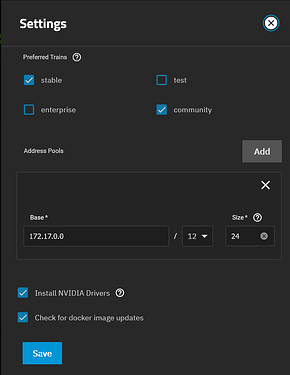

Application Settings

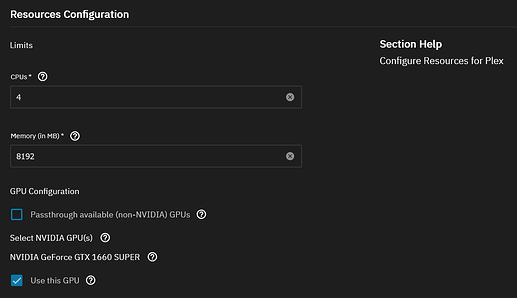

Plex Resources

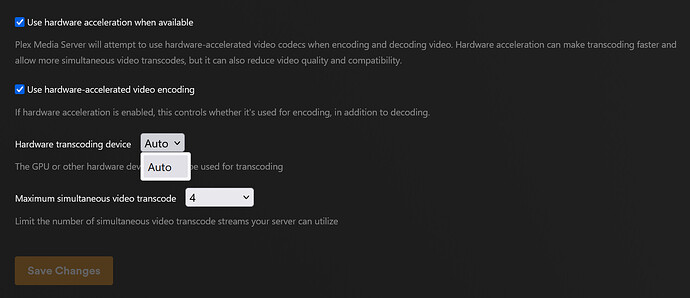

Plex Transcoding Settings - No specific GPU available