I am new to all this. My server would not start, so I removed everything and rebuilt it. Then it started. I was having trouble with the network connection, so I reset to defaults and then I got in. Now I see my pool is missing. I tried to import an existing pool, but nothing shows up. Can anyone please help me get it back with the data?

Version: TrueNAS-13.0-U5.3

Regards Martin

We need a bit more info. What's the difference between your new and your old hardware? Do the disks show up in the BIOS?

What steps have you taken to import the pool ?

If the disks are shown in the BIOS, what's the output of

lsblk

and

sudo zpool import

Paste your output inside ``` (triple backticks).

Here is my personal standard list of commands to run, with the results to copy and paste. It is designed to give community support the basic information it needs, in one go, to diagnose basic issues or check that everything is set up correctly:

lsblk -bo NAME,LABEL,MAJ:MIN,TRAN,ROTA,ZONED,VENDOR,MODEL,SERIAL,PARTUUID,START,SIZE,PARTTYPENAME

sudo ZPOOL_SCRIPTS_AS_ROOT=1 zpool status -vLtsc lsblk,serial,smartx,smart

sudo zpool import

lspci

sudo sas2flash -list

sudo sas3flash -list

sudo storcli show all

for disk in /dev/sd*; do sudo zdb -l "$disk"; done

for disk in /dev/sd?; do sudo hdparm -W "$disk"; done

for disk in /dev/sd?; do sudo smartctl -x "$disk"; done

I did not change anything. When I powered it on, the server did not boot up, so I stripped it down and cleaned it. I put it back together and then it booted. I have six 4 TB NVMe drives mounted on two PCIe cards, and one NVMe boot drive mounted on the motherboard. The BIOS only shows 3 drives, because the drives mounted on each PCIe card just show up as one. It’s been like that from the beginning, and it worked before. I don’t know how to use lsblk or sudo zpool import. I am new to this. Martin

Is the motherboard battery still good?

Have you checked BIOS settings (e.g. bifurcation)?

We’d really like a full hardware description, with make and model of everything, and what’s mounted where, to make sense of the requested outputs. What was the pool layout?

Ideally, you’d set up an SSH session from a terminal on your client, for convenience and so you can cleanly copy the output and paste it here.

Otherwise, go to >_ Shell in the WebUI, type the commands and take (readable!) screenshots.

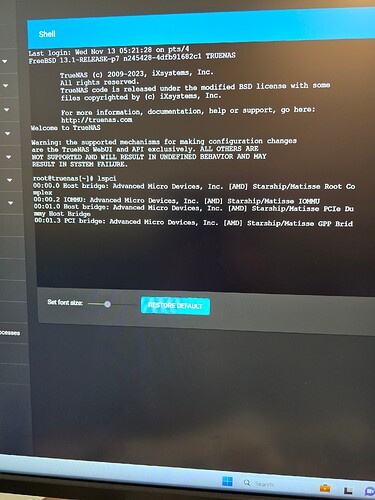

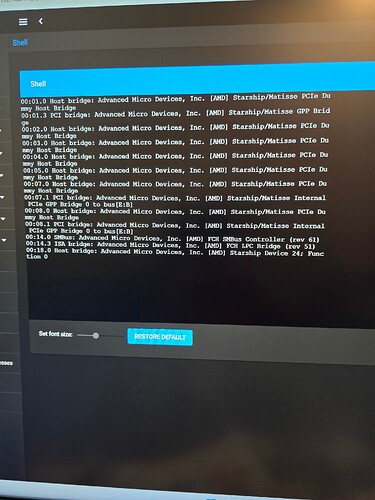

Since you’re on CORE, and with an all-NVMe NAS, the useful commands will be somewhat different:

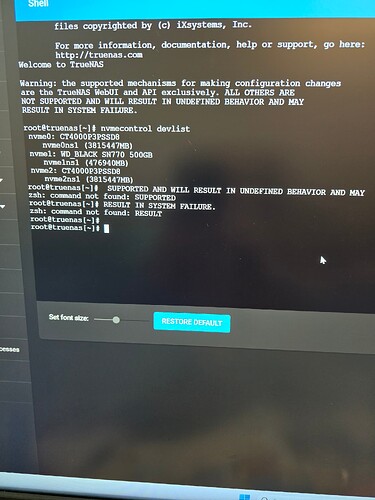

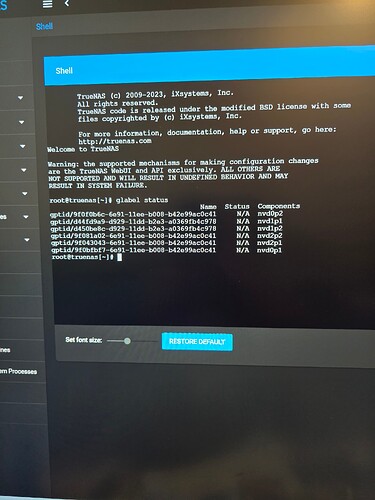

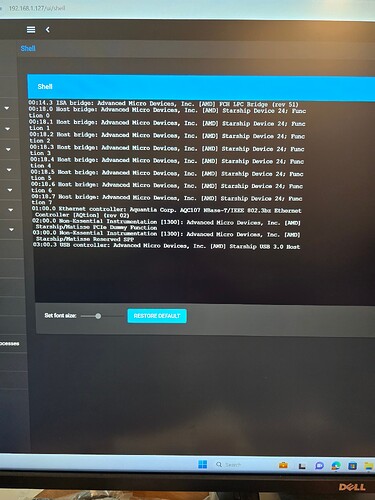

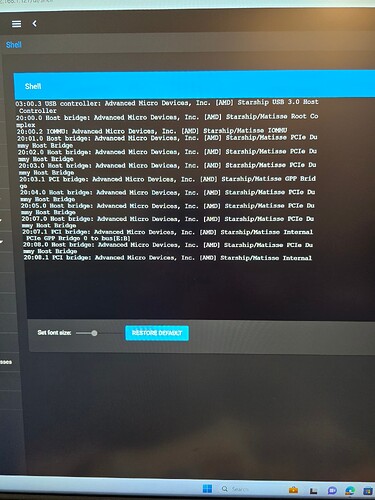

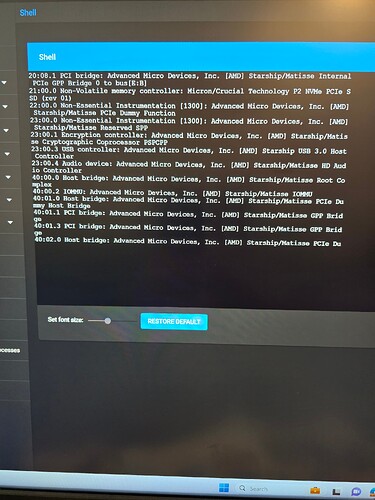

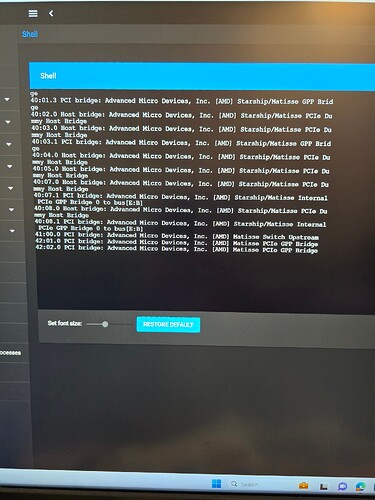

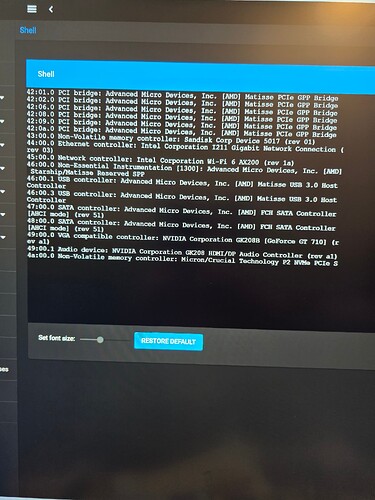

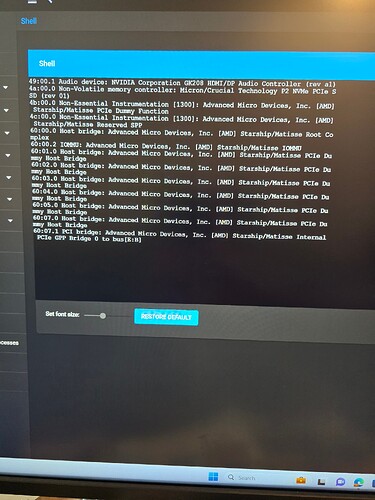

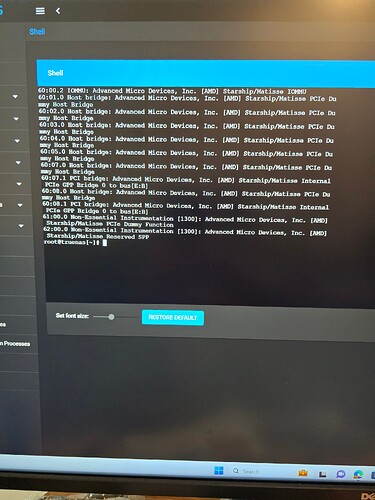

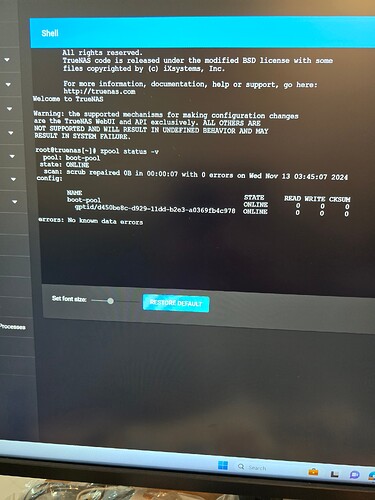

nvmecontrol devlist

glabel status

lspci

zpool status -v

zpool import
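Since pasted text is much easier to read than photos of the screen, here is a minimal sketch that runs the CORE commands listed above and collects their output into one text file. The file name `/tmp/diag.txt` is an arbitrary choice, not anything TrueNAS expects. Save it as a file and run it with `sh`, not by pasting it into the zsh prompt: the script relies on `$cmd` being split into words, which POSIX sh does and zsh by default does not.

```sh
#!/bin/sh
# Sketch: run the CORE diagnostic commands and save all output to one file
# that can be pasted into a forum post. /tmp/diag.txt is an arbitrary name.
OUT="${OUT:-/tmp/diag.txt}"

collect() {
  : > "$OUT"                        # start with an empty file
  for cmd in "nvmecontrol devlist" "glabel status" "lspci" "zpool status -v" "zpool import"; do
    echo "=== $cmd ===" >> "$OUT"
    prog=${cmd%% *}                 # first word of the command, e.g. "zpool"
    if command -v "$prog" >/dev/null 2>&1; then
      $cmd >> "$OUT" 2>&1           # left unquoted on purpose so the arguments split
    else
      echo "($prog not found on this system)" >> "$OUT"
    fi
    echo >> "$OUT"                  # blank line between sections
  done
}

collect
echo "Saved to $OUT"
```

Afterwards, `cat /tmp/diag.txt` prints everything, ready to copy and paste here inside ``` (triple backticks). Note that `zpool import` with no arguments only lists pools; it does not import anything.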

root@truenas [~] # 1sblk

zsh: command not found: 1sblk

root@truenas [~] # sudo zpool import

zsh: command not found: sudo

I typed it in Shell. Is that correct?

That’s correct, but the command is lsblk (list block devices), with a lower-case ‘L’, not ‘one-sblk’. It would still not be found on CORE, because it is a Linux command. CORE does not need sudo either: the administrator is already root.

Try my set of commands above.
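To make the Linux versus CORE difference concrete, a small sketch that prints which commands to type, based on `uname -s` (which reports `FreeBSD` on CORE and `Linux` on SCALE). The helper name `suggest_cmds` is made up for illustration.

```sh
#!/bin/sh
# Sketch: print the disk-listing and pool-listing commands that suit this OS.
# Linux (SCALE): lsblk exists, and a non-root admin needs sudo.
# FreeBSD (CORE): no lsblk; NVMe drives are listed by nvmecontrol, and the
# web UI shell already runs as root, so sudo is not needed.
suggest_cmds() {  # hypothetical helper; $1 is the output of `uname -s`
  case "$1" in
    FreeBSD) printf '%s\n' "nvmecontrol devlist" "zpool import" ;;
    Linux)   printf '%s\n' "lsblk" "sudo zpool import" ;;
    *)       echo "no suggestion for OS: $1" >&2; return 1 ;;
  esac
}

# Show the suggestion for the machine this runs on
suggest_cmds "$(uname -s)" || true
```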

Sorry, I didn't see that you are using CORE, since you tagged the post as SCALE. I changed that for you.

Use @etorix's commands.

Motherboard: Gigabyte TRX40 Aorus Pro

CPU: AMD Ryzen Threadripper 3960X, 24 cores

RAM: 16 GB

LAN: 10 GbE

You said you have two PCIe-to-M.2 adapter cards? The system is only seeing 2 of your 6 NVMe drives. This might be a lack of bifurcation in the BIOS, as etorix previously suggested.

If you use an adapter card that requires bifurcation but don’t have it enabled in the BIOS, the system will only see the first NVMe drive on that card.

Go into your BIOS settings and check whether 4x4x4x4 bifurcation is enabled on the PCIe slots containing the adapter cards.
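After changing the bifurcation setting, one way to confirm that every drive is visible from CORE is to count the NVMe controllers reported by `nvmecontrol devlist`. This is a sketch that assumes the output has one `nvmeN:` line per controller followed by indented namespace lines such as `nvme0ns1 (...)`, and that 7 drives are expected in total (6 pool drives plus the NVMe boot drive described earlier in the thread). `count_nvme` is a hypothetical helper name.

```sh
#!/bin/sh
# Sketch: count NVMe controllers listed by `nvmecontrol devlist` (CORE/FreeBSD).
# Assumes controller lines look like " nvme0: <model>" and namespace lines like
# "    nvme0ns1 (...)"; only the controller lines are counted.
count_nvme() {
  grep -cE '^[[:space:]]*nvme[0-9]+:'
}

EXPECTED=7   # assumption: 6 pool drives + 1 boot drive

if command -v nvmecontrol >/dev/null 2>&1; then
  found=$(nvmecontrol devlist | count_nvme)
  echo "NVMe controllers found: $found (expected $EXPECTED)"
  if [ "$found" -ne "$EXPECTED" ]; then
    echo "Drives are missing: check bifurcation on the adapter card slots."
  fi
fi
```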

That’s not your problem, but you do not have enough RAM to get the performance you likely expect from an all-NVMe 10 GbE NAS.

The good part is that you have a quite resilient raidz2 array. Check BIOS settings, and whether you see all the drives in BIOS.

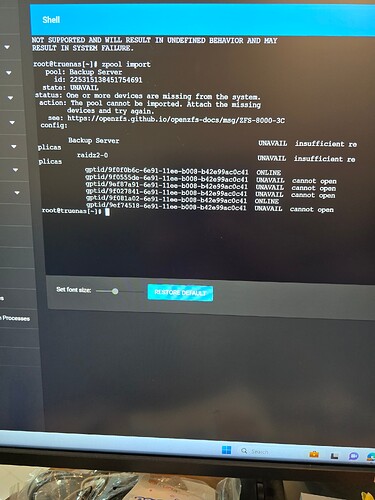

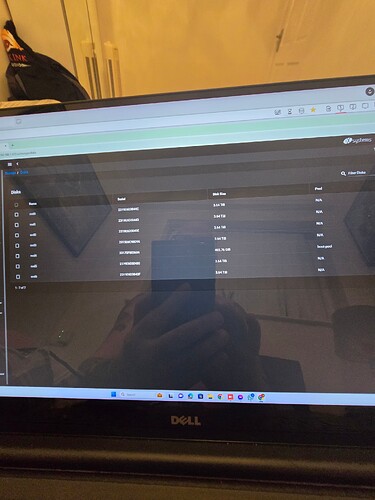

I have changed it in the BIOS, and now I have the boot drive and 6 storage drives. What do I need to do next?

Thanks for the advice on upgrading RAM. I have sorted out the drives in the BIOS, as you can see from my answer to the other technician. So what do I do next?

Can you run the following command again and post the output?

zpool import
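`zpool import` without arguments only lists the pools it can find; it does not import anything. If the listing is long, a sketch like the following pulls out just each pool's name and state, assuming the usual layout with lines such as `pool: tank` and `state: ONLINE` (`summarize_import` is a made-up helper name, and `tank` is only an example pool name).

```sh
#!/bin/sh
# Sketch: print "<pool name> <state>" for each pool in `zpool import` output.
# Assumes the usual "pool:" and "state:" lines; nothing is imported.
summarize_import() {
  awk '$1 == "pool:" { name = $2 } $1 == "state:" { print name, $2 }'
}

# Typical use on CORE:
#   zpool import | summarize_import
```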

@etorix Would a shortage of RAM be indicated by a report showing an ARC hit rate of less than (say) 99% or is there some other report that would indicate this?

I would imagine that a 16GB machine (running no apps or VMs) would have c. 11GB of ARC - so I suppose that this is below some sort of guideline which says 1GB of ARC for each TB of data i.e. 16GB of ARC for 16TB of usable storage, but I would imagine that the amount of ARC actually needed in reality to achieve (say) a 99% hit rate would depend very much on the workload, and in particular how many reads are going to benefit from sequential pre-fetch, and how much data will be read repeatedly. I also imagine that the most important thing is to have all the metadata cached as this is definitely accessed repeatedly.

For example, my server has only 2.5GB-3GB of ARC serving 16TB of usable storage (and c. 7TB of actual data), and I still get a 99% ARC hit rate, primarily due to it being a media server and the streaming benefiting from pre-fetch.
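The 16 TB figure follows from the pool layout: assuming all six 4 TB drives form a single raidz2 vdev, two drives' worth of space goes to parity, leaving about (6 - 2) × 4 = 16 TB. A rough sketch of that arithmetic together with the 1 GB per TB rule of thumb, ignoring ZFS overhead and the TB/TiB difference (`raidz_usable_tb` is a hypothetical helper):

```sh
#!/bin/sh
# Sketch: ballpark usable capacity of one raidz vdev, plus the
# "1 GB per TB" rule of thumb. Ignores metadata/padding overhead and TB vs TiB.
raidz_usable_tb() {  # args: number of drives, drive size in TB, parity drives
  echo $(( ($1 - $3) * $2 ))
}

usable=$(raidz_usable_tb 6 4 2)   # assumption: 6 x 4 TB NVMe in one raidz2 vdev
echo "usable: ~${usable} TB, rule-of-thumb ARC: ~${usable} GB"
```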

1 GB/TB is a very rough rule of thumb; I don’t think we are supposed to go that deep into the numbers.

But the basic concern with > 1 GbE networking is that RAM also serves as write cache. Two txgs (transaction groups) at 10 Gb/s could be up to 10 to 12 GB of received data, leaving 4 GB or less for ARC. Here that’s maybe still enough for metadata, and the data itself is quickly accessible from NVMe… But having enough RAM for both ARC and write cache is the reason to suggest at least 32 GB with fast networking.
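The write-cache estimate is simple arithmetic. A sketch of the worst case, assuming the link runs at full line rate for two whole transaction groups and the OpenZFS default txg timeout of 5 seconds; ZFS also caps outstanding dirty data through the `zfs_dirty_data_max` tunable, so real usage should stay below this figure.

```sh
#!/bin/sh
# Sketch: worst-case RAM held by incoming writes for two txgs at 10 GbE.
# Assumes the default 5 s txg timeout and decimal units for simplicity.
LINK_MBIT=10000     # 10 GbE
TXG_SECONDS=5       # assumed default txg timeout
TXGS=2
RAM_MB=16000        # 16 GB in this system

mb_per_s=$(( LINK_MBIT / 8 ))                  # bits to bytes: 1250 MB/s
dirty_mb=$(( mb_per_s * TXG_SECONDS * TXGS ))  # data received over two txgs
left_mb=$(( RAM_MB - dirty_mb ))               # left for ARC and everything else

echo "in flight: ${dirty_mb} MB, left: ${left_mb} MB"
```

This prints `in flight: 12500 MB, left: 3500 MB`, roughly in line with the estimate above.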

Back to the actual issue. All 6 drives are shown in GUI, and should also appear from nvmecontrol devlist. What does zpool import report?

I have nothing to add to the main topic, and you’re getting great help from the others here, but to perhaps assist in future endeavours, my suggestion would be to inspect your images before you post them and crop them if necessary.

Have a good day.

Not to mention taking screen snapshots from Windows rather than photographs of the screen…