Hi everybody,

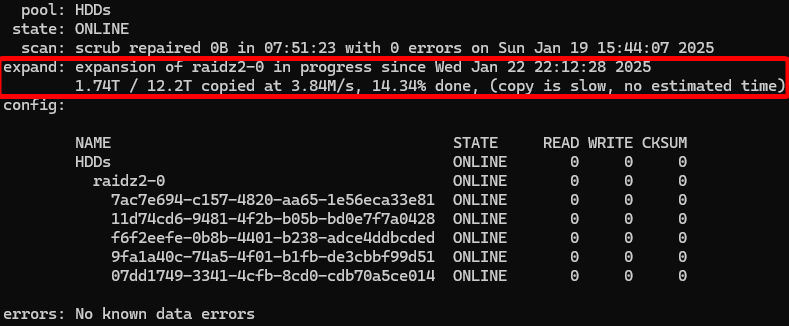

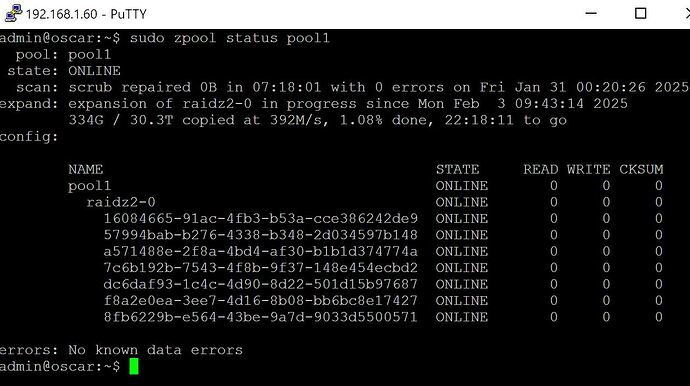

A week ago I started the expansion of my RAID-Z2 pool.

The expansion is extremely slow and I would love to figure out why.

Things I’ve tried:

- RAIDZ Expansion speedup

- Rebooting the system

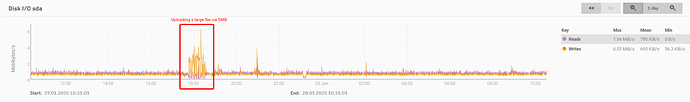

I don’t think that my drive speed is the limit:

`zpool iostat -v`:

                                                capacity    operations     bandwidth
    pool                                      alloc   free   read  write   read  write
    ----------------------------------------  -----  -----  -----  -----  -----  -----
    HDDs                                      12.2T  9.68T    267    554  3.20M  3.34M
      raidz2-0                                12.2T  9.68T    267    554  3.20M  3.34M
        7ac7e694-c157-4820-aa65-1e56eca33e81      -      -      1     49   819K   685K
        11d74cd6-9481-4f2b-b05b-bd0e7f7a0428      -      -      1     51   819K   685K
        f6f2eefe-0b8b-4401-b238-adce4ddbcded      -      -    131    157   818K   685K
        9fa1a40c-74a5-4f01-b1fb-de3cbbf99d51      -      -    133    159   818K   685K
        07dd1749-3341-4cfb-8cd0-cdb70a5ce014      -      -      0    137    372   678K
    ----------------------------------------  -----  -----  -----  -----  -----  -----
    boot-pool                                 7.82G   224G      0     10  3.23K   114K
      nvme0n1p3                               7.82G   224G      0     10  3.23K   114K
    ----------------------------------------  -----  -----  -----  -----  -----  -----

`iostat -x`:

    Linux 6.6.44-production+truenas (Nexus)  01/28/25  _x86_64_  (4 CPU)

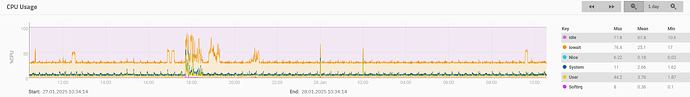

    avg-cpu:   %user   %nice %system %iowait  %steal   %idle
                3.61    0.17    3.02   25.20    0.00   68.00

    Device        r/s    rkB/s   rrqm/s    %rrqm  r_await rareq-sz      w/s    wkB/s   wrqm/s    %wrqm  w_await wareq-sz      d/s    dkB/s   drqm/s    %drqm  d_await dareq-sz      f/s  f_await   aqu-sz    %util
    loop1        0.01     0.28     0.00     0.00     0.06    55.27     0.00     0.00     0.00     0.00     0.00     0.00     0.00     0.00     0.00     0.00     0.00     0.00     0.00     0.00     0.00     0.00
    loop2        0.00     0.01     0.00     0.00     0.16    18.88     0.00     0.00     0.00     0.00     0.00     0.00     0.00     0.00     0.00     0.00     0.00     0.00     0.00     0.00     0.00     0.00
    nvme0n1      0.11     3.41     0.00     0.02     0.15    32.29    11.27   113.51     0.00     0.00     0.05    10.07     0.00     0.00     0.00     0.00     0.00     0.00     0.29     0.34     0.00     0.03
    sda        133.69   817.65     0.09     0.07     0.14     6.12   159.32   684.90     0.03     0.02     0.12     4.30     0.00     0.00     0.00     0.00     0.00     0.00     0.11     7.51     0.04     2.39
    sdb          1.84   819.07     0.00     0.08   132.00   445.91    51.24   684.72     0.03     0.05    39.73    13.36     0.00     0.00     0.00     0.00     0.00     0.00     0.11   156.90     2.30    97.77
    sdc          1.84   819.11     0.00     0.07   129.35   446.21    50.15   684.75     0.03     0.05    40.86    13.65     0.00     0.00     0.00     0.00     0.00     0.00     0.11   157.77     2.30    97.82
    sdd        131.09   817.68     0.10     0.08     0.18     6.24   157.50   684.89     0.02     0.01     0.14     4.35     0.00     0.00     0.00     0.00     0.00     0.00     0.11     8.67     0.05     2.73
    sde          0.02     0.42     0.00     0.20    53.13    21.69   137.16   677.51     0.03     0.03     3.74     4.94     0.00     0.00     0.00     0.00     0.00     0.00     0.11    68.98     0.52    24.67

I’ve noticed in `iostat -x` that sdb and sdc are at nearly full utilization (about 98 %util). The drive models are:

- sdb, sdc, sde: WD60EFAX

- sda, sdd: WD60EFZX

That is weird, because the FAX should be better than the FZX…
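To make the pattern easier to spot, here is a minimal Python sketch that flags the drives sitting near saturation. The rows are copied from the `iostat -x` output in this post (only the r/s, r_await, w/s, w_await and %util columns). The `busy_devices` helper and the 90% threshold are assumptions chosen for illustration, not part of any tool.

```python
# Selected columns copied from the iostat -x output in this post:
# device, r/s, r_await (ms), w/s, w_await (ms), %util
ROWS = """
sda 133.69 0.14 159.32 0.12 2.39
sdb 1.84 132.00 51.24 39.73 97.77
sdc 1.84 129.35 50.15 40.86 97.82
sdd 131.09 0.18 157.50 0.14 2.73
sde 0.02 53.13 137.16 3.74 24.67
"""


def busy_devices(rows: str, util_threshold: float = 90.0):
    """Return (device, %util, r_await, w_await) for devices at or above the threshold.

    The threshold is an arbitrary illustrative cut-off, not an iostat default.
    """
    flagged = []
    for line in rows.strip().splitlines():
        dev, _rps, r_await, _wps, w_await, util = line.split()
        if float(util) >= util_threshold:
            flagged.append((dev, float(util), float(r_await), float(w_await)))
    return flagged


for dev, util, r_await, w_await in busy_devices(ROWS):
    print(f"{dev}: {util:.1f}% util, r_await {r_await:.0f} ms, w_await {w_await:.0f} ms")
```

With the numbers above this flags only sdb and sdc. Both show read and write latencies far higher than sda and sdd, even though every drive moves roughly the same amount of data per second.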

Is this ZFS PR related?

System Specs

- ElectricEel-24.10.1

- CPU: Intel N100

- HDD: 5x WD Red Pro

- Connected via SATA

- Split over 4 PCIe Lanes

- RAM: 16GB

Is there anything I can do to speed up the expansion?

Or do I just have to wait until it finishes in a few months?
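For a rough sense of the time scale, here is a back-of-the-envelope sketch in Python. It assumes a constant copy rate and that the `T` and `M` units in `zpool iostat` are binary (TiB, MiB). The `eta_days` function is a hypothetical helper, and the inputs are only illustrative: 12.2T is the allocated size and 3.34M/s the pool write bandwidth from the `zpool iostat -v` output above, which is not necessarily the rate at which the expansion itself copies data. The post does not state how much has been copied so far, so that value is left at zero as a placeholder.

```python
def eta_days(total_tib: float, copied_tib: float, rate_mib_s: float) -> float:
    """Days left if the remaining data is copied at a constant rate."""
    if rate_mib_s <= 0:
        raise ValueError("rate must be positive")
    remaining_mib = (total_tib - copied_tib) * 1024 * 1024  # TiB -> MiB
    return remaining_mib / rate_mib_s / 86_400  # seconds -> days


# Illustrative inputs only (see the assumptions above); copied_tib is a placeholder.
print(f"{eta_days(total_tib=12.2, copied_tib=0.0, rate_mib_s=3.34):.0f} days")
```

Plugging in the copied amount and copy rate that `zpool status` reports for the expansion, if it shows them, should give a more meaningful figure than the averaged iostat bandwidth.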

Also, why does the expansion need to go through the whole 12TB of my pool, while only ~7TB are used?