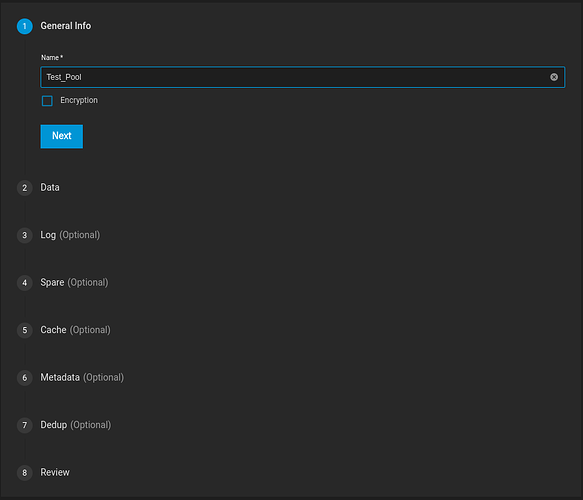

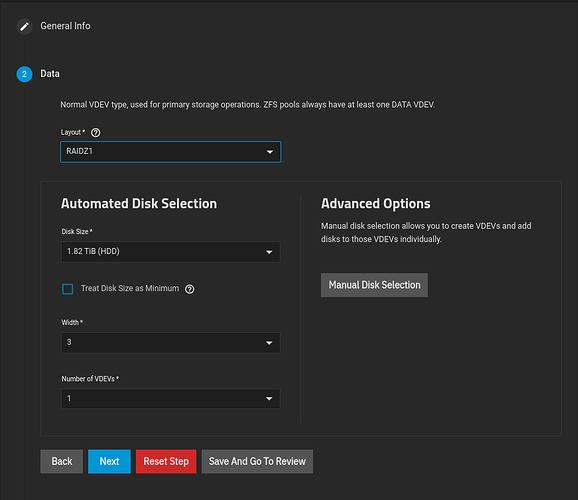

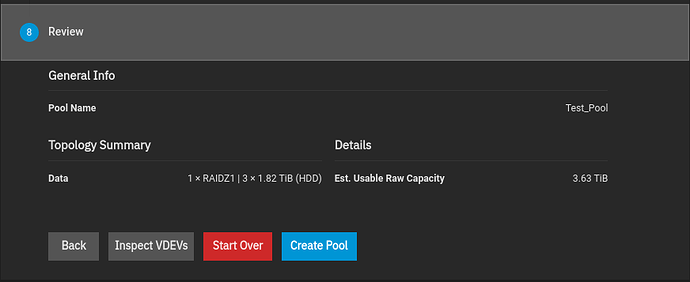

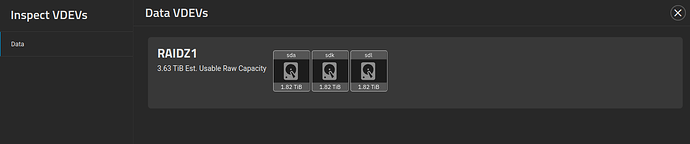

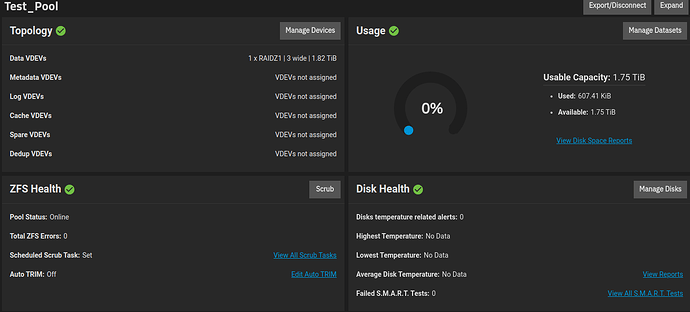

I am creating a new pool (for offsite backup) with three 2TB drives. They are mixed brands and RPMs, so I expect performance to be poor. The problem I am running into is that the pool’s capacity is only 50% of what I am expecting. Can someone help me understand/troubleshoot this?

Welcome to the TrueNAS forums!

It almost seems like you have a smaller disk in the RAID-Z1 set, except that one of your screenshots shows 3 x 2TB disks…
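A rough sketch of the arithmetic behind that suspicion, in Python. It assumes the usual simplification that a RAID-Z1 vdev of n members stores about (n - 1) times its smallest member, and it ignores ZFS metadata, padding and slop space. `raidz_usable` is a hypothetical helper; the byte counts are taken from the smartctl and lsblk output later in this thread.

```python
def raidz_usable(member_sizes, parity=1):
    """Approximate usable bytes of one RAID-Z vdev.

    Every member is treated as if it were the size of the smallest one,
    and `parity` members' worth of space goes to parity. ZFS metadata,
    padding and slop space are ignored.
    """
    if len(member_sizes) <= parity:
        raise ValueError("a RAID-Z vdev needs more members than parity disks")
    return (len(member_sizes) - parity) * min(member_sizes)


TB = 10**12
GIB = 2**30

disk = 2_000_398_934_016      # "User Capacity" of each 2TB drive (smartctl)
half = int(931.5 * GIB)       # a partition the size of sdk1 (lsblk)

expected = raidz_usable([disk, disk, disk])
shrunk = raidz_usable([disk, disk, half])

print(f"3 full disks:         ~{expected / TB:.2f} TB")
print(f"one half-size member: ~{shrunk / TB:.2f} TB")
```

With one member limited to a 931.5 GiB partition, the whole vdev drops to roughly half of the expected capacity, which matches the symptom in the first post.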

Please supply the output of the following commands in CODE tags:

zpool list -v

zpool status

lsblk

This should help determine the problem.

Further, did you set up any ZFS quotas or reservations?

Thanks for the response!

I have been trying various things since the original setup.

I tried creating 3 separate pools, one for each drive, to see which drives are causing trouble.

sda: looks fine,

sdk: 50% capacity,

sdl: 50% capacity

So I tried to “Expand Pool”:

sda: success, no change,

sdk: immediately faulted,

sdl: didn’t work; I think it was because of the task running on sdk, and I didn’t want to wait.

So I deleted the pool with sdl and started a full disk wipe with random zero. I was not able to export sdk since it still had an “expand” job running.

Here are the relevant sections of the commands you asked for:

root@truenas[/home/admin]# zpool list -v

| NAME | SIZE | ALLOC | FREE | CKPOINT | EXPANDSZ | FRAG | CAP | DEDUP | HEALTH | ALTROOT |
|---|---|---|---|---|---|---|---|---|---|---|
| ait2408 | 14.5T | 4.08T | 10.5T | - | - | 0% | 28% | 1.00x | ONLINE | /mnt |
| raidz1-0 | 14.5T | 4.08T | 10.5T | - | - | 0% | 28.0% | - | ONLINE | |
| c62ab49a-dd76-4905-ba8b-f761866178ec | 3.64T | - | - | - | - | - | - | - | ONLINE | |
| 7cfb5e69-dfa2-4172-97de-e70f3fa6ca84 | 3.64T | - | - | - | - | - | - | - | ONLINE | |
| 3172644c-64b5-4de2-9e37-8dbc73e5f30a | 3.64T | - | - | - | - | - | - | - | ONLINE | |
| d76a47bb-b2fa-439c-a2c3-5d47447a4bc1 | 3.64T | - | - | - | - | - | - | - | ONLINE | |
| boot-pool | 95G | 10.2G | 84.8G | - | - | 3% | 10% | 1.00x | ONLINE | - |
| mirror-0 | 95G | 10.2G | 84.8G | - | - | 3% | 10.7% | - | ONLINE | |
| sdg3 | 95.3G | - | - | - | - | - | - | - | ONLINE | |
| sdi3 | 95.3G | - | - | - | - | - | - | - | ONLINE | |
| pcs_backup | 3.62T | 2.30T | 1.32T | - | - | 12% | 63% | 1.00x | ONLINE | /mnt |
| 745aed7c-ca22-41d0-9bac-5d4182bec4ee | 3.64T | 2.30T | 1.32T | - | - | 12% | 63.5% | - | ONLINE | |
| pny-ssd-pool | 1.81T | 684G | 1.14T | - | - | 30% | 36% | 1.00x | ONLINE | /mnt |
| 03cbf51d-fc09-469f-8c88-d142507634cb | 930G | 339G | 589G | - | - | 31% | 36.5% | - | ONLINE | |
| 563dd475-6100-4e05-9e8a-c86e737e9c6c | 930G | 346G | 582G | - | - | 29% | 37.2% | - | ONLINE | |
| test1 | 1.81T | 540K | 1.81T | - | - | 0% | 0% | 1.00x | ONLINE | /mnt |
| 1e0e1882-800c-4fc4-a5d6-0c04b18cd077 | 1.82T | 540K | 1.81T | - | - | 0% | 0.00% | - | ONLINE | |
| test2 | 928G | 456K | 928G | - | - | 0% | 0% | 1.00x | SUSPENDED | /mnt |
| 22c2b5dd-54d2-4489-a14c-17857767cf9f | 932G | 456K | 928G | - | - | 0% | 0.00% | - | FAULTED | |

root@truenas[/home/admin]#
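When reading this output, note that for a RAID-Z vdev `zpool list` reports raw space including parity, so ait2408's 14.5T is roughly 4 x 3.64T rather than what datasets can actually store (`zfs list` shows the latter). The Python sketch below redoes that arithmetic. `parse_size` is a hypothetical helper that assumes the binary suffixes (K, M, G, T) these tools print, and the usable figure ignores metadata and padding overhead.

```python
UNITS = {"K": 2**10, "M": 2**20, "G": 2**30, "T": 2**40}


def parse_size(text):
    """Convert a zpool/lsblk style size such as '3.64T' into bytes."""
    return float(text[:-1]) * UNITS[text[-1]]


members = ["3.64T"] * 4   # the four raidz1-0 members of ait2408
parity = 1                # raidz1

raw = sum(parse_size(s) for s in members)
usable = raw * (len(members) - parity) / len(members)

print(f"raw (what zpool list shows): ~{raw / UNITS['T']:.2f}T")
print(f"usable before overhead:      ~{usable / UNITS['T']:.2f}T")
```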

(trimmed)

pool: test1

state: ONLINE

config:

| NAME | STATE | READ | WRITE | CKSUM |
|---|---|---|---|---|
| test1 | ONLINE | 0 | 0 | 0 |
| 1e0e1882-800c-4fc4-a5d6-0c04b18cd077 | ONLINE | 0 | 0 | 0 |

errors: No known data errors

pool: test2

state: SUSPENDED

status: One or more devices are faulted in response to IO failures.

action: Make sure the affected devices are connected, then run 'zpool clear'.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-JQ

config:

| NAME | STATE | READ | WRITE | CKSUM | |
|---|---|---|---|---|---|
| test2 | FAULTED | 0 | 0 | 0 | corrupted data |
| 22c2b5dd-54d2-4489-a14c-17857767cf9f | FAULTED | 0 | 0 | 0 | corrupted data |

errors: 4 data errors, use '-v' for a list

root@truenas[/home/admin]#

root@truenas[/home/admin]# lsblk

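| NAME | MAJ:MIN | RM | SIZE | RO | TYPE | MOUNTPOINTS |
|---|---|---|---|---|---|---|
| sda | 8:0 | 0 | 1.8T | 0 | disk | |
| └─sda1 | 8:1 | 0 | 1.8T | 0 | part | |
| sdb | 8:16 | 0 | 3.6T | 0 | disk | |
| ├─sdb1 | 8:17 | 0 | 2G | 0 | part | |
| └─sdb2 | 8:18 | 0 | 3.6T | 0 | part | |
| sdc | 8:32 | 0 | 3.6T | 0 | disk | |
| └─sdc1 | 8:33 | 0 | 3.6T | 0 | part | |
| sdd | 8:48 | 0 | 3.6T | 0 | disk | |
| └─sdd1 | 8:49 | 0 | 3.6T | 0 | part | |
| sde | 8:64 | 0 | 931.5G | 0 | disk | |
| ├─sde1 | 8:65 | 0 | 2G | 0 | part | |
| └─sde2 | 8:66 | 0 | 929.5G | 0 | part | |
| sdf | 8:80 | 0 | 3.6T | 0 | disk | |
| └─sdf1 | 8:81 | 0 | 3.6T | 0 | part | |
| sdg | 8:96 | 0 | 111.8G | 0 | disk | |
| ├─sdg1 | 8:97 | 0 | 1M | 0 | part | |
| ├─sdg2 | 8:98 | 0 | 512M | 0 | part | |
| ├─sdg3 | 8:99 | 0 | 95.3G | 0 | part | |
| └─sdg4 | 8:100 | 0 | 16G | 0 | part | |
| └─md127 | 9:127 | 0 | 16G | 0 | raid1 | |
| └─md127 | 253:0 | 0 | 16G | 0 | crypt | |
| sdh | 8:112 | 0 | 3.6T | 0 | disk | |
| └─sdh1 | 8:113 | 0 | 3.6T | 0 | part | |
| sdi | 8:128 | 0 | 111.8G | 0 | disk | |
| ├─sdi1 | 8:129 | 0 | 1M | 0 | part | |
| ├─sdi2 | 8:130 | 0 | 512M | 0 | part | |
| ├─sdi3 | 8:131 | 0 | 95.3G | 0 | part | |
| └─sdi4 | 8:132 | 0 | 16G | 0 | part | |
| └─md127 | 9:127 | 0 | 16G | 0 | raid1 | |
| └─md127 | 253:0 | 0 | 16G | 0 | crypt | |
| sdj | 8:144 | 0 | 931.5G | 0 | disk | |
| ├─sdj1 | 8:145 | 0 | 2G | 0 | part | |
| └─sdj2 | 8:146 | 0 | 929.5G | 0 | part | |
| sdk | 8:160 | 0 | 1.8T | 0 | disk | |
| └─sdk1 | 8:161 | 0 | 931.5G | 0 | part | |
| sdl | 8:176 | 0 | 1.8T | 0 | disk | |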

root@truenas[/home/admin]#

I now have some more questions.

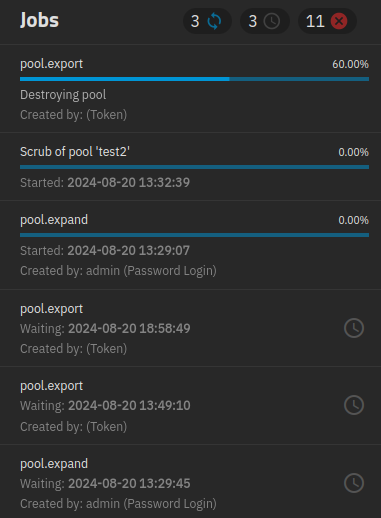

- How can I cancel jobs? That pool expand job is still running at 0%

- Just yesterday I ran a long SMART test on all 3 of these drives, and they all came back with “success”. How can I access the results of the SMART tests, and does success mean “no errors”?

- If a drive has errors like this, does it always mean the drive needs to be replaced, or can I assume the errors have to do with how it is formatted?
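One way to read the self-test results is `smartctl -l selftest /dev/sdX`, which prints the same log that appears at the end of `smartctl -a`. The Python sketch below parses those rows and estimates how long ago each test ran. `selftest_results` and `hours_since` are hypothetical helpers, and the modulo-65536 step is an assumption: the log's LifeTime(hours) field appears to be a 16-bit counter, which would explain why sda's log shows 2552 hours against 68103 power-on hours. “Completed without error” only says the test pass finished without hitting an unreadable sector; it says nothing about counters such as UDMA_CRC_Error_Count or the ATA error log.

```python
def selftest_results(log_text):
    """Parse the '# N ...' rows of a smartctl self-test log."""
    results = []
    for line in log_text.splitlines():
        stripped = line.strip()
        if not stripped.startswith("#"):
            continue  # skip the header and anything else
        fields = stripped.lstrip("#").split()
        results.append({
            "num": int(fields[0]),
            "passed": "Completed without error" in stripped,
            "lifetime_hours": int(fields[-2]),
            "first_error_lba": None if fields[-1] == "-" else fields[-1],
        })
    return results


def hours_since(test_lifetime, power_on_hours):
    # Assumption: the self-test log stores lifetime in 16 bits, so it wraps at 65536.
    return (power_on_hours - test_lifetime) % 65536


SDA_LOG = """\
Num Test_Description Status Remaining LifeTime(hours) LBA_of_first_error
# 1 Extended offline Completed without error 00% 2552 -
# 2 Short captive Completed without error 00% 20918 -
# 3 Short offline Completed without error 00% 20004 -
"""

for test in selftest_results(SDA_LOG):
    ago = hours_since(test["lifetime_hours"], 68103)  # sda Power_On_Hours
    print(test["num"], "passed" if test["passed"] else "FAILED", f"~{ago} h ago")
```

For sda the extended test comes out at about 15 hours before the report was taken, which is consistent with the long tests mentioned above.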

smartctl -a /dev/sda

smartctl -a /dev/sdk

smartctl -a /dev/sdl

Not necessarily.

Start by providing the required outputs; we have to evaluate the situation.

Also, do note that 1.8 TiB ≈ 2 TB.
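The gap between those two figures is only units: drive vendors count in decimal terabytes (10^12 bytes), while lsblk and zpool print binary tebibytes (2^40 bytes) with a plain "T" suffix. A small Python check using the exact “User Capacity” reported by smartctl:

```python
user_capacity = 2_000_398_934_016   # bytes, "User Capacity" from smartctl

tb = user_capacity / 10**12   # decimal terabytes, as printed on the drive label
tib = user_capacity / 2**40   # binary tebibytes, shown as "T" by lsblk/zpool

print(f"{tb:.2f} TB = {tib:.2f} TiB")
```

So a 2TB drive showing up as 1.8T in lsblk (or 1.82T in zpool list) is expected and is not the missing half.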

There is nothing wrong with experimenting with an empty NAS server, but boy what a mess you currently have.

A few things you need to do:

- Get the exact models of your hard drives and check that each specific model is NOT an SMR (shingled magnetic recording) disk. SMR disks are not usable for redundant vDevs with TrueNAS/ZFS.

- Your pny-ssd-pool is striped without any redundancy. This is NOT a good idea: if one drive dies, you will lose the data on the other drive as well.

- When you are ready to create your production pools, ask for advice about the best setup for the hardware you have. In general terms, vDevs have to use disks of similar sizes (otherwise the space on the bigger drives is wasted), but you can have multiple vDevs in a pool. For VMs and Apps you do not want to use RAIDZ, and SSDs are best if your data sizes are not too big. For HDDs it is generally better to have a single pool, but there are circumstances where you may want to split pools.
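To make the sizing rule concrete, here is a small Python sketch assuming mirror vdevs and made-up disk sizes in TB (none of these numbers come from this system). ZFS spreads data across all vdevs in a pool, so pool capacity is roughly the sum of the vdevs, while each vdev is limited by its smallest member. `mirror_usable` and `wasted` are hypothetical helpers.

```python
def mirror_usable(sizes):
    # Every member of a mirror holds a full copy, so the smallest disk sets the size.
    return min(sizes)


def wasted(sizes):
    # Capacity on the larger members that the vdev can never use.
    smallest = min(sizes)
    return sum(size - smallest for size in sizes)


# Hypothetical pool of two mirror vdevs (sizes in TB, illustrative only).
vdevs = [[4, 4], [2, 4]]

pool_usable = sum(mirror_usable(v) for v in vdevs)
pool_wasted = sum(wasted(v) for v in vdevs)

print(f"usable: {pool_usable} TB, wasted: {pool_wasted} TB")
```

The second vdev pairs a 2 TB disk with a 4 TB disk, so 2 TB of the larger disk sits unused even though the pool as a whole is fine.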

root@truenas[/home/admin]# smartctl -a /dev/sda

smartctl 7.4 2023-08-01 r5530 [x86_64-linux-6.6.32-production+truenas] (local build)

Copyright (C) 2002-23, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===

Model Family: Hitachi Ultrastar A7K2000

Device Model: Hitachi HUA722020ALA331

Serial Number: BFHG150F

LU WWN Device Id: 5 000cca 229d4790f

Firmware Version: JKAOA3NH

User Capacity: 2,000,398,934,016 bytes [2.00 TB]

Sector Size: 512 bytes logical/physical

Rotation Rate: 7200 rpm

Form Factor: 3.5 inches

Device is: In smartctl database 7.3/5528

ATA Version is: ATA8-ACS T13/1699-D revision 4

SATA Version is: SATA 2.6, 3.0 Gb/s

Local Time is: Tue Aug 20 16:37:42 2024 CDT

SMART support is: Available - device has SMART capability.

SMART support is: Enabled

=== START OF READ SMART DATA SECTION ===

SMART overall-health self-assessment test result: PASSED

General SMART Values:

Offline data collection status: (0x82) Offline data collection activity

was completed without error.

Auto Offline Data Collection: Enabled.

Self-test execution status: ( 0) The previous self-test routine completed

without error or no self-test has ever

been run.

Total time to complete Offline

data collection: (22036) seconds.

Offline data collection

capabilities: (0x5b) SMART execute Offline immediate.

Auto Offline data collection on/off support.

Suspend Offline collection upon new

command.

Offline surface scan supported.

Self-test supported.

No Conveyance Self-test supported.

Selective Self-test supported.

SMART capabilities: (0x0003) Saves SMART data before entering

power-saving mode.

Supports SMART auto save timer.

Error logging capability: (0x01) Error logging supported.

General Purpose Logging supported.

Short self-test routine

recommended polling time: ( 1) minutes.

Extended self-test routine

recommended polling time: ( 367) minutes.

SCT capabilities: (0x003d) SCT Status supported.

SCT Error Recovery Control supported.

SCT Feature Control supported.

SCT Data Table supported.

SMART Attributes Data Structure revision number: 16

Vendor Specific SMART Attributes with Thresholds:

| ID# | ATTRIBUTE_NAME | FLAG | VALUE | WORST | THRESH | TYPE | UPDATED | WHEN_FAILED | RAW_VALUE |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Raw_Read_Error_Rate | 0x000b | 100 | 100 | 016 | Pre-fail | Always | - | 0 |
| 2 | Throughput_Performance | 0x0005 | 133 | 133 | 054 | Pre-fail | Offline | - | 102 |
| 3 | Spin_Up_Time | 0x0007 | 111 | 111 | 024 | Pre-fail | Always | - | 647 (Average 650) |
| 4 | Start_Stop_Count | 0x0012 | 099 | 099 | 000 | Old_age | Always | - | 5622 |
| 5 | Reallocated_Sector_Ct | 0x0033 | 100 | 100 | 005 | Pre-fail | Always | - | 0 |
| 7 | Seek_Error_Rate | 0x000b | 100 | 100 | 067 | Pre-fail | Always | - | 0 |
| 8 | Seek_Time_Performance | 0x0005 | 121 | 121 | 020 | Pre-fail | Offline | - | 35 |
| 9 | Power_On_Hours | 0x0012 | 091 | 091 | 000 | Old_age | Always | - | 68103 |
| 10 | Spin_Retry_Count | 0x0013 | 100 | 100 | 060 | Pre-fail | Always | - | 0 |
| 12 | Power_Cycle_Count | 0x0032 | 100 | 100 | 000 | Old_age | Always | - | 94 |
| 192 | Power-Off_Retract_Count | 0x0032 | 096 | 096 | 000 | Old_age | Always | - | 5872 |
| 193 | Load_Cycle_Count | 0x0012 | 096 | 096 | 000 | Old_age | Always | - | 5872 |
| 194 | Temperature_Celsius | 0x0002 | 240 | 240 | 000 | Old_age | Always | - | 25 (Min/Max 19/56) |
| 196 | Reallocated_Event_Count | 0x0032 | 100 | 100 | 000 | Old_age | Always | - | 0 |
| 197 | Current_Pending_Sector | 0x0022 | 100 | 100 | 000 | Old_age | Always | - | 0 |
| 198 | Offline_Uncorrectable | 0x0008 | 100 | 100 | 000 | Old_age | Offline | - | 0 |
| 199 | UDMA_CRC_Error_Count | 0x000a | 200 | 200 | 000 | Old_age | Always | - | 0 |

SMART Error Log Version: 1

ATA Error Count: 3736 (device log contains only the most recent five errors)

CR = Command Register [HEX]

FR = Features Register [HEX]

SC = Sector Count Register [HEX]

SN = Sector Number Register [HEX]

CL = Cylinder Low Register [HEX]

CH = Cylinder High Register [HEX]

DH = Device/Head Register [HEX]

DC = Device Command Register [HEX]

ER = Error register [HEX]

ST = Status register [HEX]

Powered_Up_Time is measured from power on, and printed as

DDd+hh:mm:SS.sss where DD=days, hh=hours, mm=minutes,

SS=sec, and sss=millisec. It "wraps" after 49.710 days.

Error 3736 occurred at disk power-on lifetime: 34730 hours (1447 days + 2 hours)

When the command that caused the error occurred, the device was active or idle.

After command completion occurred, registers were:

ER ST SC SN CL CH DH

-- -- -- -- -- -- --

40 51 05 4b 18 f7 02 Error: UNC at LBA = 0x02f7184b = 49748043

Commands leading to the command that caused the error were:

CR FR SC SN CL CH DH DC Powered_Up_Time Command/Feature_Name

-- -- -- -- -- -- -- -- ---------------- --------------------

60 08 08 48 18 f7 40 00 33d+19:28:09.469 READ FPDMA QUEUED

ef 02 00 00 00 00 00 00 33d+19:28:09.467 SET FEATURES [Enable write cache]

60 08 08 48 18 f7 a0 ff 33d+19:28:09.249 READ FPDMA QUEUED

60 08 08 48 18 f7 a0 ff 33d+19:28:09.243 READ FPDMA QUEUED

60 08 08 48 18 f7 40 00 33d+19:27:52.616 READ FPDMA QUEUED

Error 3735 occurred at disk power-on lifetime: 34730 hours (1447 days + 2 hours)

When the command that caused the error occurred, the device was active or idle.

After command completion occurred, registers were:

ER ST SC SN CL CH DH

-- -- -- -- -- -- --

40 51 05 4b 18 f7 02 Error: UNC at LBA = 0x02f7184b = 49748043

Commands leading to the command that caused the error were:

CR FR SC SN CL CH DH DC Powered_Up_Time Command/Feature_Name

-- -- -- -- -- -- -- -- ---------------- --------------------

60 08 08 48 18 f7 40 00 33d+19:27:52.616 READ FPDMA QUEUED

ef 02 00 00 00 00 00 00 33d+19:27:52.614 SET FEATURES [Enable write cache]

60 08 08 48 18 f7 a0 ff 33d+19:27:52.414 READ FPDMA QUEUED

60 08 08 48 18 f7 a0 ff 33d+19:27:52.408 READ FPDMA QUEUED

60 08 08 48 18 f7 40 00 33d+19:27:35.762 READ FPDMA QUEUED

Error 3734 occurred at disk power-on lifetime: 34730 hours (1447 days + 2 hours)

When the command that caused the error occurred, the device was active or idle.

After command completion occurred, registers were:

ER ST SC SN CL CH DH

-- -- -- -- -- -- --

40 51 05 4b 18 f7 02 Error: UNC at LBA = 0x02f7184b = 49748043

Commands leading to the command that caused the error were:

CR FR SC SN CL CH DH DC Powered_Up_Time Command/Feature_Name

-- -- -- -- -- -- -- -- ---------------- --------------------

60 08 08 48 18 f7 40 00 33d+19:27:35.762 READ FPDMA QUEUED

ef 02 00 00 00 00 00 00 33d+19:27:35.760 SET FEATURES [Enable write cache]

60 08 08 48 18 f7 a0 ff 33d+19:27:35.680 READ FPDMA QUEUED

60 08 08 48 18 f7 a0 ff 33d+19:27:35.675 READ FPDMA QUEUED

60 08 08 48 18 f7 40 00 33d+19:27:19.003 READ FPDMA QUEUED

Error 3733 occurred at disk power-on lifetime: 34730 hours (1447 days + 2 hours)

When the command that caused the error occurred, the device was active or idle.

After command completion occurred, registers were:

ER ST SC SN CL CH DH

-- -- -- -- -- -- --

40 51 05 4b 18 f7 02 Error: UNC at LBA = 0x02f7184b = 49748043

Commands leading to the command that caused the error were:

CR FR SC SN CL CH DH DC Powered_Up_Time Command/Feature_Name

-- -- -- -- -- -- -- -- ---------------- --------------------

60 08 08 48 18 f7 40 00 33d+19:27:19.003 READ FPDMA QUEUED

ef 02 00 00 00 00 00 00 33d+19:27:19.002 SET FEATURES [Enable write cache]

60 08 08 48 18 f7 a0 ff 33d+19:27:18.795 READ FPDMA QUEUED

60 08 08 48 18 f7 a0 ff 33d+19:27:18.789 READ FPDMA QUEUED

60 08 08 48 18 f7 40 00 33d+19:27:02.150 READ FPDMA QUEUED

Error 3732 occurred at disk power-on lifetime: 34730 hours (1447 days + 2 hours)

When the command that caused the error occurred, the device was active or idle.

After command completion occurred, registers were:

ER ST SC SN CL CH DH

-- -- -- -- -- -- --

40 51 05 4b 18 f7 02 Error: UNC at LBA = 0x02f7184b = 49748043

Commands leading to the command that caused the error were:

CR FR SC SN CL CH DH DC Powered_Up_Time Command/Feature_Name

-- -- -- -- -- -- -- -- ---------------- --------------------

60 08 08 48 18 f7 40 00 33d+19:27:02.150 READ FPDMA QUEUED

ef 02 00 00 00 00 00 00 33d+19:27:02.148 SET FEATURES [Enable write cache]

60 08 08 48 18 f7 a0 ff 33d+19:27:02.061 READ FPDMA QUEUED

60 08 08 48 18 f7 a0 ff 33d+19:27:02.056 READ FPDMA QUEUED

60 08 08 48 18 f7 40 00 33d+19:26:45.391 READ FPDMA QUEUED

SMART Self-test log structure revision number 1

| Num | Test_Description | Status | Remaining | LifeTime(hours) | LBA_of_first_error |
|---|---|---|---|---|---|
| # 1 | Extended offline | Completed without error | 00% | 2552 | - |
| # 2 | Short captive | Completed without error | 00% | 20918 | - |
| # 3 | Short offline | Completed without error | 00% | 20004 | - |

SMART Selective self-test log data structure revision number 1

| SPAN | MIN_LBA | MAX_LBA | CURRENT_TEST_STATUS |
|---|---|---|---|
| 1 | 0 | 0 | Not_testing |
| 2 | 0 | 0 | Not_testing |
| 3 | 0 | 0 | Not_testing |
| 4 | 0 | 0 | Not_testing |
| 5 | 0 | 0 | Not_testing |

Selective self-test flags (0x0):

After scanning selected spans, do NOT read-scan remainder of disk.

If Selective self-test is pending on power-up, resume after 0 minute delay.

The above only provides legacy SMART information - try 'smartctl -x' for more

root@truenas[/home/admin]# smartctl -a /dev/sdk

smartctl 7.4 2023-08-01 r5530 [x86_64-linux-6.6.32-production+truenas] (local build)

Copyright (C) 2002-23, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===

Model Family: Seagate Barracuda LP

Device Model: ST32000542AS

Serial Number: 5XW1VLEV

LU WWN Device Id: 5 000c50 02e93115b

Firmware Version: CC34

User Capacity: 2,000,398,934,016 bytes [2.00 TB]

Sector Size: 512 bytes logical/physical

Rotation Rate: 5900 rpm

Device is: In smartctl database 7.3/5528

ATA Version is: ATA8-ACS T13/1699-D revision 4

SATA Version is: SATA 2.6, 3.0 Gb/s

Local Time is: Tue Aug 20 16:38:07 2024 CDT

==> WARNING: A firmware update for this drive may be available,

see the following Seagate web pages:

http://knowledge.seagate.com/articles/en_US/FAQ/207931en

http://knowledge.seagate.com/articles/en_US/FAQ/213915en

SMART support is: Available - device has SMART capability.

SMART support is: Enabled

=== START OF READ SMART DATA SECTION ===

SMART overall-health self-assessment test result: PASSED

General SMART Values:

Offline data collection status: (0x82) Offline data collection activity

was completed without error.

Auto Offline Data Collection: Enabled.

Self-test execution status: ( 0) The previous self-test routine completed

without error or no self-test has ever

been run.

Total time to complete Offline

data collection: ( 633) seconds.

Offline data collection

capabilities: (0x7b) SMART execute Offline immediate.

Auto Offline data collection on/off support.

Suspend Offline collection upon new

command.

Offline surface scan supported.

Self-test supported.

Conveyance Self-test supported.

Selective Self-test supported.

SMART capabilities: (0x0003) Saves SMART data before entering

power-saving mode.

Supports SMART auto save timer.

Error logging capability: (0x01) Error logging supported.

General Purpose Logging supported.

Short self-test routine

recommended polling time: ( 1) minutes.

Extended self-test routine

recommended polling time: ( 453) minutes.

Conveyance self-test routine

recommended polling time: ( 2) minutes.

SCT capabilities: (0x103f) SCT Status supported.

SCT Error Recovery Control supported.

SCT Feature Control supported.

SCT Data Table supported.

SMART Attributes Data Structure revision number: 10

Vendor Specific SMART Attributes with Thresholds:

| ID# | ATTRIBUTE_NAME | FLAG | VALUE | WORST | THRESH | TYPE | UPDATED | WHEN_FAILED | RAW_VALUE |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Raw_Read_Error_Rate | 0x000f | 120 | 099 | 006 | Pre-fail | Always | - | 235700753 |
| 3 | Spin_Up_Time | 0x0003 | 100 | 100 | 000 | Pre-fail | Always | - | 0 |
| 4 | Start_Stop_Count | 0x0032 | 096 | 096 | 020 | Old_age | Always | - | 4358 |
| 5 | Reallocated_Sector_Ct | 0x0033 | 100 | 100 | 036 | Pre-fail | Always | - | 0 |
| 7 | Seek_Error_Rate | 0x000f | 041 | 041 | 030 | Pre-fail | Always | - | 5647897819954 |
| 9 | Power_On_Hours | 0x0032 | 068 | 068 | 000 | Old_age | Always | - | 28196 |
| 10 | Spin_Retry_Count | 0x0013 | 100 | 100 | 097 | Pre-fail | Always | - | 0 |
| 12 | Power_Cycle_Count | 0x0032 | 100 | 100 | 020 | Old_age | Always | - | 132 |
| 183 | Runtime_Bad_Block | 0x0032 | 001 | 001 | 000 | Old_age | Always | - | 17520 |
| 184 | End-to-End_Error | 0x0032 | 100 | 100 | 099 | Old_age | Always | - | 0 |
| 187 | Reported_Uncorrect | 0x0032 | 100 | 100 | 000 | Old_age | Always | - | 0 |
| 188 | Command_Timeout | 0x0032 | 100 | 100 | 000 | Old_age | Always | - | 27 |
| 189 | High_Fly_Writes | 0x003a | 099 | 099 | 000 | Old_age | Always | - | 1 |
| 190 | Airflow_Temperature_Cel | 0x0022 | 076 | 058 | 045 | Old_age | Always | - | 24 (Min/Max 19/27) |
| 194 | Temperature_Celsius | 0x0022 | 024 | 042 | 000 | Old_age | Always | - | 24 (0 16 0 0 0) |
| 195 | Hardware_ECC_Recovered | 0x001a | 030 | 030 | 000 | Old_age | Always | - | 235700753 |
| 197 | Current_Pending_Sector | 0x0012 | 100 | 100 | 000 | Old_age | Always | - | 0 |
| 198 | Offline_Uncorrectable | 0x0010 | 100 | 100 | 000 | Old_age | Offline | - | 0 |
| 199 | UDMA_CRC_Error_Count | 0x003e | 200 | 163 | 000 | Old_age | Always | - | 17490 |
| 240 | Head_Flying_Hours | 0x0000 | 100 | 253 | 000 | Old_age | Offline | - | 6805 (74 0 0) |
| 241 | Total_LBAs_Written | 0x0000 | 100 | 253 | 000 | Old_age | Offline | - | 554583647 |
| 242 | Total_LBAs_Read | 0x0000 | 100 | 253 | 000 | Old_age | Offline | - | 2466595393 |

SMART Error Log Version: 1

No Errors Logged

SMART Self-test log structure revision number 1

| Num | Test_Description | Status | Remaining | LifeTime(hours) | LBA_of_first_error |
|---|---|---|---|---|---|
| # 1 | Extended offline | Completed without error | 00% | 28180 | - |
| # 2 | Short offline | Completed without error | 00% | 27884 | - |
| # 3 | Short offline | Completed without error | 00% | 27883 | - |

SMART Selective self-test log data structure revision number 1

| SPAN | MIN_LBA | MAX_LBA | CURRENT_TEST_STATUS |
|---|---|---|---|
| 1 | 0 | 0 | Not_testing |
| 2 | 0 | 0 | Not_testing |
| 3 | 0 | 0 | Not_testing |
| 4 | 0 | 0 | Not_testing |
| 5 | 0 | 0 | Not_testing |

Selective self-test flags (0x0):

After scanning selected spans, do NOT read-scan remainder of disk.

If Selective self-test is pending on power-up, resume after 0 minute delay.

The above only provides legacy SMART information - try 'smartctl -x' for more

root@truenas[/home/admin]# smartctl -a /dev/sdl

smartctl 7.4 2023-08-01 r5530 [x86_64-linux-6.6.32-production+truenas] (local build)

Copyright (C) 2002-23, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===

Device Model: HITACHI HUS724020ALA640

Serial Number: P6HADWHP

LU WWN Device Id: 5 000cca 22dd2d397

Firmware Version: MF6ONS00

User Capacity: 2,000,398,934,016 bytes [2.00 TB]

Sector Size: 512 bytes logical/physical

Rotation Rate: 7200 rpm

Form Factor: 3.5 inches

Device is: Not in smartctl database 7.3/5528

ATA Version is: ATA8-ACS T13/1699-D revision 4

SATA Version is: SATA 3.0, 6.0 Gb/s (current: 6.0 Gb/s)

Local Time is: Tue Aug 20 16:38:12 2024 CDT

SMART support is: Available - device has SMART capability.

SMART support is: Enabled

=== START OF READ SMART DATA SECTION ===

SMART overall-health self-assessment test result: PASSED

General SMART Values:

Offline data collection status: (0x00) Offline data collection activity

was never started.

Auto Offline Data Collection: Disabled.

Self-test execution status: ( 0) The previous self-test routine completed

without error or no self-test has ever

been run.

Total time to complete Offline

data collection: (18963) seconds.

Offline data collection

capabilities: (0x5b) SMART execute Offline immediate.

Auto Offline data collection on/off support.

Suspend Offline collection upon new

command.

Offline surface scan supported.

Self-test supported.

No Conveyance Self-test supported.

Selective Self-test supported.

SMART capabilities: (0x0003) Saves SMART data before entering

power-saving mode.

Supports SMART auto save timer.

Error logging capability: (0x01) Error logging supported.

General Purpose Logging supported.

Short self-test routine

recommended polling time: ( 1) minutes.

Extended self-test routine

recommended polling time: ( 316) minutes.

SCT capabilities: (0x003d) SCT Status supported.

SCT Error Recovery Control supported.

SCT Feature Control supported.

SCT Data Table supported.

SMART Attributes Data Structure revision number: 16

Vendor Specific SMART Attributes with Thresholds:

| ID# | ATTRIBUTE_NAME | FLAG | VALUE | WORST | THRESH | TYPE | UPDATED | WHEN_FAILED | RAW_VALUE |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Raw_Read_Error_Rate | 0x000b | 100 | 100 | 016 | Pre-fail | Always | - | 0 |
| 2 | Throughput_Performance | 0x0005 | 100 | 100 | 054 | Pre-fail | Offline | - | 0 |
| 3 | Spin_Up_Time | 0x0007 | 186 | 186 | 024 | Pre-fail | Always | - | 322 (Average 350) |
| 4 | Start_Stop_Count | 0x0012 | 100 | 100 | 000 | Old_age | Always | - | 29 |
| 5 | Reallocated_Sector_Ct | 0x0033 | 100 | 100 | 005 | Pre-fail | Always | - | 0 |
| 7 | Seek_Error_Rate | 0x000b | 100 | 100 | 067 | Pre-fail | Always | - | 0 |
| 8 | Seek_Time_Performance | 0x0005 | 100 | 100 | 020 | Pre-fail | Offline | - | 0 |
| 9 | Power_On_Hours | 0x0012 | 095 | 095 | 000 | Old_age | Always | - | 37392 |
| 10 | Spin_Retry_Count | 0x0013 | 100 | 100 | 060 | Pre-fail | Always | - | 0 |
| 12 | Power_Cycle_Count | 0x0032 | 100 | 100 | 000 | Old_age | Always | - | 29 |
| 192 | Power-Off_Retract_Count | 0x0032 | 099 | 099 | 000 | Old_age | Always | - | 1584 |
| 193 | Load_Cycle_Count | 0x0012 | 099 | 099 | 000 | Old_age | Always | - | 1584 |
| 194 | Temperature_Celsius | 0x0002 | 214 | 214 | 000 | Old_age | Always | - | 28 (Min/Max 20/60) |
| 196 | Reallocated_Event_Count | 0x0032 | 100 | 100 | 000 | Old_age | Always | - | 0 |
| 197 | Current_Pending_Sector | 0x0022 | 100 | 100 | 000 | Old_age | Always | - | 0 |
| 198 | Offline_Uncorrectable | 0x0008 | 100 | 100 | 000 | Old_age | Offline | - | 0 |
| 199 | UDMA_CRC_Error_Count | 0x000a | 200 | 200 | 000 | Old_age | Always | - | 0 |

SMART Error Log Version: 1

No Errors Logged

SMART Self-test log structure revision number 1

| Num | Test_Description | Status | Remaining | LifeTime(hours) | LBA_of_first_error |
|---|---|---|---|---|---|
| # 1 | Extended offline | Completed without error | 00% | 37374 | - |
| # 2 | Short offline | Completed without error | 00% | 26980 | - |

SMART Selective self-test log data structure revision number 1

| SPAN | MIN_LBA | MAX_LBA | CURRENT_TEST_STATUS |
|---|---|---|---|
| 1 | 0 | 0 | Not_testing |
| 2 | 0 | 0 | Not_testing |
| 3 | 0 | 0 | Not_testing |
| 4 | 0 | 0 | Not_testing |
| 5 | 0 | 0 | Not_testing |

Selective self-test flags (0x0):

After scanning selected spans, do NOT read-scan remainder of disk.

If Selective self-test is pending on power-up, resume after 0 minute delay.

The above only provides legacy SMART information - try 'smartctl -x' for more

root@truenas[/home/admin]#

Thanks!

- The exact models seem to be included in the SMART report. I will try to find out whether they are SMR shortly.

- I understand the risk. Most of the data on the pny-ssd-pool is static, and I replicate that pool to the ait2408 pool every night. There is a chance I would lose data, but unless I also lose the other backup pool, it will be at most a day’s worth.

- Yes, I am interested in some input on a good setup. I was not aware that RAIDZ is not good for apps and VMs, but it makes sense.

Some explanation of the rest of the mess: I have three pools in here.

- SSD storage

- HDD backup

- pcs_backup: a single drive. This is an offsite backup for someone else who has various other backups. If it fails, no data is lost unless other terrible things happen at the same time.

The rest of the pools are simply to test the drives in question.

Update:

I have not been able to find out whether the Hitachi Ultrastar A7K2000 is SMR or not.

The Seagate Barracuda LP seems to be SMR according to this, if the Barracuda is the same as the Barracuda LP.

I have not been able to find information on the HITACHI HUS724020ALA640 either.

These are drives I had lying around, and I thought I would use them for my offsite backup. I am not losing much if these drives aren’t any good.

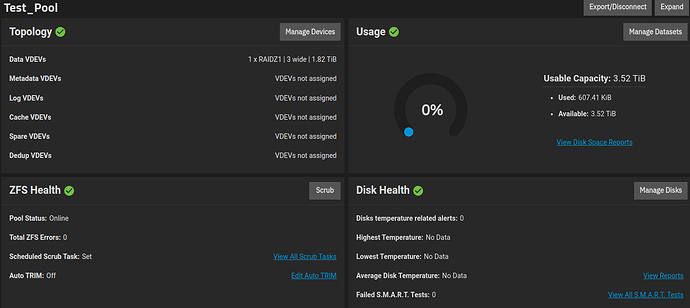

You’ve listed “sdk” as one of the drives. It has a partition covering only half of the disk (931.5G on a 1.8T disk), so if that partition was used in the pool, it would explain the reduced storage.
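The same check can be done mechanically by comparing each disk with the partitions on it. A minimal Python sketch, using a few rows of the lsblk output posted above; `undersized_disks` is a hypothetical helper. lsblk's human-readable sizes are rounded binary units, so the 90% threshold is deliberately loose, and for anything beyond a quick check `lsblk -b` (sizes in bytes) would be more reliable than parsing this form.

```python
UNITS = {"K": 2**10, "M": 2**20, "G": 2**30, "T": 2**40}


def to_bytes(size):
    # lsblk prints binary multiples such as "931.5G" or "1.8T".
    return float(size[:-1]) * UNITS[size[-1]]


def undersized_disks(lsblk_text, threshold=0.9):
    """Return disks whose partitions cover less than `threshold` of the disk.

    Disks with no partitions at all (blank disks) are not reported.
    """
    totals = {}      # disk name -> [disk bytes, bytes covered by partitions]
    current = None
    for line in lsblk_text.splitlines():
        fields = line.lstrip("│├└─ ").split()   # drop the tree-drawing prefix
        if len(fields) < 6:
            continue
        name, size, kind = fields[0], fields[3], fields[5]
        if kind == "disk":
            current = name
            totals[name] = [to_bytes(size), 0.0]
        elif kind == "part" and current:
            totals[current][1] += to_bytes(size)
    return [d for d, (disk, parts) in totals.items() if 0 < parts < threshold * disk]


SAMPLE = """\
NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS
sda 8:0 0 1.8T 0 disk
└─sda1 8:1 0 1.8T 0 part
sdb 8:16 0 3.6T 0 disk
├─sdb1 8:17 0 2G 0 part
└─sdb2 8:18 0 3.6T 0 part
sdk 8:160 0 1.8T 0 disk
└─sdk1 8:161 0 931.5G 0 part
sdl 8:176 0 1.8T 0 disk
"""

print(undersized_disks(SAMPLE))
```

Only sdk is reported: sdb's small 2G partition is fine because the disk as a whole is still covered, and the freshly wiped sdl has no partitions at all.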

To fix it, simply wipe “sdk”, either by removing the “sdk1” partition or by writing zeros to the disk. Then retry creating the 3 x 2TB RAID-Z1 pool.

Assuming the OP used the GUI, how did this happen?

The first thing TrueNAS usually does when you set up a pool is format and partition the disks, right?

A bug?

That is correct. Everything was done as shown in the screenshots above. This was a used drive, so I am not surprised by the partition, but I really expected TrueNAS to keep its word and destroy “all” data on the disk…

Aliens, it has to be the evil Goa’uld! At least that’s my story and I am sticking with it.

Back in reality, that is a very good question. The GUI should either have said something like “disk already has data” because of the preexisting partition table entry and refused to use the disk, or simply prompted the user to overwrite it.
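A pre-flight check along those lines could look like the Python sketch below. It assumes the JSON shape printed by `lsblk -J -o NAME,TYPE` (a `blockdevices` list whose entries may carry `children`); `disks_with_partitions` is a hypothetical helper for illustration, not how the TrueNAS middleware actually decides.

```python
import json


def disks_with_partitions(lsblk_json):
    """Return the disks that already carry at least one partition,
    i.e. the ones a pool wizard could warn about before wiping them."""
    data = json.loads(lsblk_json)
    return [
        dev["name"]
        for dev in data.get("blockdevices", [])
        if dev.get("type") == "disk"
        and any(child.get("type") == "part" for child in dev.get("children", []))
    ]


# Hand-written sample in the assumed `lsblk -J -o NAME,TYPE` shape.
LSBLK_JSON = json.dumps({"blockdevices": [
    {"name": "sda", "type": "disk", "children": [{"name": "sda1", "type": "part"}]},
    {"name": "sdk", "type": "disk", "children": [{"name": "sdk1", "type": "part"}]},
    {"name": "sdl", "type": "disk"},
]})

for name in disks_with_partitions(LSBLK_JSON):
    print(f"{name}: existing partition table found, confirm before overwriting")
```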

How can I stop these jobs? They have been stuck here ever since I tried to export the faulted test2 pool. I can’t export the test1 pool either. Do I need to reboot?

I have physically removed the drives in hopes that would help but it does not seem to make a difference.

Guys! it works!!

I went ahead and rebooted to clear out those jobs, since I could not find any way to stop them and they were preventing me from removing the one disk from test1.

Removing the partitions did fix the problem.

Thanks for your help!!

If I have time (haha) I might try to reproduce the issue, but currently I am thinking I probably had one of those 2TB disks in a pool with a 1TB disk while I was doing some other testing. I suspect that because there was already a ZFS-made partition on it, it was not wiped.

As far as SMART tests go, I don’t really understand how to read them. Can I just trust TrueNAS to tell me when there is a problem with a drive, or should I check the SMART test results occasionally?

Thanks again for your help!

I think there is a bug somewhere there… there are also active reports at the moment of auto-expand not functioning correctly.

If the partitions are being sized wrong that would certainly explain the above.

You should check. Using this helps greatly.

You mainly want to look out for:

- UDMA_CRC_Error_Count
- Offline_Uncorrectable
- Reallocated_Sector_Ct

Also watch for short or long SMART tests that fail to complete.
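For a quick check between TrueNAS alerts, those attributes can be pulled out of `smartctl -a` output. A Python sketch, assuming the attribute-table layout shown in this thread (ID#, name, flag, three normalized values, type, updated, when_failed, raw value); `smart_warnings` is a hypothetical helper that treats any non-zero raw value as worth a closer look, which is a judgment call rather than a failure verdict.

```python
WATCH = {"Reallocated_Sector_Ct", "Offline_Uncorrectable", "UDMA_CRC_Error_Count"}


def smart_warnings(smartctl_text, watch=WATCH):
    """Return {attribute_name: raw_value} for watched attributes with a non-zero raw value."""
    warnings = {}
    for line in smartctl_text.splitlines():
        fields = line.split()
        # Attribute rows start with a numeric ID and have at least 10 columns;
        # the raw value is the 10th column (any extra text after it is ignored).
        if len(fields) < 10 or not fields[0].isdigit():
            continue
        name, raw = fields[1], fields[9]
        if name in watch and raw.isdigit() and int(raw) > 0:
            warnings[name] = int(raw)
    return warnings


# A few attribute rows from the sdk report above.
SDK_ROWS = """\
5 Reallocated_Sector_Ct 0x0033 100 100 036 Pre-fail Always - 0
183 Runtime_Bad_Block 0x0032 001 001 000 Old_age Always - 17520
198 Offline_Uncorrectable 0x0010 100 100 000 Old_age Offline - 0
199 UDMA_CRC_Error_Count 0x003e 200 163 000 Old_age Always - 17490
"""

print(smart_warnings(SDK_ROWS))
```

Only attributes in `WATCH` are considered, so Runtime_Bad_Block is skipped here even though its raw value is non-zero; extend the set if you want to track more.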