Hello! After searching for guides on getting vGPU working on TrueNAS and finding very little, I started experimenting and eventually managed to make it work. Here’s how I did it:

WARNING: What I did is not officially supported, and I’m far from an expert. You risk breaking your system by following these steps. Proceed with caution. If you plan to try this yourself, make sure to back up your configuration first, and consider testing everything in a temporary VM before applying it to your main system.

After setting up vGPU on Proxmox and allocating a portion of my Tesla P4 to my TrueNAS SCALE VM, I noticed that the GPU wasn’t recognized. Running nvidia-smi returned: "NVIDIA-SMI has failed because it couldn't communicate with the NVIDIA driver. Make sure that the latest NVIDIA driver is installed and running." I also saw the following messages during boot:

[ 5365.534389] NVRM: The NVIDIA GPU 0000:02:00.0 (PCI ID: 10de:1bb3)
[ 5365.534389] NVRM: installed in this system is not supported by the
[ 5365.534389] NVRM: NVIDIA 550.127.05 driver release.
[ 5365.534389] NVRM: Please see 'Appendix A - Supported NVIDIA GPU Products'
[ 5365.534389] NVRM: in this release's README, available on the operating system
[ 5365.534389] NVRM: specific graphics driver download page at www.nvidia.com.
[ 5365.537097] nvidia: probe of 0000:02:00.0 failed with error -1
[ 5365.537355] NVRM: The NVIDIA probe routine failed for 1 device(s).
[ 5365.537535] NVRM: None of the NVIDIA devices were initialized.
[ 5366.332187]
[ 5366.335732] NVRM: The NVIDIA GPU 0000:02:00.0 (PCI ID: 10de:1bb3)
[ 5366.335732] NVRM: installed in this system is not supported by the
[ 5366.335732] NVRM: NVIDIA 550.127.05 driver release.
[ 5366.335732] NVRM: Please see 'Appendix A - Supported NVIDIA GPU Products'
[ 5366.335732] NVRM: in this release's README, available on the operating system
[ 5366.335732] NVRM: specific graphics driver download page at www.nvidia.com.
[ 5366.338884] nvidia: probe of 0000:02:00.0 failed with error -1
[ 5366.339198] NVRM: The NVIDIA probe routine failed for 1 device(s).
[ 5366.339435] NVRM: None of the NVIDIA devices were initialized.
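If you want to confirm that you are hitting the same problem, the key details are in the kernel log: the PCI address, the PCI ID of the device, and the driver release that rejected it. Below is a minimal Python sketch that pulls those fields out of kernel log text; the function and type names are my own hypothetical helpers, and it assumes the message wording matches the log above.

```python
import re
from typing import NamedTuple, Optional

# First line of the NVRM rejection, e.g.
# "NVRM: The NVIDIA GPU 0000:02:00.0 (PCI ID: 10de:1bb3)"
GPU_RE = re.compile(
    r"NVRM: The NVIDIA GPU (\S+) \(PCI ID: ([0-9a-fA-F]{4}:[0-9a-fA-F]{4})\)"
)
# Driver release named later in the same message, e.g.
# "NVRM: NVIDIA 550.127.05 driver release."
DRIVER_RE = re.compile(r"NVRM: NVIDIA (\d+(?:\.\d+)+) driver release")


class UnsupportedGpu(NamedTuple):  # hypothetical helper type
    pci_address: str
    pci_id: str
    driver_version: Optional[str]


def find_unsupported_gpu(kernel_log: str) -> Optional[UnsupportedGpu]:
    """Return the first GPU the NVIDIA driver reported as unsupported, or None."""
    if "is not supported by the" not in kernel_log:
        return None  # no rejection message at all
    gpu = GPU_RE.search(kernel_log)
    if gpu is None:
        return None
    # Look for the driver version only after the GPU line.
    driver = DRIVER_RE.search(kernel_log, gpu.end())
    return UnsupportedGpu(
        pci_address=gpu.group(1),
        pci_id=gpu.group(2).lower(),
        driver_version=driver.group(1) if driver else None,
    )


if __name__ == "__main__":
    sample = (
        "[ 5365.534389] NVRM: The NVIDIA GPU 0000:02:00.0 (PCI ID: 10de:1bb3)\n"
        "[ 5365.534389] NVRM: installed in this system is not supported by the\n"
        "[ 5365.534389] NVRM: NVIDIA 550.127.05 driver release.\n"
    )
    print(find_unsupported_gpu(sample))
```

On a real system you would feed it the output of dmesg (which usually needs root) instead of the sample string.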

So, I decided to take my chances and manually install the required drivers by following these steps:

- I first disabled the default NVIDIA drivers in the app settings (Configuration -> Settings -> uncheck “Install NVIDIA Drivers”) and rebooted the system.

- I enabled developer mode by running install-dev-tools (see: Developer Mode (Unsupported) | TrueNAS Documentation Hub).

- I downloaded and installed the NVIDIA 535 GRID drivers following these instructions: Proxmox vGPU - v3 - wvthoog.nl. Important: don’t download the driver files to your home directory, because they won’t be executable from there; I saved them in my main pool instead.
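About the home-directory note: I did not dig into the cause, but one plausible explanation (an assumption on my part) is that the dataset holding the home directory is mounted with the noexec option, which prevents any file on it from being executed regardless of its permissions. This hypothetical Python helper reads a path's mount flags with os.statvfs, so you can compare your home directory against a pool path before running the installer.

```python
import os
import sys

# os.ST_NOEXEC is only defined on Linux; 8 is its Linux value (MS_NOEXEC).
ST_NOEXEC = getattr(os, "ST_NOEXEC", 8)


def mounted_noexec(path: str) -> bool:
    """True if the filesystem holding `path` is mounted with the noexec flag."""
    return bool(os.statvfs(path).f_flag & ST_NOEXEC)


def can_run_installer(path: str) -> bool:
    """True if `path` is a regular file with execute permission on an exec-allowed mount."""
    return (
        os.path.isfile(path)
        and os.access(path, os.X_OK)
        and not mounted_noexec(path)
    )


if __name__ == "__main__":
    # Pass the directories you want to compare on the command line.
    for target in sys.argv[1:]:
        print(f"{target}: noexec={mounted_noexec(target)}")
```

For example: python3 check_noexec.py ~ /mnt/tank, where check_noexec.py and the pool name tank are placeholders for your own script name and pool.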

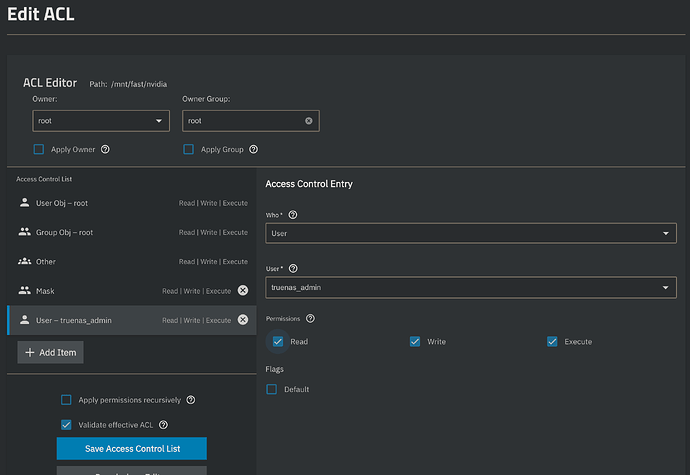

After that, I encountered an error about an unset UUID, which I fixed thanks to this forum post: Docker Apps and UUID issue with NVIDIA GPU after upgrade to 24.10.

Once that was resolved, I got another error when starting the container:

[EFAULT] Failed 'up' action for 'jellyfin' app. Please check /var/log/app_lifecycle.log for more details.

In the app_lifecycle.log file I found the following error:

Error response from daemon: failed to create task for container: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: error during container init: error running hook #1: error running hook: exit status 1, stdout: , stderr: Auto-detected mode as 'legacy' nvidia-container-cli: initialization error: nvml error: driver not loaded: unknown

I fixed that by installing the NVIDIA Container Toolkit, following this guide: Installing the NVIDIA Container Toolkit — NVIDIA Container Toolkit. After completing all these steps and restarting Docker, everything started working perfectly.
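When an app fails with the generic "Failed 'up' action" message, the useful part is the nvidia-container-cli text buried in /var/log/app_lifecycle.log. This Python sketch (the function names are hypothetical) extracts those messages and maps the two errors from this post to the fixes that worked for me. It is not an exhaustive list, and it assumes the log lines look like the ones I saw.

```python
import os
import re
from typing import List, Optional

# Log file named in the TrueNAS "[EFAULT] Failed 'up' action" message.
LOG_PATH = "/var/log/app_lifecycle.log"
# Captures everything after "nvidia-container-cli: " up to the end of the line.
CLI_ERROR_RE = re.compile(r"nvidia-container-cli: (.+?)\s*$")


def nvidia_cli_errors(log_text: str) -> List[str]:
    """Return every nvidia-container-cli error in the log, oldest first."""
    errors = []
    for line in log_text.splitlines():
        match = CLI_ERROR_RE.search(line)
        if match:
            errors.append(match.group(1))
    return errors


def suggest_fix(error: str) -> Optional[str]:
    """Map an error to the fix that worked in this post, or None if unknown."""
    if "driver not loaded" in error:
        return "Install the NVIDIA Container Toolkit, then restart Docker."
    if "unknown device" in error:
        return "Apply the same GPU UUID fix as for the unset UUID error."
    return None


if __name__ == "__main__":
    if not os.path.exists(LOG_PATH):
        print(f"{LOG_PATH} not found")
    else:
        with open(LOG_PATH, encoding="utf-8", errors="replace") as log:
            for error in nvidia_cli_errors(log.read()):
                print(f"{error}\n  -> {suggest_fix(error) or 'no known fix'}")
```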

The following day, after changing the vGPU profile and restarting, I started getting the same "Failed 'up'" error again, but this time the log showed:

Error response from daemon: failed to create task for container: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: error during container init: error running hook #1: error running hook: exit status 1, stdout: , stderr: Auto-detected mode as 'legacy' nvidia-container-cli: device error: GPU-59a463e2-2821-11f0-a2f6-d880bd3f90c1: unknown device: unknown

The solution was the same as for the unset UUID error.
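My guess (unconfirmed) is that the vGPU profile change gave the VM's GPU a new UUID, so the app was still pointing at the old one. Before reapplying the UUID fix, you can check whether the UUID in the error still exists: nvidia-smi -L lists each GPU together with its UUID. The Python sketch below (hypothetical helper names) compares the two and returns the UUID from the error if nvidia-smi no longer reports it.

```python
import re
import shutil
import subprocess
from typing import List, Optional

# NVIDIA GPU UUIDs look like "GPU-59a463e2-2821-11f0-a2f6-d880bd3f90c1".
UUID_RE = re.compile(r"GPU-[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}")


def current_gpu_uuids(nvidia_smi_list: str) -> List[str]:
    """Extract all UUIDs from `nvidia-smi -L` output, lowercased."""
    return [uuid.lower() for uuid in UUID_RE.findall(nvidia_smi_list)]


def stale_uuid(error_text: str, nvidia_smi_list: str) -> Optional[str]:
    """Return the UUID named in the error if nvidia-smi no longer lists it."""
    match = UUID_RE.search(error_text)
    if match is None:
        return None  # the error does not mention a GPU UUID
    wanted = match.group(0)
    return None if wanted.lower() in current_gpu_uuids(nvidia_smi_list) else wanted


if __name__ == "__main__":
    error = ("nvidia-container-cli: device error: "
             "GPU-59a463e2-2821-11f0-a2f6-d880bd3f90c1: unknown device: unknown")
    if shutil.which("nvidia-smi"):
        listing = subprocess.run(["nvidia-smi", "-L"], capture_output=True,
                                 text=True, check=False).stdout
        print("stale UUID:", stale_uuid(error, listing))
        print("current UUIDs:", current_gpu_uuids(listing))
```

If a stale UUID comes back, the app is still configured for a GPU the driver no longer sees, and the current UUID printed next to it is the one to use.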

Final disclaimer: Forgive me for any possible grammatical errors, and don’t blame me if your system implodes. Have fun!

If you know a better, and especially a more officially supported, way of achieving this, please let me know.