I inherited a TrueNAS system, and I am getting a notification that one of the drives is failing:

> Pool Tank1 state is DEGRADED: One or more devices are faulted in response to persistent errors. Sufficient replicas exist for the pool to continue functioning in a degraded state.
>
> The following devices are not healthy:
>
> - Disk IBM-XIV ST6000NM0054 D5 S4D154WX0000K705JFZ7 is FAULTED

The output of `sas2ircu` does not show that serial number (S4D154WX0000K705JFZ7) for any of the drives:

```
tired@truenas1[~] # sas2ircu 0 display
LSI Corporation SAS2 IR Configuration Utility.
Version 20.00.00.00 (2014.09.18)
Copyright (c) 2008-2014 LSI Corporation. All rights reserved.

Read configuration has been initiated for controller 0

Controller information
  Controller type : SAS2008
  BIOS version : 7.39.02.00
  Firmware version : 20.00.07.00
  Channel description : 1 Serial Attached SCSI
  Initiator ID : 0
  Maximum physical devices : 255
  Concurrent commands supported : 3432
  Slot : Unknown
  Segment : 0
  Bus : 2
  Device : 0
  Function : 0
  RAID Support : No

IR Volume information

Physical device information
Initiator at ID #0

Device is a Hard disk
  Enclosure # : 2
  Slot # : 0
  SAS Address : 5000c50-0-862c-ad9d
  State : Ready (RDY)
  Size (in MB)/(in sectors) : 5723166/11721045160
  Manufacturer : IBM-XIV
  Model Number : ST6000NM0054 D5
  Firmware Revision : EC68
  Serial No : Z4D4S80Y1027EC68
  GUID : N/A
  Protocol : SAS
  Drive Type : SAS_HDD

Device is a Hard disk
  Enclosure # : 2
  Slot # : 1
  SAS Address : 5000c50-0-98ba-f5c1
  State : Ready (RDY)
  Size (in MB)/(in sectors) : 5723166/11721045160
  Manufacturer : IBM-XIV
  Model Number : ST6000NM0054 D5
  Firmware Revision : EC6D
  Serial No : S4D1BBHB0820EC6D
  GUID : N/A
  Protocol : SAS
  Drive Type : SAS_HDD

Device is a Hard disk
  Enclosure # : 2
  Slot # : 2
  SAS Address : 5000c50-0-984b-3edd
  State : Ready (RDY)
  Size (in MB)/(in sectors) : 5723166/11721045160
  Manufacturer : IBM-XIV
  Model Number : ST6000NM0054 D5
  Firmware Revision : EC6D
  Serial No : S4D16E110820EC6D
  GUID : N/A
  Protocol : SAS
  Drive Type : SAS_HDD

Device is a Hard disk
  Enclosure # : 2
  Slot # : 3
  SAS Address : 5000c50-0-98bb-1579
  State : Ready (RDY)
  Size (in MB)/(in sectors) : 5723166/11721045160
  Manufacturer : IBM-XIV
  Model Number : ST6000NM0054 D5
  Firmware Revision : EC6D
  Serial No : S4D1BB920820EC6D
  GUID : N/A
  Protocol : SAS
  Drive Type : SAS_HDD

Device is a Hard disk
  Enclosure # : 2
  Slot # : 4
  SAS Address : 5000c50-0-97ea-089d
  State : Ready (RDY)
  Size (in MB)/(in sectors) : 5723166/11721045167
  Manufacturer : SEAGATE
  Model Number : ST6000NM0034
  Firmware Revision : MS2D
  Serial No : S4D13HD1
  GUID : N/A
  Protocol : SAS
  Drive Type : SAS_HDD

Device is a Hard disk
  Enclosure # : 2
  Slot # : 5
  SAS Address : 5000c50-0-984a-2241
  State : Ready (RDY)
  Size (in MB)/(in sectors) : 5723166/11721045160
  Manufacturer : IBM-XIV
  Model Number : ST6000NM0054 D5
  Firmware Revision : EC6D
  Serial No : S4D154WX0820EC6D
  GUID : N/A
  Protocol : SAS
  Drive Type : SAS_HDD

Device is a Hard disk
  Enclosure # : 2
  Slot # : 6
  SAS Address : 5000c50-0-846b-8355
  State : Ready (RDY)
  Size (in MB)/(in sectors) : 5723166/11721045160
  Manufacturer : IBM-XIV
  Model Number : ST6000NM0054 D5
  Firmware Revision : EC6D
  Serial No : Z4D3A3VM0820EC6D
  GUID : N/A
  Protocol : SAS
  Drive Type : SAS_HDD

Device is a Hard disk
  Enclosure # : 2
  Slot # : 7
  SAS Address : 5000c50-0-98f6-b199
  State : Ready (RDY)
  Size (in MB)/(in sectors) : 5723166/11721045160
  Manufacturer : IBM-XIV
  Model Number : ST6000NM0054 D5
  Firmware Revision : EC6D
  Serial No : S4D1DDMV0820EC6D
  GUID : N/A
  Protocol : SAS
  Drive Type : SAS_HDD

Device is a Hard disk
  Enclosure # : 2
  Slot # : 8
  SAS Address : 5000c50-0-98f6-bb81
  State : Ready (RDY)
  Size (in MB)/(in sectors) : 5723166/11721045160
  Manufacturer : IBM-XIV
  Model Number : ST6000NM0054 D5
  Firmware Revision : EC6D
  Serial No : S4D1DDGT0820EC6D
  GUID : N/A
  Protocol : SAS
  Drive Type : SAS_HDD

Device is a Hard disk
  Enclosure # : 2
  Slot # : 9
  SAS Address : 5000c50-0-98f6-c24d
  State : Ready (RDY)
  Size (in MB)/(in sectors) : 5723166/11721045160
  Manufacturer : IBM-XIV
  Model Number : ST6000NM0054 D5
  Firmware Revision : EC6D
  Serial No : S4D1DDEE0820EC6D
  GUID : N/A
  Protocol : SAS
  Drive Type : SAS_HDD

Device is a Hard disk
  Enclosure # : 2
  Slot # : 10
  SAS Address : 5000c50-0-984a-e1d1
  State : Ready (RDY)
  Size (in MB)/(in sectors) : 5723166/11721045160
  Manufacturer : IBM-XIV
  Model Number : ST6000NM0054 D5
  Firmware Revision : EC6D
  Serial No : S4D16A6X0820EC6D
  GUID : N/A
  Protocol : SAS
  Drive Type : SAS_HDD

Device is a Hard disk
  Enclosure # : 2
  Slot # : 11
  SAS Address : 5000c50-0-984a-a325
  State : Ready (RDY)
  Size (in MB)/(in sectors) : 5723166/11721045160
  Manufacturer : IBM-XIV
  Model Number : ST6000NM0054 D5
  Firmware Revision : EC6D
  Serial No : S4D16AP10820EC6D
  GUID : N/A
  Protocol : SAS
  Drive Type : SAS_HDD

Device is a unknown device
  Enclosure # : 2
  Slot # : 24
  SAS Address : 500056b-3-7789-abfd
  State : Standby (SBY)
  Manufacturer : DP
  Model Number : SAS2 EXP BP
  Firmware Revision : 1.07
  Serial No : x360107
  GUID : N/A
  Protocol : SAS
  Drive Type : SAS_HDD

Enclosure information
  Enclosure# : 1
  Logical ID : 5b8ca3a0:f14f9700
  Numslots : 8
  StartSlot : 0

  Enclosure# : 2
  Logical ID : 500056b3:6789abff
  Numslots : 38
  StartSlot : 0

SAS2IRCU: Command DISPLAY Completed Successfully.
SAS2IRCU: Utility Completed Successfully.
```
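The serial in the alert and the serials `sas2ircu` prints are not identical, but they may share a prefix: the alert's S4D154WX0000K705JFZ7 and slot 5's S4D154WX0820EC6D both begin with S4D154WX. Below is a minimal sketch that automates that comparison. It assumes that only the first eight characters are comparable between the two sources, which is inferred from the pattern in this output and not from any documentation. `find_slot_by_serial` is a hypothetical helper name.

```shell
#!/bin/sh
# find_slot_by_serial (hypothetical helper name)
# Reads `sas2ircu <controller> display` output on stdin and prints
# "enclosure:slot" for each drive whose serial starts with the same
# 8 characters as the serial passed in $1.
# ASSUMPTION: only the first 8 characters are comparable between the
# TrueNAS alert and sas2ircu; the remaining characters differ.
find_slot_by_serial() {
    awk -v want="$1" '
        BEGIN { want = substr(want, 1, 8) }
        # Return the text after the first colon, with spaces and tabs removed
        function value(line,    parts) {
            split(line, parts, ":")
            gsub(/[ \t]/, "", parts[2])
            return parts[2]
        }
        # Remember the enclosure and slot of the current device block
        /Enclosure #/ { enc = value($0) }
        /Slot #/      { slot = value($0) }
        # Print the location when the serial prefix matches
        /Serial No/   { if (substr(value($0), 1, 8) == want) print enc ":" slot }
    '
}
```

Usage: `sas2ircu 0 display | find_slot_by_serial S4D154WX0000K705JFZ7`. Against the output above, this should print `2:5` (enclosure 2, slot 5). Check the label on the physical drive before pulling it.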

I wanted to turn on the locate LED on the remaining drives and find the faulty one by process of elimination, but that doesn't seem to work:

```
tired@truenas1[~]# sas2ircu 0 LOCATE 2:10 ON
LSI Corporation SAS2 IR Configuration Utility.
Version 20.00.00.00 (2014.09.18)
Copyright (c) 2008-2014 LSI Corporation. All rights reserved.

SAS2IRCU: IocStatus = 4 IocLogInfo = 824180928
SAS2IRCU: SEP write request failed. Cannot perform LOCATE.
SAS2IRCU: Error executing command LOCATE.
```
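When the backplane will not accept LOCATE commands, one workaround people sometimes use is to keep a single disk busy with sustained reads, so that its activity LED stands out from the others. Below is a sketch of that approach. It assumes the chassis has per-slot activity LEDs and that the disk shows up as a device node such as `/dev/da5`, which is a hypothetical name that has to be looked up first. `read_for_led` is also a hypothetical helper name.

```shell
#!/bin/sh
# read_for_led (hypothetical helper name)
# Reads $2 MiB (default 10240, i.e. 10 GiB) from device $1 and discards
# the data, which keeps that disk's activity LED busy.
# It only reads; nothing is written to the disk.
# ASSUMPTION: the chassis shows a per-slot activity LED.
read_for_led() {
    dev=$1
    mib=${2:-10240}
    # bs given in bytes (1 MiB) so it works with both BSD and GNU dd
    dd if="$dev" of=/dev/null bs=1048576 count="$mib"
}
```

For example, run `read_for_led /dev/da5` and press Ctrl+C to stop it early. A faulted disk may not respond to reads at all. In that case, running the same command against each healthy disk in turn gives you the process of elimination described above.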

My question: can I power off the NAS, pull the disks to record their serial numbers, put all the disks back in, power the NAS back on, and be good to go?

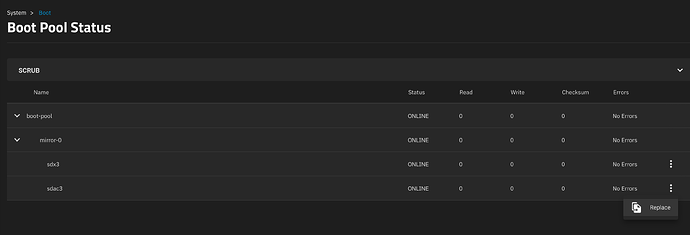
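Serial numbers can often be read from the shell without pulling any disks, using `smartctl -i`, which prints a serial-number line for each drive. Below is a sketch that assumes smartmontools is available (TrueNAS ships `smartctl`) and that the system is FreeBSD-based TrueNAS CORE, where `sysctl -n kern.disks` lists the disk names. That is an assumption about which edition this is. `extract_serial` and `list_serials` are hypothetical helper names.

```shell
#!/bin/sh
# extract_serial (hypothetical): print the serial from `smartctl -i` output
# on stdin. SAS drives label it "Serial number:" and SATA drives
# "Serial Number:", so the match ignores case.
extract_serial() {
    awk '
        tolower($0) ~ /^serial number:/ {
            sub(/^[^:]*:[ \t]*/, "")   # drop the label and the padding
            print
        }
    '
}

# list_serials (hypothetical): print "device serial" for every disk name
# given as an argument, e.g. list_serials da0 da1 da2.
# ASSUMPTION: each disk is /dev/<name> and smartctl is on the PATH.
list_serials() {
    for d in "$@"; do
        printf '%s %s\n' "$d" "$(smartctl -i "/dev/$d" | extract_serial)"
    done
}
```

Usage: `list_serials $(sysctl -n kern.disks)`. The result can then be compared with the serial in the alert to find the device name of the faulted disk.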

To replace the drive, I know I have to:

1. Storage → Pool → gear icon → Status
2. Select the faulty disk → Offline
3. Wait a few minutes, pull the disk, and insert the new disk
4. Select the same drive → Replace
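To be sure which entry to select in the Status screen, the faulted member can also be read from `zpool status`. Below is a sketch for FreeBSD-based TrueNAS CORE, where pool members are usually listed by `gptid/...` labels and `glabel status` maps each label to a partition such as `da5p2`. The helper names are hypothetical.

```shell
#!/bin/sh
# faulted_members (hypothetical): print each pool member whose STATE
# column is FAULTED, reading `zpool status <pool>` output on stdin.
# The "state:" header line is skipped so only config rows are matched.
faulted_members() {
    awk '$1 != "state:" && $2 == "FAULTED" { print $1 }'
}

# label_to_partition (hypothetical): map a gptid/... label given as $1
# to its partition (e.g. da5p2), reading `glabel status` output on stdin.
# ASSUMPTION: glabel prints Name, Status, Components columns.
label_to_partition() {
    awk -v label="$1" '$1 == label { print $3 }'
}
```

Usage: `zpool status Tank1 | faulted_members` prints the faulted label. Then `glabel status | label_to_partition <that label>` gives the partition, and `smartctl -i` on the matching `/dev/da…` device should show the serial.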

Thanks a lot