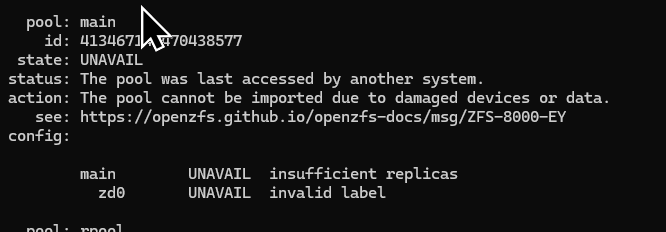

I had these “invalid label” entries on all my TrueNAS systems.

I noticed this after doing an export in order to import the checkpoint. When I tried to import main, it said the name main was ambiguous, and I had to import the pool by its number instead.

I tried:

zpool labelclear -f /dev/zd....

That clears the entry, but doing so is NOT recommended, and it didn’t solve the problem.

THE SOLUTION

So this turned out to be a misleading error message, and nothing was actually wrong. I couldn’t just import “main” because I have a zvol containing a ZFS pool that is also named “main”. That caused the ambiguity, which is why I had to use zpool import to find the correct number. If you don’t run TrueNAS inside TrueNAS, you’ll never run into this problem.
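To see which pools zpool import is offering and which names collide, a small filter over its output can help. This is a minimal sketch, assuming the `pool:` / `id:` line layout that zpool import prints when listing importable pools; `list_import_candidates` is a hypothetical helper name, not a TrueNAS or ZFS command.

```shell
#!/bin/sh
# Sketch only: list_import_candidates is a hypothetical helper, not a ZFS command.
# It reads `zpool import` output on stdin and prints one "<id> <name>" line per
# importable pool, then one "AMBIGUOUS ..." line for every name that appears
# more than once. Assumes the "pool: NAME" / "id: NUMBER" lines zpool import prints.
list_import_candidates() {
    awk '
        $1 == "pool:" { name = $2 }                      # remember the current pool name
        $1 == "id:"   { print $2, name; count[name]++ }  # pair the numeric ID with it
        END {
            for (n in count)
                if (count[n] > 1)
                    print "AMBIGUOUS " n " (" count[n] " pools)"
        }
    '
}
```

Running `zpool import | list_import_candidates` would then show each numeric ID next to its pool name, and the right pool can be imported by ID with `zpool import <id>` (or `zpool import --rewind-to-checkpoint <id>` when rewinding to a checkpoint).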

Here’s what happened.

Note: Most people will never run into this.

I created a VM so I could install TrueNAS on TrueNAS. It worked flawlessly, which is great. I named its pool “main”, just like the pool on my main system. That is where the confusion comes from: most people aren’t running TrueNAS in a TrueNAS VM with the same pool name.

So the VM itself was not the problem.

The problem was that the local backup copies of the zvol holding the VM’s pool showed up with the message above.

My main system (called truenas) has a pool called main. The virtualized TrueNAS also has a pool called main, which lives in a zvol on the real truenas.

I have three backups: an SSD pool attached to my main system, and two offsite systems where I use ZFS replication to copy the main pool to a dataset on each backup device.

So the main truenas system was showing the SSD backup of the truenas-pool zvol, and the two offsite systems had replicas of the same zvol.

So all three systems had a backup copy of the same zvol used for the VM.

This caused “zpool import” to give me a very misleading error message that isn’t even documented (if you follow the link in the message, it doesn’t describe the error I got).

So there was absolutely nothing wrong; it was a misleading error message caused by the particular choices I made.

But I learned a lot during the process.

I undid the label removal mistake simply by restoring an earlier snapshot, but I now realize that I had only removed the label from the backup copy, not the original.

Next time, I’ll first check which zvol the /dev/z### device belongs to by looking at the links in /dev/zvol. That would’ve saved a lot of time.
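That lookup can be scripted. This is a minimal sketch, assuming a Linux-based TrueNAS (SCALE) where udev creates `/dev/zvol/<pool>/<volume>` symlinks pointing at the `/dev/zdN` devices; `zvol_for_device` is a hypothetical helper, and its optional second argument only exists so it can be tried against a test directory.

```shell
#!/bin/sh
# Sketch only: zvol_for_device is a hypothetical helper, not a TrueNAS command.
# Prints every symlink under /dev/zvol (or the directory given as $2) that
# resolves to the given zd device. Assumes a Linux system (e.g. TrueNAS SCALE)
# where udev links /dev/zvol/<pool>/<volume> to /dev/zdN.
zvol_for_device() {
    dev=$(basename "$1")        # accept either "zd0" or "/dev/zd0"
    root=${2:-/dev/zvol}        # search root; overridable for testing
    find "$root" -type l | while IFS= read -r link; do
        target=$(readlink -f "$link")   # follow the symlink to the real device
        if [ "$(basename "$target")" = "$dev" ]; then
            printf '%s\n' "$link"
        fi
    done
}
```

For example, `zvol_for_device /dev/zd0` should print the dataset path of that zvol, which shows whether it is the original or a backup copy before running anything destructive such as zpool labelclear against it.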