Total newb to TrueNAS and ZFS, so please be gentle.

My storage dashboard is showing 93.7% usage with only 3.39TB left. I have 2 iSCSI datasets. I checked them both (they are connected to a Windows server), and there is only maybe 10TB used in total, out of a 54TB pool.

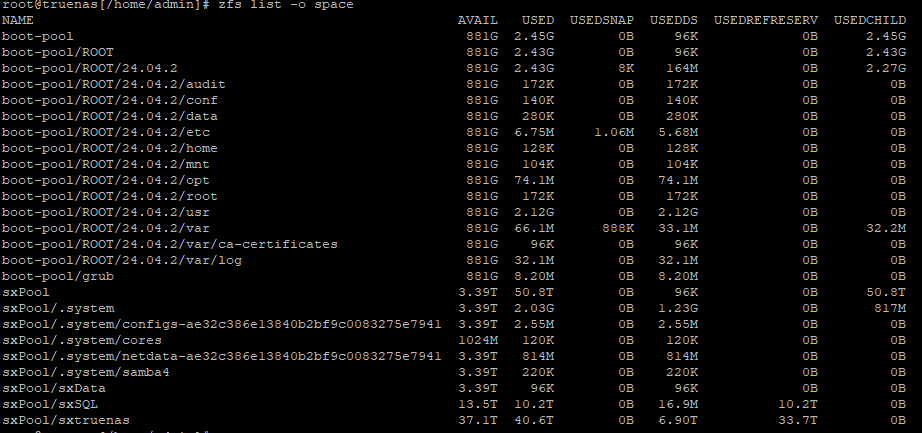

I don’t understand how or where it is getting the 93.7% usage warning.

I ran this command:

root@truenas[/home/admin]# zfs list -o space

| NAME | AVAIL | USED | USEDSNAP | USEDDS | USEDREFRESERV | USEDCHILD |
|---|---|---|---|---|---|---|
| boot-pool | 881G | 2.45G | 0B | 96K | 0B | 2.45G |
| boot-pool/ROOT | 881G | 2.43G | 0B | 96K | 0B | 2.43G |
| boot-pool/ROOT/24.04.2 | 881G | 2.43G | 8K | 164M | 0B | 2.27G |
| boot-pool/ROOT/24.04.2/audit | 881G | 172K | 0B | 172K | 0B | 0B |
| boot-pool/ROOT/24.04.2/conf | 881G | 140K | 0B | 140K | 0B | 0B |
| boot-pool/ROOT/24.04.2/data | 881G | 280K | 0B | 280K | 0B | 0B |
| boot-pool/ROOT/24.04.2/etc | 881G | 6.75M | 1.06M | 5.68M | 0B | 0B |
| boot-pool/ROOT/24.04.2/home | 881G | 128K | 0B | 128K | 0B | 0B |
| boot-pool/ROOT/24.04.2/mnt | 881G | 104K | 0B | 104K | 0B | 0B |
| boot-pool/ROOT/24.04.2/opt | 881G | 74.1M | 0B | 74.1M | 0B | 0B |
| boot-pool/ROOT/24.04.2/root | 881G | 172K | 0B | 172K | 0B | 0B |
| boot-pool/ROOT/24.04.2/usr | 881G | 2.12G | 0B | 2.12G | 0B | 0B |
| boot-pool/ROOT/24.04.2/var | 881G | 66.1M | 888K | 33.1M | 0B | 32.2M |
| boot-pool/ROOT/24.04.2/var/ca-certificates | 881G | 96K | 0B | 96K | 0B | 0B |
| boot-pool/ROOT/24.04.2/var/log | 881G | 32.1M | 0B | 32.1M | 0B | 0B |
| boot-pool/grub | 881G | 8.20M | 0B | 8.20M | 0B | 0B |
| sxPool | 3.39T | 50.8T | 0B | 96K | 0B | 50.8T |
| sxPool/.system | 3.39T | 2.03G | 0B | 1.23G | 0B | 817M |
| sxPool/.system/configs-ae32c386e13840b2bf9c0083275e7941 | 3.39T | 2.55M | 0B | 2.55M | 0B | 0B |
| sxPool/.system/cores | 1024M | 120K | 0B | 120K | 0B | 0B |
| sxPool/.system/netdata-ae32c386e13840b2bf9c0083275e7941 | 3.39T | 814M | 0B | 814M | 0B | 0B |
| sxPool/.system/samba4 | 3.39T | 220K | 0B | 220K | 0B | 0B |
| sxPool/sxData | 3.39T | 96K | 0B | 96K | 0B | 0B |
| sxPool/sxSQL | 13.5T | 10.2T | 0B | 16.9M | 10.2T | 0B |
| sxPool/sxtruenas | 37.1T | 40.6T | 0B | 6.90T | 33.7T | 0B |
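
Adding up the USEDREFRESERV column for the two iSCSI entries gives a rough idea of how much of the 50.8T counted as used might be reserved space rather than written data. This is only a minimal sketch: it assumes the two rows are pasted in by hand from the `zfs list -o space` output above and that both values carry a `T` suffix, and the variable names `rows` and `reserved` are made up for illustration.

```shell
# Rows copied by hand from the `zfs list -o space` output (the two iSCSI entries only).
# Columns: NAME AVAIL USED USEDSNAP USEDDS USEDREFRESERV USEDCHILD
rows='sxPool/sxSQL 13.5T 10.2T 0B 16.9M 10.2T 0B
sxPool/sxtruenas 37.1T 40.6T 0B 6.90T 33.7T 0B'

# Sum column 6 (USEDREFRESERV). Assumption: both values are in T, so the
# suffix is simply stripped before adding.
reserved=$(printf '%s\n' "$rows" | awk '{ sub(/T$/, "", $6); sum += $6 } END { printf "%.1f", sum }')

echo "USEDREFRESERV total: ${reserved}T of 50.8T used"
```

If that arithmetic holds, most of the usage shown on the dashboard would come from USEDREFRESERV rather than USEDDS (16.9M and 6.90T for the same two rows). Running `zfs get volsize,refreservation` on `sxPool/sxSQL` and `sxPool/sxtruenas` would probably be the next thing to check, though that is a guess at this point.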