What is a ZFS Pool “Checkpoint”?

If you do destructive or experimental actions against files and folders within a filesystem, you can always resort to rolling back to a dataset’s snapshot.

Even if you don’t wish to do a full rollback (which will lose any data written after the snapshot’s point in time), you can still retrieve old or deleted files from the read-only filesystem (i.e., the snapshot) on an individual basis.

But what safeguard exists if you do something outside of a filesystem?

What happens if you…

- …destroy a snapshot?

- …destroy an entire dataset?

- …overwrite good datasets with a misconfigured replication?

- …accidentally roll back a dataset, losing important snapshots?

- …add a new vdev to the pool that you instantly regret?

- …create chaos with a poorly vetted batch script that contains zfs commands?

- …trust ChatGPT with rm -rf against everything?

- …enable pool features that you immediately regret, thus forfeiting backwards compatibility?

- …rename / shuffle your dataset structure, only to immediately realize it was a bad idea?

This is where a pool checkpoint can come in handy.

You never want to find yourself in a situation where you need to resort to “rewinding” your pool back to a checkpoint, just as you never want to be in a situation where a seatbelt saves your life from a vehicle collision.

Ideally, you never mess up your pool.

Ideally, you never get into a car accident.

But just as seatbelts exist, so do pool checkpoints.

So then what is a ZFS Pool “Checkpoint”?

It is an immutable point-in-time state of the entire ZFS pool.

Managing Checkpoints with the command-line

To check the existence of a pool checkpoint, use the zpool get command, and look for a “size” under the VALUE column.

In this example, the pool “mypool” has no checkpoint:

zpool get checkpoint mypool

NAME PROPERTY VALUE SOURCE

mypool checkpoint - -

To create a checkpoint, use the zpool checkpoint command:

zpool checkpoint mypool

Now we can see the VALUE column has a “size”:

zpool get checkpoint mypool

NAME PROPERTY VALUE SOURCE

mypool checkpoint 540K -
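If you want to make the same check from a script, here is a minimal sketch. It assumes the -H (no header) and -o value (print only the VALUE column) options of zpool get; the function names checkpoint_value and has_checkpoint are made up for illustration.

```shell
#!/bin/sh
# Print only the VALUE column of the checkpoint property.
# This is a size such as "540K", or "-" when no checkpoint exists.
checkpoint_value() {
    zpool get -H -o value checkpoint "$1"
}

# Succeed (exit status 0) only when the pool currently has a checkpoint.
# Returns 2 if the zpool command itself fails (for example, unknown pool).
has_checkpoint() {
    value=$(checkpoint_value "$1") || return 2
    [ -n "$value" ] && [ "$value" != "-" ]
}

# Example usage:
#   if has_checkpoint mypool; then echo "mypool has a checkpoint"; fi
```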

If you want to remember when you created a checkpoint:

zpool status mypool | grep checkpoint

checkpoint: created Tue June 4 14:40:30 2024, consumes 540K

An empty output means that no checkpoint exists.

To discard a checkpoint, use the -d flag in the command:

zpool checkpoint -d mypool

Now we see that there is no “size” under the VALUE column once again:

zpool get checkpoint mypool

NAME PROPERTY VALUE SOURCE

mypool checkpoint - -

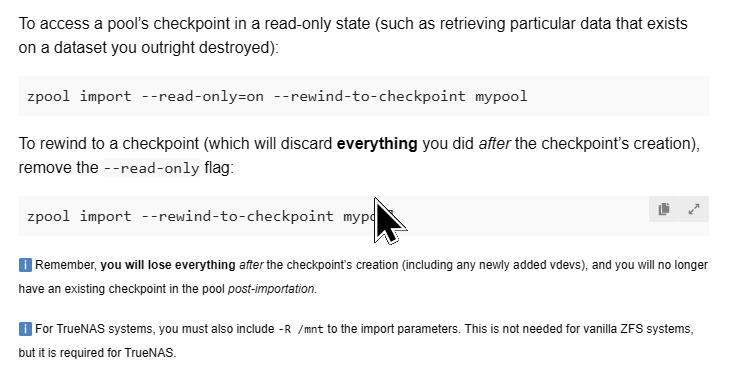

To actually “view” or “rewind” to a checkpoint, the pool must first be exported and then re-imported.

To access a pool’s checkpoint in a read-only state (such as retrieving particular data that exists on a dataset you outright destroyed):

zpool import -o readonly=on --rewind-to-checkpoint mypool

To rewind to a checkpoint (which will discard everything you did after the checkpoint’s creation), omit the -o readonly=on option:

zpool import --rewind-to-checkpoint mypool

Remember, you will lose everything after the checkpoint’s creation (including any newly added vdevs), and you will no longer have an existing checkpoint in the pool post-importation.

For TrueNAS systems, you must also add -R /mnt to the import parameters. This is not needed for vanilla ZFS systems, but it is required for TrueNAS.
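Put together, the "peek at the checkpoint without rewinding" sequence might look like the sketch below. The function names are hypothetical, and the second argument is an optional altroot (such as /mnt on TrueNAS). It assumes that a read-only import of the checkpointed state leaves the checkpoint itself untouched, so a later normal import returns you to the pool's current state. It also assumes nothing is using the pool at the time, since the export will fail otherwise.

```shell
#!/bin/sh
# Export the pool, then import its checkpointed state read-only so that
# files can be copied out. Nothing is rewound permanently.
# $1 = pool name, $2 = optional altroot (for example /mnt on TrueNAS).
open_checkpoint_readonly() {
    pool=$1
    altroot=${2:-}
    zpool export "$pool" || return 1
    if [ -n "$altroot" ]; then
        zpool import -o readonly=on --rewind-to-checkpoint -R "$altroot" "$pool"
    else
        zpool import -o readonly=on --rewind-to-checkpoint "$pool"
    fi
}

# Export the read-only view and import the pool normally again.
close_checkpoint_view() {
    pool=$1
    altroot=${2:-}
    zpool export "$pool" || return 1
    if [ -n "$altroot" ]; then
        zpool import -R "$altroot" "$pool"
    else
        zpool import "$pool"
    fi
}

# Example usage on TrueNAS:
#   open_checkpoint_readonly mypool /mnt
#   ...copy the files you need to another pool or machine...
#   close_checkpoint_view mypool /mnt
```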

To automate a daily checkpoint at 03:00, I have been using a single-line Cron Job on my TrueNAS Core 13.3 server. The same can be configured with SCALE.

Cron Job

Description: Daily checkpoint for my pool

Command: zpool checkpoint -d -w mypool; zpool checkpoint mypool

Run As: root

Schedule: 0 3 * * * (03:00 every day)

What this does is create a new checkpoint daily at 03:00. This means that I will never sit on a checkpoint for more than 24 hours, while hopefully giving me enough time to “use” a checkpoint if something goes wrong, as long as I “rewind” the pool or disable the Cron Job before the next time the clock hits 03:00. (Otherwise, the Cron Job will replace the good checkpoint with a new checkpoint after the mistake was made.)

The -w flag is important for the Cron Job, since you want the first half of the command to “exit” only after it finishes discarding the checkpoint. The semicolon is also important, since you want them to run one after the other, even if the first half “fails” because “no checkpoint currently exists”.
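If disabling the Cron Job in a hurry sounds error-prone, one possible variation is to wrap the same two commands in a small script that skips the rotation whenever a "hold" file exists. This is only a sketch: the hold file path and the function name rotate_checkpoint are hypothetical, and you would point the Cron Job at wherever you save the script.

```shell
#!/bin/sh
# Hypothetical marker file: create it (touch) to freeze the current
# checkpoint, delete it to let the daily rotation resume.
HOLD_FILE=${HOLD_FILE:-/root/checkpoint.hold}

rotate_checkpoint() {
    pool=$1
    if [ -e "$HOLD_FILE" ]; then
        echo "hold file $HOLD_FILE present; keeping current checkpoint on $pool"
        return 0
    fi
    # Discard the old checkpoint and wait (-w) until the discard finishes.
    # The error raised when no checkpoint exists is deliberately ignored,
    # which mirrors the semicolon in the one-line Cron Job.
    zpool checkpoint -d -w "$pool" 2>/dev/null || true
    zpool checkpoint "$pool"
}

# Example usage (as the Cron Job command, after saving this as a script
# that ends with the line below):
#   rotate_checkpoint mypool
```

With this in place, "touch /root/checkpoint.hold" right after a mistake keeps the good checkpoint around past 03:00 until you have decided what to do.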

Important caveats about Checkpoints

Do not treat pool checkpoints as you would dataset snapshots.

There are some important caveats and distinctions:

- A pool can only have a single checkpoint

- A checkpoint’s contents cannot be accessed from a (normal) imported pool; you must export and re-import with the --rewind-to-checkpoint option to access checkpoint-exclusive content

- A scrub on a (normal) imported pool will not check the data that only exists in the checkpoint

- A checkpoint is pool-wide, thus “rewinding” back to a checkpoint will undo everything in the pool that you’ve done after its creation

- You are not supposed to “sit” on a checkpoint: After you create one and then do some “stuff”, you should very soon make a decision on whether you want to discard the checkpoint or rewind to it

- You cannot remove or modify vdevs, which includes replacing a disk, if a checkpoint exists

- You can add a new vdev after creating a checkpoint, in which case rewinding to the checkpoint will act as if the new vdev (including any files saved after its addition) never existed

- A “hot spare” drive will not automatically activate if a pool has a checkpoint. This is an important consideration if you plan to use hot spares.

- If you’re going to resilver a pool, you should discard the existing checkpoint and temporarily disable any automatic checkpoints. You can resume with automatic checkpoints after the resilver completes.
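The resilver caveat can be partly automated. The sketch below assumes that zpool status prints the phrase "resilver in progress" on its scan line while a resilver is running; the function names are hypothetical. When a resilver is detected, it discards any existing checkpoint and does not create a new one.

```shell
#!/bin/sh
# Succeed when `zpool status` reports an active resilver for the pool.
# Assumption: the scan line contains the text "resilver in progress".
resilver_in_progress() {
    zpool status "$1" | grep -q "resilver in progress"
}

# Create a checkpoint only when no resilver is running.
checkpoint_unless_resilvering() {
    pool=$1
    if resilver_in_progress "$pool"; then
        echo "resilver running on $pool; discarding checkpoint, not creating a new one"
        # Ignore the error raised when there is no checkpoint to discard.
        zpool checkpoint -d -w "$pool" 2>/dev/null || true
        return 0
    fi
    zpool checkpoint "$pool"
}

# Example usage:
#   checkpoint_unless_resilvering mypool
```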

TL;DR: What should I do?

1. You want to try something that affects the entire pool or dataset(s). This includes “upgrading” pool features, destroying datasets or snapshots, adding a new vdev, trying out a batch script that uses zfs commands, receiving a replication stream to a dedicated backup pool that you might reconsider, and so on

2. Before doing so, you create a checkpoint with zpool checkpoint mypool

3. You go ahead and continue with whatever you decided on

4. You assess the results. You need to make a decision, since it’s unwise to let a checkpoint “sit” in a pool for too long.

4a. Are you happy with the results? Discard the checkpoint with zpool checkpoint -d mypool

4b. Are you unhappy with the results? Export the pool and then rewind to the checkpoint with zpool import --rewind-to-checkpoint mypool
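The workflow above can be sketched as a small wrapper that takes the checkpoint, runs your risky command, and then prints the two possible follow-ups instead of picking one for you. The function name with_checkpoint is hypothetical, and the decision to discard or rewind is deliberately left to a human.

```shell
#!/bin/sh
# Take a checkpoint, run a risky command, then remind the operator to decide.
# $1 = pool name; the remaining arguments are the command to run.
with_checkpoint() {
    pool=$1
    shift
    # Refuse to run the command if the checkpoint could not be created
    # (for example, because one already exists).
    zpool checkpoint "$pool" || return 1
    "$@"
    status=$?
    echo "Command exited with status $status."
    echo "Happy?   zpool checkpoint -d $pool"
    echo "Unhappy? zpool export $pool && zpool import --rewind-to-checkpoint $pool"
    return "$status"
}

# Example usage:
#   with_checkpoint mypool zfs destroy -r mypool/olddata
```

On TrueNAS, remember to add -R /mnt to the import command that the wrapper prints.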

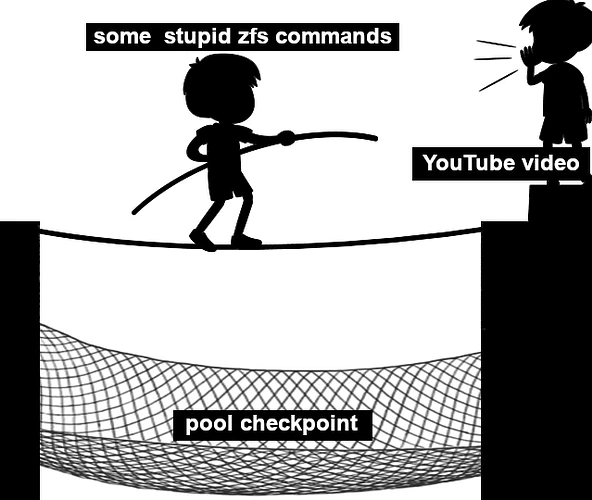

Always remember, kids!™

Use pool checkpoints as a safety net, with the mindset that you’ll never have to actually rewind your pool.

Wear seatbelts as a safety measure, with the mindset that you’ll never depend on them to save your life from a car accident.