Cool. I wanted to demonstrate that, for pools which already have a sVDEV, it’s fairly trivial to figure out how much of it is in use for planning purposes. In your case, it’s 25.8G.

Reporting this somewhere in the UI is a feature request I’d happily vote for if you want to make it.

special - - - - - - - - -

mirror-2 1.45T 25.8G 1.43T - - 14% 1.73% - ONLINE

da6p1 1.46T - - - - - - - ONLINE

gptid/65cf991f-90d0-11eb-acc9-ac1f6b738b00 1.46T - - - - - - - ONLINE

gptid/6602f626-90d0-11eb-acc9-ac1f6b738b00 1.46T - - - - - - - ONLINE
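For anyone who would rather pull that number out with a script, here is a minimal Python sketch. It assumes the listing comes from `zpool list -v` with the columns in the order NAME, SIZE, ALLOC, FREE, and that the first row under `special` is the vdev of interest. The function name `special_alloc` is my own invention, not part of any ZFS tool.

```python
def special_alloc(listing):
    """Return (name, size, alloc, free) for the first vdev row under 'special'."""
    in_special = False
    for line in listing.splitlines():
        fields = line.split()
        if not fields:
            continue  # skip blank lines
        if fields[0] == "special":
            in_special = True  # the next non-blank row is the special vdev
            continue
        if in_special and len(fields) >= 4:
            return tuple(fields[:4])
    return None  # pool has no special vdev in this listing


# Rows copied from the listing above.
sample = """\
special - - - - - - - - -
mirror-2 1.45T 25.8G 1.43T - - 14% 1.73% - ONLINE
da6p1 1.46T - - - - - - - ONLINE
"""

print(special_alloc(sample))
```

The third element of the returned tuple is the ALLOC figure, i.e. the 25.8G mentioned above.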


I’ll amend more this weekend and look into your script also. In the meantime, I have a deadline I have to meet. Thank you for your help.

Dumb question: when writing to a single-VDEV rust pool, what are your transfer speeds like these days? Over 10GbE I recently transferred some large files to a dataset with 1M recordsize and was amazed at 400MB/s transfer speeds. Is that common now, or is the sVDEV affecting this?

I get about 5Gb/s on both reads and writes on this pool (config shown above).

Ex

Fascinating. I’m only running a single VDEV, so I would have expected your system to be performing closer to 10GbE. Perhaps the NAS was busy with other stuff?
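Since the figures in this exchange mix megabytes per second and gigabits per second, a quick conversion may help when comparing them. This is only a sketch: it assumes decimal units (1 MB = 10^6 bytes, 1 Gb = 10^9 bits), reads "5Gb" as 5 Gb/s, and ignores protocol overhead entirely.

```python
def mb_per_s_to_gbit(mb_per_s):
    """Convert megabytes per second to gigabits per second (decimal units)."""
    return mb_per_s * 8 / 1000


def gbit_to_mb_per_s(gbit):
    """Convert gigabits per second to megabytes per second (decimal units)."""
    return gbit * 1000 / 8


print(mb_per_s_to_gbit(400))  # the 400MB/s transfer, in Gb/s
print(gbit_to_mb_per_s(5))    # the 5Gb/s pool, in MB/s
print(gbit_to_mb_per_s(10))   # 10GbE line rate, in MB/s
```

On those assumptions, both reported speeds sit well below what the 10GbE link itself could carry.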

More likely a single-threaded, single-client limitation. The difference would show up with many clients working in parallel.


Stux

May 18, 2024, 10:43pm

29

Amazed good? Or amazed bad?


Definitely amazed good! In the past the best I could hope for was 250MB/s.


Stux

May 19, 2024, 12:04am

31

Might be something to do with not having to also write the metadata to the rust disks.

You said a single vdev… what is it?

8x 10TB in RaidZ3?

Single z3 VDEV of eight He10 drives supplemented by a sVDEV with S3610 in 4-way mirror. I also spent a lot of time rebalancing the pool to ensure that as many small files migrated into the sVDEV as possible. Iocage and apps reside entirely on the sVDEV.

If I were to implement a VM, it would be on a dataset that lives 100% on the sVDEV of the pool.

Apologies, I don’t consider the sVDEV a second VDEV for the pool. Technically, it likely is?

Hey @kris , @will , and @Captain_Morgan ,

Would you consider the sVDEV planning article above decent enough for inclusion in the Resources section?

If so, what is the process to submit such an article for consideration re: inclusion in the Resource Section since I cannot presently post there?

kris

May 24, 2024, 4:37pm

34

Absolutely I think it is. @HoneyBadger do you want to move that to resources?

dan

May 24, 2024, 5:55pm

35

What I’ve done with mine is to post them in TrueNAS General, then flag my own post, give a reason of “something else,” and then in the text field ask that it be moved to Resources.


Done - @Constantin you can find your new Resource here:

This resource was originally sourced from a Suggestion thread here:

https://forums.truenas.com/t/suggestion-better-svdev-planning-oversight-tools-in-gui/394/


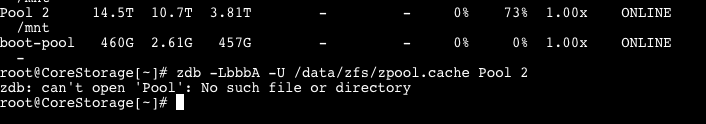

I tried to use `zdb -LbbbA -U /data/zfs/zpool.cache poolname` but I get the following error:

Any idea what I could be doing wrong?

etorix

February 10, 2025, 1:40pm

38

What’s this “2” at the end of the line? Do you have a space in the pool name?

And, by all means, do not use spaces or weird characters in pool and dataset names.

Yes, it’s Pool 1 and Pool 2. What command should I use?
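One common way to deal with a name that contains a space is to quote it so the shell passes it to `zdb` as a single argument. The Python sketch below only builds and prints the command; it does not run it. It assumes the pool is literally named `Pool 2` and reuses the cache path from the command quoted earlier. The helper name `zdb_command` is hypothetical.

```python
import shlex


def zdb_command(pool, cache="/data/zfs/zpool.cache"):
    """Build the zdb invocation as an argument list and as a shell-safe string."""
    args = ["zdb", "-LbbbA", "-U", cache, pool]
    return args, shlex.join(args)


# "Pool 2" is the name as described in the post; the real name may differ.
args, shell_line = zdb_command("Pool 2")
print(shell_line)
```

The printed line has the pool name wrapped in single quotes, which is what keeps the trailing "2" from being read as a separate argument.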

@etorix

I was able to run the command and here’s the output:

Traversing all blocks ...

39.2T completed (47718MB/s) estimated time remaining: 0hr 00min 00sec

bp count: 273931836

ganged count: 0

bp logical: 35487125886976 avg: 129547

bp physical: 34389847032320 avg: 125541 compression: 1.03

bp allocated: 43050878574592 avg: 157159 compression: 0.82

bp deduped: 0 ref>1: 0 deduplication: 1.00

Normal class: 43050878550016 used: 53.83%

Embedded log class 16384 used: 0.00%

additional, non-pointer bps of type 0: 1518161

number of (compressed) bytes: number of bps

17: 3318 *

18: 1384 *

19: 27 *

20: 207 *

21: 198 *

22: 1248 *

23: 61 *

24: 979 *

25: 138 *

26: 76 *

27: 675340 ****************************************

28: 3692 *

29: 9781 *

30: 12176 *

31: 141 *

32: 1530 *

33: 2856 *

34: 36 *

35: 1987 *

36: 57 *

37: 154 *

38: 9987 *

39: 129 *

40: 202 *

41: 187 *

42: 6260 *

43: 496 *

44: 123 *

45: 775 *

46: 2391 *

47: 1589 *

48: 152 *

49: 2152 *

50: 378 *

51: 1461 *

52: 4338 *

53: 8078 *

54: 2054 *

55: 4982 *

56: 5788 *

57: 1405 *

58: 2373 *

59: 1287 *

60: 838 *

61: 766 *

62: 2011 *

63: 1026 *

64: 1960 *

65: 1670 *

66: 2727 *

67: 2925 *

68: 1467 *

69: 866 *

70: 1243 *

71: 1570 *

72: 5891 *

73: 743 *

74: 678 *

75: 904 *

76: 881 *

77: 945 *

78: 1228 *

79: 1046 *

80: 938 *

81: 3387 *

82: 30191 **

83: 15531 *

84: 80625 *****

85: 527640 ********************************

86: 3008 *

87: 1021 *

88: 2146 *

89: 1445 *

90: 1752 *

91: 1777 *

92: 2815 *

93: 3311 *

94: 1291 *

95: 1051 *

96: 1586 *

97: 1217 *

98: 1082 *

99: 865 *

100: 785 *

101: 694 *

102: 628 *

103: 843 *

104: 989 *

105: 1668 *

106: 1095 *

107: 3665 *

108: 13567 *

109: 3777 *

110: 1231 *

111: 1423 *

112: 1759 *

Dittoed blocks on same vdev: 1512665

Blocks LSIZE PSIZE ASIZE avg comp %Total Type

- - - - - - - unallocated

2 32K 8K 48K 24K 4.00 0.00 object directory

1 32K 8K 48K 48K 4.00 0.00 L1 object array

73 36.5K 36.5K 1.71M 24K 1.00 0.00 L0 object array

74 68.5K 44.5K 1.76M 24.3K 1.54 0.00 object array

2 32K 4K 24K 12K 8.00 0.00 packed nvlist

- - - - - - - packed nvlist size

93 11.6M 372K 2.18M 24K 32.00 0.00 bpobj

- - - - - - - bpobj header

- - - - - - - SPA space map header

73 1.14M 292K 1.71M 24K 4.00 0.00 L1 SPA space map

4.68K 597M 40.1M 197M 42.1K 14.88 0.00 L0 SPA space map

4.75K 598M 40.4M 199M 41.8K 14.80 0.00 SPA space map

6 336K 336K 432K 72K 1.00 0.00 ZIL intent log

96 12M 384K 1.50M 16K 32.00 0.00 L5 DMU dnode

96 12M 384K 1.50M 16K 32.00 0.00 L4 DMU dnode

96 12M 384K 1.50M 16K 32.00 0.00 L3 DMU dnode

96 12M 388K 1.52M 16.2K 31.67 0.00 L2 DMU dnode

198 24.8M 4.77M 13.4M 69.3K 5.19 0.00 L1 DMU dnode

93.9K 1.47G 377M 1.48G 16.1K 3.98 0.00 L0 DMU dnode

94.4K 1.54G 383M 1.50G 16.2K 4.11 0.00 DMU dnode

97 388K 388K 1.52M 16.1K 1.00 0.00 DMU objset

- - - - - - - DSL directory

13 6.50K 1K 48K 3.69K 6.50 0.00 DSL directory child map

11 10.5K 4K 24K 2.18K 2.62 0.00 DSL dataset snap map

23 322K 80K 480K 20.9K 4.02 0.00 DSL props

- - - - - - - DSL dataset

- - - - - - - ZFS znode

- - - - - - - ZFS V0 ACL

334 10.4M 1.31M 5.25M 16.1K 7.95 0.00 L3 ZFS plain file

31.0K 993M 137M 529M 17.0K 7.26 0.00 L2 ZFS plain file

1.22M 39.0G 12.8G 35.6G 29.2K 3.03 0.09 L1 ZFS plain file

259M 32.2T 31.3T 39.1T 155K 1.03 99.90 L0 ZFS plain file

260M 32.3T 31.3T 39.2T 154K 1.03 99.99 ZFS plain file

12.3K 392M 49.0M 196M 16K 8.00 0.00 L1 ZFS directory

822K 837M 160M 1.32G 1.64K 5.22 0.00 L0 ZFS directory

834K 1.20G 209M 1.51G 1.85K 5.87 0.00 ZFS directory

10 10K 10K 160K 16K 1.00 0.00 ZFS master node

- - - - - - - ZFS delete queue

- - - - - - - zvol object

- - - - - - - zvol prop

- - - - - - - other uint8[]

- - - - - - - other uint64[]

- - - - - - - other ZAP

- - - - - - - persistent error log

1 32K 4K 24K 24K 8.00 0.00 L1 SPA history

5 640K 40K 216K 43.2K 16.00 0.00 L0 SPA history

6 672K 44K 240K 40K 15.27 0.00 SPA history

- - - - - - - SPA history offsets

- - - - - - - Pool properties

- - - - - - - DSL permissions

- - - - - - - ZFS ACL

- - - - - - - ZFS SYSACL

- - - - - - - FUID table

- - - - - - - FUID table size

1 1K 1K 24K 24K 1.00 0.00 DSL dataset next clones

- - - - - - - scan work queue

288 144K 512 16K 56 288.00 0.00 ZFS user/group/project used

- - - - - - - ZFS user/group/project quota

- - - - - - - snapshot refcount tags

- - - - - - - DDT ZAP algorithm

- - - - - - - DDT statistics

4.27K 2.20M 2.20M 68.3M 16K 1.00 0.00 System attributes

- - - - - - - SA master node

10 15K 15K 160K 16K 1.00 0.00 SA attr registration

20 320K 80K 320K 16K 4.00 0.00 SA attr layouts

- - - - - - - scan translations

- - - - - - - deduplicated block

98 274K 240K 1.90M 19.8K 1.14 0.00 DSL deadlist map

- - - - - - - DSL deadlist map hdr

1 1K 1K 24K 24K 1.00 0.00 DSL dir clones

- - - - - - - bpobj subobj

- - - - - - - deferred free

- - - - - - - dedup ditto

2 64K 8K 48K 24K 8.00 0.00 L1 other

129 537K 101K 648K 5.02K 5.32 0.00 L0 other

131 601K 109K 696K 5.31K 5.51 0.00 other

96 12M 384K 1.50M 16K 32.00 0.00 L5 Total

96 12M 384K 1.50M 16K 32.00 0.00 L4 Total

430 22.4M 1.69M 6.75M 16.1K 13.30 0.00 L3 Total

31.1K 1005M 137M 530M 17.0K 7.32 0.00 L2 Total

1.23M 39.4G 12.9G 35.8G 29.1K 3.05 0.09 L1 Total

260M 32.2T 31.3T 39.1T 154K 1.03 99.91 L0 Total

261M 32.3T 31.3T 39.2T 153K 1.03 100.00 Total

Block Size Histogram

block psize lsize asize

size Count Size Cum. Count Size Cum. Count Size Cum.

512: 130K 64.8M 64.8M 130K 64.8M 64.8M 0 0 0

1K: 117K 148M 213M 117K 148M 213M 0 0 0

2K: 84.6K 216M 429M 84.6K 216M 429M 0 0 0

4K: 5.83M 23.4G 23.8G 54.7K 302M 732M 0 0 0

8K: 2.34M 24.0G 47.8G 59.4K 654M 1.35G 5.72M 45.7G 45.7G

16K: 431K 8.90G 56.7G 198K 3.74G 5.09G 1.91M 31.7G 77.4G

32K: 726K 32.7G 89.4G 1.33M 43.6G 48.6G 1.65M 60.0G 137G

64K: 2.38M 222G 311G 57.6K 5.32G 54.0G 1.65M 161G 298G

128K: 248M 31.0T 31.3T 258M 32.2T 32.3T 249M 38.9T 39.2T

256K: 0 0 31.3T 0 0 32.3T 131 40.4M 39.2T

512K: 0 0 31.3T 0 0 32.3T 0 0 39.2T

1M: 0 0 3

If I read the guide correctly, it seems like the metadata on this pool comes to exactly 35.8GB.
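As a cross-check on that figure, here is a rough Python sketch that adds up ASIZE values from the block statistics above. Which rows count as metadata is my assumption (the L1 to L5 totals plus the larger non-file L0 rows), and the guide may define it differently. ASIZE on a RAID-Z pool also includes parity and padding, so it will not map one-to-one onto a mirrored sVDEV.

```python
UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def parse_size(text):
    """Convert a zdb-style size such as '35.8G' or '512' to bytes."""
    text = text.strip()
    if text and text[-1] in UNITS:
        return float(text[:-1]) * UNITS[text[-1]]
    return float(text)


# ASIZE values copied from the zdb output above. The choice of rows is an
# assumption about what counts as metadata, not something zdb reports itself.
asize = {
    "L5 Total": "1.50M",
    "L4 Total": "1.50M",
    "L3 Total": "6.75M",
    "L2 Total": "530M",
    "L1 Total": "35.8G",
    "L0 DMU dnode": "1.48G",
    "L0 ZFS directory": "1.32G",
    "L0 SPA space map": "197M",
    "System attributes": "68.3M",
}

total = sum(parse_size(value) for value in asize.values())
print(f"{total / 1024**3:.1f} GiB")
```

Under those assumptions the sum lands a few GiB above the L1 Total alone, so 35.8G is better read as a lower bound than an exact figure.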

However, I’m a bit confused about the Block Size Histogram and need help with the calculation so that I can choose the right capacity for the special VDEV.
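One way to read the histogram is to look up the cumulative ("Cum.") figure at a candidate `special_small_blocks` threshold. The Python sketch below does that lookup with rows copied from the output above. Several things here are assumptions: I treat each row label as the threshold, which may over- or under-count depending on how zdb buckets sizes, and which column (psize, lsize or asize) best predicts placement is not settled in this thread, so it is left as a parameter. The histogram also appears to cover all blocks, metadata included, so these figures should not simply be added on top of a metadata estimate.

```python
UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def parse_size(text):
    """Convert a zdb-style size such as '48.6G' or '512' to bytes."""
    text = text.strip()
    if text and text[-1] in UNITS:
        return float(text[:-1]) * UNITS[text[-1]]
    return float(text)


# (block size, psize Cum., lsize Cum., asize Cum.) copied from the histogram.
HISTOGRAM = [
    ("512", "64.8M", "64.8M", "0"),
    ("1K", "213M", "213M", "0"),
    ("2K", "429M", "429M", "0"),
    ("4K", "23.8G", "732M", "0"),
    ("8K", "47.8G", "1.35G", "45.7G"),
    ("16K", "56.7G", "5.09G", "77.4G"),
    ("32K", "89.4G", "48.6G", "137G"),
    ("64K", "311G", "54.0G", "298G"),
    ("128K", "31.3T", "32.3T", "39.2T"),
]
COLUMNS = {"psize": 1, "lsize": 2, "asize": 3}


def cumulative_at(threshold, column="psize"):
    """Cumulative bytes listed for rows whose label is <= threshold."""
    limit = parse_size(threshold)
    best = 0.0
    for row in HISTOGRAM:
        if parse_size(row[0]) <= limit:
            best = parse_size(row[COLUMNS[column]])
    return best


for threshold in ("16K", "32K", "64K"):
    gib = cumulative_at(threshold) / 1024**3
    print(f"special_small_blocks={threshold}: about {gib:.1f} GiB (psize)")
```

Comparing a few thresholds this way shows how quickly the space requirement grows as the cutoff approaches the dataset recordsize.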

For background, this is a 5*RAID-Z1 with Seagate EXOS X16 16TB disks.

Also, is there any resource or information on what kind of drives to select for the special VDEV? I’m not sure whether I can use normal consumer-grade drives or need Intel Optane (best suited, I guess, though I’m not really sure), whether the drives need PLP, or whether there are other features I’m not aware of. I plan to use a 4-way mirror, maybe with 250GB disks. Please advise.