Oh, that makes sense. So if I understand correctly stable features get only bug fixes in point releases but experimental feature can get any change in point release.

I didnt know that, thanks for clarification.

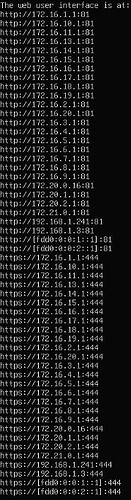

Why does Middleware bind web interfaces to every network interface, including docker networks? Isn’t that a bit insecure, especially to a network meant for reverse proxy facing the internet? Don’t remember this happening with Electric Eel.

I have an encrypted zvol which is a disk for a VM which resides within an encrypted parent dataset outside of the .ix-virt dataset. Under the Fangtooth RC I attached this to an incus VM and it is running fine as a VM. Now under 25.04 it seems that, if I wanted to repeat this step i.e. attach that zvol to a new VM instance, this is no longer possible as the UI now requires that zvol to be cloned or moved into the .ix-virt dataset (which is not possible as that would move the zvol outside of its encrypted parent which is not permitted). Is it possible to encrypt the zvols for VMs under 25.04 (or for that matter encrypt the datasets for LXC instances) - or does 25.04 force only unencruopted zvol and datasets to be used for VMs (other than bind-mounts of encrypted datasets)? If so, is there a plan to add the ability to use encrypted zvols and datasets for instances in the future?

Not just a reporting bug either, you can actually access webui from withing a docker network, even if you’ve set up iptables rules to prevent that.

I can’t see any record of these changes… if it impacts you, please start another thread and copy a link into your posts.

This is general advice for most issues found in a new release, use this thread to notify us of an issue, but start a new thread to troubleshoot the issue. Just include a link to the new thread if possible.

In the troubleshooting thread, we may recommend a bug report, but probably want some other diagnostic steps first.

Upgraded from RC.1 to the release version, as soon as it was available.

I previously had system freezes on RC.1, likely related to Incus VMs: TrueNAS 25.04-RC.1 is Now Available! - #141 by TheJulianJES

I did a bit of research after that post and stumbled upon this thread: Incus VM Crashing, which I also experienced on RC.1.

After upgrading to the Fangtooth release version, I imported the existing ZVOLs into the managed Incus volumes and kinda hope that the freezing and crashing problems are resolved with that (all VMs use VirtIO-SCSI). I’ll keep monitoring obviously.

However, I’ve been getting checksum errors on my main (encrypted) HDD pool on a i7 7700k/Z270F system since upgrading from 24.10 to 25.04, only 1 or 2 checksum errors per run, sometimes spread across multiple disks.

I did five(!) separate full scrubs with system reboots in between and got checksum errors every time. I obviously suspected the disks first, but they seem to check out fine. A full/long SMART test also completed without issues. It was also unlikely to have multiple disks “failing” at the same time with widely differing production dates.

Since the system doesn’t have ECC ram, I ran a multiple hour-long memory test, which checked out fine as well.

Later, I also read Frequent Checksum Errors During Scrub on ZFS Pool · Issue #16452 · openzfs/zfs · GitHub (about an AMD CPU) and someone mentioned that VMs can sometimes impact ZFS checksum calculation on “flawed” hardware.

Finally, I shut down both of my Windows Incus VMs that were running on a separate (encrypted) SSD pool, unset the Incus/VM pool to completely disable that part of TrueNAS, and did another reboot of the machine.

Now, a sixth scrub is about 70% done and I’ve got no checksum errors so far, whilst I’ve always had ones before before with 25.04 and the VMs running.

(24.10 was fine since release with the same VMs and pool, never any issues during scrubs.)

Digging into the checksum errors, the same zio_objset and zio_object were present across different scrubs. The same part of encryption metadata, written/created months ago according to ZDB on a particular dataset.

Other errors were present as well, but they differed a bit.

The same object being seemingly corrupt multiple times reinforced my suspicion of a hard drive issue at first, but I now feel like the CPU keeps messing up the same calculation for the checksum there, for some reason…

This is obviously a very weird issue, especially since 24.10 VMs weren’t seemingly affecting scrubs on an entirely different ZFS pool, but I’d be really interested to see if anyone else suddenly sees checksum errors on their pools when running Incus VMs.

The SSD pool where the Incus VMs were running is encrypted, as well as the main HDD pool with the issues, which seems to impact/change ZFS checksum calculation, compared to if the pools/datasets weren’t encrypted, and thus contributes to the issue(?)

I’ll do some more scrubs, re-enable Incus VMs to verify the issue appears/vanishes like described, and so on. I’ll likely also try swapping the 7700k/Z270F with a Ryzen 5650G on an x570 board, keeping everything else the same to see if the issue also manifests on a different platform.

I can’t really imagine what Incus would be doing differently to possibly cause such a weird issue like that. I’m also not aware of the i7 7700k having/causing any issues like this. If there’s any more ideas on what else to test, let me know.

EDIT: New thread for future updates on this here: ZFS checksum errors due to Incus VMs on 25.04

No ideas,

I do recommend starting a new thread and perhaps there will be some suggestions on diagnostics. AFAIK, its a unique problem.

Fangtooth.0 has passed 10,000 users in less than 2 days.

Its generally good and we appreciate the bug reports. The experimental Incus (instances) functionality is getting the most reports and fixes.

Regading bug report NAS-135396 where there was no Disk Temperature metric under Reporting/Disk. This has been resolved with a clean install from the downloadable ISO 25.04.0 so I do not know what the issue was.

Great release!

Not sure if I should open a bug report about this or not.

It’s happened for quite awhile now. Anytime that a network interface is added, deleted, or modified, when you click test, it always takes down Incus Instances. This was an issue in with jailmaker as well.

Basically if I create a new vlan or bridge, it will knock everything offline. I don’t recall if this affects apps or not.

I have a bond setup with several vlan and bridge adapters. It’s a bit annoying, since there is a bond with lacp. It would be nice if things didn’t go offline.

Let me know your thoughts. Thank you.

I can concurr with this reported behaviour. I have resolved to wait patiently while the Incus focused bug fixes happen. I tried to run a few VMs yesterday and was met with network perculiarities.

Pretty much uneventful upgrade from latest EE on my secondary system. My two apps (Dockge and Tailscale) started up without incident, and Dockge proceeded to start my various stacks also without incident. Migrating my one VM wasn’t too much of a chore, except that I had to fire up a VNC client to get console access to that VM in order to reconfigure its networking, because the interface name in the VM had changed.

SMB shares continue to work. NFS shares continue to work. Time Machine continues to work. UPS reporting continues to show completely nonsensical units (specifically, runtime is reported in days), but that’s been the case for a while. The SCALE logo now seems to be abandoned.

Now off to see about setting up a LXC…

I guess I understand the network going offline if I were messing with my bond or main bridge the nas IP is running off, but it happens whenever I add a new vlan or bridge and not modifying existing interfaces.

I’m just not sure if it should be filled as a bug report or feature request.

OK, “Disk” to set up mounted storage for a LXC is just weird. But it’s otherwise up and running to obtain and distribute certs through the LAN, and to sync my Compose stacks using Gitwatch.

Does that include the network interfaces that Docker creates automatically e.g. when bringing up a new Compose stack? (I’m debating whether I’m feeling brave enough to upgrade my VM-using system to Fangtooth. So far, the gotchas I’ve seen documented all sound like they won’t affect me, but if the VM goes down every time I tweak my Docker setup, that’s a different story altogether.)

Yes, I’d consider this a bug, Please submit and report bug-ticket ID here.

Even if its difficult to fix, we should be made aware so w can test and verify why its happening.

That’s worth noting.

Can you see any reason why the name I/F name changed?

If there’s nothing in release notes, we should fix it there.

Can you suggest a change to simplify process for later migratees?

Will do. This has been happening all the way back to 23.10, I’ve never really said anything, but I’m just tired of adding a VLAN or bridge and everything going offline each time, especially since I’m not modifying the main TN bridge or bond. Thank you for the response. ![]()

I can only assume it’s because the underlying virtual machine has changed. IIRC it was enp3 under EE; it’s enp5s0 under 25.04.

Automate the migration, obviously–which you should have done before releasing this. And if that meant you couldn’t keep your self-imposed six-month release schedule, so what? It isn’t like anyone’s going to be hurt if Fangtooth is 25.06 rather than 25.04.

It’s one thing for LXCs to be experimental–they’re a new feature, after all. It’s quite another to take out what you claimed[1] to be a production-ready VM system (SCALE was well into “enterprise” territory, and there were no warnings or disclaimers in the product about this feature) and replace it with one you call experimental.

incorrectly, IMO ↩︎