Summary

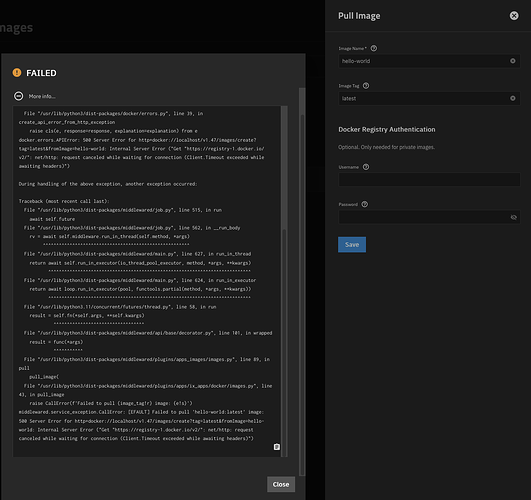

Docker on TrueNAS 25.04.2.4 (Fangtooth) consistently fails to pull images from any container registry with timeout errors. Network connectivity is confirmed working, DNS resolution succeeds, and curl from within Docker’s network namespace can successfully connect to registries. However, Docker’s own pull mechanism fails with “net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)”.

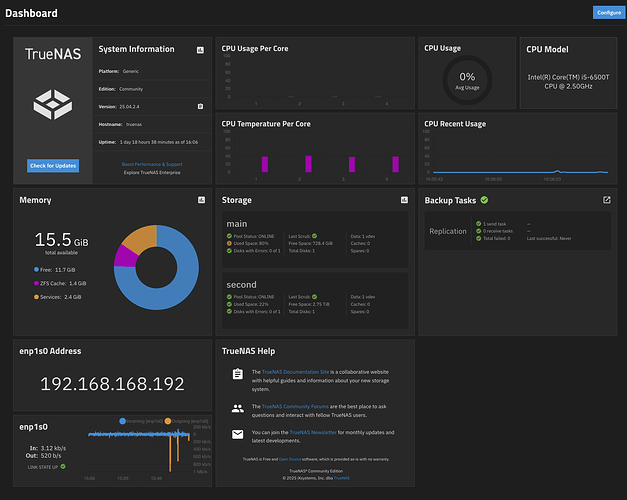

System Information

-

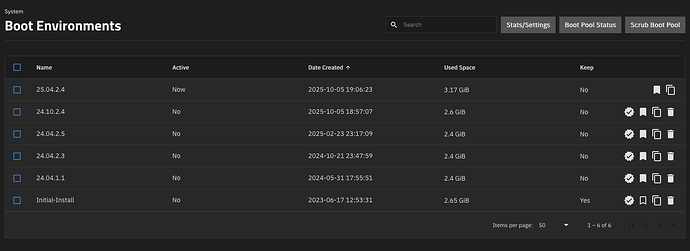

TrueNAS version: 25.04.2.4

-

Docker version: 27.5.0 (community edition)

-

containerd version: 1.7.25

-

runc version: 1.2.4

-

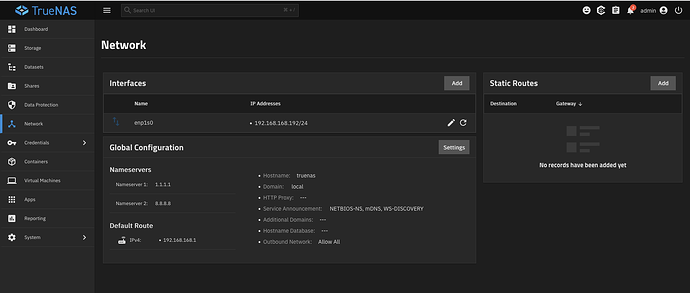

Network: 192.168.168.192/24 on working LAN

-

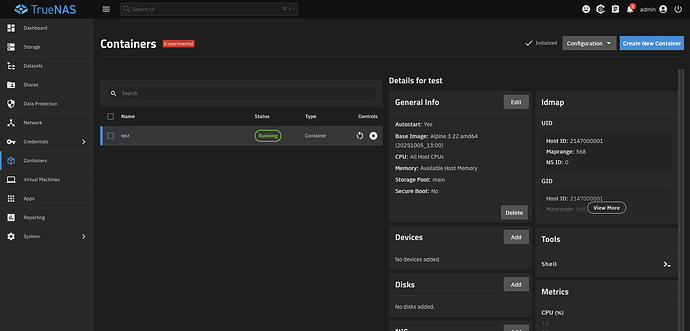

Migration context: Upgraded from TrueNAS 24.04.2.5 (trying to migrate from k3s to Docker-based apps manually)

Problem Description

All docker pull commands fail with timeout errors:

    $ sudo docker pull hello-world
    Using default tag: latest
    Error response from daemon: Get "https://registry-1.docker.io/v2/": net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

This affects all registries (Docker Hub, ghcr.io, gcr.io, quay.io).

What Works

- Host can reach Docker Hub:

      $ curl https://registry-1.docker.io/v2/
      # Returns 401 (expected - authentication required)

- DNS resolution works:

      $ nslookup registry-1.docker.io
      # Resolves correctly to multiple IPs

- Docker daemon starts and runs normally
- No proxy configuration present
- No firewall blocking (iptables OUTPUT chain is ACCEPT)

Diagnostic Journey

Initial Investigation

Docker daemon logs show the request timing out about 15 seconds after the pull starts, before any connection attempt:

    time="2025-10-05T23:32:10.576807676+01:00" level=debug msg="Trying to pull hello-world from https://registry-1.docker.io"
    time="2025-10-05T23:32:25.577663725+01:00" level=warning msg="Error getting v2 registry: Get \"https://registry-1.docker.io/v2/\": context deadline exceeded"
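The gap between the two log entries above can be computed with a small helper. This is a minimal sketch: `log_gap_seconds` is a hypothetical name, and it assumes both timestamps fall on the same day and use the timestamp format shown in the logs.

```shell
# Print the number of seconds between two dockerd log timestamps.
# Assumption: both timestamps are on the same calendar day and in the same time zone.
log_gap_seconds() {
    printf '%s %s\n' "$1" "$2" | awk '
        # Convert the time-of-day part of a timestamp to seconds.
        function secs(ts,    t, p) {
            split(ts, t, "T")
            split(t[2], p, ":")
            # p[3] looks like "10.576807676+01"; adding 0 keeps the numeric prefix.
            return p[1] * 3600 + p[2] * 60 + (p[3] + 0)
        }
        { printf "%.3f\n", secs($2) - secs($1) }'
}

log_gap_seconds "2025-10-05T23:32:10.576807676+01:00" \
                "2025-10-05T23:32:25.577663725+01:00"
# prints 15.001
```

A gap this close to a round 15 seconds looks more like a fixed client-side timeout than a variable network delay.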

tcpdump during pull attempt shows zero packets sent to registry:

    $ sudo tcpdump -i any -n host registry-1.docker.io
    # 0 packets captured - Docker doesn't even attempt the connection

IPv6 Investigation

Docker was attempting to use IPv6 despite the network being IPv4-only:

    $ sudo journalctl -u docker | grep -i ipv6
    time="2025-10-05T23:31:22.902956494+01:00" level=debug msg="Adding route to IPv6 network fdd0::1/64 via device docker0"
    time="2025-10-05T23:31:22.903089365+01:00" level=debug msg="Could not add route to IPv6 network fdd0::1/64 via device docker0: network is down"

Mitigation attempted:

- Removed IPv6 configuration from `/etc/docker/daemon.json`
- Disabled IPv6 in Docker’s network namespace: `nsenter -t $(pidof dockerd) -n sysctl -w net.ipv6.conf.all.disable_ipv6=1`
- Created systemd startup script to permanently disable IPv6 in Docker’s namespace

Result: IPv6 disabled successfully, but pull still fails.

DNS Investigation

Testing DNS within Docker’s network namespace initially showed a resolution timeout:

    $ sudo nsenter -t $(pidof dockerd) -n curl -v --max-time 10 https://registry-1.docker.io/v2/
    * Resolving timed out after 10000 milliseconds

Mitigation attempted:

Added explicit DNS servers to /etc/docker/daemon.json:

    {
      "dns": ["1.1.1.1", "8.8.8.8"]
    }

Result: DNS resolution now works in Docker’s namespace, but pull still fails.
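Note that the `dns` key in daemon.json configures the resolvers handed to containers; dockerd itself is a host process and resolves registry names through the host's resolver configuration. A minimal sketch for listing what that configuration contains follows. `nameservers_in` is a hypothetical helper name, and treating `/etc/resolv.conf` as the relevant file is an assumption about this system.

```shell
# Print the nameserver addresses listed in a resolv.conf-style file.
nameservers_in() {
    awk '$1 == "nameserver" { print $2 }' "$1"
}

# Example (assumes the daemon uses the host's /etc/resolv.conf):
# nameservers_in /etc/resolv.conf
```

If the addresses printed here differ from the ones that answer `nslookup` quickly, that may be worth testing separately from the container-level `dns` setting.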

Network Connectivity Verification

After DNS fix, curl from within Docker’s namespace works perfectly:

    $ sudo nsenter -t $(pidof dockerd) -n curl -4 -v https://registry-1.docker.io/v2/
    * Connected to registry-1.docker.io (34.237.110.211) port 443
    < HTTP/2 401
    {"errors":[{"code":"UNAUTHORIZED","message":"authentication required","detail":null}]}
    # Connection succeeds, returns expected 401 auth error

But Docker pull still fails:

    $ sudo docker pull hello-world
    Error response from daemon: Get "https://registry-1.docker.io/v2/": net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

Traffic Analysis

During a pull attempt, tcpdump shows that Docker makes no network connections to external registries; only local traffic (192.168.168.x) is captured. This indicates that Docker’s HTTP client fails before attempting any network I/O.
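Because tcpdump resolves a hostname in a `host` filter once, when the filter is compiled, and registry-1.docker.io resolves to multiple IPs, a filter built from explicit addresses plus DNS traffic may give a more complete picture. A minimal sketch, where `build_pull_filter` is a hypothetical helper name:

```shell
# Build a pcap filter that matches all DNS traffic plus HTTPS traffic
# to each IPv4 address passed as an argument.
build_pull_filter() {
    filter="port 53"
    for ip in "$@"; do
        filter="$filter or (tcp port 443 and host $ip)"
    done
    printf '%s\n' "$filter"
}

# Example usage (needs root; run the pull in a second terminal):
# ips=$(getent ahostsv4 registry-1.docker.io | awk '{ print $1 }' | sort -u)
# sudo tcpdump -i any -n "$(build_pull_filter $ips)"
```

Including port 53 also shows whether the daemon issues any DNS query at all during the 15 seconds before it gives up.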

Current Configuration

/etc/docker/daemon.json:

    {
      "data-root": "/mnt/.ix-apps/docker",
      "exec-opts": ["native.cgroupdriver=cgroupfs"],
      "iptables": true,
      "storage-driver": "overlay2",
      "default-address-pools": [
        {
          "base": "172.17.0.0/12",
          "size": 24
        }
      ],
      "dns": ["1.1.1.1", "8.8.8.8"]
    }
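The `default-address-pools` base above covers a large range (with a /12 mask, 172.17.0.0 falls inside 172.16.0.0/12), so it can be useful to rule out an overlap with the LAN or with a registry address. A minimal sketch in plain shell arithmetic follows; `ip_to_int` and `cidr_contains` are hypothetical helper names.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Succeed if the address in $2 lies inside the CIDR block in $1.
cidr_contains() (
    net=${1%/*}
    bits=${1#*/}
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$net") & mask )) -eq $(( $(ip_to_int "$2") & mask )) ]
)

# Host address and registry address as reported in this post.
for addr in 192.168.168.192 34.237.110.211; do
    if cidr_contains 172.17.0.0/12 "$addr"; then
        echo "$addr overlaps the pool"
    else
        echo "$addr is outside the pool"
    fi
done
```

Both addresses fall outside the pool, so a route shadowed by a Docker bridge network does not look like the cause here.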

/etc/systemd/system/docker.service.d/override.conf:

    [Unit]
    StartLimitBurst=1
    StartLimitIntervalSec=910

    [Service]
    ExecStartPost=/bin/sh -c "iptables -P FORWARD ACCEPT && ip6tables -P FORWARD ACCEPT"
    ExecStartPost=/mnt/.ix-apps/docker-disable-ipv6.sh
    TimeoutStartSec=900
    TimeoutStartFailureMode=terminate
    Restart=on-failure

Key Findings

- Docker’s Go HTTP client fails while curl succeeds from the same network namespace - This indicates the issue is not network-level but specific to Docker’s HTTP transport implementation.
- Zero network packets sent to registries - Docker times out before attempting any TCP connection, suggesting the failure occurs in Docker’s registry client initialization or HTTP transport setup.
- All registries affected equally - Not specific to Docker Hub; affects ghcr.io, gcr.io, quay.io as well.
- Standard Docker binary - `strings /usr/bin/dockerd | grep -i truenas` returns no results; this is upstream Docker, not a TrueNAS custom build.
- No proxy or environment issues - Verified no HTTP_PROXY, HTTPS_PROXY, or NO_PROXY variables in dockerd process environment.
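The proxy check in the last item can be reproduced by reading the daemon's environment from /proc. A minimal sketch, assuming a Linux /proc layout; `proxy_vars_in_environ` is a hypothetical helper name:

```shell
# Print any proxy-related variables found in a NUL-separated environ file,
# matching upper- and lower-case names. Prints nothing if none are set.
proxy_vars_in_environ() {
    tr '\0' '\n' < "$1" | grep -iE '^(https?|no|all|ftp)_proxy=' || true
}

# Example usage (run as root so the daemon's environ file is readable):
# proxy_vars_in_environ "/proc/$(pidof dockerd)/environ"
```

Matching case-insensitively matters because lower-case names such as `https_proxy` are commonly honored as well as the upper-case forms.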

Unsuccessful Mitigation Attempts

- Disabled IPv6 completely (both in daemon.json and via sysctl)
- Added explicit DNS servers to daemon.json
- Verified and corrected iptables NAT/MASQUERADE rules
- Removed all IPv6 address pools from Docker configuration
- Deleted and recreated docker0 bridge interface
- Removed `/var/lib/docker/network` state
- Tested with registry mirrors
- Verified MTU settings (1500 on all interfaces)
- Confirmed no seccomp/AppArmor/SELinux restrictions
- Verified no systemd service hardening blocking network access

Comparison with Working System

On a working system, curl from Docker’s namespace would behave identically to Docker pull. On TrueNAS 25.04.2.4:

- `nsenter` + `curl`: Works perfectly
- Docker pull: Fails consistently

This strongly suggests a bug in Docker’s registry client or HTTP transport specific to how TrueNAS 25.04.2.4 was built or configured during the k3s to Docker migration.

Hypothesis

This appears to be a regression introduced in TrueNAS 25.04.2.4’s Docker implementation. The k3s to Docker migration may have left Docker in a broken state, or there’s an incompatibility between TrueNAS’s system configuration and Docker 27.5.0’s HTTP client.

Given that the June 1st, 2025 app catalog update was expected to break automatic migration from Dragonfish, users who migrated after that date may have incomplete or corrupted Docker setups.

Questions for TrueNAS Team

- Is this a known issue in TrueNAS 25.04.2.4?
- Are there TrueNAS-specific Docker configurations or middleware components that could interfere with Docker’s HTTP client?
- Should users who migrated from 24.04.2.5 to 25.04.2.4 perform any additional migration steps?
- Is downgrading to Electric Eel 24.10.2.2 a viable workaround?

Additional Context

This issue makes TrueNAS Apps completely non-functional as no images can be pulled. The system is otherwise healthy with all storage, networking, and services working correctly.

Any guidance would be greatly appreciated. Happy to provide additional diagnostics or test potential fixes.