I was using 24.04-RC.1 and everything was working normally.

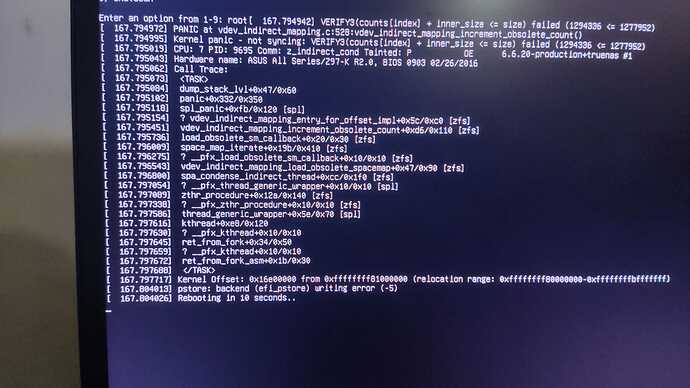

I updated to 24.04.0 and now I'm having a problem with one of my pools. Whether I restore a backup of my settings or do a clean install and simply try to import the pool, I get the same error.

Worst of all, I can't roll back to RC.1, because the kernel panic happens there too.

My configuration:

- Processor: i7-4790K
- Motherboard: Asus Z97-K R2.0
- RAM: 32 GiB HyperX
- Disks and pools:
  - 1x SSD, WD_Green_M.2_2280_240GB => boot-pool
  - 4x 1 TiB, WD10EXEX => pool-HD (RAIDZ1)
  - 2x 500 GiB, WDC WDS500G1B0C-00S6U0 => pool-ssd (Stripe)
  - 1x 4 TiB, ST4000VX007-2DT166 => pool-trash

The problem is specifically with the SSD pool (pool-ssd). The other two pools work normally as long as I don't import the SSD pool.

The kernel panic happens as soon as I try to import that pool.

Have I lost everything, or is there some way to at least attempt to recover the data from the command line?