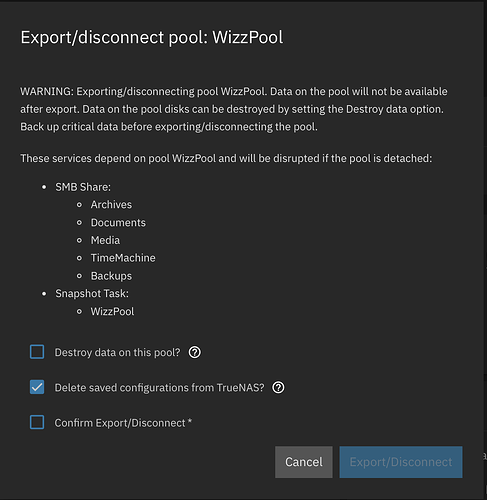

Do I want to leave this checked?

Uncheck the first two.

admin@truenas[~]$ sudo zdb -l /dev/disk/by-partuuid/e4bb08a2-9a5d-4a24-9b8b-3ab220bb0267

------------------------------------

LABEL 0

------------------------------------

version: 5000

name: 'WizzPool'

state: 1

txg: 12007450

pool_guid: 3518430912930309335

errata: 0

hostid: 1780220649

hostname: 'truenas'

top_guid: 11842154841165029957

guid: 18340154160196067035

vdev_children: 1

vdev_tree:

type: 'raidz'

id: 0

guid: 11842154841165029957

nparity: 1

metaslab_array: 133

metaslab_shift: 34

ashift: 12

asize: 11995904212992

is_log: 0

create_txg: 4

children[0]:

type: 'disk'

id: 0

guid: 18340154160196067035

path: '/dev/disk/by-partuuid/e4bb08a2-9a5d-4a24-9b8b-3ab220bb0267'

whole_disk: 0

DTL: 90826

create_txg: 4

children[1]:

type: 'disk'

id: 1

guid: 15295080939741160980

path: '/dev/disk/by-partuuid/02f5451d-1e09-400a-9e08-2ba73150618f'

whole_disk: 0

DTL: 90825

create_txg: 4

children[2]:

type: 'disk'

id: 2

guid: 1389250103858833512

path: '/dev/disk/by-partuuid/c1e813a1-9f62-4450-88e0-1a7c64def8a3'

whole_disk: 0

DTL: 90824

create_txg: 4

features_for_read:

com.delphix:hole_birth

com.delphix:embedded_data

labels = 0 1 2 3

admin@truenas[~]$ sudo zdb -l /dev/disk/by-partuuid/02f5451d-1e09-400a-9e08-2ba73150618f

------------------------------------

LABEL 0

------------------------------------

version: 5000

name: 'WizzPool'

state: 1

txg: 12007450

pool_guid: 3518430912930309335

errata: 0

hostid: 1780220649

hostname: 'truenas'

top_guid: 11842154841165029957

guid: 15295080939741160980

vdev_children: 1

vdev_tree:

type: 'raidz'

id: 0

guid: 11842154841165029957

nparity: 1

metaslab_array: 133

metaslab_shift: 34

ashift: 12

asize: 11995904212992

is_log: 0

create_txg: 4

children[0]:

type: 'disk'

id: 0

guid: 18340154160196067035

path: '/dev/disk/by-partuuid/e4bb08a2-9a5d-4a24-9b8b-3ab220bb0267'

whole_disk: 0

DTL: 90826

create_txg: 4

children[1]:

type: 'disk'

id: 1

guid: 15295080939741160980

path: '/dev/disk/by-partuuid/02f5451d-1e09-400a-9e08-2ba73150618f'

whole_disk: 0

DTL: 90825

create_txg: 4

children[2]:

type: 'disk'

id: 2

guid: 1389250103858833512

path: '/dev/disk/by-partuuid/c1e813a1-9f62-4450-88e0-1a7c64def8a3'

whole_disk: 0

DTL: 90824

create_txg: 4

features_for_read:

com.delphix:hole_birth

com.delphix:embedded_data

labels = 0 1 2 3

All the same TXG of 12007450
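To compare the label TXGs without reading through each full dump, a small filter can pull out just that value. This is a minimal sketch: the function name label_txg is made up for illustration, and it assumes only the "txg: N" line format visible in the zdb -l output above.

```shell
# label_txg: read `zdb -l` output on stdin and print the first "txg:" value.
# Hypothetical helper; it matches the field name exactly, so the
# "create_txg:" lines in the vdev tree are ignored.
label_txg() {
    awk '$1 == "txg:" { print $2; exit }'
}

# Assumed usage on a live system, with the partition paths from this thread:
# for p in /dev/disk/by-partuuid/e4bb08a2-9a5d-4a24-9b8b-3ab220bb0267 \
#          /dev/disk/by-partuuid/02f5451d-1e09-400a-9e08-2ba73150618f \
#          /dev/disk/by-partuuid/c1e813a1-9f62-4450-88e0-1a7c64def8a3; do
#     printf '%s %s\n' "$p" "$(sudo zdb -l "$p" | label_txg)"
# done
```

If every member prints the same number, the labels agree; a member printing a lower number is the one that fell behind.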

I suppose it’s had… a lot of TXGs since it was 11851573? This is all so confusing.

Go ahead and import the pool normally with the GUI and check that everything works and your data is there.

Everything seems to be functioning correctly. I can access my files. My computer found the backup files and is now running a backup.

I’m extremely relieved right now!!!

What a relief!

I wish we knew what happened and if it’s related to the others who faced something like this.

This is a good time to make a backup plan if you don’t already have one.

I really appreciate the hand holding through this! Couldn’t have done it without your help!

Minor remark: the errors after your first import could have been avoided if you had used the proper

zpool import -o altroot=/mnt ...

instead of just

zpool import ...

I’m assuming you’re referring to the dataset error… Yeah, would be nice to understand the options for the zpool command. The documentation that I could find was not very helpful.

Thank you!

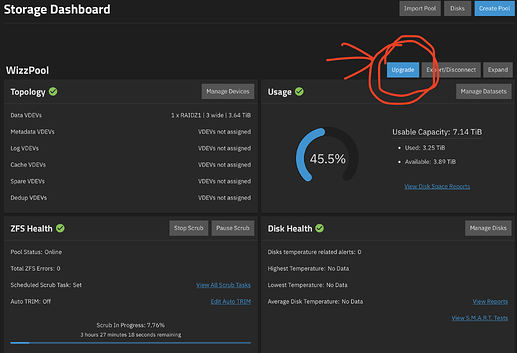

Upgrades cannot be undone. If you ever need to import your pool into an older system or previous version of ZFS, you will be unable to.

Only upgrade if you really need the new features.

It is just what the UI does when importing a pool. You should do the same when using the command line.

You might have noticed that in TrueNAS all pools are mounted at /mnt/<poolname> instead of /<poolname>. That’s accomplished with that altroot option.

When you just did an import without properly specifying altroot the system tried to mount your pool at /WizzPool but failed because / is read-only:

root@truenas:~# mkdir /WizzPool

mkdir: cannot create directory ‘/WizzPool’: Read-only file system

HTH,

Patrick
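To check after a command-line import whether the datasets ended up where TrueNAS expects them, something like the following could be used. It is a minimal sketch: the helper name under_altroot is hypothetical, and the commented commands assume the pool name WizzPool from this thread and the standard zpool get and zfs list options.

```shell
# under_altroot: succeed if mount path $2 lives below altroot $1.
# Hypothetical helper for illustration only.
under_altroot() {
    case "$2" in
        "$1"/*) return 0 ;;
        *)      return 1 ;;
    esac
}

# Assumed usage on a live system:
# zpool get -H -o value altroot WizzPool      # should print /mnt
# zfs list -H -o mountpoint -r WizzPool | while read -r mp; do
#     under_altroot /mnt "$mp" || echo "not under /mnt: $mp"
# done
```

A pool imported without the altroot would report mountpoints such as /WizzPool, which the check flags.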

Gotcha. Thanks for the lesson!

OK, I will hold off then. Thanks again!

Never. I had a membership to the beta test club years ago and realized it was a horrible club to be a part of if you’re relying on anything.

Great work on resolving this.

It seems like the problem is that on one upgrade, one of the HDDs was removed from the pool, fell behind in TXGs and was no longer able to be imported into the pool.

So the question is when this happened (on which upgrade?) and then why.

Issues of this nature are hard to diagnose because of the urgency of trying to recover data.

Superficially, a similar pattern seems to show up across four different cases.

I’m not trying to sound ungrateful or rude to anyone in particular, and I can understand how they must be feeling in such situations, but if they reuse or format the drives, it’s impossible to diagnose.

I wonder if a dd of the first 32MB of each drive can provide some hints? I’m not sure if anyone has yet uploaded an attachment to @HoneyBadger regarding this.

I think you’re right, though. “Something” caused one of the drives to stall long enough that its TXG lagged behind the rest.

It’s not really ZFS that requires this.

TrueNAS uses an “altroot” of /mnt for all storage pools. This is not a ZFS default.

@pmh was pointing out that if you use the command line but don’t specify an “altroot” of /mnt, it will confuse the middleware and any configured shares or apps that expect your dataset mountpoints to start with /mnt.

Using the GUI does this automatically. That’s why you should always stick to the GUI for pool and dataset operations, unless you need to bypass the GUI or middleware for troubleshooting.

Nothing yet, likely for the same reason stated of

To really solve this is going to require the ability to reproduce on-demand.

With that said, if you happen to be suffering from this, you can run dd if=/dev/sda1 of=sda1.32m bs=1M count=32 to grab the first 32MB of device sda1 (assuming that’s your ZFS partition, as identified by lsblk) and then copy the resulting files somewhere.
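For a pool with several members, the same dd call can be wrapped so it is easy to repeat per partition. This is a rough sketch, not a tested recovery procedure: the function name grab_head and the output file names are made up, and the device names in the commented loop are placeholders to be replaced with whatever lsblk shows on your system.

```shell
# grab_head: copy the first 32 MiB of SRC ($1) into DEST ($2),
# using the same dd parameters as the command above.
# Hypothetical helper; reading a real partition requires root.
grab_head() {
    dd if="$1" of="$2" bs=1M count=32
}

# Assumed usage from a root shell (sudo cannot call a shell function);
# sda1, sdb1 and sdc1 are placeholder device names:
# for dev in sda1 sdb1 sdc1; do
#     grab_head "/dev/$dev" "$dev.32m"
# done
```

dd only reads from the source device here, so this does not modify the pool members.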

Got it. Probably looking in the wrong spot for the correct documentation. My bad!