Hi all. Just want to run my planned hardware setup by you to make sure I’m not making any obvious mistakes. I’m a complete rookie at TrueNAS and Linux in general (though I’ve been reading the forum archives for the past month), so I’d appreciate any feedback.

Disclaimer: a lot of the hardware choices were dictated by what I already have, so some parts may not be ideal and others may be overkill.

Use case: general media server with Jellyfin + seedbox.

Hardware:

CPU: AMD Ryzen 5 5600G (with be quiet! Pure Rock 2 cooler)

MB: Gigabyte B550M-K (2x M.2, 4x SATA)

RAM: GeiL 16GB DDR4-3200 x2 (32GB total)

GPU: ASRock Arc A310 LP

HBA: LSI 9211-8i

PSU: Corsair RM750x

Drives:

Boot: ARDOR Gaming Ally 256GB (NVMe) connected to M2A

Applications pool: Samsung SSD 980 1TB (NVMe) connected to M2B

L2ARC: Samsung 860 Evo 512GB (M.2-SATA through adapter box) connected to SATA0

HDD: 10x WD HC520 12TB (SATA): 8 of them connected to HBA card through 2 SFF8087-4xSATA cables, the other 2 to SATA1 and SATA2 on the MB.

Expansion slots:

PCIe x16: LSI 9211-8i HBA

PCIe x1: ASRock Arc A310 LP through x1-to-x16 adapter

Some notes:

-

I initially planned to just use the 5600G’s integrated graphics as the Jellyfin transcoding device, but I’ve read really bad reviews on AMD graphics for that purpose in general, so instead I’m going with an Arc card (it’s more modern and future-proof anyway) and will probably just disable the iGPU so it doesn’t cause conflicts. Since the motherboard only has one x16 slot and it’s taken up by the HBA, I bought a simple passive adapter on Aliexpress and plan on plugging the GPU into it. Should be fine…?

-

the L2ARC drive is chosen because it’s an old, worn-down SATA M.2 SSD that I otherwise have no use for, and I don’t want to trust with anything more important than a cache that won’t disrupt anything if it dies. Similarly, the app pool drive is so large solely because I’m repurposing existing hardware. I’d go without an L2ARC at all, but if this is to be a seedbox, I’d love if it soaks up some of the random reads torrent seeding does and reduce HDD head thrashing.

-

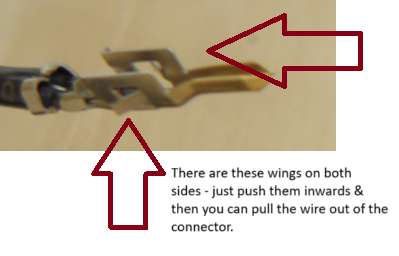

The HDDs unfortunately have the Power Disable pin active, so I’ll have to power them from the Molex leads on the power supply through Molex-2x SATA adapters. Hopefully won’t be an issue. I know “Molex to SATA, lose all your data”, but I’ve been running two of those drives in a PC for many years and have not had issues.

-

I’ve read somewhere that LSI cards don’t behave particularly well on Gigabyte motherboards. I already have this board so I didn’t explicitly choose it, but if anyone wants to substantiate this claim I’d love to know.

-

Case compatibiltity isn’t an issue, since I’m DIYing a piece of plywood and some metal brackets to mount everything on, and it’ll sit in a ventilated closet away from the living spaces.

Software: I’m planning to run TrueNAS SCALE 24.04 (because it appears to be the more actively developed version and the one the developers prefer) with the Jellyfin and qBittorrent apps from the official catalog running 24/7. The idea is 2x 5-wide RAIDZ1 vdevs in one pool, with one huge SMB share that all the apps (and Windows clients) interact with.

I also need to migrate 40TB of existing data from my current 5-drive DAS, and ideally I want to do the following: create a pool with a single 5-drive RAIDZ1 vdev, transfer all the data to it, delete the DAS array to free up its 5 drives, then add those 5 to the existing pool as a second 5-drive RAIDZ1 vdev, doubling the size of the pool and the big SMB share. Will this work as I imagine it? Will TrueNAS then balance the load across both vdevs to account for the newly added drives?
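For reference, here’s my back-of-the-envelope check of whether the 40TB even fits on the first vdev, as a rough Python sketch. It assumes that “40TB” means decimal terabytes and that a RAIDZ1 vdev loses exactly one drive’s worth of space to parity; the function name is just something I made up, and the math ignores ZFS metadata, padding and reserved space, so real usable capacity will be somewhat lower.

```python
# Rough capacity check for the migration plan (a sketch, not exact ZFS accounting).
TB = 10**12   # drive vendors quote decimal terabytes
TIB = 2**40   # binary tebibytes, for comparison

def raidz1_usable_bytes(drives, drive_tb):
    """Approximate usable bytes of one RAIDZ1 vdev.

    Assumption: one drive's worth of space goes to parity and nothing else
    is lost. Real pools lose a bit more to metadata, padding and reserved space.
    """
    return (drives - 1) * drive_tb * TB

one_vdev = raidz1_usable_bytes(5, 12)   # first 5-wide RAIDZ1 of 12TB drives
two_vdevs = 2 * one_vdev                # after adding the second vdev
data = 40 * TB                          # assumption: "40TB" is decimal TB

print(f"one 5-wide RAIDZ1: {one_vdev / TB:.0f} TB = {one_vdev / TIB:.2f} TiB")
print(f"two 5-wide RAIDZ1: {two_vdevs / TB:.0f} TB = {two_vdevs / TIB:.2f} TiB")
print(f"fill after migrating onto one vdev: {data / one_vdev:.0%}")
print(f"fill after adding the second vdev:  {data / two_vdevs:.0%}")
```

By this estimate the data fits on the first vdev, but only just: it would sit at roughly 83% full until the second vdev is added, and fuller still if the 40TB figure is actually in TiB.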

Again, if I’m making any incorrect assumptions or mistakes here, I’d love for people to correct me! Thanks in advance!