BASH sucks to write a program in! But it has its uses.

It is 9000+ lines now, but it started out significantly smaller. Why so much larger? Features, and almost half of it is the -config routine. Then you have to account for every possible non-standard message or report you might get. It is not fun getting a problem report when you thought you had covered everything, and it still happens after all this time. Part of those 9000 lines is also a built-in simulator: I can take the -dump data and run the script against it to see, for the most part, what the end user is seeing. Its sole purpose is troubleshooting problems.

When you write a program just for yourself, it is easy and the code can stay very small. When you give it to others and problems show up, the fixes add a lot.

The TrueNAS API is nice, and I have been using it in a small script (1229 lines right now, and I am looking to make it smaller). The next version of Multi-Report will use the API almost exclusively and, hopefully, will use smartctl only to issue test commands. That version is in testing right now; once everything looks safe, it will be released.

The purpose of Multi-Report (originally called FreeNAS Report, or something like that, 10+ years ago) was to catch drives that were not being tested. At the time, FreeNAS 8.x and 9.x had a habit of dropping a replaced drive (call it ada0) off the SMART testing list. Why? Who knows. But you could go months without even realizing it. So a script was born, for my personal use. I offered it to others and it became sort of popular.
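At its core, that check is a set difference: any drive present in the system but missing from the SMART test schedule should be reported. Here is a minimal Bash sketch of the idea, not Multi-Report's actual code. The function name `find_untested_drives` is hypothetical, and it assumes the caller already has both drive lists as space-separated strings (on a real system they might be built from `smartctl --scan` output or the TrueNAS API, which is not shown here).

```bash
#!/usr/bin/env bash
# Hypothetical sketch: report drives that are present but not scheduled
# for SMART testing. Both lists are supplied by the caller.

# find_untested_drives PRESENT SCHEDULED
#   PRESENT   - space-separated drives in the system, e.g. "ada0 ada1"
#   SCHEDULED - space-separated drives that have a SMART test scheduled
# Prints each present-but-unscheduled drive, one per line.
find_untested_drives() {
    local present="$1" drive
    # Normalize the scheduled list to " ada0 ada1 " so every name is
    # surrounded by spaces; this keeps "ada1" from matching inside "ada10".
    local scheduled
    scheduled=" $(printf '%s ' $2)"
    for drive in $present; do
        case "$scheduled" in
            *" $drive "*) ;;              # scheduled, nothing to report
            *) printf '%s\n' "$drive" ;;  # fell off the testing list
        esac
    done
}
```

For example, `find_untested_drives "ada0 ada1 ada2 ada10" "ada0 ada2 ada10"` prints `ada1`. Padding each name with spaces is a simple way to get whole-word matching without calling out to `grep` or `comm`.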

The script does a lot more these days, including running SMART self-tests on NVMe drives. TrueNAS will finally be supporting NVMe drive testing, but until that is in all products, I will keep supporting it in the script. Once it is no longer needed, I will disable it and eventually remove it.

And Multi-Report does that as well, in the form of a comma-separated values (CSV) file, AKA a spreadsheet.
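Logging to CSV mostly comes down to appending one row per drive per run. A minimal sketch, assuming a hypothetical column layout (`date,drive,temp_c,power_on_hours`) that is not Multi-Report's real spreadsheet format; `append_csv_row` is a made-up name for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: append one CSV row per drive per run.
# The column layout is an assumption, not Multi-Report's actual format.
# Values are not quoted or escaped, so they must not contain commas.

# append_csv_row FILE DATE DRIVE TEMP_C POWER_ON_HOURS
append_csv_row() {
    local file="$1"
    shift
    # Write the header once, when the file is missing or empty.
    if [ ! -s "$file" ]; then
        printf 'date,drive,temp_c,power_on_hours\n' > "$file"
    fi
    # "$*" joins the remaining arguments using the first character of IFS.
    local IFS=,
    printf '%s\n' "$*" >> "$file"
}
```

Because rows are only ever appended, the file keeps growing run after run and can be opened directly in a spreadsheet program.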

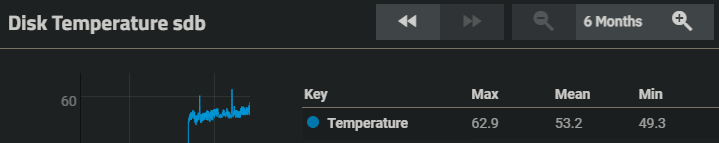

Hmm, other things it can do (by request): it can monitor drive temperatures and send you an email when one passes a threshold. It can log data each time you run it without sending out a full report. It does a lot that I think most people would not use, but some people asked for it, so I rolled it in.
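The temperature alert boils down to comparing each drive's reading against a limit. A minimal sketch, assuming the temperature has already been read as a whole number of degrees Celsius; `temp_alert` is hypothetical, and the email step is left out because it depends on how mail is set up on the system.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of a drive temperature check. It only prints the
# alert text; sending that text as an email is left to the caller.

# temp_alert DRIVE TEMP_C LIMIT_C
# Prints an alert line when TEMP_C is above LIMIT_C, otherwise nothing.
temp_alert() {
    local drive="$1" temp="$2" limit="$3"
    if [ "$temp" -gt "$limit" ]; then
        printf 'ALERT: %s is at %s C (limit %s C)\n' "$drive" "$temp" "$limit"
    fi
}
```

`temp_alert ada0 50 45` prints `ALERT: ada0 is at 50 C (limit 45 C)`, while a reading at or below the limit prints nothing, so a caller can send an email only when the output is non-empty.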

Right out of the box, Multi-Report should run with only one setup change: adding the email address to send the report to. The defaults should be fine for most people. Oh yeah, a big one: by default, the script attaches a copy of your TrueNAS config file on each Monday it is run. This is a big CYA for many people.
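A "once a week on Monday" rule can be expressed with the ISO weekday from `date +%u`, where 1 is Monday and 7 is Sunday. A minimal sketch, not Multi-Report's actual code; `should_attach_config` is a hypothetical name, and the optional argument exists only so the check can be tested on any day.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: decide whether this run should attach the config
# backup. date +%u prints the ISO weekday (1 = Monday ... 7 = Sunday).

# should_attach_config [WEEKDAY]
# WEEKDAY defaults to today's ISO weekday.
# Returns 0 (true) on Monday and 1 (false) on any other day.
should_attach_config() {
    local weekday="${1:-$(date +%u)}"
    [ "$weekday" -eq 1 ]
}

if should_attach_config; then
    echo "Monday: attach the config backup to this report"
fi
```

Keying on the weekday rather than tracking the last attachment date keeps the logic stateless, at the cost of skipping the backup in any week where the script does not run on a Monday.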

Feel free to examine the script; hopefully something in it will help you out. FreeNAS-Report is now maintained by @dak180, who does a good job of keeping it up to date as issues come up. His version is significantly smaller.

If you want to learn BASH or the TrueNAS API, finding a project you are passionate about is a good way to do it. And if you find an issue with Multi-Report, please toss me a message.