Hi,

I would very much like to use Fangtooth on my NAS, as I hope for better support for my Arc A310 and maybe even the possibility of passing my Ryzen’s iGPU to VMs.

As I don’t want to be surprised, I created a virtual TrueNAS 24.10 installation on my Windows PC under Hyper-V with nested virtualization and installed a Windows 11 VM there (setting the machine type to q35 and enabling TPM).

I used virtio devices as that’s what my actual Windows VM on my actual NAS uses and installed all the drivers in the VM.

After this I updated the virtual TrueNAS to 25.04 and recreated the VM as mentioned here.

I also took a look at this guide, but as it states:

Before switching to Fangtooth, download stable Virtio drivers ISO and install into your VM by running virtio-win-guest-tools.exe .

Then upgrade to Fangtooth.

If you want to use NVMe for the zvol, thats all. It will work if your Windows supports it (Win10 and Win11).

I was confident that I would not need to do anything in addition to the official guide.

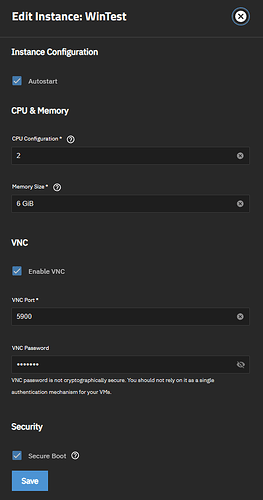

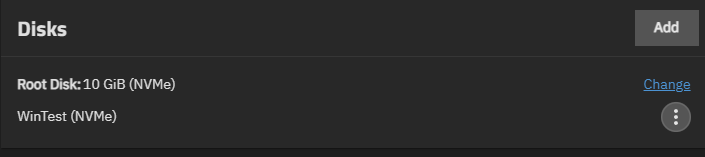

Here is my VM configuration:

Now to my problem: after I power on, the VM starts, and if I connect a VNC client fast enough I also see Windows beginning to boot (spinning circle…).

After a few seconds it hangs, though, and the VM powers off.

I could not find any logs about this in /var/log/incus.

Any ideas how to get this working?

If I am not mistaken, in 24.10 there is no q35 or TPM setting, so what exactly do you mean?

Seeing your screenshot, maybe try disabling Secure Boot?


The common workaround to get Windows 11 working under TrueNAS >=24.10 was to set the VM to q35 via the CLI, as described here.

I could of course also modify my Windows 11 installation to accept a machine without TPM and with Secure Boot disabled, but that wouldn’t be a supported Windows installation.

Disabling secure boot did actually allow an existing Windows 11 installation to boot though, so thanks for the thought!

Now the question is: why was this necessary? I would like to enable secure boot if possible.


Well, new VMs will work with Secure Boot. Only the migrated ones seem to have a problem. I will add this to the guide.

But I don’t know why. Maybe Secure Boot is actually trying to protect you?

This is just a guess, but Secure Boot cryptographically verifies the OS bootloader, and if it’s unsigned or the check otherwise fails, it refuses to boot. So maybe the migration somehow broke this verification.

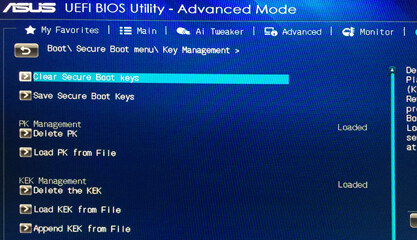

I know that on real motherboards there are Secure Boot keys like this:

Maybe TianoCore (the UEFI firmware of the VMs) has something similar which can be reset? You can enter the UEFI setup by pressing Esc during boot if you want to take a look.

I know (superficially) how Secure Boot works.

The necessary keys should be deployed by default. Maybe they’re missing in TrueNAS’ Incus implementation; that would make the Secure Boot feature basically useless, though.

I just updated my actual NAS, and the VM worked great (without Secure Boot for now).

I then shut it down, as I wanted to add the iGPU and test whether that would work.

Now I can’t see it in “Instances” anymore, I am getting this error:

3 validation errors for VirtInstanceQueryResult result.list[VirtInstanceQueryResultItem].0.status Input should be 'RUNNING', 'STOPPED' or 'UNKNOWN' [type=literal_error, input_value='ERROR', input_type=str]

It seems that Incus thought the VM was in an error state and the TrueNAS UI cannot handle that.

This seems like a bug.
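The message reads like a strict literal check: the result schema accepts only three status strings, and Incus reported a fourth. Here is a minimal Python sketch of that mismatch. It is not TrueNAS’s actual code, and the names `Status`, `validate_status` and `tolerant_status` are made up for illustration:

```python
from typing import Literal, get_args

# The three values named in the error message above.
Status = Literal["RUNNING", "STOPPED", "UNKNOWN"]
ALLOWED_STATUSES = get_args(Status)


def validate_status(value: str) -> str:
    """Strict check: reject anything outside the allowed literals."""
    if value not in ALLOWED_STATUSES:
        raise ValueError(
            f"Input should be 'RUNNING', 'STOPPED' or 'UNKNOWN', got {value!r}"
        )
    return value


def tolerant_status(value: str) -> str:
    """Lenient alternative: fold unexpected states into 'UNKNOWN'."""
    return value if value in ALLOWED_STATUSES else "UNKNOWN"
```

With the strict variant, a single instance reporting `ERROR` makes the whole query fail, which would match the instance disappearing from the list. The lenient variant is just one possible way to keep the list usable.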

A reboot of the server did not help; I had to use the Incus CLI:

incus stop VMNAME -f worked.
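To spot such instances from the shell, the JSON output of `incus list --format json` could be filtered. This is a sketch only: it assumes each entry has `name` and `status` fields and that a broken instance reports `Error`, and the helper name `instances_in_error` is hypothetical.

```python
import json


def instances_in_error(list_json: str) -> list[str]:
    """Return names of instances whose status is 'Error' (case-insensitive)."""
    instances = json.loads(list_json)
    return [
        inst["name"]
        for inst in instances
        if str(inst.get("status", "")).upper() == "ERROR"
    ]


# Made-up sample shaped like the assumed output, not a real log.
sample = (
    '[{"name": "win11vm", "status": "Error"},'
    ' {"name": "other", "status": "Running"}]'
)
print(instances_in_error(sample))
```

Each name it returns could then be passed to `incus stop NAME -f`.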


That’s great to see!

As I would’ve needed it after the very first shutdown of my very first VM, I would honestly have expected this to be noticed by 25.04 BETA 1 at the latest, though.

Probably bad luck on my end…

After fooling around in my Windows VM I gotta say it feels like performance has decreased a lot from 24.10.

I assigned the VM 3 cores, which are utilized 100% all the time according to Task Manager.

The metrics in the TrueNAS GUI do not work, but incus top reports CPU times of over 250. I have no idea how to interpret this value, though, as I haven’t worked with Incus yet.

I created a new Windows VM from scratch just to be sure and it now shows >350 CPU time in incus top. The actual CPU usage according to htop is about 20% (of one core) combined at that moment.
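If the `incus top` figure is cumulative CPU time in seconds rather than a percentage (an assumption, not something confirmed in this thread), then a value like 250 or 350 only says how much CPU the VM has consumed since it started. Utilization would come from the difference between two readings. Here is a sketch with made-up numbers and a hypothetical helper name:

```python
def cpu_utilization(cpu_seconds_start: float, cpu_seconds_end: float,
                    wall_seconds: float, cores: int = 1) -> float:
    """Average utilization (0.0 to 1.0) of `cores` cores between two samples."""
    if wall_seconds <= 0 or cores <= 0:
        raise ValueError("wall_seconds and cores must be positive")
    return (cpu_seconds_end - cpu_seconds_start) / (wall_seconds * cores)


# Illustrative readings taken 60 s apart for a 3-core VM (numbers are made up).
print(f"{cpu_utilization(250.0, 262.0, 60.0, cores=3):.1%}")
```

Under that assumption, a large and growing counter is compatible with the low usage htop shows on the host.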

After being turned off for the night, my Windows VM didn’t boot up again: it just hung at boot and then turned off, the same as if I had enabled Secure Boot (which I had not). I have rolled back to 24.10.2.1 for now. I had not expected just how experimental Incus still is in TrueNAS.

It’s still a shame that TrueNAS Apps on 24.10 won’t get updates after June, though. Maybe by then 25.04.1 will have fixed some issues.

Not sure if you tested this, but are you able to mount and boot a Windows ISO in 25.04? I have been unsuccessful, as it always throws error (0) when uploading.

That worked flawlessly for me.

Yeah, I see it works for some but not for others. It does work with small ISOs of around 2GB but crashes with larger ones of around 5GB, and I can’t figure out why.

Does it always crash after uploading a certain amount? 4GB for example?

It always crashes on Wi-Fi after about 2GB, but I’ll have more time tomorrow to test more specifically. I did try an Ethernet cable tonight, and the 5GB ISO got to 50% instead of the usual 10-15%. I’ve also been asking on Discord whether other members are experiencing the same issue, and it doesn’t seem to be related to ECC vs. non-ECC or Wi-Fi vs. Ethernet. I’ve even matched the MTU across all connections, and there is still no difference.

Looks like we can attach the ISO from local storage instead of using the upload:

incus config device add win11vm install disk source=/home/myusername/Downloads/Win11_23H2_English_x64v2.incus.iso boot.priority=10


A matching MTU should always be set, of course.