Hey all, first time here!

Let me start by saying that although I have plenty of experience with virtualization and Windows Server, I have zero experience with Linux, TrueNAS and ZFS, so… bear with me, and please point me in the right direction if needed.

I need to design a backup solution for my company, and the plan is to build it around Proxmox + TrueNAS, since I’ve ditched VMware thanks to Broadcom, but that’s another story.

So even though this is a work project and I’d like to purchase a complete system, I do have some room to DIY some of the pieces for better performance and reliability, since I will be the one supporting the system.

The concept is this: I have two Windows file servers, one of about 20 TB and the other about 10 TB. I need to back them up and then replicate the backups to another system offsite, which will probably be a twin of the primary one.

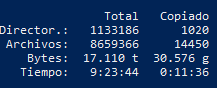

The current plan is to robocopy/sync the file structure from the file server to the TrueNAS box daily (a pull would be better). That means comparing millions of files every day to find the few that changed, copying just those, snapshotting them, and transferring the snapshots offsite over the WAN. The daily change is about 20-30 GB, but finding it means checking 7.5 million files. It also means only “one” user will be accessing the server, since the production file server remains a separate machine.
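A rough sanity check of the offsite side, under my own assumptions (decimal GB, no compression or dedup, and either an 8-hour overnight window or a full 24 hours, since the WAN link isn’t decided yet):

```python
def required_mbit_per_s(daily_change_gb: float, window_hours: float) -> float:
    """Sustained rate (Mbit/s) needed to ship daily_change_gb within window_hours.

    Assumes decimal units (1 GB = 10**9 bytes) and ignores protocol
    overhead, compression and deduplication.
    """
    bits = daily_change_gb * 10**9 * 8
    return bits / (window_hours * 3600) / 10**6


for change_gb in (20, 30):
    for window_h in (8, 24):
        rate = required_mbit_per_s(change_gb, window_h)
        print(f"{change_gb} GB in {window_h} h -> {rate:.1f} Mbit/s")
# prints 5.6, 1.9, 8.3 and 2.8 Mbit/s respectively
```

So the daily delta itself should fit on a fairly modest WAN link; the heavy part of the job is the scan, not the transfer.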

Now, I’d like some ideas hardware-wise. From what I’ve found after hours of reading the forums, some kind of metadata index would be worthwhile, since the transfers are just small office-type files.
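To show why metadata seems to matter more than raw throughput here, below is a minimal Python sketch of the size + timestamp comparison a robocopy-style sync performs. It is not robocopy’s or TrueNAS’s actual implementation; `files_to_copy` is my own hypothetical name, and it assumes both the source share and the backup target are reachable as local paths.

```python
import os
import tempfile
from pathlib import Path


def files_to_copy(src_root: str, dst_root: str) -> list[str]:
    """Relative paths missing from dst_root or differing in size/mtime.

    Every source file costs one stat() on the source AND one on the
    destination, changed or not: 7.5 million files means roughly
    15 million metadata lookups per run, even if little actually changed.
    """
    pending: list[str] = []
    for dirpath, _dirnames, filenames in os.walk(src_root):
        for name in filenames:
            src = os.path.join(dirpath, name)
            rel = os.path.relpath(src, src_root)
            dst = os.path.join(dst_root, rel)
            s = os.stat(src)
            try:
                d = os.stat(dst)
            except FileNotFoundError:
                pending.append(rel)  # new file, not on the backup yet
                continue
            # Size or modification time differs -> treat as changed
            if s.st_size != d.st_size or s.st_mtime_ns != d.st_mtime_ns:
                pending.append(rel)
    return sorted(pending)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as src, tempfile.TemporaryDirectory() as dst:
        for root in (src, dst):
            Path(root, "a.docx").write_text("same")
        # Copy the source mtime so a.docx counts as unchanged
        st = os.stat(Path(src, "a.docx"))
        os.utime(Path(dst, "a.docx"), ns=(st.st_atime_ns, st.st_mtime_ns))
        Path(src, "b.xlsx").write_text("only on the file server")
        print(files_to_copy(src, dst))  # ['b.xlsx']
```

The destination-side `stat()` calls are the ones that would land on the TrueNAS pool every night.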

The base hardware looks like this (nothing purchased yet):

- A Dell R740xd with an HBA330
- 128 GB RAM (at least). Can I go with less? Do I need more?
- 2 mirrored SATA SSDs for the Proxmox boot drive
- 10 x 8 TB WD Red Pro drives in RAIDZ2, looking at 60-80 TB of usable capacity
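A quick check of what 10 x 8 TB in RAIDZ2 gives, using my own simplifications: only parity is subtracted, so ZFS padding, metadata and slop space are ignored, and the 80% fill level is a commonly quoted guideline rather than a hard limit.

```python
TB = 10**12   # drive vendors quote decimal terabytes
TIB = 2**40   # ZFS/TrueNAS report binary units


def raidz_data_bytes(drives: int, drive_tb: float, parity: int) -> float:
    """Raw space left for data after parity, before any ZFS overhead."""
    return (drives - parity) * drive_tb * TB


data = raidz_data_bytes(drives=10, drive_tb=8, parity=2)
print(f"{data / TB:.0f} TB = {data / TIB:.1f} TiB before ZFS overhead")
# Assumed guideline: keep roughly 20% of the pool free
print(f"about {data * 0.8 / TIB:.1f} TiB at 80% fill")
```

That lands at about 64 TB (roughly 58 TiB) before overhead, so the upper end of the 60-80 TB range would need more or larger drives.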

And for the caching part… I’m open to suggestions. Which vdevs do you think would benefit me the most? Metadata? L2ARC? What configuration would you suggest?

I was thinking of 2 mirrored SATA SSDs for metadata and 2 mirrored NVMe drives for L2ARC, but it could be the other way around as well. What are the speed and endurance requirements for these?

The connection to the main servers is 10 Gbit SFP+. I could go 25 Gbit, but I very much doubt it would help with moving millions of files, since SMB adds so much per-file overhead.
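Rough numbers behind that hunch: at line rate, the daily 30 GB delta needs well under a minute on either link, so 25 Gbit would save seconds per day. The `efficiency` parameter is a hypothetical knob for SMB and small-file overhead, not a measured value.

```python
def transfer_seconds(gigabytes: float, link_gbit: float, efficiency: float = 1.0) -> float:
    """Seconds to move `gigabytes` (decimal GB) over a `link_gbit` Gbit/s link.

    `efficiency` in (0, 1] scales the line rate to account for protocol
    and small-file overhead; 1.0 means ideal line rate.
    """
    return gigabytes * 8 / (link_gbit * efficiency)


for link in (10, 25):
    print(f"30 GB over {link} Gbit/s: {transfer_seconds(30, link):.1f} s at line rate")
# prints 24.0 s and 9.6 s
```

Even with a pessimistic efficiency, the transfer stays small next to walking 7.5 million files.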

The server will be connected to a UPS.

TL;DR: I need help designing a backup system for a couple of big file servers, around 30 TB in total (millions of files). I’d appreciate some insight into which vdevs and drives would give me the best configuration.

Phew, that was a long introduction. Thank you!