So I have done a fair amount with TrueNAS and ZFS (we are a partner).

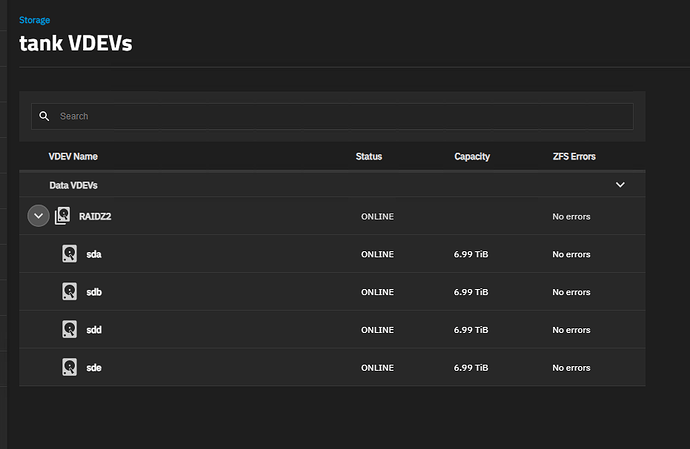

This, however, is not an enterprise system: it's a Cisco C240 M5 server with a SAS backplane and, of course, direct-attached HBAs, both internal and external. The drives are NetApp 7.68TB SSDs that have been reformatted to 512-byte sectors, etc. The external shelves worked great: after the reformat I was able to build a pool of 8x RAIDZ2 vdevs from 3.84TB drives, with 6 drives in each vdev.
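
As a rough check on that external-shelf layout: eight RAIDZ2 vdevs of six drives each is 48 drives, and each vdev gives up two drives to parity, leaving 8 × 4 = 32 drives' worth of data capacity. The sketch below only does that arithmetic. The function name is hypothetical, and the result is a back-of-the-envelope raw figure that ignores RAIDZ allocation padding, metadata, slop space and the TB vs TiB difference.

```python
def raidz_data_capacity_tb(vdevs, width, parity, drive_tb):
    """Hypothetical helper: raw data capacity of a pool of identical RAIDZ vdevs.

    Each vdev stores data on (width - parity) drives; the parity drives add
    redundancy only. ZFS overhead (padding, metadata, slop) is NOT subtracted.
    """
    if parity >= width:
        raise ValueError("parity must be smaller than the vdev width")
    return vdevs * (width - parity) * drive_tb


# Layout described above: eight 6-wide RAIDZ2 vdevs of 3.84TB drives.
total_drives = 8 * 6
capacity = raidz_data_capacity_tb(vdevs=8, width=6, parity=2, drive_tb=3.84)
print(f"{total_drives} drives, ~{capacity:.2f} TB of data capacity before overhead")
```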

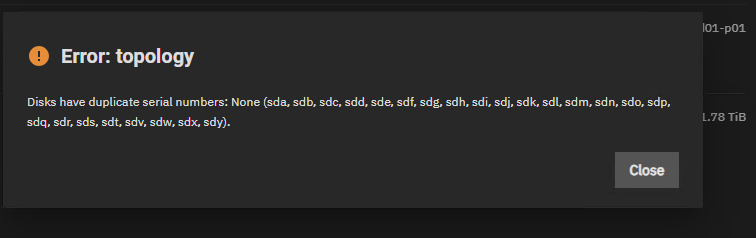

These 7.68TB drives are proving slightly more difficult. The GUI tells me these drives are different, but pool creation tells me they are the same. Is there a log I can check to get a little more detail?

But they show up as distinct in both the GUI and the CLI?

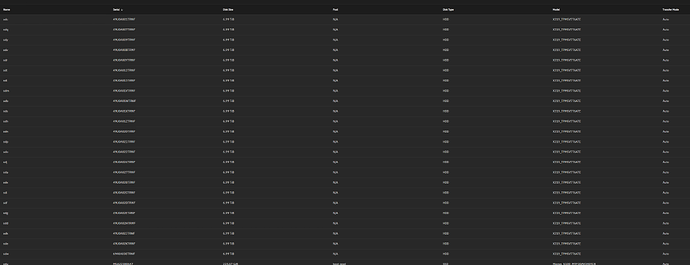

root@tn01-ssd01[/home/truenas_admin]# midclt call disk.query | jq '.[] | {name: .name, serial: .serial, lunid: .lunid}'

{"name": "sdu", "serial": "MSA22380647", "lunid": "500a07511eaaf785"}
{"name": "sdk", "serial": "49U0A02JTRWF", "lunid": "58ce38ee20917878"}
{"name": "sdd", "serial": "49U0A02HTRWF", "lunid": "58ce38ee20917874"}
{"name": "sde", "serial": "49U0A02KTRWF", "lunid": "58ce38ee2091787c"}
{"name": "sdh", "serial": "49U0A01ZTRWF", "lunid": "58ce38ee2091782c"}
{"name": "sdp", "serial": "49U0A021TRWF", "lunid": "58ce38ee20917834"}
{"name": "sdi", "serial": "49U0A02CTRWF", "lunid": "58ce38ee20917860"}
{"name": "sdm", "serial": "49U0A01VTRWF", "lunid": "58ce38ee2091781c"}
{"name": "sdt", "serial": "49U0A012TRWF", "lunid": "58ce38ee209177b0"}
{"name": "sdo", "serial": "49U0A022TRWF", "lunid": "58ce38ee20917838"}
{"name": "sdq", "serial": "49U0A007TRWF", "lunid": "58ce38ee209172ec"}
{"name": "sds", "serial": "49U0A01XTRWF", "lunid": "58ce38ee20917824"}
{"name": "sdn", "serial": "49U0A020TRWF", "lunid": "58ce38ee20917830"}
{"name": "sda", "serial": "49U0A027TRWF", "lunid": "58ce38ee2091784c"}
{"name": "sdy", "serial": "49U0A009TRWF", "lunid": "58ce38ee209172f4"}
{"name": "sdw", "serial": "6940A008TRWF", "lunid": "58ce38ee20986824"}
{"name": "sdv", "serial": "49U0A00BTRWF", "lunid": "58ce38ee209172fc"}
{"name": "sdx", "serial": "49U0A028TRWF", "lunid": "58ce38ee20917850"}
{"name": "sdl", "serial": "49U0A013TRWF", "lunid": "58ce38ee209177b4"}
{"name": "sdb", "serial": "49U0A01WTRWF", "lunid": "58ce38ee20917820"}
{"name": "sdc", "serial": "49U0A001TRWF", "lunid": "58ce38ee209172d4"}
{"name": "sdf", "serial": "49U0A02DTRWF", "lunid": "58ce38ee20917864"}
{"name": "sdg", "serial": "49U0A02ETRWF", "lunid": "58ce38ee20917868"}
{"name": "sdr", "serial": "49U0A00YTRWF", "lunid": "58ce38ee209177a0"}
{"name": "sdj", "serial": "49U0A024TRWF", "lunid": "58ce38ee20917840"}
{"name": "sdax", "serial": "S3SGNX0M413850", "lunid": "5002538b4944fb00"}
{"name": "sday", "serial": "S3SGNX0M413849", "lunid": "5002538b4944faf0"}
{"name": "sdbh", "serial": "S3SGNX0M413972", "lunid": "5002538b494502a0"}
{"name": "sdbi", "serial": "S3SGNX0M413969", "lunid": "5002538b49450270"}
{"name": "sdbj", "serial": "S3SGNX0M414293", "lunid": "5002538b494516b0"}
{"name": "sdbk", "serial": "S3SGNX0M414993", "lunid": "5002538b49454270"}
{"name": "sdbl", "serial": "S3SGNX0M413847", "lunid": "5002538b4944fad0"}
{"name": "sdbm", "serial": "S3SGNX0M413911", "lunid": "5002538b4944fed0"}
{"name": "sdbn", "serial": "S3SGNX0M414257", "lunid": "5002538b49451470"}
{"name": "sdbo", "serial": "S3SGNX0M414258", "lunid": "5002538b49451480"}
{"name": "sdbp", "serial": "S3SGNX0M413917", "lunid": "5002538b4944ff30"}
{"name": "sdbq", "serial": "S3SGNX0M411809", "lunid": "5002538b49444ec0"}
{"name": "sdaz", "serial": "S3SGNX0M413856", "lunid": "5002538b4944fb60"}
{"name": "sdbr", "serial": "S3SGNX0M413858", "lunid": "5002538b4944fb80"}
{"name": "sdbs", "serial": "S3SGNX0M411765", "lunid": "5002538b49444c00"}
{"name": "sdbt", "serial": "S3SGNX0M411758", "lunid": "5002538b49444b90"}
{"name": "sdbu", "serial": "S3SGNX0M414280", "lunid": "5002538b494515e0"}
{"name": "sdba", "serial": "S3SGNX0M411699", "lunid": "5002538b494447e0"}
{"name": "sdbb", "serial": "S3SGNX0M413901", "lunid": "5002538b4944fe30"}
{"name": "sdbc", "serial": "S3SGNX0M411701", "lunid": "5002538b49444800"}
{"name": "sdbd", "serial": "S3SGNX0M411810", "lunid": "5002538b49444ed0"}
{"name": "sdbe", "serial": "S3SGNX0M413981", "lunid": "5002538b49450330"}
{"name": "sdbf", "serial": "S3SGNX0M414991", "lunid": "5002538b49454250"}
{"name": "sdbg", "serial": "S3SGNX0M414192", "lunid": "5002538b49451060"}
{"name": "sdz", "serial": "S3SGNX0M411763", "lunid": "5002538b49444be0"}
{"name": "sdaa", "serial": "S3SGNX0M411812", "lunid": "5002538b49444ef0"}
{"name": "sdaj", "serial": "S3SGNX0M414291", "lunid": "5002538b49451690"}
{"name": "sdak", "serial": "S3SGNX0M411760", "lunid": "5002538b49444bb0"}
{"name": "sdal", "serial": "S3SGNX0M411754", "lunid": "5002538b49444b50"}
{"name": "sdam", "serial": "S3SGNX0M414071", "lunid": "5002538b494508d0"}
{"name": "sdan", "serial": "S3SGNX0M411757", "lunid": "5002538b49444b80"}
{"name": "sdao", "serial": "S3SGNX0M414284", "lunid": "5002538b49451620"}
{"name": "sdap", "serial": "S3SGNX0M414290", "lunid": "5002538b49451680"}
{"name": "sdaq", "serial": "S3SGNX0M411775", "lunid": "5002538b49444ca0"}
{"name": "sdar", "serial": "S3SGNX0M411772", "lunid": "5002538b49444c70"}
{"name": "sdas", "serial": "S3SGNX0M414285", "lunid": "5002538b49451630"}
{"name": "sdab", "serial": "S3SGNX0M413914", "lunid": "5002538b4944ff00"}
{"name": "sdat", "serial": "S3SGNX0M411755", "lunid": "5002538b49444b60"}
{"name": "sdau", "serial": "S3SGNX0M414061", "lunid": "5002538b49450830"}
{"name": "sdav", "serial": "S3SGNX0M414074", "lunid": "5002538b49450900"}
{"name": "sdaw", "serial": "S3SGNX0M414278", "lunid": "5002538b494515c0"}
{"name": "sdac", "serial": "S3SGNX0M413859", "lunid": "5002538b4944fb90"}
{"name": "sdad", "serial": "S3SGNX0M411756", "lunid": "5002538b49444b70"}
{"name": "sdae", "serial": "S3SGNX0M411731", "lunid": "5002538b494449e0"}
{"name": "sdag", "serial": "S3SGNX0M411764", "lunid": "5002538b49444bf0"}
{"name": "sdaf", "serial": "S3SGNX0M413908", "lunid": "5002538b4944fea0"}
{"name": "sdah", "serial": "S3SGNX0M411771", "lunid": "5002538b49444c60"}
{"name": "sdai", "serial": "S3SGNX0M414287", "lunid": "5002538b49451650"}
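
Since the question is whether any of these drives really share an identity, one way to check the `disk.query` output mechanically rather than by eye is a small script that groups disks by `serial` and by `lunid` and prints any value shared by more than one disk. This is a sketch under assumptions: it only assumes that `midclt call disk.query` prints a JSON array of objects carrying the `name`, `serial` and `lunid` fields seen above. The script name `find_dupes.py` and its functions are hypothetical, and it says nothing about which identifiers pool creation actually compares.

```python
import json
import sys
from collections import defaultdict


def find_duplicates(disks, field):
    """Map each value of `field` shared by 2+ disks to the list of disk names using it."""
    groups = defaultdict(list)
    for disk in disks:
        value = disk.get(field)
        if value:  # skip disks where the field is missing, None or empty
            groups[value].append(disk["name"])
    return {value: names for value, names in groups.items() if len(names) > 1}


def report(disks, fields=("serial", "lunid")):
    """Build human-readable lines describing duplicates for each field."""
    lines = []
    for field in fields:
        dupes = find_duplicates(disks, field)
        if not dupes:
            lines.append(f"{field}: no duplicates")
        for value, names in sorted(dupes.items()):
            lines.append(f"{field} {value} shared by: {', '.join(sorted(names))}")
    return lines


def main(argv):
    # Hypothetical CLI: pass a JSON file saved from disk.query, or "-" to read stdin.
    if len(argv) != 2:
        print(f"usage: {argv[0]} DISKS_JSON   (use - to read stdin)")
        return
    if argv[1] == "-":
        disks = json.load(sys.stdin)
    else:
        with open(argv[1]) as f:
            disks = json.load(f)
    for line in report(disks):
        print(line)


if __name__ == "__main__":
    main(sys.argv)
```

Usage, with the script saved as the hypothetical `find_dupes.py` on the TrueNAS host: `midclt call disk.query | python3 find_dupes.py -`. Feeding it the raw `disk.query` JSON rather than the jq-filtered output keeps the input a single JSON array.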