TL;DR: If you want to put the drives of your storage pool into standby (spindown):

- Enable standby for each drive in TrueNAS SCALE’s “Drives” options.

- Move the system dataset (which holds the system log) off the pool that you want to sleep.

- Move the ix-applications dataset off the pool.

Both of the above are placed automatically on the first pool you create, so you may want to either create your “always on” pool first and the pool you want to put into standby second, or move them after the fact. Moving the apps gave me trouble both times I did it.

-

Move any VM block storage that the VM would randomly access (like the system volume) off the pool.

-

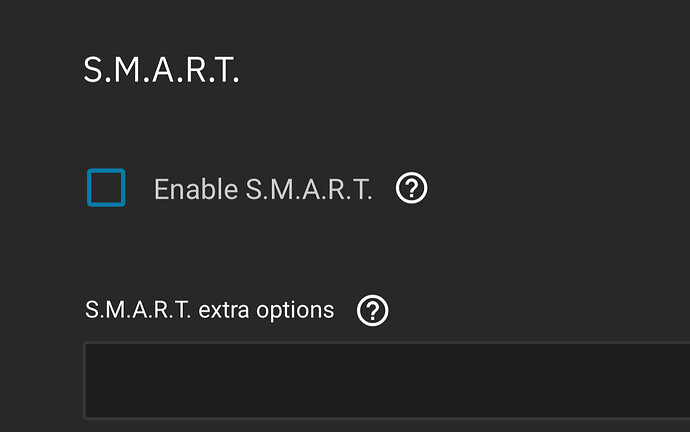

Disable SMART for the drives you want to sleep.

That is what it took for my drives to spin down. Your mileage may vary. Make sure there is no activity on the drives; if there is, recheck all of the above.
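One way to check for leftover activity is to compare the kernel’s per-disk I/O counters over a quiet period. This is a minimal sketch, assuming a Linux host (SCALE is Debian-based) and hypothetical whole-disk names `sda` to `sdd`; it reads `/proc/diskstats` twice and reports any pool disk whose completed-read or completed-write count changed in between. It only sees ordinary block I/O, so treat it as a rough check rather than proof that nothing will wake the drives.

```python
import time

# Hypothetical names of the whole-disk devices in the pool you want to sleep.
POOL_DISKS = ["sda", "sdb", "sdc", "sdd"]


def parse_diskstats(text):
    """Map device name -> (reads completed, writes completed) from /proc/diskstats text."""
    counters = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 8:
            continue  # skip blank or malformed lines
        # fields[0:3] are major, minor, name; stat field 1 (index 3) is reads
        # completed and stat field 5 (index 7) is writes completed.
        counters[fields[2]] = (int(fields[3]), int(fields[7]))
    return counters


def read_snapshot(path="/proc/diskstats"):
    with open(path) as f:
        return parse_diskstats(f.read())


def busy_disks(before, after, disks):
    """Return the disks whose read or write counters changed between two snapshots."""
    return [d for d in disks if d in before and d in after and before[d] != after[d]]


if __name__ == "__main__":
    interval = 600  # seconds to watch; ten minutes is an arbitrary choice
    first = read_snapshot()
    time.sleep(interval)
    second = read_snapshot()
    active = busy_disks(first, second, POOL_DISKS)
    if active:
        print("I/O seen on:", ", ".join(active))
    else:
        print("No reads or writes on the pool disks during the interval")
```

Watching the whole-disk entries (rather than partitions like `sda1`) is deliberate: the whole-disk counters include I/O to every partition on that drive.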

End of TL;DR

I did my first TrueNAS deployment fairly recently and had to navigate quite a steep learning curve. These forums have been a lifeline that made it possible. So, after spending an inordinate amount of time trying to spin down my drives and coming across bits and pieces of information that finally let me achieve my goal, I figured I could give back by posting the above summary of what works for me.

Many discussions about how to spin down turn into “don’t spin down” and “why spin down”, so let me address those factually.

The argument against spindown, which is pushed quite aggressively, is that it shortens drive lifespan and induces failures. I can’t offer firsthand evidence either way, so when deciding for myself I based my decision on the manufacturer specs. In my case, the Seagate Constellation ES.3 drives are rated for 600,000 load/unload cycles, which count head parking rather than the spindowns themselves. Considering the cost of the drives and their robust build, I decided the power savings are worth the risk. The “why” part is easy: cost and noise. I run 4 drives in a mid-tower enclosure with a single fan. When the drives come up to temperature, the fan spins up quite aggressively, adding a few watts and a lot of decibels. Combined, the fan and the drives make a 30-40 W difference between active and standby.
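To put the 600,000 load/unload rating in perspective, a quick back-of-the-envelope calculation helps. This minimal sketch assumes, as a simplification, that each spindown adds roughly one load/unload cycle at a constant daily rate; any head parking the drive firmware does on its own would add to the count, and the daily rates below are hypothetical.

```python
RATED_LOAD_UNLOAD_CYCLES = 600_000  # Constellation ES.3 rating quoted above


def years_to_rating(cycles_per_day, rating=RATED_LOAD_UNLOAD_CYCLES):
    """Years until `rating` load/unload cycles are reached at a constant daily rate."""
    if cycles_per_day <= 0:
        raise ValueError("cycles_per_day must be positive")
    return rating / (cycles_per_day * 365)


# Hypothetical daily rates: a couple of spindowns a day up to very aggressive cycling.
for per_day in (2, 10, 50):
    print(f"{per_day:>3} cycles/day -> {years_to_rating(per_day):,.1f} years")
```

Under these assumptions, even 50 cycles a day takes about 33 years to reach the rated count (about 164 years at 10 a day). Other wear factors, such as spindle start/stop stress, are not modeled here.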

Another factor in deciding to use standby was my use case. I usually don’t access the data on my HDD pool during the day, since most of it is storage for personal data. It sees regular use in the evening when my Plex server is at work, and then during the regular backup syncs with my other storage at 1 AM. That leaves huge chunks of time (many hours) without any access to the drives. The bulk of the routine activity is handled by my second SSD, which hosts my apps and the system drive for my VM(s). That pool is not redundant, so it is backed up every night to the main HDD pool. The system log goes to the boot pool, which is also an SSD.
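To estimate what such a schedule is worth, a rough energy calculation can combine the 30-40 W active-versus-standby difference with the number of hours the pool spends in standby. In this sketch the 16 hours a day of standby and the electricity price are hypothetical placeholders; substitute your own numbers.

```python
def yearly_savings_kwh(delta_watts, standby_hours_per_day, days=365):
    """Energy saved per year, in kWh, for a constant power difference over the standby hours."""
    return delta_watts * standby_hours_per_day * days / 1000


def yearly_savings_cost(kwh, price_per_kwh):
    """Money saved per year at a flat electricity price."""
    return kwh * price_per_kwh


STANDBY_HOURS = 16    # hypothetical: hours per day the HDD pool spends in standby
PRICE_PER_KWH = 0.30  # hypothetical flat price in your local currency

for watts in (30, 40):  # the active-vs-standby range measured above
    kwh = yearly_savings_kwh(watts, STANDBY_HOURS)
    cost = yearly_savings_cost(kwh, PRICE_PER_KWH)
    print(f"{watts} W saved for {STANDBY_HOURS} h/day: {kwh:.0f} kWh/year, about {cost:.2f} per year")
```

With these placeholder numbers, 30 W works out to about 175 kWh a year and 40 W to about 234 kWh a year.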

Implementing all of the above was a whole ordeal, and it is the reason I made this post. After a ton of research, I found bits and pieces on the forums. Most of what I found pointed me to a custom script that uses hdparm to force the drives into standby. I didn’t like the idea that a mature system like SCALE has to rely on a custom script for a basic task that practically every consumer OS has handled for decades. And the option was there. However, it didn’t work. As my initial search revealed, users had been complaining about “mysterious”, regular writes to the drives that never stop. I then found out that these periodic chunks are how ZFS writes small amounts of data (it batches writes into transaction groups and flushes them at regular intervals), and that the system log is the usual cause of them. Moving the log reduced the activity but didn’t stop it. Stopping all the apps and VMs didn’t eliminate the activity either, but moving them did. Still, no spindown. Then, after reading yet another thread on the topic that had devolved from “how to do it” into “do not do it” (but was still interesting), I came across a really short post, by someone who deserves a ton of credit, that said “disable SMART”. That did the job for me. My drives went into standby as expected. The noise went down, the temperature in the closet where the machine sits went down and, most importantly, the power draw almost halved.
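To confirm that the drives actually reached standby, rather than inferring it from noise or power draw, you can query each drive’s power state directly. This minimal sketch assumes SATA drives, root access, and that `hdparm` is installed (the scripts mentioned above rely on it); the device paths are hypothetical. `hdparm -C` issues the ATA CHECK POWER MODE command, which should report the state without spinning the drive up, and prints a line such as ` drive state is:  standby`.

```python
import subprocess

# Hypothetical device paths for the drives in the pool you want to sleep.
POOL_DEVICES = ["/dev/sda", "/dev/sdb", "/dev/sdc", "/dev/sdd"]


def parse_power_state(output):
    """Extract the state from `hdparm -C` output, e.g. 'standby' or 'active/idle'."""
    for line in output.splitlines():
        if "drive state is:" in line:
            return line.split("drive state is:", 1)[1].strip()
    return "unknown"


def power_state(device):
    # CHECK POWER MODE reports the current state; it is not meant to wake the drive.
    result = subprocess.run(["hdparm", "-C", device], capture_output=True, text=True, check=True)
    return parse_power_state(result.stdout)


if __name__ == "__main__":
    for dev in POOL_DEVICES:
        print(dev, power_state(dev))
```

Possible states are `active/idle`, `standby`, `sleeping` and `unknown`; the parser falls back to `unknown` if the expected line is missing.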