Fair point. But the only alternative, as a small, low-power embedded board that is still current and some years away from EOL, is the Atom C3000.

Atom C5000/P5000 is not meant for storage.

Xeon D-2100 is not low power, and typically comes on boards larger than mini-ITX, if that matters.

Xeon D-1700? Between the price and the $CENSORED$ X12SDV heatsinks, which are closed at the top, it is far from being as attractive as we would like.

That’s exactly my (our) plight.

Aaaanyway, right now the plan is to go this way:

- Gigabyte MB10-DS4 as motherboard + CPU

- 128 GB of RDIMM

- 2-5 Toshiba MG drives

- One cheap SATA SSD for the boot drive, since apparently Seagate IronWolf 125 SSDs are hard to find today

- A PCIe to M.2 expansion card for a crapton of M.2 storage (jails and L2ARC drive)

- Jonsbo N1 case

- A SFX PSU

I looked into that case a while ago, and cooling of the HDDs seems to be a major issue. There is no real airflow over the drives.

I saw that video as well, however multiple sources state the temperatures are fine.

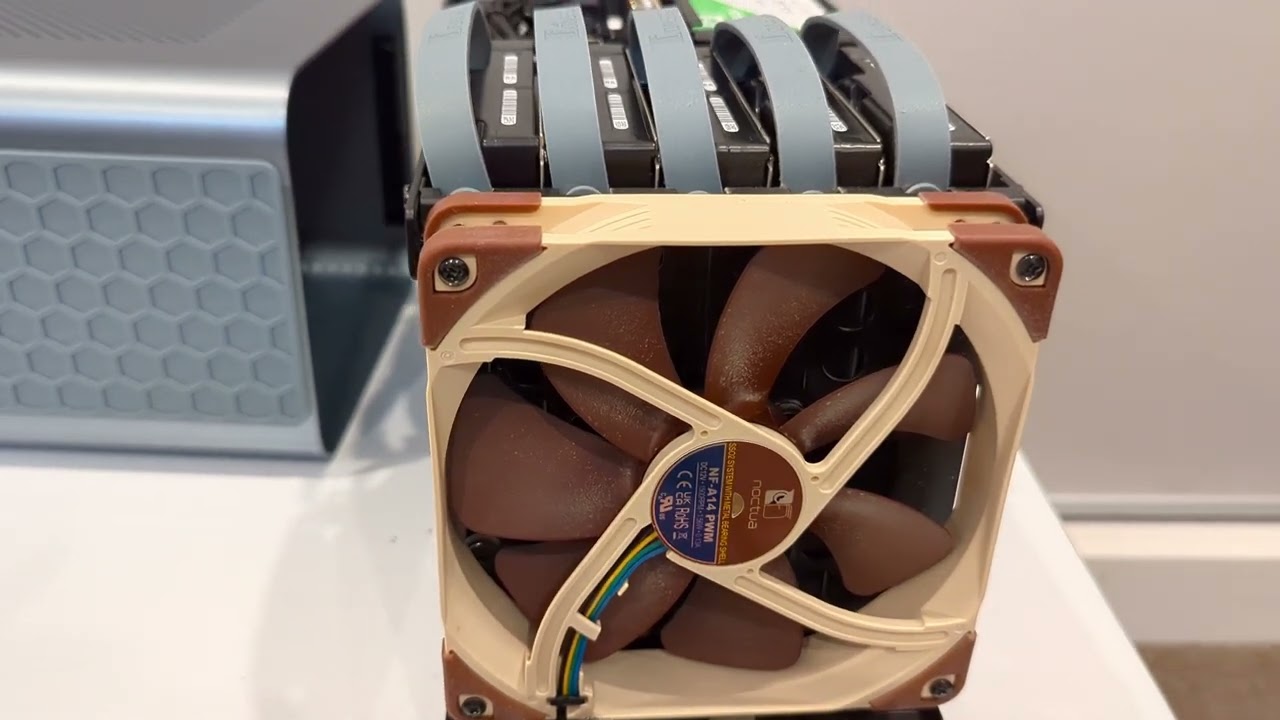

Swapping the fan for a low-pressure one from Noctua likely did not help those temperatures.

This seems to be the better plan, with two caveats:

- What ITS-Haenlein offers is actually not a MB10-DS4 but the Datto rebrand of a Gigabyte board which is not in the official MB10 lineup (DS0: D-1540/1541, 2*i210; DS1: D-1520/D-1521, 2*i210; DS3: D-1541, 2*i210 + 2*SFP+; DS4: D-1521, 2*i210 + 2*SFP+; and I’ve seen mentions of a DS5 with the D-1581).

  The Datto board appears to have only two 10GBase-T ports, with the PHY under a little heatsink; no SFP+, and no stacked 1 GbE ports from i210. Among eBay listings for this board, one shows a “REV: 1.0” marking and evidence that the board sports a D-1521; consistent with the rest of the lineup, I would have guessed a “DS2 rev. 1.0” with a D-1520 and 2*X557, never released as such under the Gigabyte brand, but the D-1521 puts the “Datto rev. 1.0” on par with the “rev. 1.3” from Gigabyte.

- Your choice of M.2 carrier card sports an ASM1184e, which is a “PCI express packet switch, 1 PCIe x1 Gen2 upstream port to 4 PCIe x 1 Gen2 downstream ports”. That is PCIe 2.0 x1 for all four drives. Don’t buy that!

If the CPU is indeed a D-1521, and Gigabyte did a proper engineering job, all that’s needed to enjoy four PCIe 3.0 x4 links from the x16 slot is a fully passive adapter (relying on x4x4x4x4 bifurcation of the slot). If it is a D-1520, or if Gigabyte crippled the D-1521, you may need a PCIe switch after all, but then pick a card with a PCIe 3.0 switch.
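To put rough numbers on the difference, here is a back-of-the-envelope sketch (my own, not from any vendor tool) that derives one-direction link bandwidth from the PCIe transfer rates and line encodings: 5 GT/s with 8b/10b for Gen2, 8 GT/s with 128b/130b for Gen3. It ignores protocol overhead, so real-world throughput will be somewhat lower, and the switch case assumes all four drives are busy at the same time.

```python
# Usable one-direction bandwidth per lane, from transfer rate and line encoding.
# Protocol overhead (TLP headers, flow control) is ignored, so real numbers are lower.
GEN = {
    2: (5.0e9, 8 / 10),     # PCIe 2.0: 5 GT/s, 8b/10b encoding
    3: (8.0e9, 128 / 130),  # PCIe 3.0: 8 GT/s, 128b/130b encoding
}


def link_mb_per_s(gen: int, lanes: int) -> float:
    """One-direction bandwidth of a PCIe link in MB/s (10^6 bytes per second)."""
    rate, efficiency = GEN[gen]
    return rate * efficiency * lanes / 8 / 1e6


def per_drive_mb_per_s(gen: int, upstream_lanes: int, drives: int) -> float:
    """Share per drive when all drives sit behind one upstream link and are busy at once."""
    return link_mb_per_s(gen, upstream_lanes) / drives


if __name__ == "__main__":
    # ASM1184e card: a single Gen2 x1 upstream link shared by four M.2 slots.
    switch = per_drive_mb_per_s(gen=2, upstream_lanes=1, drives=4)
    # Passive adapter on a bifurcated x16 slot: each drive gets its own Gen3 x4 link.
    passive = link_mb_per_s(gen=3, lanes=4)
    print(f"ASM1184e, 4 drives busy: {switch:.0f} MB/s per drive")
    print(f"Passive x4x4x4x4:        {passive:.0f} MB/s per drive")
```

Roughly 125 MB/s per drive versus roughly 3.9 GB/s per drive: the switch card turns NVMe drives into something slower than a single SATA SSD.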

Alternatives would be:

- Typically more expensive Supermicro X10SDV boards. (But with patience and some luck chasing on eBay…)

- The ultra-cheap MJ11-EC1: 8 SATA, 1 M.2, i210… and no realistic option to add a 10G NIC, or otherwise use the x8 SlimSAS, in the limited space of the mini-ITX Jonsbo N case. Best plan without 10G.

- ASRock Rack E3C246D2I (available just under 300 €; 8 SATA, 1 M.2 2242, x16 slot for either more M.2 or a 10G NIC, but not both with only a half-height slot in the case).

- A new A2SDi-4C-HLN4F (available just under 400 €), using the x4 slot for either a second M.2 or a 10G NIC.

- I’ve not found the higher-spec A2SDi-H boards in stock anywhere nearby, so the alternatives end here.

If you’re willing to be a guinea pig with this Datto-rebranded “MB10-DS2 rev. 1.0”, this is plausibly the best available plan with on-board 10G at this moment. (And for putative guinea pigs on the other side of the Big Pond, there are some cheap listings on eBay US.)

Is this too expensive? Lots of PCIe lanes. u-ATX.

No, but I’m looking for ITX boards.

In micro-ATX size, options are plentiful. But the current price champion in Europe is the Gigabyte MC12-LE0.

Got the refurbished DATTO (MB10-DS4-OEM) for 250 €.

The Jonsbo N1 for around 155 €.

Seasonic FOCUS SPX 650W 80 PLUS Platinum[1] for 136,02 €

4x 32GB ECC Registered M393A4K40BB1-CRC0Q for 40 € each.

@etorix’s PCIe expansion card for around 52 €.

Total without shipping expenses around 750 €.
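A quick sanity check of that total, using the (partly approximate) prices listed above; the dictionary is just a convenient way to lay them out.

```python
# Prices in euros as listed above, excluding shipping; some are "around" figures.
parts = {
    "Datto MB10-DS4-OEM (refurbished)": 250.00,
    "Jonsbo N1 case": 155.00,
    "Seasonic FOCUS SPX 650W": 136.02,
    "4x 32 GB RDIMM at 40 € each": 4 * 40.00,
    "PCIe M.2 expansion card": 52.00,
}

total = sum(parts.values())
print(f"Total: {total:.2f} €")
```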

Will update in the following months.

[1] Should be good enough; can anyone confirm, just to be safe?

The power supply sizing guidance points to a 450 W PSU for a low TDP system (Avoton C2000 at the time) with 5-6 disks. 650 W should have some margin.
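One rough way to check the margin is to add up a worst case in which every drive spins up at once while the CPU is busy. Only the 45 W TDP of the Xeon D-1521 is a spec figure here (assuming the board really carries a D-1521); the ~30 W per drive during spin-up and the 60 W for board, RAM, SSDs and fans are placeholder assumptions, so plug in the numbers from the Toshiba MG and board datasheets before trusting the result.

```python
def peak_watts(cpu_tdp_w: int, drives: int, spinup_w_per_drive: int, other_w: int) -> int:
    """Rough worst-case draw: all drives spinning up simultaneously with the CPU at TDP."""
    return cpu_tdp_w + drives * spinup_w_per_drive + other_w


# ASSUMED figures: 45 W is the Xeon D-1521 TDP; 30 W per 3.5" drive at spin-up and
# 60 W for everything else are placeholder guesses, not measurements.
estimate = peak_watts(cpu_tdp_w=45, drives=5, spinup_w_per_drive=30, other_w=60)
headroom = 650 - estimate
print(f"Estimated peak: {estimate} W, headroom on a 650 W unit: {headroom} W")
```

Even if the placeholder figures are off by a fair amount, five drives leave a 650 W unit far from its limit.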

Great, I wanted an extra pair of eyes confirming I did not screw something up, since I was bashing my head trying to find compatible RAM in Europe that would not cost over 100 € per stick.

Really, the Gigabyte QVL uses some awful colours…

As a side note, good lower-wattage SFX PSUs are unavailable in the EU, and when they are available the prices are insane… over 170 € for a 550W Gold SFX PSU? Yikes!

I suppose that “low wattage” PSUs are simply not popular enough with gamers needing to power the triple-slot GPU they’ve shoehorned into their portable “LAN party” mini-ITX case…

As for RAM QVLs, I admit that I pay little attention to them and blindly assume that Micron/Samsung/SK Hynix-branded modules of ECC RAM just work with any compatible server/workstation motherboard. To date, I have yet to stumble upon a counterexample where the assumption does not hold.

Flashy colours indeed… but the most impressive is that the table spans three pages. More often, you’re happy that there’s a grand total of three references listed!

I found a few of these used for $120 USD here in the States. Looks pretty nice and small. Six SATA ports, no M.2 connectors.

Can’t wait to see how it turns out, and a few photos as well.

Well, I kinda did that too for my current system… the result is that I cannot use dual channel, but have to stick both sticks (pun intended) I have into the same channel (DIMMB2 and DIMMB1) in order to make the system POST. I don’t know if that’s something with the motherboard (probably is), but this time I wanted to take the safe route: eventually I found used compatible modules for a great price, let’s just hope they work.

You can be sure I will make a proper build report for this one!

So, next step is choosing the NVMe drives and their configuration.

I will have four slots available, and I am sure I want a (likely metadata-only) L2ARC… I was thinking of a 250 GB drive, and the Western Digital Red SN700 250 GB (TechPowerUp SSD Database entry) looks promising:

- has a 250 GB size, which is adequate for an L2ARC considering I will (hopefully) have 128 GB of ARC.

- it has a DWPD of 1.1 which should be enough for the task

- it has 3,100 MB/s read speeds but a lousy 1,600 MB/s write speed

- it has a price of around 70 €

Then I want to place my jails/VMs/apps[1] on NVMe for performance and power-draw reasons[2], using the Crucial T500 500 GB (TechPowerUp SSD Database entry), which:

- is a 500GB drive, leaving me a bit more space to play with

- has way greater performance than the WD RED SSD with more than double the read speed and more than triple the write speed.

- has a puny DWPD of 0.3

- has a price of around 70 €
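DWPD is easier to judge once it is turned into a daily write budget and a total-terabytes-written figure. A minimal sketch, using the DWPD values quoted above and assuming a 5-year warranty period for both drives (check the actual warranty terms):

```python
def daily_write_budget_gb(dwpd: float, capacity_gb: float) -> float:
    """GB per day the drive can absorb while staying within its DWPD rating."""
    return dwpd * capacity_gb


def tbw(dwpd: float, capacity_gb: float, warranty_years: float) -> float:
    """Total terabytes written over the warranty period implied by a DWPD rating."""
    return daily_write_budget_gb(dwpd, capacity_gb) * 365 * warranty_years / 1000


if __name__ == "__main__":
    # DWPD and capacity from the post; the 5-year warranty is an ASSUMPTION.
    drives = [("WD Red SN700 250 GB", 1.1, 250), ("Crucial T500 500 GB", 0.3, 500)]
    for name, dwpd, capacity in drives:
        print(f"{name}: {daily_write_budget_gb(dwpd, capacity):.0f} GB/day, "
              f"~{tbw(dwpd, capacity, 5):.0f} TBW over 5 years")
```

Even the “puny” 0.3 DWPD on a 500 GB drive allows about 150 GB of writes per day, which is the number to compare against what the jails actually write.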

Assuming I use two slots for my jail pool, I am left with a single empty one, which could be a good thing if I ever need a SLOG in the future… but honestly it feels like a bit of a waste. What if I go with an L2ARC, a single drive for the jails, and a mirrored metadata vdev?

So, I ask your opinion about two things:

- Do you have any insight that could help me decide which way to go?

- What do you think about the drives I have in mind? Do they fit the roles[3]? Do you have any suggestions?

As always, no sync writes = no SLOG.

With 128 GB RAM, the L2ARC could be 512 GB - 1 TB.
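512 GB to 1 TB works out to roughly 4 to 8 times the RAM. The cost to keep in mind is that every block cached in L2ARC needs a header kept in ARC. A back-of-the-envelope sketch, where the 96 bytes per header is an assumption (the exact figure depends on the OpenZFS version) and the average block size is whatever your datasets actually use:

```python
GIB = 1024 ** 3
KIB = 1024
RAM_GB = 128


def l2arc_header_gib(l2arc_bytes: int, avg_block_bytes: int, header_bytes: int = 96) -> float:
    """RAM (in GiB) taken by L2ARC headers once the cache device is full.

    header_bytes is an ASSUMED per-block overhead, not an official constant.
    """
    blocks = l2arc_bytes // avg_block_bytes
    return blocks * header_bytes / GIB


if __name__ == "__main__":
    for size_gb in (512, 1000):
        ratio = size_gb / RAM_GB
        for block_kib in (128, 16):
            overhead = l2arc_header_gib(size_gb * 10**9, block_kib * KIB)
            print(f"{size_gb} GB L2ARC ({ratio:.1f}x RAM), {block_kib} KiB blocks: "
                  f"~{overhead:.2f} GiB of ARC for headers")
```

Smaller average blocks mean more headers, so a full L2ARC of small records costs noticeably more RAM than one filled with 128 KiB records; with 128 GB of RAM, either case is affordable.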

Special vdev is pool-critical, so it would need 2 or preferably 3 drives, and it cannot be removed if attached to a raidz2. I don’t know your needs or requirements, but a persistent L2ARC is a whole lot easier and safer to handle, and will just do the job if the workload is mostly about reads.
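The “2 or preferably 3” follows from matching fault tolerance: losing the special vdev loses the whole pool, so it should survive as many drive failures as the raidz2 data vdev. A minimal sketch of that reasoning (the function names are purely illustrative):

```python
def mirror_tolerates(width: int) -> int:
    """An n-way mirror survives n - 1 drive failures."""
    return width - 1


def raidz_tolerates(parity: int) -> int:
    """A raidzP vdev survives P drive failures."""
    return parity


def special_vdev_ok(mirror_width: int, data_raidz_parity: int) -> bool:
    """True if the pool-critical special mirror is at least as fault-tolerant as the data vdev."""
    return mirror_tolerates(mirror_width) >= raidz_tolerates(data_raidz_parity)


if __name__ == "__main__":
    for width in (2, 3):
        verdict = "matches raidz2" if special_vdev_ok(width, 2) else "weakest link"
        print(f"{width}-way special mirror: {verdict}")
```

A 2-way mirror makes the special vdev the weakest link of a raidz2 pool; a 3-way mirror brings it level.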

I meant L2ARC, fixed.

Seeing as how you already have an entire system that you can drop TrueNAS onto (3950X/ASUS board), why don’t you take the money you were planning to build a new system with and just buy new drives?

Losing IPMI/ECC hurts, but you can use the old NAS/pool as a replication target… Two copies of your data are better than one.

Because it’s frying RAM module after RAM module, likely due to a motherboard defect.